Model and data lineage in machine learning experimentation

Modern quantitative finance is built on pattern recognition in historical data. This approach requires teams of scientists to work in a collaborative and regulated setting to develop models that can be used to make trading predictions. With the growing influence of this field, both participants and regulators are looking to put in place mechanisms to understand how and why models have been developed, for reasons such as regulatory compliance and model reproducibility. We refer to this traceability problem as lineage.

The challenge of reproducibility and lineage in machine learning (ML) is three-fold: code lineage, data lineage, and model lineage. Source version control is a standard for managing changes to code. For data lineage, most data storage services support versioning, which gives you the ability to track datasets at a given point in time. Model lineage combines code lineage, data lineage, and ML-specific information such as Docker containers used for training and deployment, model hyperparameters, and more. The focus of this post is model lineage and how it pertains to reproducibility. For the purposes of this post, we illustrate lineage using the example of quantitative finance.

Problem statement

When developing a model, a quantitative finance researcher experiments with many different model configurations and parameters, which are trained and simulated on many different datasets. Such research often entails varying one parameter while holding all others constant and then simulating against some target metric (for example, the Sharpe ratio). For a given model, the process of research may last for days, weeks, or even months. Eventually the researcher settles on a given model, with a given set of parameters, which produces certain results on a given dataset. The researcher may work as part of a larger team, reporting directly to a research manager and collaborating with peers. The research team might work alongside other teams, such as development, quant-dev, and risk. Keeping track of the changes associated with data versions and model parameters has typically happened in spreadsheets or shared notes, an approach that doesn’t scale well.

In such a collaborative setting, the researcher has various tools and approaches open to them. The primary one is version control, using services such as GitHub and AWS CodeCommit. With version control, you can track and share model configurations defined in code. You can also look back in history to identify which model was being developed when and by whom, based on commits made to the source code. A second approach is data versioning, whereby data is versioned and the associated metadata (including the path to the data) is stored in a relational or graph database. A related tool is the feature store, whereby the researcher generates features one time and then persists them, allowing different models and different researchers and teams to access the same feature.

At some point, after selecting a given model or set of parameters, you may need to replay the simulation and regenerate the results. Examples of times when such a course of action might be necessary include a trading loss or a regulatory breach. The ex-post examination of the model selection criteria may be internal to the firm, or might be external. One example of the latter occurred in 2014 when AMF, the French financial regulator, asked the market-maker GETCO to explain the actions of one of its algorithms in 2010 on Euronext, resulting in a fine for the firm.

A closely related problem exists in academia. The issue of reproducibility is one that peer-reviewed journals have struggled with for years. A researcher will present results for peer review, with no easy mechanism for the peers to reproduce and thereby verify those results. With the passage of time, the problem compounds as readers who want to replicate published results in order to build on them are faced with a near impossible task.

Theoretical basis to a solution

To ensure reproducibility of experimental results, the researcher needs to store versions of parameters, models, input data, feature data, managed services, proprietary libraries, third-party libraries, container images, environmental configurations, RNG seeds, and other variable quantities. They then need to link all these entities together, persist them, and allow the user to recall them on demand.

In addition to storing all the inputs to an experiment, the experimental outputs should also be stored such that the experiment can be reproduced and verified. Experimental outputs range widely, and may include scalars, time series, and images.

Simply recording all this information in a spreadsheet isn’t a viable solution. The storage mechanism needs certain properties: records should be immutable and searchable, and the relationships between the tracked entities should be reflected in how they are stored.
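As a minimal sketch of the immutability and searchability properties, the following Python freezes the inputs of one experiment and derives a content fingerprint, so any later change to a parameter, seed, or data version yields a different identifier. The `ExperimentRecord` type, its field names, and the in-memory search are hypothetical illustrations, not part of any AWS API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)  # frozen=True rejects attribute assignment after creation
class ExperimentRecord:
    """Hypothetical record of the inputs to one experiment run."""

    code_commit: str
    data_uri: str
    data_version: str
    image_digest: str
    rng_seed: int
    parameters: tuple  # (name, value) pairs; a tuple keeps the record hashable

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so equal records always hash identically.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def find_by_data_version(records, data_version):
    """Naive search: every record that used the given dataset version."""
    return [r for r in records if r.data_version == data_version]


# Illustrative values only.
base = ExperimentRecord(
    code_commit="abc123",
    data_uri="s3://example-bucket/prices.parquet",
    data_version="v1",
    image_digest="sha256:0000",
    rng_seed=42,
    parameters=(("lookback_days", 20), ("learning_rate", 0.01)),
)
```

A production system would persist such records in a store that enforces immutability itself; the frozen dataclass only guards against accidental mutation inside one process.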

A model’s lineage is a set of associations between a model and all the components that were involved in the creation of that model. A model has relationships with experiments, datasets, container images, and so on. Model building experiments make use of trials, and each trial has components for data preprocessing, model training, and data postprocessing.

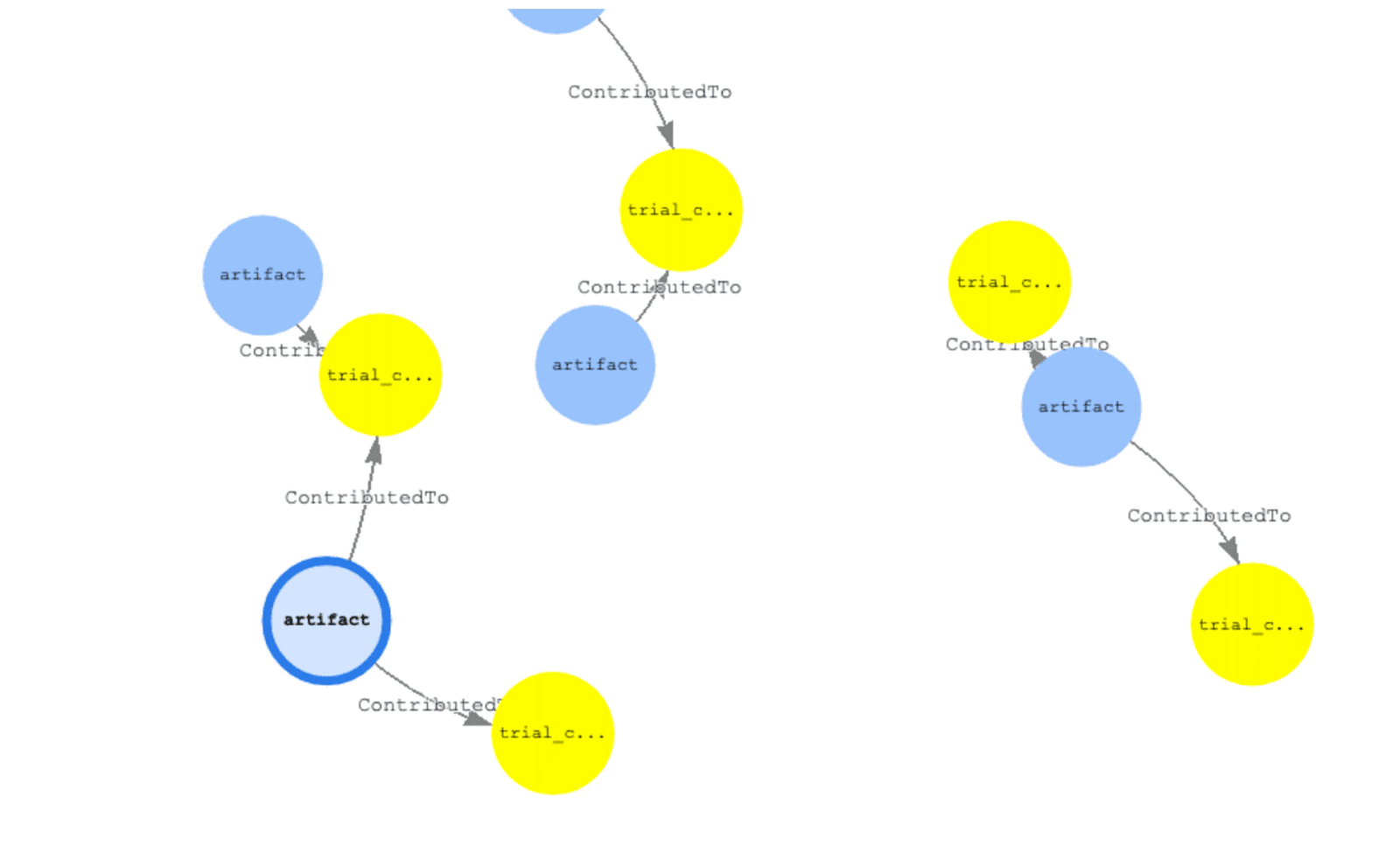

When representing a model’s lineage, the associations between the artifacts associated with a model are at the core of what needs to be represented. A graph structure is the most suitable approach for this kind of highly associated artifact representation.

Graphs model data as a set of vertices that may be connected to other vertices by edges. Vertices typically represent entities like people, places, and things. Vertices can further be described with attributes, and edges define relationships between vertices. As with entities, relationships may have additional details that can be modeled.

Building an equivalent application using a relational database requires creating many tables with multiple foreign keys and then writing nested SQL queries and complex joins. Not only does that approach quickly become unwieldy from a coding perspective, its performance degrades quickly as the amount of data increases.

A best practice is to use a model registry as a centralized store for models that should be tracked, shared, deployed, and reused. The model registry should support tracking a model’s lineage so that at any point in time, all the relevant metadata for a model can be viewed. When you use the model registry’s versioning capability for models, you have a mapping between specific versions of a model and its entire lineage all accessible from the model registry itself.

Given the number of components that are part of a model’s lineage, you may want to inspect the lineage of not only the model, but any object associated with the model, for example, an experiment. With a graph as the underlying data structure that supports lineage, you should have the flexibility to traverse an entity’s lineage from different focal points. You should be able to find the entire lineage of an experiment to retrieve the experiment components and its associations, the data used by each trial in the experiment, the parameters used by the trials, and so on.

Your organization’s governance policies may require tight controls over access to model and lineage data. Applying role-based or resource-based policies to each of the resources that are part of a model’s lineage allows your organization to enforce governance requirements.

In summary, an end-to-end lineage solution needs to give you the means to access information about parameters, versioning, and object locations, and ensure that access controls are enforced. A GUI enables you to view a model’s lineage by clicking through a model’s associations to get all the way down to the data used to train the model.

One challenge with this approach is that although artifacts are small and cheap to store, raw data is not. Storing all experimental data for years in order to enable a lineage solution isn’t realistic. A compromise might be to decide after an experiment has run whether to enable lineage tracking for it, with the option of storing only metadata. Any solution in this space requires you to closely monitor storage costs and to allow a subset of users to delete lineage that’s no longer required.

AWS services and solutions in this space

AWS has a system of services and features that support an end-to-end ML workflow. With Amazon SageMaker features, you can build your own solutions to manage the end-to-end lineage of your ML models:

- Amazon SageMaker Studio is an IDE to support the ML experience. Studio consists of multiple separate services, several of which have applicability to the lineage use case.

- Amazon SageMaker Feature Store is a fully managed repository to store, update, retrieve, and share ML features. If a common set of features is shared across model building runs or across teams, you can use the feature store to avoid recreating the same set of features each time they’re needed. This saves time and money spent on resources. You can reference feature store data with a feature store ID, which you can associate with a training job while tracking the lineage of a model.

- Amazon SageMaker Lineage Tracking creates and stores information about the steps of an ML workflow from data preparation to model deployment. With the tracking information, you can reproduce the workflow steps, track model and dataset lineage, and establish model governance and audit standards. Lineage Tracking uses tracking entities to represent each of the elements in the ML workflow. SageMaker automatically creates tracking entities for SageMaker jobs (training, processing, batch transform), models, model packages, and endpoints if the data is available. Two types of tracking entities are defined: experiment entities and lineage entities. Experiment entities include trial components, trials, and experiments. Lineage entities are contexts, actions, artifacts, and associations. Each entity has a set of properties that you can use to associate relevant information with it, including type (model, image, dataset, and so on). You can supplement the SageMaker-created tracking entities with manually created tracking entities of your own; for example, you can represent a CodeCommit repository or an Amazon Simple Storage Service (Amazon S3) URI as artifacts.

- Amazon SageMaker Pipelines is a CI/CD service for ML. With Pipelines, you can manage your end-to-end ML workflow at scale by orchestrating all the steps of the ML workflow using a single interface. Pipelines can stitch together data processing steps, model training steps, postprocessing steps, model evaluation steps, and so on into a single workflow. Because a pipeline is defined as a set of steps with metadata associated with each of those steps, saving the pipeline definition (a JSON object) allows you to recreate the workflow at any point in time as long as the resources needed by each step can be accessed. Keeping track of the entire workflow is an important step towards model reproducibility.

- Amazon SageMaker Experiments lets you organize, track, compare and evaluate ML experiments and model versions. Experiments are made up of trials and trial components that can be referenced by IDs and can track all metadata associated with a model training job, such as the hyperparameters used to train the model, the performance metrics of the model, the data used, bias reports, model explainability reports, and more. Trials and trial components are encapsulated into experiments. You can use multiple experiments during the model building process.

- Amazon SageMaker Projects allow you to create a repeatable end-to-end ML solution using AWS Service Catalog provisioned products. This allows data scientists, MLOps teams, and organizations to create a single template or a set of templates for model building and deployment to bring standardization into the ML process. With this, you can track the specific template and version of the template used in an ML workflow in Lineage Tracking so that the same template can be used at the time of reproducing a model.

- The Amazon SageMaker Model Registry stores models and model versions in a centralized repository. The model registry is organized into model package groups, with each group having several versions of a model package. These versions can be marked as approved or rejected depending on the acceptance criteria determined by the team building these models. The model registry provides a centralized place for model management, model sharing within and across teams, and a standard mechanism to access and identify models.
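To make the model registry description above more concrete, the following sketch lists the approved versions in one model package group. It assumes `sagemaker_client` is a boto3 SageMaker client (for example `boto3.client("sagemaker")`) and that the group name is supplied by the caller; pagination is omitted for brevity, so only the first page of results is read.

```python
def approved_model_versions(sagemaker_client, group_name):
    """Return (version, ARN) pairs for approved model packages in one group.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    Only the first page of results is read in this sketch.
    """
    page = sagemaker_client.list_model_packages(
        ModelPackageGroupName=group_name,
        ModelApprovalStatus="Approved",
        SortBy="CreationTime",
        SortOrder="Descending",
    )
    return [
        (summary["ModelPackageVersion"], summary["ModelPackageArn"])
        for summary in page.get("ModelPackageSummaryList", [])
    ]
```

Each returned ARN can then serve as a starting point for a lineage lookup.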

In addition to the various features of SageMaker, several other AWS services relate to the concept of lineage:

- Amazon ECR is a fully managed container registry that makes it easy to store, manage, and track your container images. You may have separate container images used for preprocessing, training, and inference that need to be stored and versioned. To reproduce any step of the model building workflow, the exact version of the container image used by that step is required. When you store the Amazon ECR image ID along with the version of that image, you can retrieve the specific version of the container used at the point of interest even if that version is several iterations old and no longer in use.

- Amazon Simple Storage Service (Amazon S3) is the Amazon object store. You can use it to store the data for model training and for the model artifacts themselves. Amazon S3 supports data versioning, which allows tracking the data used for training a model not only by the Amazon S3 URI, but also by the version ID associated with that object in Amazon S3. As models are trained, the training dataset is continuously updated as new data is made available, or the entire dataset itself could be replaced with higher quality data. Keeping track of not only the Amazon S3 URI, but the version as well, lets your code keep a constant reference to an object’s location, with logic that always uses the latest version of the object, while the recorded version ID identifies exactly which data a given model was trained on. Without versioning, you need to upload new datasets to different Amazon S3 locations, and update the reference to a training dataset in the training code to reflect that.

- AWS CodeCommit is the Amazon fully managed source control service. The model building process is often a collaborative one, with source code being shared across individuals and teams. Source control practices are strongly encouraged when creating ML models for two reasons: collaboration and reproducibility. With a version control tool like CodeCommit, developers can have IDs associated with each version of code they have used (commit ID) while developing their models. After a successful training job, you can commit code and push it to CodeCommit, and link the specific commit ID associated with that code version with this training job. When reproducing a model, or rerunning a training job, you can roll back to the specific version of code used to generate that model or run that training job.
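The Amazon S3 versioning point above can be sketched as follows: record the version ID behind a key at training time, then read back exactly that version later. This assumes `s3_client` is a boto3 S3 client and that versioning is enabled on the bucket; the function names are hypothetical.

```python
def pin_dataset_version(s3_client, bucket, key):
    """Record the exact object version currently behind an S3 key.

    Assumption: s3_client = boto3.client("s3").
    """
    head = s3_client.head_object(Bucket=bucket, Key=key)
    return {
        "uri": f"s3://{bucket}/{key}",
        # VersionId is only present when bucket versioning is enabled.
        "version_id": head.get("VersionId"),
        "etag": head.get("ETag"),
    }


def read_pinned_dataset(s3_client, bucket, key, version_id):
    """Fetch the bytes of one specific, previously recorded version."""
    response = s3_client.get_object(Bucket=bucket, Key=key, VersionId=version_id)
    return response["Body"].read()
```

Storing the returned dictionary alongside the training job's metadata keeps the URI constant while still identifying which bytes were used.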

Build a solution with AWS

A complete ML workflow consists of many or all of the services described in the previous section, each of which is an important component of a model’s lineage.

Upstream and downstream lineage

The concepts of upstream and downstream lineage explore different mechanisms by which a model’s lineage is traversed and different problems that need to be solved using lineage. Take the example of reproducibility: given a model, you need to find all the entities that were part of the model creation—the training parameters, the datasets, preprocessing steps, and so on. The upstream lineage of a model is necessary in cases where there are stringent governance policies for ML models and when reproducing models across teams or different points in time.

Downstream lineage approaches the same entities from a different angle. Instead of starting with a model as the focal point, you may need to identify all the models that were trained on a given dataset, or trained using a certain version of training code. This is useful when troubleshooting issues or doing a blast radius analysis; for example, you may want to find all the models trained by a version of code that was later found to contain a bug. You use a specific version of code as the lineage focal point, and then traverse the lineage of that code artifact until the model artifacts are identified.
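The two traversal directions can be illustrated with a small in-memory graph. This is a sketch under stated assumptions: the node names and edge list are invented for illustration, and each edge points from an input to the entity it contributed to.

```python
from collections import deque

# Hypothetical lineage edges: (input, thing it contributed to).
EDGES = [
    ("code:commit-a1", "trial:t1"),
    ("data:prices@v1", "trial:t1"),
    ("trial:t1", "model:m1"),
    ("code:commit-a1", "trial:t2"),
    ("data:prices@v2", "trial:t2"),
    ("trial:t2", "model:m2"),
    ("code:commit-b2", "trial:t3"),
    ("data:prices@v2", "trial:t3"),
    ("trial:t3", "model:m3"),
]


def reachable(start, edges, direction):
    """Breadth-first walk: 'upstream' follows edges backwards, 'downstream' forwards."""
    if direction not in ("upstream", "downstream"):
        raise ValueError("direction must be 'upstream' or 'downstream'")
    neighbours = {}
    for source, destination in edges:
        if direction == "downstream":
            neighbours.setdefault(source, []).append(destination)
        else:
            neighbours.setdefault(destination, []).append(source)
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Upstream: everything that went into model m2 (reproducibility).
inputs_of_m2 = reachable("model:m2", EDGES, "upstream")

# Downstream: every model touched by a buggy commit (blast radius).
affected_models = {
    n for n in reachable("code:commit-a1", EDGES, "downstream") if n.startswith("model:")
}
```

The same walk serves both use cases; only the direction in which edges are followed changes.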

SageMaker Search API

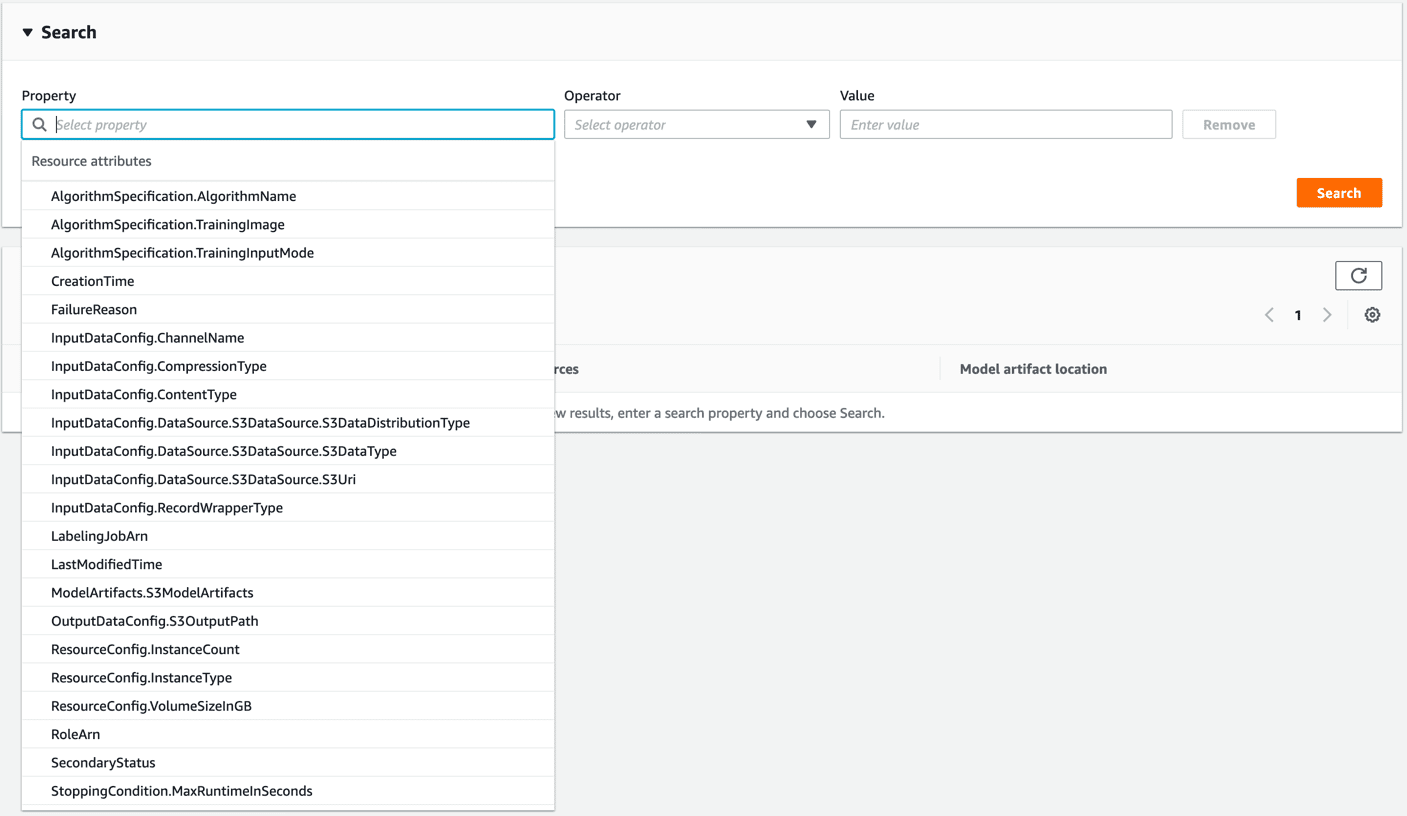

You can use the SageMaker Search API to look up information about the data that was used to train a model, the parameters used for training, the container image used, and model metrics. The following code is an example of how you can use the Search API to find the training job that created the model by using the model data URI:
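A minimal sketch of such a lookup, assuming `sagemaker_client` is a boto3 SageMaker client and `model_data_url` is the Amazon S3 URI of the model artifact (the function name is hypothetical):

```python
def find_training_jobs_by_model_data(sagemaker_client, model_data_url):
    """Return names of training jobs whose output artifact matches the URI.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    """
    search_params = {
        "Resource": "TrainingJob",
        "SearchExpression": {
            "Filters": [
                {
                    "Name": "ModelArtifacts.S3ModelArtifacts",
                    "Operator": "Equals",
                    "Value": model_data_url,
                }
            ]
        },
    }
    response = sagemaker_client.search(**search_params)
    return [
        result["TrainingJob"]["TrainingJobName"]
        for result in response.get("Results", [])
        if "TrainingJob" in result
    ]
```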

After the training job is found, the job can be described and all its information can be extracted, such as hyperparameters, input data, experiment configuration, trial components, Amazon ECR image used, and so on.
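One way to extract those fields is sketched below. The function works on the dictionary returned by `describe_training_job`; the output keys and the function name are hypothetical, chosen only for illustration.

```python
def summarize_training_job(description):
    """Pick lineage-relevant fields out of a DescribeTrainingJob response."""
    channels = {
        channel["ChannelName"]: channel["DataSource"]["S3DataSource"]["S3Uri"]
        for channel in description.get("InputDataConfig", [])
        if "S3DataSource" in channel.get("DataSource", {})
    }
    return {
        "job_name": description["TrainingJobName"],
        "image": description.get("AlgorithmSpecification", {}).get("TrainingImage"),
        "hyperparameters": dict(description.get("HyperParameters", {})),
        "input_data": channels,
        "model_data": description.get("ModelArtifacts", {}).get("S3ModelArtifacts"),
        "experiment": description.get("ExperimentConfig", {}),
    }


# Usage, assuming sagemaker_client = boto3.client("sagemaker"):
# summary = summarize_training_job(
#     sagemaker_client.describe_training_job(TrainingJobName="my-job")
# )
```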

In addition to the API, SageMaker Search provides a UI with which you can look up information related to a model using several properties. You can look for all the models that were trained using a specific training dataset by providing the Amazon S3 URI to that data, look for models based on roles, use the Search UI to find parameters associated with a training job, and more.

SageMaker Lineage Tracking

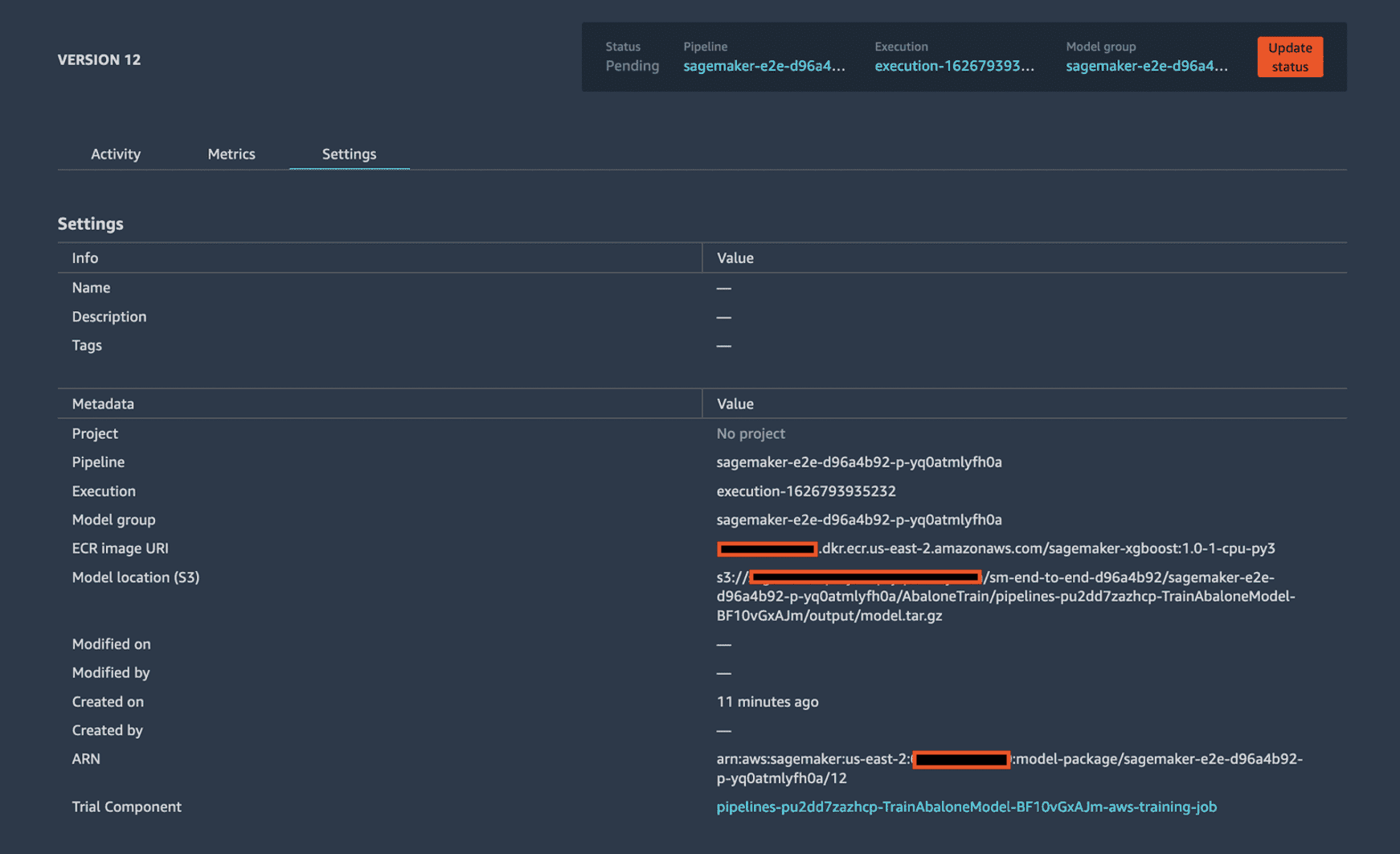

When a model is trained and added to the model registry, the trial component that created the model is linked to the model version in the registry so that the lineage of the model leading to the dataset that was used for training is accessible. Through the model registry, you can access the training job details, the inputs and outputs to that job, and any profiling information generated during training.

In addition to the trial component, through the model registry, you have visibility into the specific version of a pipeline if one was used to generate the model, with a link to the pipeline and execution ID.

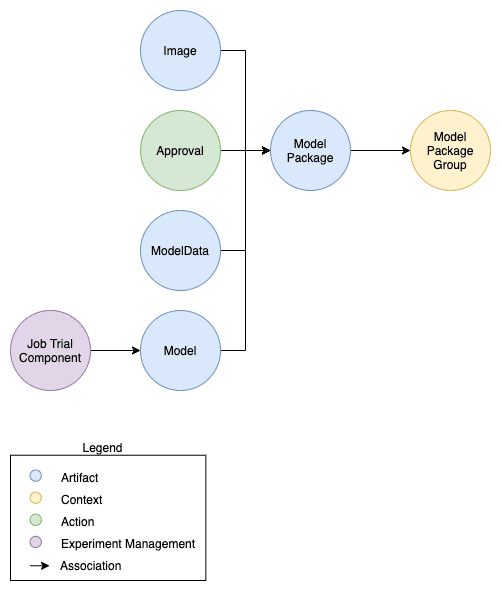

The following diagram shows the lineage structure that is created by Lineage Tracking when a model is trained and added to the registry.

SageMaker provides an API for lineage that allows you to interact with SageMaker artifacts, actions, associations, and contexts. For example, you may want to use the lineage API to find the training data used to generate a model. Starting with the model in the model registry, the first step is to find the artifact ARN associated with the model (see the following code). Note that the artifact ARN for the model is different from the model’s ARN.
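A hedged sketch of this first step follows. It assumes `sagemaker_client` is a boto3 SageMaker client and that the lineage artifact for a registered model uses the model package ARN as its source URI; the function name is hypothetical.

```python
def find_model_artifact_arns(sagemaker_client, model_package_arn):
    """Return lineage artifact ARNs whose source URI is the model package ARN.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    """
    response = sagemaker_client.list_artifacts(SourceUri=model_package_arn)
    return [
        summary["ArtifactArn"]
        for summary in response.get("ArtifactSummaries", [])
    ]
```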

SageMaker lineage artifacts are associated to other artifacts through associations. All the associations for this model can be found using the associations API:
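A minimal sketch of such a query, assuming `sagemaker_client` is a boto3 SageMaker client and `artifact_arn` is the model's artifact ARN. It collects every association that points at the artifact, following `NextToken` across pages; the output keys are hypothetical.

```python
def list_incoming_associations(sagemaker_client, artifact_arn):
    """Collect every association whose destination is the given artifact.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    """
    associations = []
    kwargs = {"DestinationArn": artifact_arn}
    while True:
        page = sagemaker_client.list_associations(**kwargs)
        for summary in page.get("AssociationSummaries", []):
            associations.append(
                {
                    "source_arn": summary["SourceArn"],
                    "source_type": summary.get("SourceType"),
                    "association_type": summary.get("AssociationType"),
                }
            )
        token = page.get("NextToken")
        if not token:
            return associations
        kwargs["NextToken"] = token
```

Passing `SourceArn` instead of `DestinationArn` walks the associations in the opposite direction.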

In addition to the information automatically captured in the artifacts for SageMaker entities, artifacts have properties that you can use to add custom information to the model. For example, if you’re using source version control, a commit ID might need to be associated with a model. You can update artifacts to add these properties.
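For example, attaching a commit ID might look like the following sketch. It assumes `sagemaker_client` is a boto3 SageMaker client; the property key `CommitId` and the function name are illustrative choices, not a required convention.

```python
def tag_artifact_with_commit(sagemaker_client, artifact_arn, commit_id):
    """Attach a source-control commit ID to an existing lineage artifact.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    The property key "CommitId" is a hypothetical naming choice.
    """
    return sagemaker_client.update_artifact(
        ArtifactArn=artifact_arn,
        Properties={"CommitId": commit_id},
    )
```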

You can create artifacts for code as well. For example, if a training or inference script is stored in Amazon S3, you can create an artifact with that Amazon S3 URI and associate the code artifact with the model.
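The following sketch creates a code artifact and links it to a model artifact. It assumes `sagemaker_client` is a boto3 SageMaker client; the artifact name, the `Code` artifact type, and the choice of the `ContributedTo` association type are illustrative assumptions.

```python
def link_code_to_model(sagemaker_client, code_s3_uri, model_artifact_arn):
    """Create an artifact for a script in Amazon S3 and associate it with a model.

    Assumption: sagemaker_client = boto3.client("sagemaker").
    """
    created = sagemaker_client.create_artifact(
        ArtifactName="training-script",  # hypothetical name
        Source={"SourceUri": code_s3_uri},
        ArtifactType="Code",  # free-form label chosen for this sketch
    )
    code_artifact_arn = created["ArtifactArn"]
    sagemaker_client.add_association(
        SourceArn=code_artifact_arn,
        DestinationArn=model_artifact_arn,
        AssociationType="ContributedTo",
    )
    return code_artifact_arn
```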

When you use the artifacts and associations created by default when training and registering a model in SageMaker, along with taking advantage of the flexibility to create custom artifacts and associations, you can build a robust lineage solution to support upstream and downstream lineage use cases.

If you want to create a lineage solution by ingesting all the artifacts, associations, and data related to the model building process into a graph database, Amazon Neptune is a suitable option as the basis of a lineage solution. An example notebook that walks through the process of ingesting lineage information into Neptune to enable easy access of upstream and downstream lineage is available on GitHub.

Conclusion

Reproducibility in the ML research space is a challenging and multi-faceted topic, and quantitative finance is a prominent use case. By working with our customers across industry verticals, AWS is leading the way towards a holistic solution set in this field. We encourage you to reach out and discuss your use cases with the authors via your AWS account manager. To learn more about Amazon SageMaker, visit the webpage.
