Organize product data to your taxonomy with Amazon SageMaker

When data comes from various sources, or the way it is collected has changed over time, it often becomes difficult to organize. Perhaps you have product category names that are similar but don’t match, and you want to surface these products as a group on your website. To do that, you need to go through the tedious work of manually creating a map from source to target so you can transform the data into your own taxonomy. In these cases, we’re not talking about a few hundred rows of data, but more often many hundreds of thousands of rows, with new data flowing in regularly.

In this post, I discuss how to organize product data to your classification needs with Amazon SageMaker.

Business problem

Many companies ingest data from various third-party sources into their data lake. This data may come in various formats such as documents, product catalogs, and TV program metadata. After this data lands in the data lake, you may need to organize this data into your company’s own taxonomy to create, for example, a better user search experience on front-end and back-office applications.

For this post, I use a public dataset of books that contains metadata associated with the book such as title, description, and category. We want to see if we can train a classifier to predict the category of a book based on its title and description. You can apply this solution to other use cases, where you could take the product title and product description and predict the product category. To improve the performance of this model, we may also want to introduce other associated data, but for this example we focus on these two features.

Solution overview

I tackle this problem in two parts. In the first, I use a natural language processing (NLP) algorithm to create a vector from the words in the title and description. This vector captures the semantics of the sentence, which lets us infer meaning. Word2vec is a powerful NLP algorithm that you can use to convert words into vectors with numerical values after training the algorithm on a corpus of text. A well-known example is the following vector arithmetic:

King – Man + Woman = Queen

The vector has in essence captured that King is royalty, a senior position, and male. If you remove the male component and replace it with female, you arrive at the vector for Queen. Queen and King likely sit close together in the vector space and share many of the same characteristics apart from gender.

How well this works depends on the corpus of text the model was trained on, and it doesn’t necessarily carry over to other words. However, the example demonstrates how words are converted into vectors that capture the semantics of each word. It can help you find similar words using an algorithm such as nearest neighbors.
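The arithmetic above can be sketched with a few hand-made vectors. This is only an illustration: the three dimensions (royalty, male, female) and all values in `TOY_VECTORS` are invented for this example, whereas real Word2vec embeddings are learned from a corpus and have many more dimensions.

```python
import math

# Hand-made toy vectors with dimensions (royalty, male, female).
# These values are invented for illustration; real embeddings are learned.
TOY_VECTORS = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "book": [0.0, 0.1, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_word(vector, vectors, exclude=()):
    """Return the word whose vector is most similar to the given vector."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine_similarity(vector, candidates[w]))


# King - Man + Woman, computed element by element.
result = [
    k - m + w
    for k, m, w in zip(TOY_VECTORS["king"], TOY_VECTORS["man"], TOY_VECTORS["woman"])
]

# Exclude the input words so the search returns a different word.
closest = nearest_word(result, TOY_VECTORS, exclude=("king", "man", "woman"))
print(result, closest)
```

With these toy values, the result vector matches the vector for queen exactly. With learned embeddings the match is only approximate, which is why a nearest-neighbor search is used.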

If two vectors are close together, they likely have similar meaning. This helps when I use these vectors to predict what class or genre the book belongs to. To create the word embeddings, I use FastText, a library that maps words to vectors of numerical values. FastText is an extension of Word2vec. It takes longer to train the text representation with FastText than with Word2vec, but it performs better. Also, because the training is done on subwords, it works well with words that weren’t part of the training vocabulary. Subwords are the substrings contained in a word. For example, if we set the subword length to 3, then fasttext is represented as <fa, fas, ast, stt, tte, tex, ext, xt>. The special characters < and > define the start and end of the word. This is key because you may have new data flowing in that wasn’t part of the original vocabulary. If the word’s substrings are known, you can still generate a vector representation of it.
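The subword idea can be sketched in a few lines. The helper `char_ngrams` below is a hypothetical name written for this illustration and is not part of the FastText API. It also uses a single subword length, whereas FastText itself works with a range of lengths.

```python
def char_ngrams(word, n=3):
    """Return the character n-grams of a word wrapped in < and > markers."""
    wrapped = f"<{word}>"
    return [wrapped[i:i + n] for i in range(len(wrapped) - n + 1)]


# Subwords seen during training (here, from a single word).
known_subwords = set(char_ngrams("fasttext"))

# A word that was not in the training vocabulary still shares subwords.
unseen_subwords = char_ngrams("fastest")
shared = [g for g in unseen_subwords if g in known_subwords]

print(char_ngrams("fasttext"))
print(shared)
```

Because the unseen word shares some subwords with the training vocabulary, a subword-based model can still build a vector for it from those pieces.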

This leads to the second part, in which I use another algorithm, XGBoost, to classify our data, specifically to predict the class or genre of the book. XGBoost is a supervised learning algorithm, so it requires a dataset that already has a target column, also known as the label. As a prerequisite, you therefore need to label enough data to be able to train a classifier.

With machine learning (ML) problems, it all starts with the data. Ideally, your supervised dataset is balanced, that is, it has an evenly distributed number of rows per class. This improves the performance of the trained model. If the dataset isn’t balanced, you can use procedures such as over-sampling or under-sampling to improve the situation: you duplicate rows from the minority class or remove rows from the majority class, respectively, to reach a more balanced dataset.
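As a rough sketch of these two procedures, the following code performs random over-sampling and under-sampling on a tiny made-up dataset. The function names, row values, and labels are assumptions for illustration only, and this is not necessarily how the notebook handles imbalance.

```python
import random
from collections import Counter, defaultdict


def _group_by_class(rows, labels):
    """Group rows by their class label."""
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)
    return by_class


def oversample(rows, labels, seed=0):
    """Duplicate random minority rows until every class matches the largest."""
    rng = random.Random(seed)
    by_class = _group_by_class(rows, labels)
    target = max(len(members) for members in by_class.values())
    out_rows, out_labels = [], []
    for label, members in by_class.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        out_rows.extend(members + extra)
        out_labels.extend([label] * target)
    return out_rows, out_labels


def undersample(rows, labels, seed=0):
    """Keep a random subset of each class, sized to match the smallest class."""
    rng = random.Random(seed)
    by_class = _group_by_class(rows, labels)
    target = min(len(members) for members in by_class.values())
    out_rows, out_labels = [], []
    for label, members in by_class.items():
        out_rows.extend(rng.sample(members, target))
        out_labels.extend([label] * target)
    return out_rows, out_labels


# Made-up example: five rows of one class, one row of another.
rows = ["r1", "r2", "r3", "r4", "r5", "r6"]
labels = ["history"] * 5 + ["medicine"]

over_rows, over_labels = oversample(rows, labels)
under_rows, under_labels = undersample(rows, labels)
print(Counter(over_labels), Counter(under_labels))
```

Over-sampling keeps all the data but repeats minority rows, which can encourage overfitting to them. Under-sampling discards majority rows, which can throw away useful signal.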

Dataset

I use a public dataset; the following table contains a subset of this dataset, including the features that we use to create our model.

| category | description | title |
| --- | --- | --- |
| [Books, History, Military] | [] | War Plans of the Great Powers, 1880–1914 |
| [Books, Science Fiction & Fantasy, Science… | [In bestsellers McCaffrey and Scarborough’s ch… | First Warning: Acorna’s Children |
| [Books, Science Fiction & Fantasy, Fantasy] | [1st UK edition paperback fine In stock shipped… | Xena Warria Princess – Prophecy of Darkness |
| [Books, Medical Books, Medicine] | [Master dosage calculations with the ratio-pro… | Dosage Calculations: Ratio-Proportion Approach… |
| [Books, Biographies & Memoirs, True Crime] | [] | A Handful of Summers |

This particular dataset is largely imbalanced. The main problem with this is that during training, the model favors the majority class. You might find yourself with a model that predicts well for the majority class, but doesn’t perform as well for the other classes. For this reason, when analyzing the performance of the model, check metrics like precision, recall, and F1 score in addition to accuracy. These can reveal that although the accuracy of the model is high, it isn’t good at predicting the minority classes. In this case, you need to go back to the data and resolve the problem there.

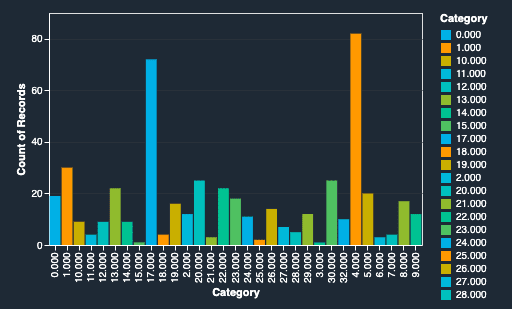

The chart shows the class distribution of the data. The x-axis shows the class label, where we have converted the category of the book to a number, and the y-axis shows the count of each class label. You can see that class labels 4 and 17 far outnumber the other classes. Even with the best algorithm, you will struggle to get good performance because of the large imbalance.
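To illustrate why accuracy alone can mislead on imbalanced data, the following sketch computes precision, recall, and F1 per class for a made-up case. The labels and predictions are invented: 95 rows of a majority class, 5 rows of a minority class, and a model that always predicts the majority.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def per_class_metrics(y_true, y_pred, label):
    """Return (precision, recall, f1) for one class label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented example: class 0 is the majority, class 1 the minority.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100  # a model that always predicts the majority class

print("accuracy:", accuracy(y_true, y_pred))
print("majority:", per_class_metrics(y_true, y_pred, 0))
print("minority:", per_class_metrics(y_true, y_pred, 1))
```

Here the accuracy is 95%, yet precision, recall, and F1 for the minority class are all zero, which is the pattern the per-class metrics are meant to expose.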

Methodology

SageMaker offers many built-in ML algorithms. A good candidate for a text classification problem like this is BlazingText. However, in this post, I use the FastText implementation in Gensim, a powerful NLP toolkit. FastText is useful because it can create word vectors for words that weren’t in the original training vocabulary. This provides an advantage over Word2vec, which is part of the BlazingText implementation, and it allows the solution to work reliably for the new data that is flowing in regularly.

I use the framework container for XGBoost together with XGBoost’s scikit-learn-style classifier, which allows me to pass in a sample_weight parameter. This parameter isn’t available with the built-in XGBoost algorithm. By setting this parameter, I can weight each training row according to its class, so the model performs better for the minority classes.
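One way to derive such weights is the "balanced" heuristic, where each class gets a weight inversely proportional to its frequency. The sketch below is an assumption about how the weights could be computed, not necessarily what the notebook does. The helper name and the label counts are made up.

```python
from collections import Counter


def balanced_sample_weights(labels):
    """Return one weight per row plus the per-class weight mapping.

    Uses weight = n_samples / (n_classes * class_count), so that rare
    classes receive larger weights than common ones.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    class_weight = {
        label: n_samples / (n_classes * count) for label, count in counts.items()
    }
    return [class_weight[label] for label in labels], class_weight


# Made-up label column: class 4 is common, class 27 is rare.
labels = [4] * 6 + [17] * 3 + [27] * 1
weights, class_weight = balanced_sample_weights(labels)
print(class_weight)

# The per-row weights would then be passed to the classifier's fit call,
# for example: xgboost.XGBClassifier().fit(X, y, sample_weight=weights)
```

With this scheme every class contributes the same total weight, so errors on a rare class cost as much overall as errors on a common one.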

SageMaker notebook

I have published a SageMaker notebook that takes you through the preprocessing and training of the models.

You can launch a copy of the notebook within the SageMaker console and run the notebook cells to go through the entire workflow of inferring a product category from its product description and title.

The notebook first takes you through cleansing the data, where I normalize the case of the words and remove stopwords, that is, the common words that appear often and don’t help capture the semantics of the sentences. Then I tokenize the sentences so that they’re in a format ready to be consumed by the algorithm.
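A minimal sketch of this kind of cleansing follows, applied to a title from the dataset table. The stopword list is a small hypothetical one, and lowercasing plus a simple regular expression stand in for whatever the notebook uses. Real pipelines typically rely on a fuller stopword list from an NLP library.

```python
import re

# Hypothetical, deliberately small stopword list for illustration.
STOPWORDS = {"a", "an", "and", "in", "of", "on", "the", "to", "with"}


def clean_and_tokenize(text):
    """Lowercase the text, split it into word tokens, and drop stopwords."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [token for token in tokens if token not in STOPWORDS]


title = "War Plans of the Great Powers, 1880–1914"
print(clean_and_tokenize(title))
# ['war', 'plans', 'great', 'powers', '1880', '1914']
```

Note that this simple pattern also splits on apostrophes and hyphens, which may or may not be what you want for your own data.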

The following code snippets illustrate how you can generate vectors for the sentences in each row after you train your model.

The following code is an example sentence:

The following is an example of the sentence after feature engineering and tokenizing:

The next cell shows an example of the vector representation of the preceding sentence after our training. When training the model, I selected a vector size of 50, so the model has mapped this sentence to a vector in this space. From this vector, you could measure the cosine distance between this vector and another, and from that measurement, you could assess whether the vectors are similar in semantics.

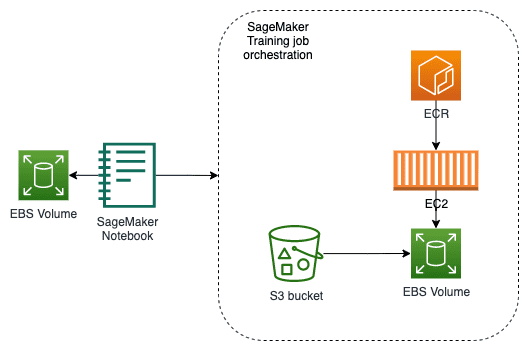
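To make the cosine distance comparison concrete, the following sketch builds sentence vectors by averaging word vectors and then compares them. Averaging is one common approach and is an assumption here, because the notebook may build sentence vectors differently. The 3-dimensional word vectors are invented, whereas the trained model uses 50 dimensions.

```python
import math

# Invented 3-dimensional word vectors, for illustration only.
TOY_WORD_VECTORS = {
    "war": [0.9, 0.1, 0.0],
    "plans": [0.6, 0.2, 0.1],
    "battle": [0.8, 0.2, 0.0],
    "strategy": [0.7, 0.1, 0.2],
    "dosage": [0.0, 0.1, 0.9],
    "calculations": [0.1, 0.2, 0.8],
}


def sentence_vector(tokens, word_vectors):
    """Average the vectors of the known tokens in a sentence."""
    vectors = [word_vectors[t] for t in tokens if t in word_vectors]
    if not vectors:
        raise ValueError("none of the tokens have a known vector")
    dims = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dims)]


def cosine_distance(a, b):
    """1 minus cosine similarity: 0 means same direction, larger means less alike."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


military_a = sentence_vector(["war", "plans"], TOY_WORD_VECTORS)
military_b = sentence_vector(["battle", "strategy"], TOY_WORD_VECTORS)
medical = sentence_vector(["dosage", "calculations"], TOY_WORD_VECTORS)

print(cosine_distance(military_a, military_b))  # small: similar topics
print(cosine_distance(military_a, medical))     # larger: different topics
```

In this toy version, unknown tokens are skipped. A subword-based model such as FastText can instead produce a vector for an unseen word from its subwords.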

One benefit of using SageMaker is that from your notebook, with a few lines of code, you can launch your training job on the Amazon Elastic Compute Cloud (Amazon EC2) instance of your choice. SageMaker orchestrates the sync of data to the training instance, deploys and runs the Docker image on the instance, and runs your training script or serves your inference. It also shuts down the infrastructure when the training is finished so you’re only charged for the seconds that your training took to complete. The EC2 instance and Docker container are spun up automatically. There is no need for the ML engineer to configure it, except for a single line of code to specify the size of the instances and the number of instances you require.

Results

When we train models with the two versions of XGBoost, the weighted version gives a slightly better F1 score than the built-in method. However, we could still do much more to the data to improve this metric. For instance, with a larger sample of the same dataset, this metric starts to improve and the gap between the algorithms closes. So while building models, you sometimes need to test different methods, run experiments, and compare the metrics that are important to you. The first step to improve the performance is always to return to the data.

In the following results, the F1 scores are largely the same, with the FastText score slightly higher. Of the two XGBoost F1 scores, the version where you supply the class weights performs slightly better. Keep in mind that we’re dealing with 32 classes with heavily imbalanced data. These are factors that you can control with your data before you start training a model for inference.

The following is the F1 score when we used XGBoost and supplied the weights of the classes:

The following is the F1 score when we used the built-in container image of XGBoost from SageMaker:

The following is the F1 score when we use FastText’s text classification library:

Furthermore, in my notebook, I share an example of how you can create your FastText model locally. Then you can store this model binary in Amazon Simple Storage Service (Amazon S3). You can then deploy your model to a real-time SageMaker inference endpoint using the BlazingText container. This is compatible with FastText, so you don’t need to create your own container image to serve your predictions.

The following cell shows an example of invoking a prediction locally with FastText:

The following cell shows an example of invoking a prediction in SageMaker, in the cloud with the same model, but using the BlazingText code to serve the inference:

Although FastText returns multiple labels with a score for each, we can see label 27 comes in at 63% for both as the top result:

Clean up

To avoid incurring future charges, delete the SageMaker endpoints and stop the SageMaker notebook.

Conclusion

In this post, I explored how you can extract the semantics from sentences and use that to predict what class that sentence belongs to. You can use this solution to scale out a taxonomy workload that may otherwise be manual or require constant updating to work efficiently. Because you can deploy these models to a real-time inference endpoint, you can classify your data as soon as it lands in your data lake.

As a next step, try modifying the SageMaker notebook to download your own dataset and experiment with building your own classifier.

About the Author

Mark Watkins is a Solutions Architect within the Media and Entertainment team, helping his customers solve many data and ML problems. Away from professional life, he loves spending time with his family and watching his two little ones grow up.
