Implement MLOps using AWS pre-trained AI Services with AWS Organizations

The AWS Machine Learning Operations (MLOps) framework is an iterative and repetitive process for evolving AI models over time. Like DevOps, practitioners gain efficiencies promoting their artifacts through various environments (such as quality assurance, integration, and production) for quality control. In parallel, customers rapidly adopt multi-account strategies through AWS Organizations and AWS Control Tower to create secure, isolated environments. This combination can introduce challenges for implementing MLOps with AWS pre-trained AI Services, such as Amazon Rekognition Custom Labels. This post discusses design patterns for reducing that complexity while still maintaining security best practices.

Overview

Customers across every industry vertical recognize the value of operationalizing machine learning (ML) efficiently and reducing the time to deliver business value. Most AWS pre-trained AI Services address this situation through out-of-the-box capabilities for computer vision, translation, and fraud detection, among other common use cases. Many use cases require domain-specific predictions that go beyond generic answers. The AI Services can fine-tune the predictive model results using customer-labeled data for those scenarios.

Over time, the domain-specific vocabulary changes and evolves. For example, suppose a tool manufacturer creates a computer vision model to detect its products in images (such as hammers and screwdrivers). In a future release, the business adds support for wrenches and saws. These new labels necessitate code changes on the manufacturer’s websites and custom applications. This creates a dependency: the model and the application code must be released simultaneously.

The AWS MLOps framework addresses these release challenges through iterative and repetitive processes. Before reaching production end users, the model artifacts must traverse various quality gates, just as application code does. You typically implement those quality gates using multiple AWS accounts within an AWS organization. This approach gives the flexibility to centrally manage these application domains and enforce guardrails and business requirements. It’s becoming increasingly common to have tens or even hundreds of accounts within your organization. However, you must balance your workload isolation needs against the team size and complexity.

MLOps practitioners have standard procedures for promoting artifacts between accounts (such as QA to production). These patterns are straightforward to implement, relying on copying code and binary resources between Amazon Simple Storage Service (Amazon S3) buckets. However, AWS pre-trained AI Services don’t currently support copying the trained custom model across AWS accounts. Until such a mechanism exists, you need to retrain the models in each AWS account using the same dataset, which costs both time and money for every new account. Retraining can be a viable option for some customers. However, in this post, we demonstrate how to define and evolve these custom models centrally while securely sharing them across an AWS organization’s accounts.

Solution overview

This post discusses design patterns for securely sharing AWS pre-trained AI Service domain-specific models. These services include Amazon Fraud Detector, Amazon Transcribe, and Amazon Rekognition, to name a few. Although these strategies are broadly applicable, we focus on Rekognition Custom Labels as a concrete example. We intentionally avoid diving too deep into Rekognition Custom Labels-specific nuances.

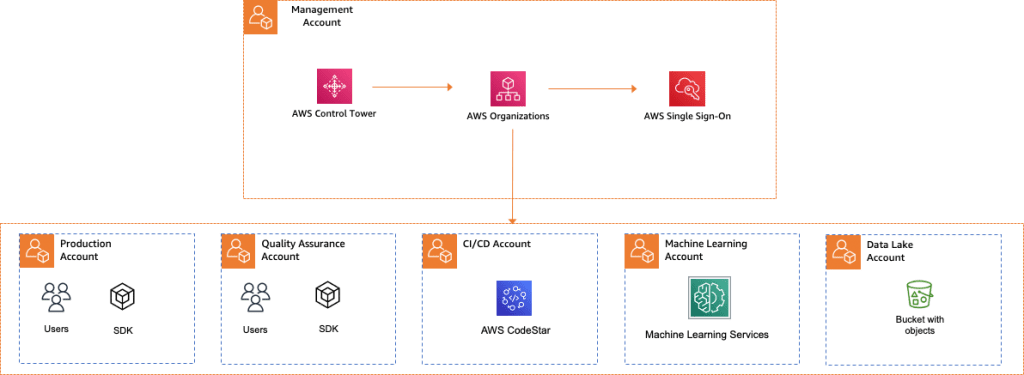

The architecture begins with a configured AWS Control Tower in the management account. AWS Control Tower provides the easiest way to set up and govern a secure, multi-account AWS environment. As shown in the following diagram, we use Account Factory in AWS Control Tower to create five AWS accounts:

- CI/CD account for deployment orchestration (for example, with AWS CodeStar)

- Production account for external end-users (for example, a public website)

- Quality assurance account for internal development teams (such as preproduction)

- ML account for custom models and supporting systems

- AWS Lake House account holding proprietary customer data

This configuration might be too granular or coarse, depending on your regulatory requirements, industry, and size. Refer to Managing the multi-account environment using AWS Organizations and AWS Control Tower for more guidance.

Create the Rekognition Custom Labels model

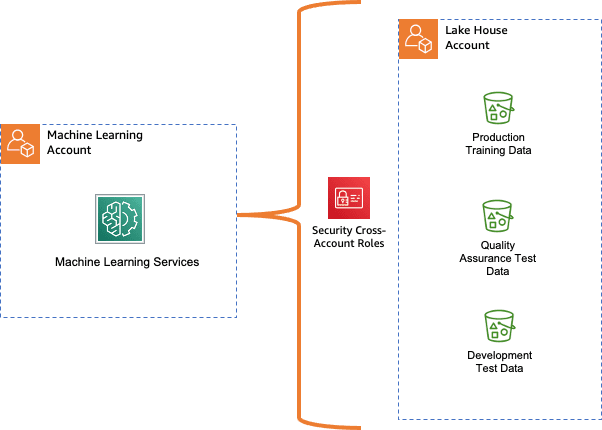

The first step to creating a Rekognition Custom Labels model is choosing the AWS account to host it. You might begin your ML journey using a single ML account. This approach consolidates any tooling and procedures into one place. However, this centralization can cause bloat in the individual account and lead to monolithic environments. More mature enterprises segment this ML account by team or workload. Regardless of the granularity, the objective is the same: define and train models centrally, once.

This post demonstrates using a Rekognition Custom Labels model with a single ML account and a separate data lake account (see the following diagram). When the data resides in a different account, you must configure a resource policy to provide cross-account access to the S3 bucket objects. This procedure securely shares the bucket’s contents with the ML account. See the quick start samples for more information on creating an Amazon Rekognition domain-specific model.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AWSRekognitionS3AclBucketRead20191011",
      "Effect": "Allow",
      "Principal": {
        "Service": "rekognition.amazonaws.com"
      },
      "Action": [
        "s3:GetBucketAcl",
        "s3:GetBucketLocation"
      ],
      "Resource": "arn:aws:s3:::S3:"
    },
    {
      "Sid": "AWSRekognitionS3GetBucket20191011",
      "Effect": "Allow",
      "Principal": {
        "Service": "rekognition.amazonaws.com"
      },
      "Action": [
        "s3:GetObject",
        "s3:GetObjectAcl",
        "s3:GetObjectVersion",
        "s3:GetObjectTagging"
      ],
      "Resource": "arn:aws:s3:::S3:/*"
    },
    {
      "Sid": "AWSRekognitionS3ACLBucketWrite20191011",
      "Effect": "Allow",
      "Principal": {
        "Service": "rekognition.amazonaws.com"
      },
      "Action": "s3:GetBucketAcl",
      "Resource": "arn:aws:s3:::S3:"
    },
    {
      "Sid": "AWSRekognitionS3PutObject20191011",
      "Effect": "Allow",
      "Principal": {
        "Service": "rekognition.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::S3:/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    }
  ]
}

Enable cross-account access

After you build and deploy the model, the endpoint is only available within the ML account. Do not use a static key to share access. You must delegate access to the production (or QA) account using AWS Identity and Access Management (IAM) roles. To create a cross-account role in the ML account, complete the following steps:

- On the Rekognition Custom Labels console, choose Projects and choose your project name.

- Choose Models and your model name.

- On the Use model tab, scroll down to the Use your model section.

- Copy the model Amazon Resource Name (ARN). It should be formatted as follows: arn:aws:rekognition:region-name:account-id:project/project-name/version/version-name/timestamp.

- Create a role with rekognition:DetectCustomLabels permissions to the model ARN and a trust policy allowing sts:AssumeRole from the production (or QA) account (for example, arn:aws:iam::PROD_ACCOUNT_ID_HERE:root).

- Optionally, attach additional policies for any workload-specific actions (such as accessing S3 buckets).

- Optionally, configure the condition element to enforce additional delegation requirements.

- Record the new role’s ARN to use in the next section.
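The two policy documents described in the steps above can be sketched in Python as plain dictionaries. This is a minimal illustration, not the authors' exact policies: the helper names, the account IDs, and the sample model ARN are placeholders chosen for this example.

```python
import json


def build_trust_policy(consumer_account_id: str) -> dict:
    """Trust policy that lets principals in the consumer account assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{consumer_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_model_access_policy(model_arn: str) -> dict:
    """Permissions policy that allows inference against one model version only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "rekognition:DetectCustomLabels",
                "Resource": model_arn,
            }
        ],
    }


# Placeholder values (assumptions for illustration only).
PROD_ACCOUNT_ID = "111122223333"
MODEL_ARN = (
    "arn:aws:rekognition:us-east-1:444455556666:"
    "project/tools/version/tools.v2/1600000000000"
)

print(json.dumps(build_trust_policy(PROD_ACCOUNT_ID), indent=2))
print(json.dumps(build_model_access_policy(MODEL_ARN), indent=2))
```

The printed JSON can be pasted into the IAM console when creating the role in the ML account. Trusting the account root delegates the decision about which identities may assume the role to the consumer account's own IAM policies.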

Invoke the endpoint

With the security policies in place, it’s time to test the configuration. A simple approach involves creating an Amazon Elastic Compute Cloud (Amazon EC2) instance and using the AWS Command Line Interface (AWS CLI). Invoke the endpoint with the following steps:

- In the production (or QA) account, create a role for Amazon EC2.

- Attach a policy allowing sts:AssumeRole on the ML account’s cross-account role ARN.

- Launch an Amazon Linux 2 instance with the role from the previous step.

- Wait for it to provision, then connect to the Linux instance using SSH.

- Invoke the command aws sts assume-role to switch to the cross-account role from the previous section.

- Start the model endpoint, if not already running, using the Rekognition console or the start-project-version AWS CLI command.

- Invoke the command aws rekognition detect-custom-labels to test the operation.

You can also perform this test using the AWS SDK and another compute resource (for example, AWS Lambda).
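As a rough sketch of the SDK variant, the following Python function assumes the cross-account role and then calls DetectCustomLabels with the temporary credentials. It assumes boto3 is installed and that the caller's default Region matches the model's Region. The function name, the session name, and the `client_factory` parameter (which exists only so the logic can be exercised with stubs) are choices made for this example.

```python
def detect_with_cross_account_role(role_arn, model_arn, bucket, key,
                                   min_confidence=50.0, client_factory=None):
    """Assume the ML account's role, then run inference on an image in S3."""
    if client_factory is None:
        import boto3  # imported lazily so the function can be tested with stubs
        client_factory = boto3.client

    # Exchange the caller's identity for temporary credentials in the ML account.
    sts = client_factory("sts")
    credentials = sts.assume_role(
        RoleArn=role_arn,
        RoleSessionName="rekognition-cross-account-test",
    )["Credentials"]

    # Build a Rekognition client that signs requests with those credentials.
    rekognition = client_factory(
        "rekognition",
        aws_access_key_id=credentials["AccessKeyId"],
        aws_secret_access_key=credentials["SecretAccessKey"],
        aws_session_token=credentials["SessionToken"],
    )

    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    return [(label["Name"], label["Confidence"]) for label in response["CustomLabels"]]
```

The image must be readable by the assumed role, which is one reason the role may need the optional S3 permissions mentioned in the previous section.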

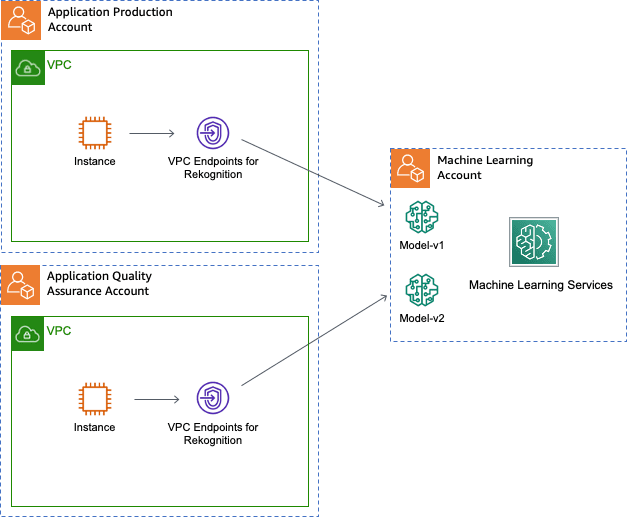

Avoiding the public internet

In the previous section, the detect-custom-labels request uses the virtual private cloud’s (VPC) internet gateway and traverses the public internet. TLS/SSL encryption sufficiently secures the communication channel for many workloads. For workloads that need more, you can use AWS PrivateLink to enable connections between the VPC and supporting services without requiring an internet gateway, NAT device, VPN connection, transit gateway, or AWS Direct Connect connection. The detect-custom-labels request then stays on the AWS network and is never exposed to the public internet. AWS PrivateLink supports all services used within this post. You can also use IAM conditions in the cross-account role’s policy to require that clients reach the pre-trained AI Services through private connectivity. This control adds another level of protection that prevents misconfigured clients from using the pre-trained AI Service’s internet-facing endpoint. For additional information, see Using Amazon Rekognition with Amazon VPC endpoints, AWS PrivateLink for Amazon S3, and Using AWS STS interface VPC endpoints.
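One way to express the private-connectivity requirement is a condition on the global key aws:SourceVpce in the role's permissions policy. The sketch below is an assumption about how such a policy could look, not a policy taken from this post; the helper name and the endpoint ID are placeholders.

```python
def build_private_only_model_policy(model_arn: str, vpc_endpoint_id: str) -> dict:
    """Allow inference only for requests that arrive through one VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "rekognition:DetectCustomLabels",
                "Resource": model_arn,
                # Requests sent to the internet-facing endpoint carry no
                # aws:SourceVpce value, so they do not match this statement.
                "Condition": {"StringEquals": {"aws:SourceVpce": vpc_endpoint_id}},
            }
        ],
    }
```

Because IAM denies by default, a request that does not satisfy the condition is rejected unless some other statement allows it.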

The following diagram illustrates the VPC endpoint configuration between the production account, ML account, and QA account.

Build a CI/CD pipeline for promoting models

AWS recommends continuously adding training and test data to the Rekognition Custom Labels project dataset to improve models. After you add more data to a project, training a new model can improve accuracy or change the set of labels.

In MLOps, model artifacts must be consistent. To accomplish this with pre-trained AI Services, AWS recommends promoting the model endpoint by updating the code’s reference to the new model version’s ARN. This approach avoids retraining the domain-specific model in each environment (such as QA and production accounts). Your applications can use the new model’s ARN as a runtime variable using AWS Systems Manager within a multi-account or multi-stage environment.
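Reading the model ARN at runtime could look like the following sketch, which assumes the ARN is stored as a plain string parameter in AWS Systems Manager Parameter Store. The function name and the parameter path used in the usage note are hypothetical; `ssm_client` is injectable so the lookup can be exercised without AWS access.

```python
def get_model_arn(parameter_name, ssm_client=None):
    """Return the model version ARN stored under the given parameter name."""
    if ssm_client is None:
        import boto3  # imported lazily so the function can be tested with stubs
        ssm_client = boto3.client("ssm")

    response = ssm_client.get_parameter(Name=parameter_name)
    return response["Parameter"]["Value"]
```

An application would call something like `get_model_arn("/prod/rekognition/model-arn")` at startup or per request, so promoting a model becomes a parameter update rather than a code change.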

You can limit access to the cross-account model at three levels of granularity: the account, the project, and the model version. Model versions are immutable and have a unique ARN that maps to a specific point-in-time training: arn:aws:rekognition:region:account:project/project_name/version/name/timestamp.
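To make the three granularity levels concrete, the sketch below parses a model version ARN and derives a policy resource pattern for each level. It assumes the standard ARN layout (partition, service, Region, account ID, resource) and the commercial `aws` partition; the type and function names are invented for this example.

```python
from typing import NamedTuple


class ModelVersionArn(NamedTuple):
    region: str
    account_id: str
    project_name: str
    version_name: str
    timestamp: str


def parse_model_version_arn(arn: str) -> ModelVersionArn:
    """Split a Rekognition Custom Labels model version ARN into its parts."""
    pieces = arn.split(":", 5)
    if len(pieces) != 6 or pieces[0] != "arn" or pieces[2] != "rekognition":
        raise ValueError(f"not a Rekognition ARN: {arn}")
    region, account_id, resource = pieces[3], pieces[4], pieces[5]

    parts = resource.split("/")
    if len(parts) != 5 or parts[0] != "project" or parts[2] != "version":
        raise ValueError(f"not a model version ARN: {arn}")
    return ModelVersionArn(region, account_id, parts[1], parts[3], parts[4])


def resource_pattern(model: ModelVersionArn, level: str) -> str:
    """Build an IAM resource pattern scoped to the account, project, or version."""
    base = f"arn:aws:rekognition:{model.region}:{model.account_id}:project/"
    if level == "account":
        return base + "*"
    if level == "project":
        return base + f"{model.project_name}/version/*"
    if level == "version":
        return base + f"{model.project_name}/version/{model.version_name}/{model.timestamp}"
    raise ValueError(f"unknown level: {level}")
```

Scoping the role to the version level gives the tightest control, at the cost of a policy update on every promotion; the project-level wildcard trades some of that control for fewer updates.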

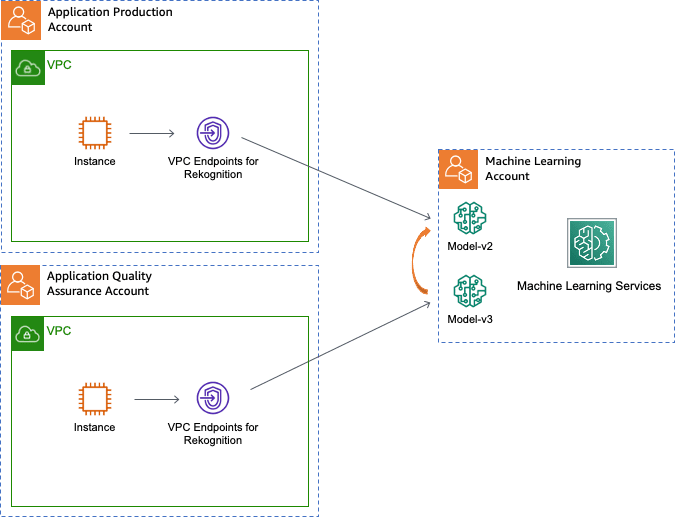

The following diagram illustrates the model rotation from QA to production.

In the preceding architecture, the production and QA applications make API calls to the v2 or v3 model endpoints through their respective VPC endpoints. Each application reads the model ARN from its configuration store (for example, AWS Systems Manager Parameter Store or AWS AppConfig). This process works with any number of environments, but we demonstrate it with only two accounts for simplicity. Optionally, remove superseded model versions so they stop consuming resources.

The ML account has an IAM role for each environment (such as the production account) that requires access. As part of the deployment, the CI/CD pipeline alters the role’s inline policy to allow access to the appropriate model.

Consider the scenario of promoting Model-v2 from the QA account to the production account. This process requires the following steps:

- On the Rekognition Custom Labels console, transition the Model-v2 endpoint into a running state.

- Grant the IAM cross-account role in the ML account access to the new version of Model-v2.

Note that the resource element supports wildcards in the ARN.

- Send a test invocation to Model-v2 from the production application using the delegation role.

- Optionally, remove the cross-account role’s access to Model-v1.

- Optionally, repeat steps 2–3 for each additional AWS account.

- Optionally, stop the Model-v1 endpoint to avoid incurring costs.
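The policy update in the steps above might be scripted by the CI/CD pipeline along these lines. This is a sketch under assumptions: the role name, the inline policy name, and the function name are hypothetical, and `iam_client` is injectable so the logic can run without AWS access.

```python
import json


def grant_model_access(role_name, model_arns, iam_client=None,
                       policy_name="RekognitionModelAccess"):
    """Replace the role's inline policy so it allows exactly the given models."""
    if iam_client is None:
        import boto3  # imported lazily so the function can be tested with stubs
        iam_client = boto3.client("iam")

    document = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "rekognition:DetectCustomLabels",
                "Resource": list(model_arns),
            }
        ],
    }
    # put_role_policy overwrites any existing inline policy with the same name.
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(document),
    )
    return document
```

During a rollout, the pipeline could first pass both the Model-v1 and Model-v2 ARNs, then call the function again with only Model-v2 once the production test succeeds, which removes access to the older version.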

Global policy propagation from the IAM control plane to the IAM data plane in every Region is an eventually consistent operation. This design can create slight delays for multi-Regional configurations.

Create guardrails through service control policies

Using cross-account roles creates a secure mechanism for sharing pre-trained managed AI resources. But what happens when that role’s policy is too permissive? You can mitigate these risks by using service control policies (SCPs) to set permission guardrails across accounts. Guardrails specify the maximum permissions available for an IAM identity. These capabilities can prevent a model consumer account from, for example, stopping the shared Amazon Rekognition endpoint. After defining appropriate guardrail requirements, organizational units within Organizations allow centrally managing those policies across multiple accounts.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyModifyingRekognitionProjects",
      "Effect": "Deny",
      "Action": [
        "rekognition:CreateProject*",
        "rekognition:DeleteProject*",
        "rekognition:StartProject*",
        "rekognition:StopProject*"
      ],
      "Resource": [
        "arn:aws:rekognition:*:*:project/*"
      ]
    }
  ]
}
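A guardrail like this could be registered and attached from the management account with the AWS Organizations API. The sketch below is illustrative only: the function name, the description text, and the target ID in the usage note are assumptions, and `org_client` is injectable so the flow can be exercised with a stub.

```python
import json

# Same intent as the policy above: deny changes to Rekognition projects.
REKOGNITION_GUARDRAIL = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyModifyingRekognitionProjects",
            "Effect": "Deny",
            "Action": [
                "rekognition:CreateProject*",
                "rekognition:DeleteProject*",
                "rekognition:StartProject*",
                "rekognition:StopProject*",
            ],
            "Resource": ["arn:aws:rekognition:*:*:project/*"],
        }
    ],
}


def create_and_attach_scp(target_id, org_client=None,
                          name="DenyModifyingRekognitionProjects"):
    """Create the SCP and attach it to an organizational unit or account."""
    if org_client is None:
        import boto3  # imported lazily so the function can be tested with stubs
        org_client = boto3.client("organizations")

    created = org_client.create_policy(
        Content=json.dumps(REKOGNITION_GUARDRAIL),
        Description="Prevent consumer accounts from modifying Rekognition projects",
        Name=name,
        Type="SERVICE_CONTROL_POLICY",
    )
    policy_id = created["Policy"]["PolicySummary"]["Id"]
    org_client.attach_policy(PolicyId=policy_id, TargetId=target_id)
    return policy_id
```

Attaching the policy to an organizational unit that contains the consumer accounts (for example, a hypothetical target such as `ou-examplerootid111-exampleouid111`) applies the guardrail to every account in that unit, while leaving the ML account free to manage its own projects.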

You can also configure detective controls to monitor their configuration and make sure it doesn’t drift out of compliance. AWS IAM Access Analyzer supports assessing policies across the organization and reporting unused permissions. Additionally, AWS Config enables assessing, auditing, and evaluating configurations of AWS resources. This capability supports standard security and compliance requirements, such as verifying and remediating the S3 bucket’s encryption settings.

Conclusion

You need out-of-the-box solutions to add ML capabilities like computer vision, translation, and fraud detection. You also need security boundaries that isolate your different environments for quality control, compliance, and regulatory purposes. AWS pre-trained AI services and AWS Control Tower deliver that functionality in a manner that is easily accessible and secure.

AWS pre-trained AI services don’t currently support copying the trained custom model across AWS accounts. Until such a mechanism exists, you need to retrain the models in each AWS account using the same dataset. This post demonstrates an alternative design approach using IAM cross-account policies to share model endpoints while maintaining robust security control. Furthermore, you can stop paying for redundant training jobs! For more information on cross-account policies, see IAM tutorial: Delegate access across AWS accounts using IAM roles.

About the Authors

Nate Bachmeier is an AWS Senior Solutions Architect who nomadically explores New York, one cloud integration at a time. He specializes in migrating and modernizing customers’ workloads. Besides this, Nate is a full-time student and has two kids.

Mario Bourgoin is a Senior Partner Solutions Architect for AWS, an AI/ML specialist, and the global tech lead for MLOps. He works with enterprise customers and partners deploying AI solutions in the cloud. He has more than 30 years of experience doing machine learning and AI at startups and in enterprises, starting with creating one of the first commercial machine learning systems for big data. Mario spends the balance of his time playing with his three Belgian Tervurens, cooking dinners for his family, and learning about mathematics and cosmology.

Tim Murphy is a Senior Solutions Architect for AWS, working with enterprise customers in various industries to build business-based solutions in the cloud. He has spent the last decade working with startups, non-profits, commercial enterprises, and government agencies, deploying infrastructure at scale. In his spare time, when he isn’t tinkering with technology, you’ll most likely find him in far-flung areas of the earth hiking mountains, surfing waves, or biking through a new city.
