Transforming qualitative research by automating speech-to-text and text analytics

This post is authored by Satish Jha, Intelligent Automation Manager, Matt Docherty, Data Science Manager, Jayesh Muley, Associate Consultant and Tapan Vora, Rapid Prototyping, from ZS Associates.

At ZS Associates, we do a significant amount of qualitative market research. The work involves interviewing relevant subjects (such as healthcare professionals and sales representatives) and developing bespoke analytics on the interview data. We’ve taken advantage of advances in AI, machine learning (ML), and cloud computing to reimagine qualitative market research, and developed a scalable solution that performs speech-to-text conversion and natural language processing (NLP) on the audio recordings of interviewed subjects. The solution is better, cheaper, and faster than the current way of working (manual interpretation), giving us a competitive advantage in this space.

This post discusses how ZS used Amazon Transcribe, Amazon Comprehend Medical, and custom NLP for text summarization and graph visualization to create a scalable, automated solution that helps us provide insights in a faster, better, and more efficient way.

Background assessment

The traditional method of performing qualitative market research requires human intervention and interpretation, which is highly subjective in nature. We used advanced AI and ML to develop a platform that is capable of the following:

- Performing speech-to-text conversion; specifically, converting with high precision the audio recordings of interviews conducted for qualitative market research

- Drawing analytical insights from the converted text using a state-of-the-art NLP model

To achieve this, we combined state-of-the-art AWS AI services and cloud computing capabilities with our proprietary NLP and text summarization algorithms to drive impact at scale.

Solution overview

To build our solution, we adopted the methodology of starting small, highlighting value, and scaling fast. We identified a key user group and defined phase one of the solution to do automated speech-to-text and analytics. We defined a key user interface and developed the technology architecture for the solution. Because ZS is an AWS Partner and has already been using multiple AWS Cloud services for our enterprise products and solutions, AWS was the preferred choice for this project. We used Amazon Transcribe and Amazon Comprehend Medical for transcription and theme identification purposes. For hosting custom NLP analytics APIs, we used a serverless infrastructure using Amazon API Gateway, AWS Lambda, and Amazon Elastic Container Service (Amazon ECS) with AWS Fargate. These services are HIPAA-eligible and compliant with pharma regulatory requirements.

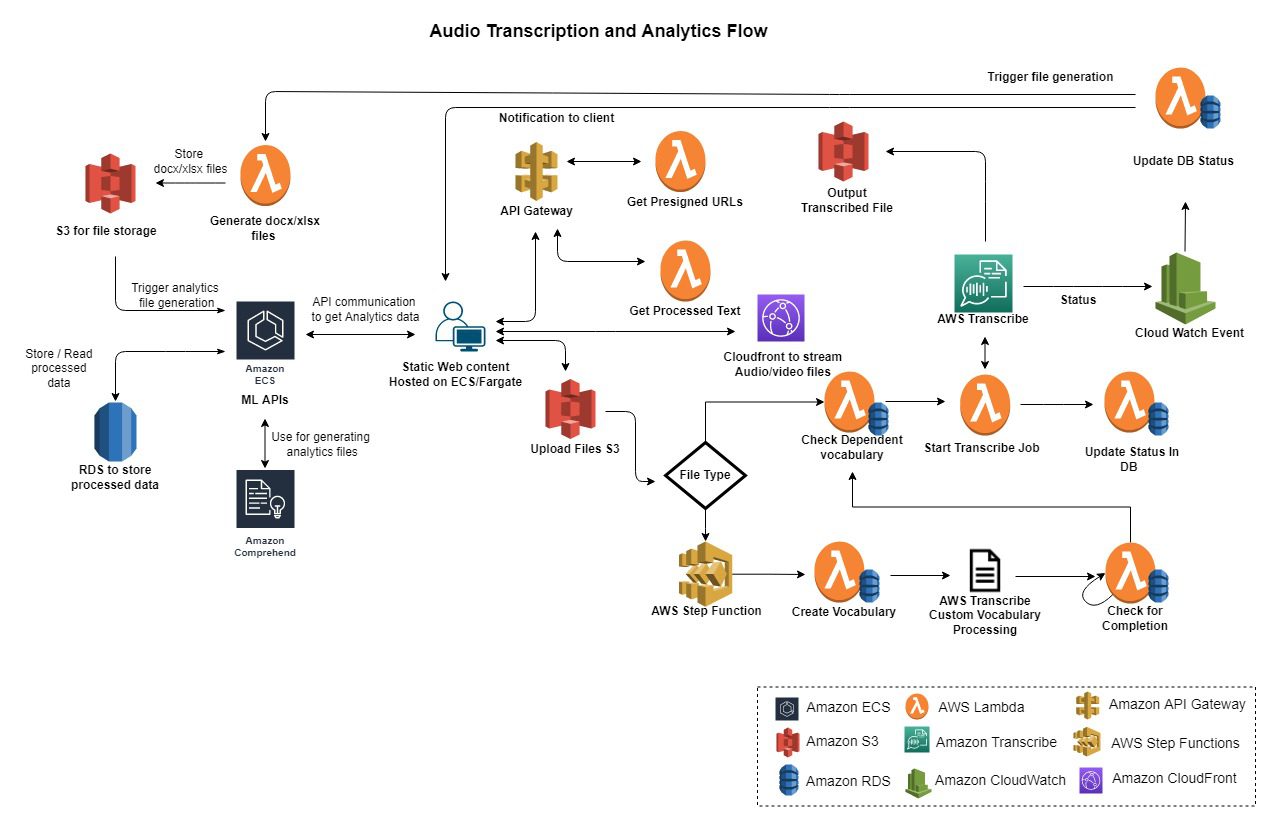

The process includes the following stages:

- File upload to Amazon S3 – The process starts when the user uploads one or more audio recording files for transcription to the site on which our tool is hosted. To upload the files to Amazon Simple Storage Service (Amazon S3), the user receives a temporary write token or pre-signed URL through API Gateway, which grants the required Amazon S3 access.

- Audio transcription – Depending on the type of file uploaded, different triggers are in place to initiate the appropriate workflow:

  - Audio files uploaded without a dictionary file – If the user didn’t provide a dictionary file, the tool processes the audio file using Amazon Transcribe.

  - Audio files uploaded with a dictionary file – If the user provided a dictionary file, certain AWS Step Functions steps are triggered, followed by processing the dictionary file using Amazon Transcribe. When the dictionary processing is complete, the tool transcribes the audio file using Amazon Transcribe.

- Transcript file generation – In either of the preceding two cases, while the transcription is in progress, the tool uses Amazon CloudWatch Events to track the transcription status. Lambda functions trigger the tool to update the status in the RDBMS and convey it to the user through the tool’s UI using sockets. When the transcription is complete, the final output file is stored in Amazon S3.

- File type conversion – After the output file is generated, the tool uses triggers to create a .doc or .xlsx file, stored again in Amazon S3.

- Generating analytical insights – With Amazon Comprehend Medical and certain ZS in-house NLP tools, the tool generates analytics based on the transcribed data and updates dashboards on our site so that users can access them in real time.

- Audio streaming with Amazon Transcribe – We use Amazon CloudFront audio streaming paired with our final output file, which is generated from Amazon Transcribe. The user can simultaneously listen to the recording and read the transcript.
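The two audio transcription branches in the stages above could be sketched roughly as follows in Python (one of the Lambda languages mentioned later in this post). This is a minimal illustration under stated assumptions, not the ZS implementation: the function names, job name, bucket, and vocabulary name are hypothetical, and the Amazon Transcribe client is passed in (for example, `boto3.client("transcribe")`) so that the request-building logic can be read on its own. Note that Amazon Transcribe calls the dictionary a custom vocabulary, and a vocabulary must reach the `READY` state before a job can use it.

```python
from typing import Any, Dict, Optional


def build_transcription_request(
    job_name: str,
    media_uri: str,
    output_bucket: str,
    vocabulary_name: Optional[str] = None,
    language_code: str = "en-US",
) -> Dict[str, Any]:
    """Build keyword arguments for Amazon Transcribe's StartTranscriptionJob."""
    request: Dict[str, Any] = {
        "TranscriptionJobName": job_name,
        "LanguageCode": language_code,
        "Media": {"MediaFileUri": media_uri},
        "OutputBucketName": output_bucket,
    }
    # Only the "with dictionary" branch attaches a custom vocabulary.
    if vocabulary_name:
        request["Settings"] = {"VocabularyName": vocabulary_name}
    return request


def vocabulary_is_ready(transcribe_client, vocabulary_name: str) -> bool:
    """Return True once the custom vocabulary has finished processing."""
    response = transcribe_client.get_vocabulary(VocabularyName=vocabulary_name)
    return response["VocabularyState"] == "READY"


def start_transcription(
    transcribe_client,
    job_name: str,
    media_uri: str,
    output_bucket: str,
    vocabulary_name: Optional[str] = None,
):
    """Start a job, refusing to run if the requested vocabulary is not ready."""
    if vocabulary_name and not vocabulary_is_ready(transcribe_client, vocabulary_name):
        raise RuntimeError(f"Vocabulary {vocabulary_name!r} is not ready yet")
    request = build_transcription_request(
        job_name, media_uri, output_bucket, vocabulary_name
    )
    return transcribe_client.start_transcription_job(**request)
```

In a workflow like the one described, the readiness check would typically be a polling step (for example, a wait-and-retry loop in AWS Step Functions) rather than a hard failure; the exception here just keeps the sketch short.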

The diagram above shows the high-level architecture and workflow.

The platform is designed to process a large number of files in real time. Therefore, the solution greatly augments the work of our current ZS qualitative research team by making the process more efficient and giving it an entirely new dimension!
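The status-update step in the transcript file generation stage could look something like the following Lambda handler sketch. It assumes the event has the shape of an Amazon Transcribe job state change event (source `aws.transcribe`, with `TranscriptionJobName` and `TranscriptionJobStatus` under `detail`); verify this against the events your own account receives. The `save_status` callback is a hypothetical stand-in for the RDBMS update, and the socket notification to the UI is omitted.

```python
from typing import Callable, Dict


def parse_job_event(event: dict) -> Dict[str, str]:
    """Extract the job name and status from a job state change event."""
    detail = event["detail"]
    return {
        "job_name": detail["TranscriptionJobName"],
        "status": detail["TranscriptionJobStatus"],
    }


def make_handler(save_status: Callable[[str, str], None]):
    """Create a Lambda-style handler that records job status changes.

    save_status is a hypothetical callback, for example a function that
    runs an UPDATE against the application's status table.
    """

    def handler(event, context=None):
        # Ignore events that did not come from Amazon Transcribe.
        if event.get("source") != "aws.transcribe":
            return {"updated": False}
        record = parse_job_event(event)
        save_status(record["job_name"], record["status"])
        return {"updated": True, **record}

    return handler
```

Passing the persistence step in as a callback keeps the handler testable without a database connection, which is one reasonable way to structure small Lambda functions.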

Overall, our solution has the following features:

- The ability to upload single or multiple audio files

- Automated speech-to-text conversion, with the ability to add a custom dictionary

- The ability to listen to the uploaded audio and refine text

- Text summarization and analytics
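For the upload feature, the pre-signed URL approach described in the file upload stage might be sketched as follows. This is an illustration only: the bucket name, key layout (`uploads/<user>/<file>`), and expiry time are assumptions, and the Amazon S3 client is passed in (for example, `boto3.client("s3")`). In the described architecture, a function like this would sit behind API Gateway and return the URLs to the browser.

```python
import posixpath
from typing import Dict, Iterable


def object_key(user_id: str, filename: str, prefix: str = "uploads") -> str:
    """Build an S3 object key, dropping any directory parts of the filename."""
    safe_name = posixpath.basename(filename.replace("\\", "/"))
    if safe_name in ("", ".", ".."):
        raise ValueError("filename must name a file")
    return f"{prefix}/{user_id}/{safe_name}"


def presigned_upload_urls(
    s3_client,
    bucket: str,
    user_id: str,
    filenames: Iterable[str],
    expires_in: int = 900,  # seconds; an assumed value
) -> Dict[str, str]:
    """Return one time-limited PUT URL per file the user wants to upload."""
    urls: Dict[str, str] = {}
    for filename in filenames:
        urls[filename] = s3_client.generate_presigned_url(
            ClientMethod="put_object",
            Params={"Bucket": bucket, "Key": object_key(user_id, filename)},
            ExpiresIn=expires_in,
        )
    return urls
```

The client then uploads each file with an HTTP PUT to its URL, so the audio never passes through the API layer itself.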

Process map

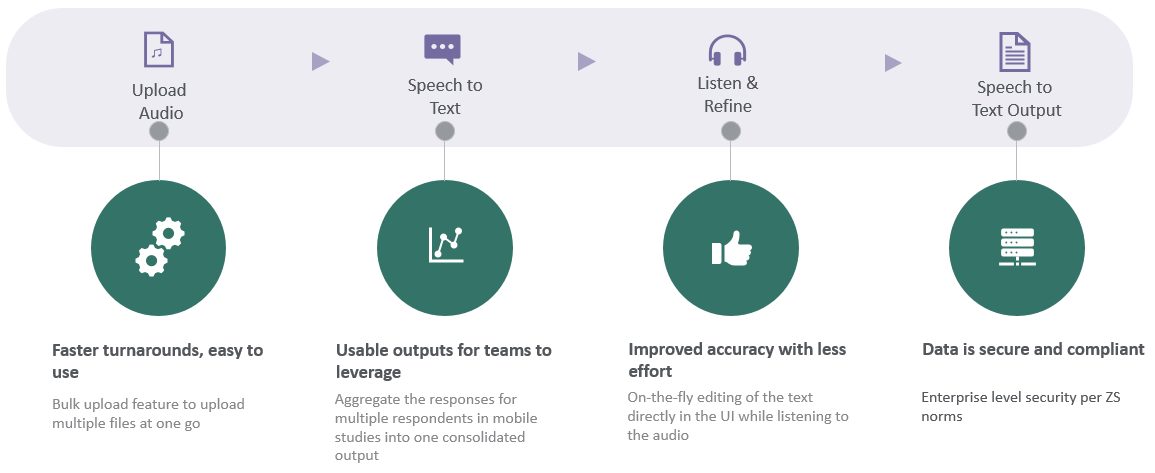

The following diagram gives a high-level visualization of our developed solution, with the following stages:

- Upload audio – The process starts with the user uploading their audio recording (with or without a dictionary file) to the tool

- Speech to text – These uploaded audio files are transcribed by converting speech to text

- Listen and refine – The user can simultaneously listen to the recording and read the transcript and make changes wherever necessary

- Speech-to-text output – The consolidated file includes the converted transcript and its corresponding analytics
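To support the listen-and-refine stage, a tool could point reviewers at the words the transcription engine was least sure about. The sketch below assumes the standard Amazon Transcribe output JSON, in which `results.items` holds one entry per word or punctuation mark, with `confidence` and timestamps stored as strings. The 0.8 threshold and the function name are assumptions for illustration, not details from the ZS solution.

```python
from typing import Dict, List


def low_confidence_words(transcript: dict, threshold: float = 0.8) -> List[Dict[str, object]]:
    """List spoken words whose top alternative scores below the threshold."""
    flagged: List[Dict[str, object]] = []
    for item in transcript["results"]["items"]:
        # Punctuation items carry no timestamps and are skipped.
        if item.get("type") != "pronunciation":
            continue
        best = item["alternatives"][0]
        confidence = float(best["confidence"])
        if confidence < threshold:
            flagged.append(
                {
                    "word": best["content"],
                    "start_time": float(item["start_time"]),
                    "confidence": confidence,
                }
            )
    return flagged
```

Because each flagged word keeps its start time, a UI could jump the audio player straight to that position so the reviewer hears the word in context.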

It took us approximately 5–6 months to develop this solution end to end with a four-member team. Today it is being used by over 300 people, and the tool has processed thousands of hours of audio.

AWS services used

The solution uses multiple AWS services:

- AWS Lambda and API Gateway – Hosted the serverless APIs and functions.

  - We developed multiple API Gateways to ensure loose coupling and easy integration with external APIs. Custom authorizers were implemented to enable token-based authentication and restrict unauthorized access to the web content.

  - We also built Lambda APIs (using Python and Node.js) that interact easily with a website hosted on Amazon ECS containers and link easily with Amazon Relational Database Service (Amazon RDS) for PostgreSQL. Using Lambda functions helped us avoid the effort of load balancing, restoring, and stopping clusters, and reduced overall costs, because compute resources ran only while the functions were running. Additionally, we were able to easily scale our solution because of the serverless architecture.

- Amazon Transcribe – Let us easily configure batch processing of up to 100 audio files at a time and scale to larger loads using its built-in queuing mechanism. It also allowed us to load a custom dictionary to transcribe the audio data more accurately.

- Amazon Comprehend Medical – Generated analytical insights from the text data using its built-in NLP capabilities to sort through text for valuable information.

- AWS CloudFormation – We used AWS CloudFormation to deploy the Lambda functions and APIs across environments (various S3 buckets and multiple environments in the same bucket, such as production and development) using stage variables.

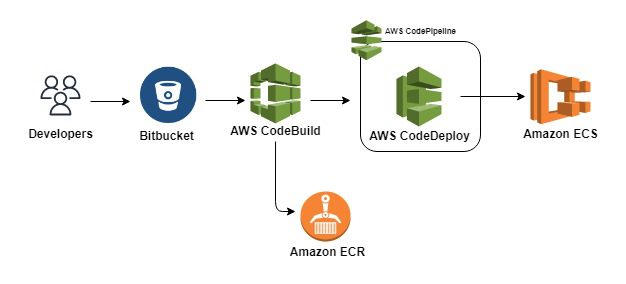

- AWS CodeBuild, AWS CodeDeploy, and AWS CodePipeline – We used AWS CodeBuild, AWS CodeDeploy, and AWS CodePipeline to perform continuous deployment of the front end and analytics backend to ECS clusters.

The following diagram illustrates the architecture of these services.
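As a rough idea of how Amazon Comprehend Medical can feed theme identification, the following sketch counts the medical entities detected in a transcript. It uses the `detect_entities_v2` operation on a client that is passed in (for example, `boto3.client("comprehendmedical")`). The chunk size, score threshold, and function names are assumptions; the service limits the text size per request, so check the current limit before choosing a chunk size. The ZS in-house NLP and summarization algorithms are proprietary and are not represented here.

```python
from collections import Counter
from typing import Iterator, List


def chunk_text(text: str, max_chars: int = 10000) -> Iterator[str]:
    """Split text on whitespace into chunks of at most max_chars characters.

    A single word longer than max_chars is emitted as its own oversized chunk.
    """
    current: List[str] = []
    size = 0
    for word in text.split():
        # The +1 accounts for the space that joins this word to the chunk.
        if current and size + 1 + len(word) > max_chars:
            yield " ".join(current)
            current, size = [], 0
        size += len(word) + (1 if current else 0)
        current.append(word)
    if current:
        yield " ".join(current)


def count_medical_entities(
    cm_client, text: str, min_score: float = 0.8, max_chars: int = 10000
) -> Counter:
    """Count (category, entity text) pairs found with sufficient confidence."""
    counts: Counter = Counter()
    for chunk in chunk_text(text, max_chars):
        response = cm_client.detect_entities_v2(Text=chunk)
        for entity in response["Entities"]:
            if entity["Score"] >= min_score:
                counts[(entity["Category"], entity["Text"].lower())] += 1
    return counts
```

Calling `count_medical_entities(client, transcript_text).most_common(10)` would then give a simple ranked list of the most frequently mentioned medications, conditions, and so on, which a dashboard could display per interview.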

Conclusion

We used AWS services to develop a platform that helped our teams apply cutting-edge AI to their projects. It has helped our teams do the following:

- Automate the process of speech-to-text conversion and focus manual review only on the low-accuracy portions of the transcript.

- Drive automation of insights with NLP algorithms.

- Drive self-service. Because we do not need to launch any particular server, we can easily create Lambda functions, make changes to the code on the fly, and provide key ML services as plug and play so that users don’t need to be data scientists.

Today the solution is used by over 300 people, and we have processed thousands of hours of audio. We’re now integrating our solution with other applications to provide users with the flexibility to either upload audio files for transcription or directly upload transcribed files for drawing analytical insights.

We also derived multiple benefits from building our platform with AWS:

- Using an end-to-end cloud-based architecture proved beneficial in terms of managing environments for business applications

- With management tools such as CloudWatch, AWS CloudFormation, CodeBuild, CodeDeploy, and CodePipeline, it was easier to monitor, track, and deploy development changes

- We used AWS’s built-in security with virtual private clouds and identity management with customized policies

- We were able to reduce load on valuable microservices, with the additional benefit of quick hosting and deployment

About ZS

ZS Associates is a consulting and professional services firm focusing on consulting, software, and technology, headquartered in Evanston, Illinois, that provides services for clients in pharma, healthcare, and technology. The firm employs more than 10,000 employees in 30 offices in North America, South America, Europe, and Asia. ZS works with 49 of the 50 largest drug-makers and 17 of the 20 largest medical device makers and serves consumer products, financial services, industrial products, telecommunications, transportation, and logistics industries.

Disclaimer: AWS is not responsible for the content or accuracy of this post. The content and opinions in this post are solely those of the third-party author. It is each customer’s responsibility to determine whether they are subject to HIPAA, and if so, how best to comply with HIPAA and its implementing regulations. Before using AWS in connection with protected health information, customers must enter an AWS Business Associate Addendum (BAA) and follow its configuration requirements.

About the Authors

Satish Jha is a Manager with ZS Associates. He is a leader in the firm’s Intelligent Automation Practice, where he works side by side with several pharma clients to transform operations and drive impact.


Matt Docherty is a Data Science Manager with ZS Associates in the Philadelphia office. He is focused on applying data science in the pharmaceutical industry.


Jayesh Muley is an Associate Consultant for Process Excellence & Transformation with ZS Associates. He has 4 years of experience advising pharma clients in the forecasting, process excellence, and digital transformation spaces. He played a critical role in establishing ZS’s automation center of excellence. He is always keen on learning new technologies and is always evolving in his role.


Tapan Vora is a Manager for Rapid Prototyping with ZS Associates. Tapan has over 14 years of technology and engineering management experience. He plays multiple roles in the team, such as business analyst, people manager, solution designer, data analyst, and product leader.

