Make batch predictions with Amazon SageMaker Autopilot

Amazon SageMaker Autopilot is an automated machine learning (AutoML) solution that performs all the tasks you need to complete an end-to-end machine learning (ML) workflow. It explores and prepares your data, applies different algorithms to generate a model, and transparently provides model insights and explainability reports to help you interpret the results. Autopilot can also create a real-time endpoint for online inference. You can access Autopilot’s one-click features in Amazon SageMaker Studio or by using the AWS SDK for Python (Boto3) or the SageMaker Python SDK.

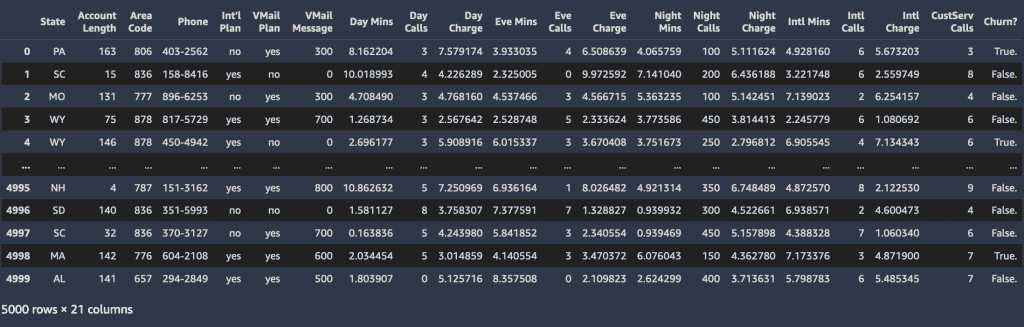

In this post, we show how to make batch predictions on an unlabeled dataset using an Autopilot-trained model. We use a synthetically generated dataset that is indicative of the types of features you typically see when predicting customer churn.

Solution overview

Batch inference, or offline inference, is the process of generating predictions on a batch of observations. Batch inference assumes you don’t need an immediate response to a model prediction request, as you would when using an online, real-time model endpoint. Offline predictions are suitable for larger datasets and in cases where you can afford to wait several minutes or hours for a response. In contrast, online inference generates ML predictions in real time, and is aptly referred to as real-time inference or dynamic inference. Typically, these predictions are generated on a single observation of data at runtime.

Losing customers is costly for any business. Identifying unhappy customers early gives you a chance to offer them incentives to stay. Mobile operators have historical records of which customers churned and which kept their service. We can use this historical data to train an ML model that predicts whether a customer will churn.

After we train an ML model, we can pass the profile information of an arbitrary customer (the same profile information that we used for training) to the model, and have the model predict whether or not the customer will churn. The dataset used for this post is hosted under the sagemaker-sample-files folder in an Amazon Simple Storage Service (Amazon S3) public bucket, which you can download. It consists of 5,000 records, where each record uses 21 attributes to describe the profile of a customer for an unknown US mobile operator. The attributes are as follows:

- State – US state in which the customer resides, indicated by a two-letter abbreviation; for example, TX or CA

- Account Length – Number of days that this account has been active

- Area Code – Three-digit area code of the corresponding customer’s phone number

- Phone – Remaining seven-digit phone number

- Int’l Plan – Has an international calling plan: Yes/No

- VMail Plan – Has a voice mail feature: Yes/No

- VMail Message – Average number of voice mail messages per month

- Day Mins – Total number of calling minutes used during the day

- Day Calls – Total number of calls placed during the day

- Day Charge – Billed cost of daytime calls

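- Eve Mins, Eve Calls, Eve Charge – Total calling minutes, number of calls, and billed cost for calls placed during the evening

- Night Mins, Night Calls, Night Charge – Total calling minutes, number of calls, and billed cost for calls placed during nighttime

- Intl Mins, Intl Calls, Intl Charge – Total calling minutes, number of calls, and billed cost for international calls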

- CustServ Calls – Number of calls placed to Customer Service

- Churn? – Customer left the service: True/False

The last attribute, Churn?, is the target attribute that we want the ML model to predict. Because the target attribute is binary, our model performs binary prediction, also known as binary classification.
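As a minimal sketch of how the 21 attributes divide into 20 input features and one target, the following Python snippet encodes the column list above. The exact header strings in the downloaded file are an assumption, and `split_record` is a hypothetical helper for illustration, not part of any AWS SDK.

```python
# Column names as listed in this post; the exact header strings in the
# downloaded file are an assumption and may differ.
CHURN_COLUMNS = [
    "State", "Account Length", "Area Code", "Phone",
    "Int'l Plan", "VMail Plan", "VMail Message",
    "Day Mins", "Day Calls", "Day Charge",
    "Eve Mins", "Eve Calls", "Eve Charge",
    "Night Mins", "Night Calls", "Night Charge",
    "Intl Mins", "Intl Calls", "Intl Charge",
    "CustServ Calls", "Churn?",
]
TARGET = "Churn?"
# Every column except the target is an input feature.
FEATURES = [c for c in CHURN_COLUMNS if c != TARGET]


def split_record(record):
    """Hypothetical helper: split one record (a dict) into (features, label).

    Raises KeyError if any expected column is missing.
    """
    missing = [c for c in CHURN_COLUMNS if c not in record]
    if missing:
        raise KeyError(f"missing columns: {missing}")
    features = {c: record[c] for c in FEATURES}
    return features, record[TARGET]
```

At prediction time the model only ever sees the 20 feature columns; the `Churn?` value is what it has to produce.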

Prerequisites

Download the dataset to your local development environment and explore it by running the following S3 copy command with the AWS Command Line Interface (AWS CLI):

You can then copy the dataset to an S3 bucket within your own AWS account. This is the input location for Autopilot. You can copy the dataset to Amazon S3 by either manually uploading to your bucket or by running the following command using the AWS CLI:
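If you prefer Python to the AWS CLI for the upload, the following is a minimal sketch using Boto3's `upload_file`. The helper names `parse_s3_uri` and `upload_dataset` are hypothetical, and the destination bucket and key are yours to choose; Boto3 is imported inside the function so the URI parser can be used without it installed.

```python
def parse_s3_uri(uri):
    """Hypothetical helper: split an s3://bucket/key URI into (bucket, key)."""
    prefix = "s3://"
    if not uri.startswith(prefix):
        raise ValueError(f"not an S3 URI: {uri!r}")
    bucket, _, key = uri[len(prefix):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URI needs both a bucket and a key: {uri!r}")
    return bucket, key


def upload_dataset(local_path, dest_uri):
    """Hypothetical helper: upload a local file to dest_uri in your own account."""
    # Imported lazily so parse_s3_uri works even where boto3 is not installed.
    import boto3

    bucket, key = parse_s3_uri(dest_uri)
    boto3.client("s3").upload_file(local_path, bucket, key)
    return dest_uri
```

The S3 URI you upload to here is the input location you give Autopilot when you create the experiment.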

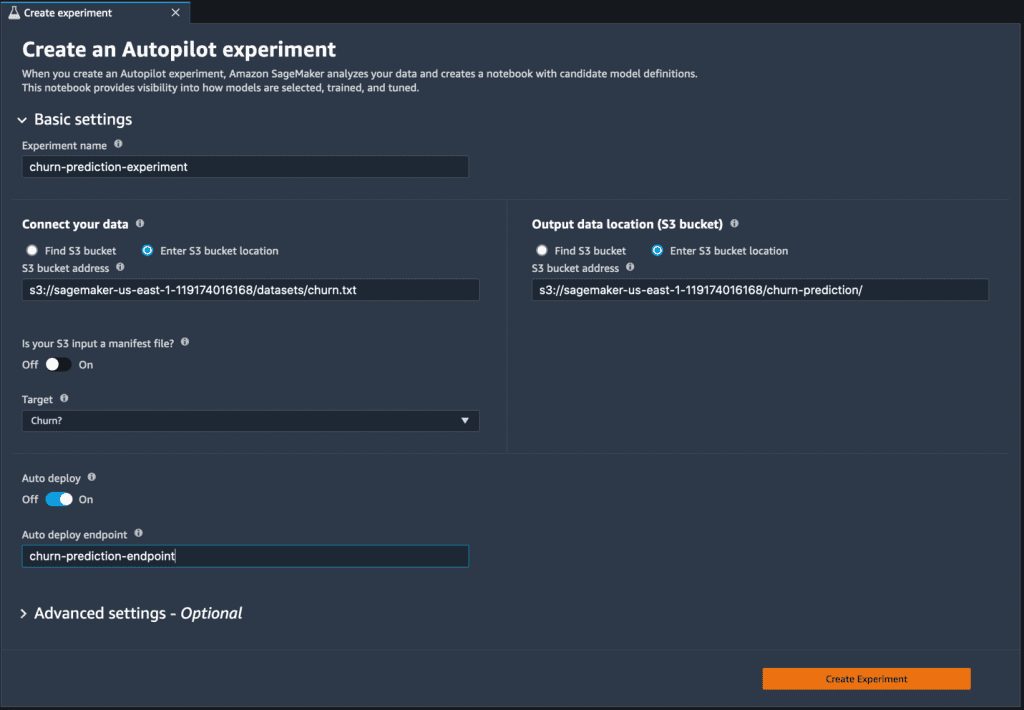

Create an Autopilot experiment

When the dataset is ready, you can initialize an Autopilot experiment in SageMaker Studio. For full instructions, refer to Create an Amazon SageMaker Autopilot experiment.

Under Basic settings, you can create an Autopilot experiment by providing an experiment name, the data input and output locations, and the target column to predict. Optionally, you can specify the type of ML problem that you want to solve. Otherwise, use the Auto setting, and Autopilot infers the problem type from the data you provide.

You can also run an Autopilot experiment in code, using either the AWS SDK for Python (Boto3) or the SageMaker Python SDK. The following code snippet demonstrates how to initialize an Autopilot experiment with the AutoML class from the SageMaker Python SDK.

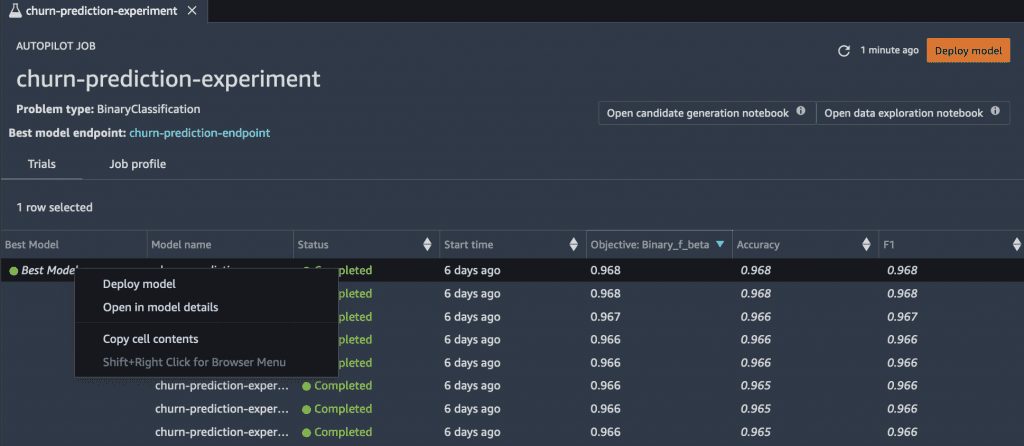
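As a minimal sketch of such an initialization, the following separates the `AutoML` constructor arguments from the call that starts the job. The helper names `automl_settings` and `launch_experiment` are hypothetical; `role`, `target_attribute_name`, `output_path`, and `max_candidates` are `AutoML` constructor parameters, and `fit(inputs=..., job_name=..., wait=..., logs=...)` starts the job. We leave `problem_type` unset, which corresponds to the Auto setting.

```python
def automl_settings(role_arn, target, output_path, max_candidates=None):
    """Hypothetical helper: collect keyword arguments for sagemaker.AutoML."""
    if not output_path.startswith("s3://"):
        raise ValueError("output_path must be an S3 URI")
    settings = {
        "role": role_arn,
        "target_attribute_name": target,
        "output_path": output_path,
    }
    # problem_type is deliberately not set, so Autopilot infers it (Auto setting).
    if max_candidates is not None:
        if max_candidates < 1:
            raise ValueError("max_candidates must be positive")
        settings["max_candidates"] = max_candidates
    return settings


def launch_experiment(input_s3_uri, job_name, settings):
    """Hypothetical helper: start an Autopilot job without blocking on it."""
    # Imported lazily; requires the SageMaker Python SDK and AWS credentials.
    from sagemaker import AutoML

    automl = AutoML(**settings)
    # logs=False because logs can only be streamed when wait=True.
    automl.fit(inputs=input_s3_uri, job_name=job_name, wait=False, logs=False)
    return automl
```

Starting the job with `wait=False` returns immediately, which suits a notebook where you check on the experiment later by name.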

After Autopilot begins an experiment, the service automatically inspects the raw input data, applies feature processors, and picks the best set of algorithms. After it chooses an algorithm, Autopilot optimizes its performance using a hyperparameter optimization search. This is often referred to as training and tuning the model, and it helps produce a model that can make accurate predictions on data it has never seen. Autopilot automatically tracks model performance and then ranks the final models by an objective metric, such as F1 score or accuracy.
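Conceptually, the ranking step amounts to sorting candidates by their objective metric. The following sketch assumes candidate dictionaries shaped like the entries Autopilot returns when you list candidates (a `CandidateName` plus a `FinalAutoMLJobObjectiveMetric` containing a `Value`); `rank_candidates` is a hypothetical helper, not Autopilot's own implementation.

```python
def rank_candidates(candidates, maximize=True):
    """Hypothetical helper: sort candidates best-first by objective metric value.

    Use maximize=False for metrics where lower is better, such as MSE.
    """
    def score(candidate):
        return candidate["FinalAutoMLJobObjectiveMetric"]["Value"]

    return sorted(candidates, key=score, reverse=maximize)
```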

You also have the option to deploy any of the ranked models, either by right-clicking the model and choosing Deploy model, or by selecting the model in the ranked list and choosing Deploy model.

Make batch predictions using a model from Autopilot

When your Autopilot experiment is complete, you can use the trained model to run batch predictions on your test or holdout dataset for evaluation. If that dataset is already labeled, you can compare the predicted labels against the true labels; this is essentially a way to compare a model's predictions to the truth. The more predictions that match the true labels, the better the model is generally performing. You can also run batch predictions to label previously unlabeled data. The high-level SageMaker Python SDK lets you do either with a few lines of code.
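To make the comparison against true labels concrete, here is a minimal plain-Python sketch that computes accuracy, precision, and recall for a binary target. The helper name `binary_metrics` is hypothetical, and it assumes labels are compared as strings with `"True"` as the positive (churn) class.

```python
def binary_metrics(y_true, y_pred, positive="True"):
    """Hypothetical helper: accuracy, precision, and recall of predicted labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("need at least one prediction")
    pairs = list(zip(y_true, y_pred))
    # Count true positives, false positives, and false negatives.
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

For churn, recall (how many churners the model catches) is often as important as overall accuracy, because churners are usually a minority of customers.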

Describe a previously run Autopilot experiment

We first need to extract the information from a previously completed Autopilot experiment. We can use the AutoML class from the SageMaker Python SDK to create an automl object that encapsulates the information of a previous Autopilot experiment. You can use the experiment name you defined when initializing the Autopilot experiment. See the following code:
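A minimal sketch of this step uses `AutoML.attach`, which recreates the object from a job name, and `describe_auto_ml_job`, which returns the job description as a dictionary. The helpers `summarize_job` and `attach_experiment` are hypothetical; `summarize_job` reads the `AutoMLJobStatus` and `BestCandidate` fields of that description.

```python
def summarize_job(description):
    """Hypothetical helper: pull a few fields from a DescribeAutoMLJob-style dict."""
    best = description.get("BestCandidate", {})
    metric = best.get("FinalAutoMLJobObjectiveMetric", {})
    return {
        "status": description.get("AutoMLJobStatus"),
        "best_candidate": best.get("CandidateName"),
        "metric": metric.get("MetricName"),
        "value": metric.get("Value"),
    }


def attach_experiment(job_name):
    """Hypothetical helper: recreate an AutoML object for a finished job."""
    # Imported lazily; requires the SageMaker Python SDK and AWS credentials.
    from sagemaker import AutoML

    automl = AutoML.attach(auto_ml_job_name=job_name)
    return automl, summarize_job(automl.describe_auto_ml_job())
```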

With the automl object, we can easily describe and recreate the best trained model, as shown in the following snippets:
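As a sketch of recreating a model, the `create_model` method of the `automl` object accepts `name`, an optional `candidate` (the best candidate is used when it is omitted), and `inference_response_keys`. The helpers below are hypothetical. `safe_model_name` assumes SageMaker model names are limited to letters, digits, and hyphens with a maximum of 63 characters, which matters because candidate names can be long.

```python
import re


def safe_model_name(prefix, candidate_name, max_len=63):
    """Hypothetical helper: build a model name from a prefix and candidate name.

    Assumption: only letters, digits, and hyphens are allowed, up to 63 chars.
    """
    raw = f"{prefix}-{candidate_name}"
    cleaned = re.sub(r"[^A-Za-z0-9-]", "-", raw)[:max_len]
    # Names must start and end with a letter or digit.
    return cleaned.strip("-")


def recreate_model(automl, name, candidate=None, response_keys=None):
    """Hypothetical helper: recreate a model from an attached AutoML object.

    candidate=None lets create_model fall back to the best candidate.
    """
    return automl.create_model(
        name=name,
        candidate=candidate,
        inference_response_keys=response_keys,
    )
```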

In some cases, you might want to use a model other than the best model as ranked by Autopilot. To find such a candidate model, you can use the automl object to iterate through the list of all or the top N model candidates and choose the model you want to recreate. For this post, we use a simple Python for loop to iterate through a list of model candidates:
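A minimal sketch of that loop follows. `list_candidates` on the `automl` object accepts `sort_by`, `sort_order`, and `max_results`, and returns candidate dictionaries. The helpers `top_candidates` and `pick_candidate` are hypothetical, and sorting in descending order assumes an objective metric where higher is better.

```python
def top_candidates(automl, n=5):
    """Hypothetical helper: the top-n candidates of an attached AutoML object.

    Descending order assumes higher objective values are better (e.g. F1).
    """
    return automl.list_candidates(
        sort_by="FinalObjectiveMetricValue",
        sort_order="Descending",
        max_results=n,
    )


def pick_candidate(candidates, wanted_name):
    """Hypothetical helper: return the candidate with a matching CandidateName."""
    for candidate in candidates:
        if candidate["CandidateName"] == wanted_name:
            return candidate
    raise LookupError(f"no candidate named {wanted_name!r}")
```

The dictionary returned by `pick_candidate` can then be passed as the `candidate` argument when recreating a model.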

Customize the inference response

When recreating the best model or any other Autopilot-trained model, we can customize its inference response by passing the extra parameter inference_response_keys. You can use this parameter for both binary and multiclass classification problem types, with the following keys:

- predicted_label – The predicted class.

- probability – In binary classification, the probability that the result is predicted as the second or True class in the target column. In multiclass classification, the probability of the winning class.

- labels – A list of all possible classes.

- probabilities – A list of all probabilities for all classes (order corresponds with labels).
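Because a misspelled key is easy to miss until a job runs, a small check against the four keys listed above can help. This is a hypothetical helper, not part of the SageMaker Python SDK.

```python
# The four response keys described above for classification models.
ALLOWED_RESPONSE_KEYS = ("predicted_label", "probability", "labels", "probabilities")


def check_response_keys(keys):
    """Hypothetical helper: validate an inference_response_keys list."""
    unknown = [k for k in keys if k not in ALLOWED_RESPONSE_KEYS]
    if unknown:
        raise ValueError(f"unsupported inference_response_keys: {unknown}")
    if len(set(keys)) != len(keys):
        raise ValueError("inference_response_keys must not contain duplicates")
    return list(keys)
```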

Because the problem we’re tackling in this post is binary classification, we set this parameter as follows in the preceding snippets while creating the models:

Create transformer and run batch predictions

Finally, after we recreate the candidate models, we can create a transformer to start the batch predictions job, as shown in the following two code snippets. While creating the transformer, we define the specifications of the cluster to run the batch job, such as instance count and type. The batch input and output are the Amazon S3 locations where our data inputs and outputs are stored. The batch prediction job is powered by SageMaker batch transform.
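A minimal sketch of this step calls `transformer(...)` on a recreated model and then `transform(...)` on the result, using CSV input split by line. The helper names, the default `ml.m5.xlarge` instance type, and the assumption that the input CSV holds only feature columns (no header, no target) are illustrative choices to check against your own setup. Batch transform writes each output object under the output path, named after its input file with `.out` appended.

```python
import posixpath


def expected_output_uri(input_uri, output_prefix):
    """Hypothetical helper: where batch transform writes one input file's results."""
    filename = posixpath.basename(input_uri)
    return f"{output_prefix.rstrip('/')}/{filename}.out"


def run_batch_transform(model, input_uri, output_prefix,
                        instance_type="ml.m5.xlarge", instance_count=1):
    """Hypothetical helper: run a batch job for one CSV object in S3.

    Assumes input_uri points at a single header-less CSV of feature columns.
    """
    transformer = model.transformer(
        instance_count=instance_count,
        instance_type=instance_type,
        assemble_with="Line",        # one output line per input line
        output_path=output_prefix,
    )
    transformer.transform(
        data=input_uri,
        data_type="S3Prefix",
        content_type="text/csv",
        split_type="Line",           # send records to the model line by line
        wait=True,
        logs=False,
    )
    return expected_output_uri(input_uri, output_prefix)
```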

When the job is complete, we can read the batch output and perform evaluations and other downstream actions.
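As a sketch of reading that output, the following parses CSV result lines into dictionaries keyed by the inference response keys. It assumes one output line per input record, with values in the same order as the keys `predicted_label` and `probability`; verify that assumption against your own output. `parse_batch_output` is a hypothetical helper.

```python
import csv
import io


def parse_batch_output(text, keys=("predicted_label", "probability")):
    """Hypothetical helper: parse CSV batch output into one dict per record."""
    rows = []
    for values in csv.reader(io.StringIO(text)):
        if not values:
            continue  # skip blank lines
        if len(values) != len(keys):
            raise ValueError(f"expected {len(keys)} fields, got {values}")
        row = dict(zip(keys, values))
        if "probability" in row:
            row["probability"] = float(row["probability"])
        rows.append(row)
    return rows
```

The parsed `predicted_label` values can then be lined up with the true labels of a holdout set for evaluation.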

Summary

In this post, we demonstrated how to quickly make batch predictions using Autopilot-trained models for your post-training evaluations. We used SageMaker Studio to initialize an Autopilot experiment to create a model for predicting customer churn. Then we referenced Autopilot's best model to run batch predictions using the AutoML class from the SageMaker Python SDK. We also used the SDK to perform batch predictions with other model candidates. With Autopilot, we automatically explored and preprocessed our data, then created several ML models with one click, letting SageMaker take care of managing the infrastructure needed to train and tune our models. Lastly, we used batch transform to make predictions with our model using minimal code.

For more information on Autopilot and its advanced functionalities, refer to Automate model development with Amazon SageMaker Autopilot. For a detailed walkthrough of the example in the post, take a look at the following example notebook.

About the Authors

Arunprasath Shankar is an Artificial Intelligence and Machine Learning (AI/ML) Specialist Solutions Architect with AWS, helping global customers scale their AI solutions effectively and efficiently in the cloud. In his spare time, Arun enjoys watching sci-fi movies and listening to classical music.

Peter Chung is a Solutions Architect for AWS, and is passionate about helping customers uncover insights from their data. He has been building solutions to help organizations make data-driven decisions in both the public and private sectors. He holds all AWS certifications as well as two GCP certifications. He enjoys coffee, cooking, staying active, and spending time with his family.
