Build a mental health machine learning risk model using Amazon SageMaker Data Wrangler

This post is co-written by Shibangi Saha, Data Scientist, and Graciela Kravtzov, Co-Founder and CTO, of Equilibrium Point.

Many individuals are experiencing new symptoms of mental illness, such as stress, anxiety, depression, substance use, and post-traumatic stress disorder (PTSD). According to the Kaiser Family Foundation (KFF), about half of adults (47%) nationwide have reported negative mental health impacts during the pandemic, a significant increase from pre-pandemic levels. Certain genders and age groups are among the most likely to report stress and worry, at rates much higher than others, and a few specific ethnic groups are more likely than others to report a “major impact” on their mental health.

Several surveys, including those conducted by the Centers for Disease Control and Prevention (CDC), have shown substantial increases in self-reported behavioral health symptoms. According to one CDC report, which surveyed adults across the US in late June 2020, 31% of respondents reported symptoms of anxiety or depression, 13% reported having started or increased substance use, 26% reported stress-related symptoms, and 11% reported having serious thoughts of suicide in the past 30 days.

Self-reported data, while absolutely critical in diagnosing mental health disorders, can be influenced by the continuing stigma surrounding mental health and mental health treatment. Rather than rely solely on self-reported data, we can estimate and forecast mental distress using health record and claims data to try to answer a fundamental question: can we predict who will likely need mental health help before they need it? If these individuals can be identified, early intervention programs and resources can be developed and deployed to respond to new or increasing underlying symptoms, mitigating the effects and costs of mental disorders.

Easier said than done for those who have struggled with managing and processing large volumes of complex, gap-riddled claims data! In this post, we share how Equilibrium Point IoT used Amazon SageMaker Data Wrangler to streamline claims data preparation for our mental health use case, while ensuring data quality throughout each step in the process.

Solution overview

Data preparation, or feature engineering, is a tedious process that requires experienced data scientists and engineers to spend a lot of time and energy formulating recipes for the various transformations (steps) needed to get the data into the right shape. In fact, research shows that data preparation for machine learning (ML) consumes up to 80% of data scientists’ time. Typically, scientists and engineers use data processing frameworks such as Pandas, PySpark, and SQL to code their transformations and create distributed processing jobs. With Data Wrangler, you can automate this process. Data Wrangler is a component of Amazon SageMaker Studio that provides an end-to-end solution to import, prepare, transform, featurize, and analyze data. You can integrate a Data Wrangler data flow into your existing ML workflows to simplify and streamline data processing and feature engineering with little to no code.

In this post, we walk through the steps to transform the original raw datasets into ML-ready features for building the prediction models in the next stage. First, we describe the nature of the various datasets used for our use case and how we joined them with Data Wrangler. After the joins and dataset consolidation, we describe the individual transformations we applied to the dataset, such as de-duplication, handling missing values, and custom formulas, followed by how we used the built-in Quick Model analysis to validate the current state of the transformations for predictions.

Datasets

For our experiment, we first downloaded patient data from our behavioral health client. This data includes the following:

- Claims data

- Emergency room visit counts

- Inpatient visit counts

- Drug prescription counts related to mental health

- Hierarchical condition coding (HCC) diagnoses counts related to mental health

The goal was to join these separate datasets on patient ID and use the data to predict a mental health diagnosis. We used Data Wrangler to join the five datasets into a single, massive dataset of several million rows. We also used Data Wrangler to perform several transformations that allow for column calculations. In the following sections, we describe the data preparation transformations that we applied.

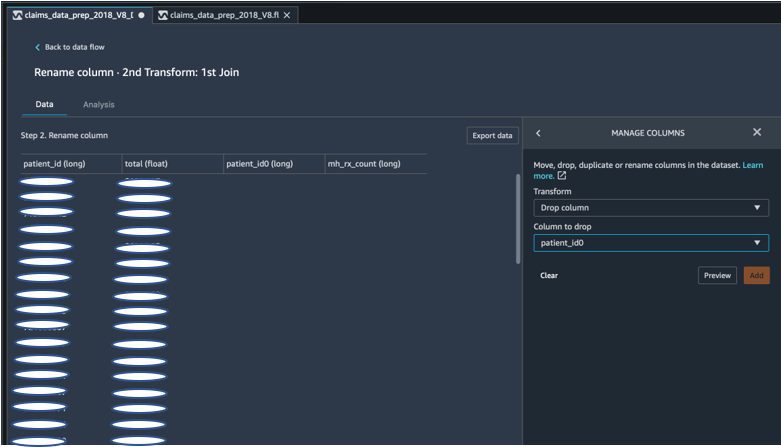
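Data Wrangler performs these joins in its visual interface. For readers who want to reproduce the consolidation outside Data Wrangler, the following is a minimal pandas sketch, assuming each extract is already aggregated to one row per patient and shares a patient_id key. All DataFrame and column names are hypothetical, and the left-join choice is an assumption for illustration, not necessarily the join type used in our flow.

```python
from functools import reduce

import pandas as pd

# Hypothetical per-patient extracts; names and values are illustrative only
claims = pd.DataFrame({"patient_id": [1, 2, 3], "claim_count": [12, 4, 7]})
er = pd.DataFrame({"patient_id": [1, 3], "er_visits": [2, 1]})
inpatient = pd.DataFrame({"patient_id": [2, 3], "ip_visits": [1, 2]})
rx = pd.DataFrame({"patient_id": [1, 2, 3], "mh_rx_count": [0, 3, 5]})
hcc = pd.DataFrame({"patient_id": [1, 3], "mh_hcc_count": [1, 2]})

# Left-join each extract onto the claims table, one join per dataset, so
# patients without ER/IP/Rx/HCC records are kept (with missing values)
datasets = [claims, er, inpatient, rx, hcc]
df = reduce(
    lambda left, right: left.merge(right, on="patient_id", how="left"),
    datasets,
)
```

Because every merge uses the same key name (`on="patient_id"`), pandas keeps a single key column. A join whose two sides carry separate key columns keeps both, which is the situation the next section addresses.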

Drop duplicate columns after a join

Amazon SageMaker Data Wrangler provides numerous ML data transforms to streamline cleaning, transforming, and featurizing your data. When you add a transform, it adds a step to the data flow. Each transform you add modifies your dataset and produces a new dataframe. All subsequent transforms apply to the resulting dataframe. Data Wrangler includes built-in transforms, which you can use to transform columns without any code. You can also add custom transformations using PySpark, Pandas, and PySpark SQL. Some transforms operate in place, while others create a new output column in your dataset.

In our experiments, each join on the patient ID left us with a duplicate patient ID column, which we needed to drop. As shown in the following screenshot, we used the prebuilt Manage Columns > Drop column transform to drop the right-hand patient ID column and keep only one (patient_id in the final dataset).

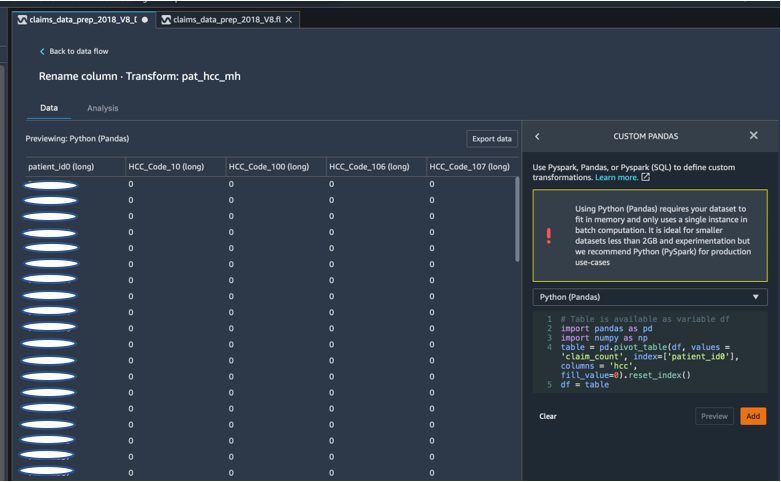
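No code is needed for this step in Data Wrangler. To show what the drop amounts to, here is a minimal pandas equivalent; patient_id_right is a hypothetical name standing in for whatever name the right-hand key column carries after the join in your flow.

```python
import pandas as pd

left = pd.DataFrame({"patient_id": [1, 2, 3], "er_visits": [2, 0, 1]})
# Hypothetical right-hand dataset whose key column has a different name,
# which reproduces the "two patient ID columns" situation after a join
right = pd.DataFrame({"patient_id_right": [1, 2, 3], "ip_visits": [0, 1, 2]})

joined = left.merge(right, left_on="patient_id", right_on="patient_id_right", how="inner")
# Both key columns survive the join; keep only the left one
joined = joined.drop(columns=["patient_id_right"])
```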

Pivot a dataset using Pandas

The claims datasets were at the patient level, with emergency room (ER) visit, inpatient (IP) visit, and prescription counts, and with diagnosis data already grouped by their corresponding HCC codes (approximately 189 codes). To build a patient datamart, we aggregated the claims HCC codes by patient and pivoted the HCC codes from rows to columns. We used Pandas to pivot the dataset, count the number of HCC codes by patient, and then join the result to the primary dataset on patient ID. For this, we used the custom transform option in Data Wrangler with Python (Pandas) as the framework.

The following code snippet shows the transformation logic to pivot the table:

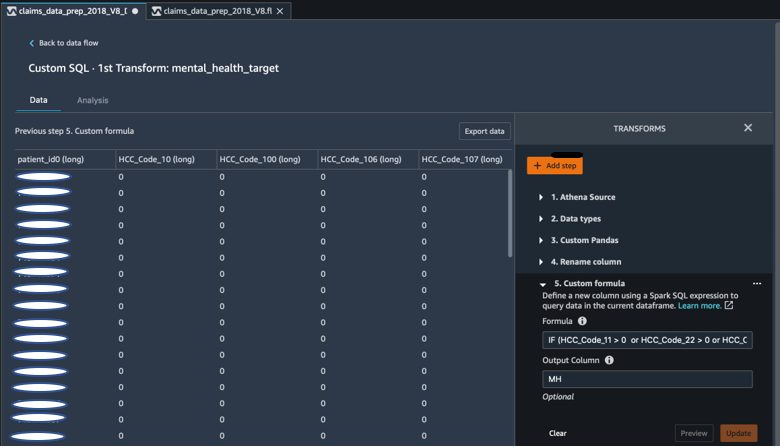
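Because the pivot logic above is only available as a screenshot, the following is a self-contained pandas sketch of the same idea, not a copy of our transform. It assumes a long-format table with one row per HCC code occurrence; the column names, HCC codes, and toy data are hypothetical. Inside a Data Wrangler custom Pandas transform, we assume the incoming dataset is exposed as df, and the join to the primary dataset could equally be done as a separate Join step in the flow.

```python
import pandas as pd

# Hypothetical long-format diagnosis data: one row per HCC code occurrence
claims = pd.DataFrame({
    "patient_id": [1, 1, 1, 2, 3, 3],
    "hcc_code": ["HCC59", "HCC59", "HCC58", "HCC58", "HCC60", "HCC59"],
})
# Hypothetical primary dataset keyed on patient ID
primary = pd.DataFrame({"patient_id": [1, 2, 3, 4], "er_visits": [2, 0, 1, 3]})

# Count each HCC code per patient, then pivot codes from rows to columns
hcc_wide = (
    claims.groupby(["patient_id", "hcc_code"])
    .size()
    .unstack(fill_value=0)      # one column per HCC code, 0 where absent
    .rename_axis(columns=None)  # drop the leftover "hcc_code" axis label
    .reset_index()              # turn patient_id back into a column
)

# Join the pivoted counts to the primary dataset on patient ID
df = primary.merge(hcc_wide, on="patient_id", how="left")
```

Patients with no HCC rows (patient 4 here) get missing values after the left join; the fill-with-0 step later in this post covers that case.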

Create new columns using custom formulas

We studied the research literature to determine which HCC codes are indicative of a mental health diagnosis. We then implemented this logic with a Data Wrangler custom formula transform that uses a Spark SQL expression to calculate a Mental Health Diagnosis target column (MH), which is added to the end of the DataFrame.

We used the following transformation logic:

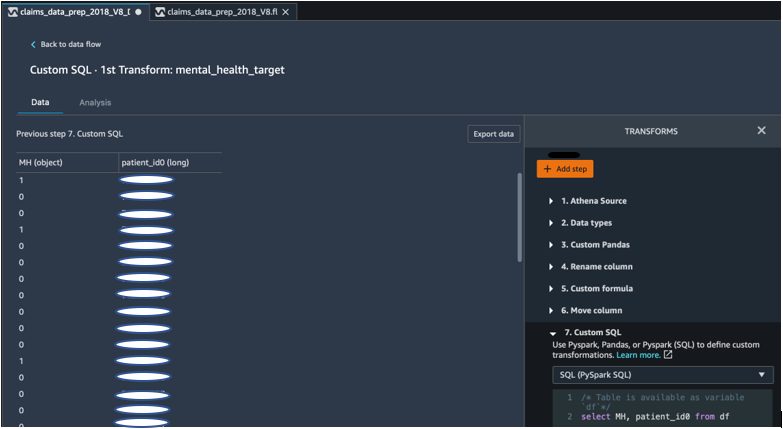
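A custom formula is a single SQL expression whose result becomes a new column. The sketch below shows the general shape of such a target definition, a CASE WHEN over HCC count columns, and evaluates it with Python's built-in sqlite3 module as a stand-in for Spark SQL so it runs without a Spark cluster. The CASE WHEN syntax is standard SQL that Spark SQL also accepts. The HCC columns in the expression are placeholders, not the codes we selected from the literature, and the table name df is an assumption.

```python
import sqlite3

# Placeholder HCC columns for illustration only; the actual list of
# mental health HCC codes is not reproduced here
MH_EXPR = "CASE WHEN HCC58 > 0 OR HCC59 > 0 THEN 1 ELSE 0 END"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE df (patient_id INTEGER, HCC58 INTEGER, HCC59 INTEGER, HCC60 INTEGER)")
conn.executemany(
    "INSERT INTO df VALUES (?, ?, ?, ?)",
    [(1, 1, 2, 0), (2, 1, 0, 0), (3, 0, 0, 1), (4, 0, 0, 0)],
)

# Append MH as the last column, mirroring where the custom formula puts it
cursor = conn.execute(f"SELECT *, {MH_EXPR} AS MH FROM df ORDER BY patient_id")
columns = [d[0] for d in cursor.description]
rows = cursor.fetchall()
```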

Drop columns from the DataFrame using PySpark

After calculating the target (MH) column, we dropped all the unnecessary duplicate columns, preserving only the patient ID and MH columns to join to our primary dataset. We did this with a custom transform, choosing SQL (PySpark SQL) as the framework.

We used the following logic:

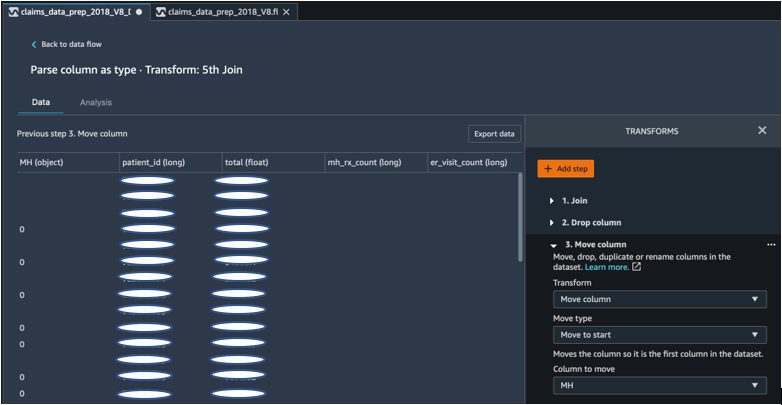
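In SQL, dropping columns is simply a matter of projecting the ones you keep. The sketch below assumes the dataset is queried as a table named df (check the table name your Data Wrangler version expects) and again uses sqlite3 as a stand-in for PySpark SQL; the intermediate table and its values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical intermediate table: HCC count columns plus the computed MH label
conn.execute("CREATE TABLE df (patient_id INTEGER, HCC58 INTEGER, HCC59 INTEGER, MH INTEGER)")
conn.executemany("INSERT INTO df VALUES (?, ?, ?, ?)", [(1, 1, 2, 1), (2, 0, 0, 0)])

# Keep only the join key and the label; every other column is dropped
cursor = conn.execute("SELECT patient_id, MH FROM df ORDER BY patient_id")
columns = [d[0] for d in cursor.description]
rows = cursor.fetchall()
```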

Move the MH column to start

Our ML algorithm requires the label to be in the first column. Therefore, we moved the calculated MH column to the start of the DataFrame to prepare it for export.

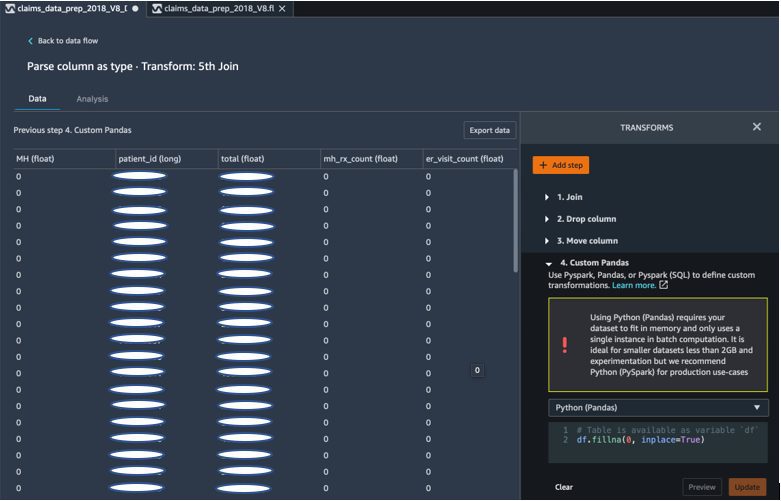
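The screenshot shows the built-in transform, so no code is required. For comparison, the pandas equivalent is a one-liner; the DataFrame below is a hypothetical stand-in for the final dataset.

```python
import pandas as pd

# Hypothetical dataset with the MH label as the last column
df = pd.DataFrame({
    "patient_id": [1, 2],
    "er_visits": [2, 0],
    "MH": [1, 0],
})

# Remove MH and re-insert it at position 0; other columns keep their order
df.insert(0, "MH", df.pop("MH"))
```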

Fill in blanks with 0 using Pandas

Our ML algorithm also requires that the input data has no empty fields. Therefore, we filled the final dataset’s empty fields with 0s. We can easily do this via a custom transform (Pandas) in Data Wrangler.

We used the following logic:

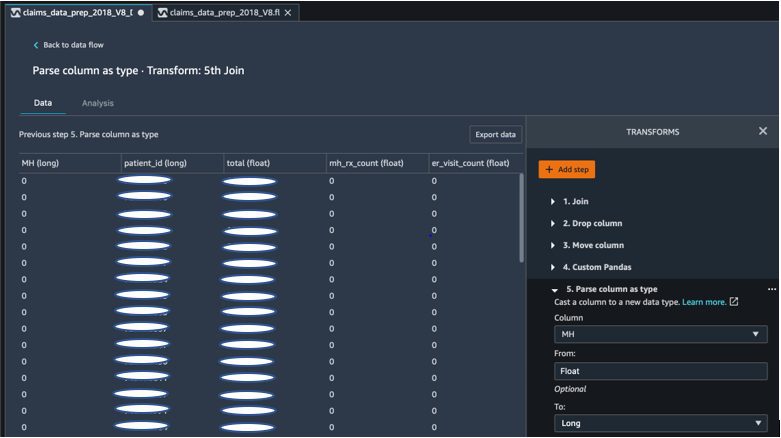
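Our Pandas logic appears only in the screenshot above. A minimal equivalent, assuming every empty field in every column should become 0, is pandas' fillna; the columns and values below are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical final dataset with gaps left over from the left joins
df = pd.DataFrame({
    "MH": [1.0, np.nan, 0.0],
    "er_visits": [2.0, np.nan, 1.0],
    "HCC59": [np.nan, 1.0, 0.0],
})

# Replace every missing value in every column with 0
df = df.fillna(0)
```

Filling with 0 treats a missing count as "no visits" or "no diagnoses", which fits count columns like these; for other feature types it is a modeling assumption worth checking.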

Cast column from float to long

You can also parse and cast a column to a new data type in Data Wrangler. For memory optimization purposes, we cast our mental health label column (MH) from float to long.

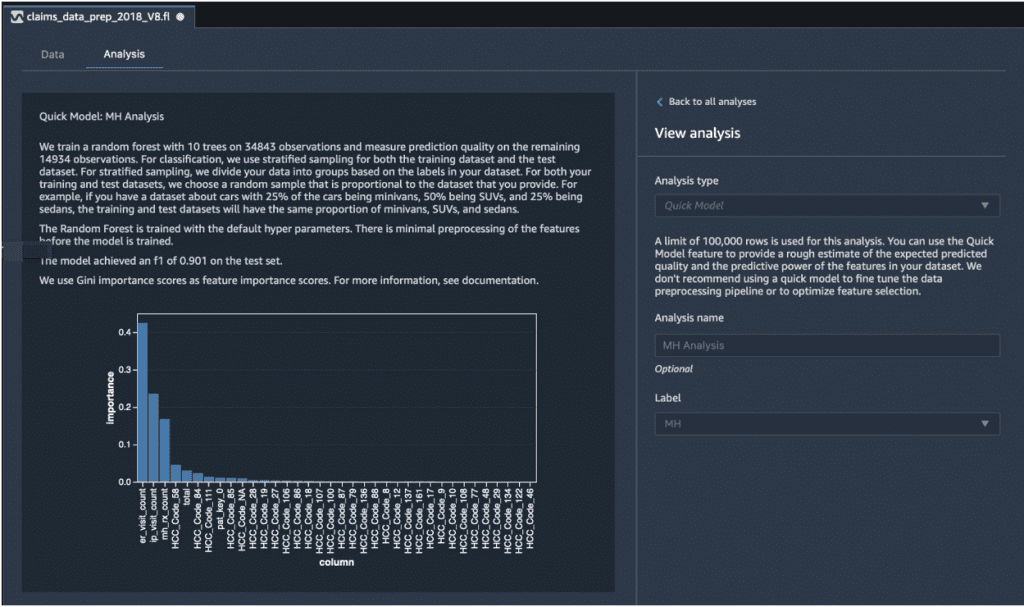
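In Spark, "long" is the 64-bit signed integer type. A minimal pandas sketch of the same cast, using a hypothetical DataFrame whose label became float after missing values were filled, looks like this:

```python
import pandas as pd

# Hypothetical dataset: MH is float64 because it once held missing values
df = pd.DataFrame({"MH": [1.0, 0.0, 0.0], "er_visits": [2.0, 0.0, 1.0]})

# Cast the label to a 64-bit integer ("long" in Spark terms)
df["MH"] = df["MH"].astype("int64")
```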

Quick Model analysis: Feature importance graph

After creating our final dataset, we used the Quick Model analysis type in Data Wrangler to quickly identify data inconsistencies and check whether our model accuracy was in the expected range or whether we needed to continue feature engineering before spending time on training the model. The model returned an F1 score of 0.901, on a scale where 1 is the best possible score. The F1 score combines the precision and recall of the model and is defined as the harmonic mean of the two. After inspecting these initial positive results, we were ready to export the data and proceed with model training using the exported dataset.

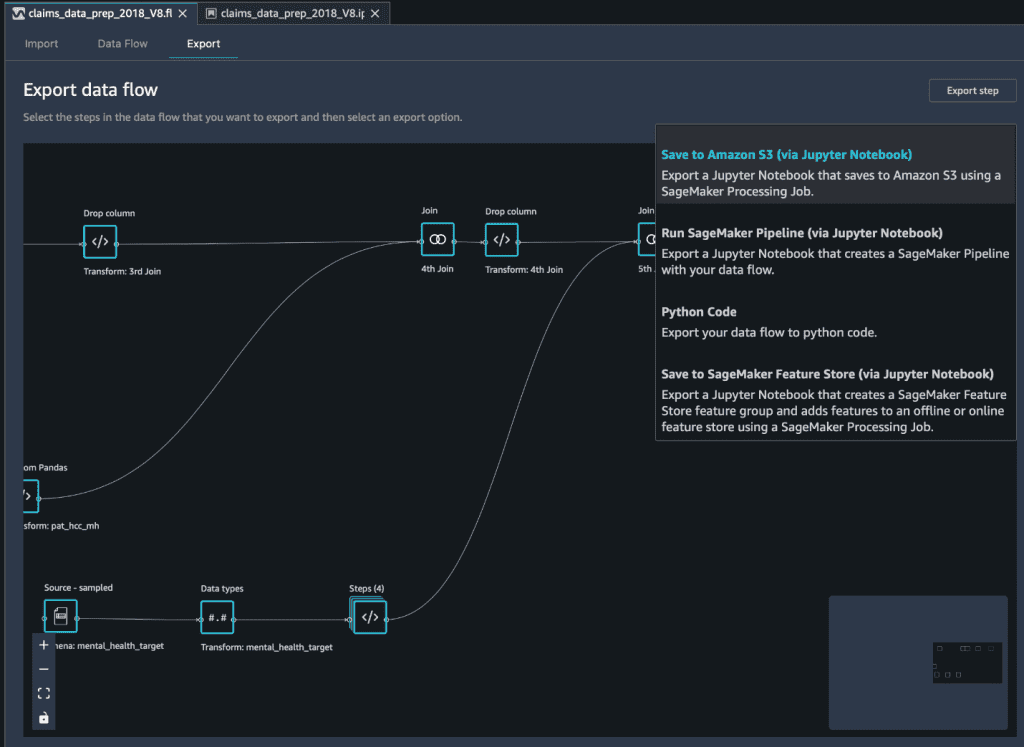
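To make the F1 definition concrete, the sketch below computes it from confusion-matrix counts. The counts are invented purely to illustrate the arithmetic and are not from our Quick Model run.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from confusion-matrix counts: harmonic mean of precision and recall."""
    # Precision: share of predicted positives that are truly positive
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives that the model found
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: precision = 0.9, recall = 0.75
print(round(f1_score(tp=90, fp=10, fn=30), 3))  # prints 0.818
```

Because it is a harmonic mean, F1 stays low unless precision and recall are both reasonably high, which is why it is a common single-number check for imbalanced labels such as a diagnosis flag.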

Export the final dataset to Amazon S3 via a Jupyter notebook

As a final step, to export the dataset in its current (transformed) form to Amazon Simple Storage Service (Amazon S3) for later use in model training, we use the Save to Amazon S3 (via Jupyter Notebook) export option. This notebook starts a distributed and scalable Amazon SageMaker Processing job that applies the created recipe (data flow) to specified inputs (usually larger datasets) and saves the results in Amazon S3. You can also export your transformed columns (features) to Amazon SageMaker Feature Store, export the transformations as a pipeline using Amazon SageMaker Pipelines, or simply export the transformations as Python code.

To export data to Amazon S3, you have three options:

- Export the transformed data directly to Amazon S3 via the Data Wrangler UI

- Export the transformations as a SageMaker Processing job via a Jupyter notebook (as we do for this post).

- Export the transformations to Amazon S3 via a destination node. A destination node tells Data Wrangler where to store the data after you’ve processed it. After you create a destination node, you create a processing job to output the data.

Conclusion

In this post, we showcased how Equilibrium Point IoT uses Data Wrangler to speed up the loading process of large amounts of our claims data for data cleaning and transformation in preparation for ML. We also demonstrated how to incorporate feature engineering with custom transformations using Pandas and PySpark in Data Wrangler, allowing us to export data step by step (after each join) for quality assurance purposes. The application of these easy-to-use transforms in Data Wrangler cut down the time spent on end-to-end data transformation by nearly 50%. Also, the Quick Model analysis feature in Data Wrangler allowed us to easily validate the state of transformations as we cycle through the process of data preparation and feature engineering.

Now that we have prepped the data for our mental health risk modeling use case, as a next step we plan to build an ML model using SageMaker and its built-in algorithms, using our claims dataset to identify members who should seek mental health services before they get to a point where they need it. Stay tuned!

About the Authors

Shibangi Saha is a Data Scientist at Equilibrium Point. She combines her expertise in healthcare payor claims data and machine learning to design, implement, automate, and document health data pipelines, reporting, and analytics processes that drive insights and actionable improvements in the healthcare delivery system. Shibangi received her Master of Science in Bioinformatics from Northeastern University College of Science and a Bachelor of Science in Biology and Computer Science from Khoury College of Computer Science and Information Sciences.

Graciela Kravtzov is the Co-Founder and CTO of Equilibrium Point. Grace has held C-level/VP leadership positions within Engineering, Operations, and Quality, and served as an executive consultant for business strategy and product development within the healthcare and education industries and the IoT industrial space. Grace received a Master of Science degree in Electromechanical Engineering from the University of Buenos Aires and a Master of Science degree in Computer Science from Boston University.

Arunprasath Shankar is an Artificial Intelligence and Machine Learning (AI/ML) Specialist Solutions Architect with AWS, helping global customers scale their AI solutions effectively and efficiently in the cloud. In his spare time, Arun enjoys watching sci-fi movies and listening to classical music.

Ajai Sharma is a Senior Product Manager for Amazon SageMaker where he focuses on SageMaker Data Wrangler, a visual data preparation tool for data scientists. Prior to AWS, Ajai was a Data Science Expert at McKinsey and Company where he led ML-focused engagements for leading finance and insurance firms worldwide. Ajai is passionate about data science and loves to explore the latest algorithms and machine learning techniques.