Use AWS AI and ML services to foster accessibility and inclusion of people with a visual or communication impairment

AWS offers a broad set of artificial intelligence (AI) and machine learning (ML) services, including a suite of pre-trained, ready-to-use services for developers with no prior ML experience. In this post, we demonstrate how to use such services to build an application that fosters the inclusion of people with a visual or communication impairment, which includes difficulties in seeing, reading, hearing, speaking, or having a conversation in a foreign language. With services such as Amazon Transcribe, Amazon Polly, Amazon Translate, Amazon Rekognition, and Amazon Textract, you can add features such as live transcription, text to speech, translation, object detection, and text extraction from images to your projects.

According to the World Health Organization, over 1 billion people—about 15% of the global population—live with some form of disability, and this number is likely to grow because of population ageing and an increase in the prevalence of some chronic diseases. For people with a speech, hearing, or visual impairment, everyday tasks such as listening to a speech or a TV program, expressing a feeling or a need, looking around, or reading a book can feel like impossible challenges. A wide body of research highlights the importance of assistive technologies for the inclusion of people with disabilities in society. According to research by the European Parliamentary Research Service, mainstream technologies such as smartphones provide more and more capabilities suitable for addressing the needs of people with disabilities. In addition, when you design for people with disabilities, you tend to build features that improve the experience for everyone; this is known as the curb-cut effect.

This post demonstrates how you can use the AWS SDK for JavaScript to integrate capabilities provided by AWS AI services into your own solutions. To that end, we provide a sample web application that shows how to use Amazon Transcribe, Amazon Polly, Amazon Translate, Amazon Rekognition, and Amazon Textract to implement accessibility features. The source code of this application, AWS AugmentAbility, is available on GitHub to use as a starting point for your own projects.

Solution overview

AWS AugmentAbility is powered by five AWS AI services: Amazon Transcribe, Amazon Translate, Amazon Polly, Amazon Rekognition, and Amazon Textract. It also uses Amazon Cognito user pools and identity pools for managing authentication and authorization of users.

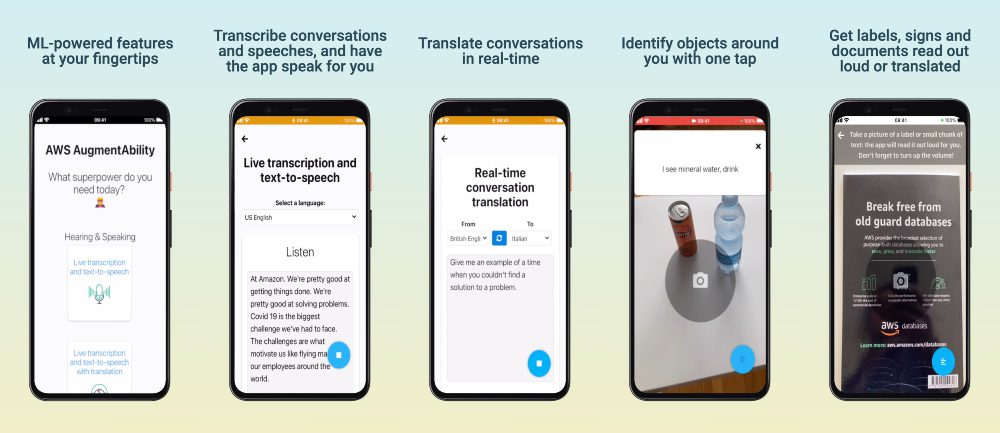

After deploying the web app, you will be able to access the following features:

- Live transcription and text to speech – The app transcribes conversations and speeches for you in real time using Amazon Transcribe, an automatic speech recognition service. Type what you want to say, and the app says it for you by using Amazon Polly text-to-speech capabilities. This feature also integrates with Amazon Transcribe automatic language identification for streaming transcriptions—with a minimum of 3 seconds of audio, the service can automatically detect the dominant language and generate a transcript without you having to specify the spoken language.

- Live transcription and text to speech with translation – The app transcribes and translates conversations and speeches for you in real time. Type what you want to say, and the app translates and says it for you. Translation is available in the more than 75 languages currently supported by Amazon Translate.

- Real-time conversation translation – Select a target language, speak in your language, and the app translates what you said into your target language by combining Amazon Transcribe, Amazon Translate, and Amazon Polly capabilities.

- Object detection – Take a picture with your smartphone, and the app describes the objects around you by using Amazon Rekognition label detection features.

- Text recognition for labels, signs, and documents – Take a picture with your smartphone of any label, sign, or document, and the app reads it out loud for you. This feature is powered by Amazon Rekognition and Amazon Textract text extraction capabilities. AugmentAbility can also translate the text into over 75 languages, or make it more readable for users with dyslexia by using the OpenDyslexic font.
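The translation and object detection features above can be sketched with the SDK for JavaScript as follows. This is a minimal sketch under stated assumptions, not the AugmentAbility source code: it assumes SDK v2-style clients (such as `new AWS.Translate()` and `new AWS.Polly()`) that are passed in by the caller, and the function names, the 80% confidence threshold, and the wording of the spoken sentence are hypothetical.

```javascript
// Sketch only: request and response shapes follow the AWS SDK for JavaScript v2
// (translateText, synthesizeSpeech, detectLabels). All function names,
// defaults, and example values are hypothetical.

// Build the request for the Amazon Translate TranslateText operation.
function buildTranslateParams(text, sourceLanguageCode, targetLanguageCode) {
  return {
    Text: text,
    SourceLanguageCode: sourceLanguageCode, // e.g. "en", or "auto" for automatic detection
    TargetLanguageCode: targetLanguageCode, // e.g. "it"
  };
}

// Build the request for the Amazon Polly SynthesizeSpeech operation.
function buildSpeechParams(text, voiceId) {
  return {
    OutputFormat: "mp3",
    Text: text,
    VoiceId: voiceId, // e.g. "Bianca" for Italian; the voice choice is an assumption
  };
}

// Turn an Amazon Rekognition DetectLabels response into a sentence
// that can be read aloud, keeping only sufficiently confident labels.
function describeLabels(response, minConfidence = 80) {
  const names = (response.Labels || [])
    .filter((label) => label.Confidence >= minConfidence)
    .map((label) => label.Name);
  return names.length > 0
    ? "I can see: " + names.join(", ") + "."
    : "I could not recognize any object.";
}

// Chain translation and speech synthesis. The clients are injected, so the
// function works with real SDK v2 clients or with stubs in a test.
async function translateAndSpeak(clients, text, sourceLang, targetLang, voiceId) {
  const translated = await clients.translate
    .translateText(buildTranslateParams(text, sourceLang, targetLang))
    .promise();
  const speech = await clients.polly
    .synthesizeSpeech(buildSpeechParams(translated.TranslatedText, voiceId))
    .promise();
  return { text: translated.TranslatedText, audio: speech.AudioStream };
}
```

Keeping the request builders and the response parser as plain functions means they can be checked without network access, while `translateAndSpeak` shows where the actual service calls would sit.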

Live transcription, text to speech, and real-time conversation translation features are currently available in Chinese, English, French, German, Italian, Japanese, Korean, Brazilian Portuguese, and Spanish. Text recognition features are currently available in Arabic, English, French, German, Italian, Portuguese, Russian, and Spanish. An updated list of the languages supported by each feature is available on the AugmentAbility GitHub repo.

You can build and deploy AugmentAbility locally on your computer or in your AWS account by using AWS Amplify Hosting, a fully managed CI/CD and static web hosting service for fast, secure, and reliable static and server-side rendered apps.

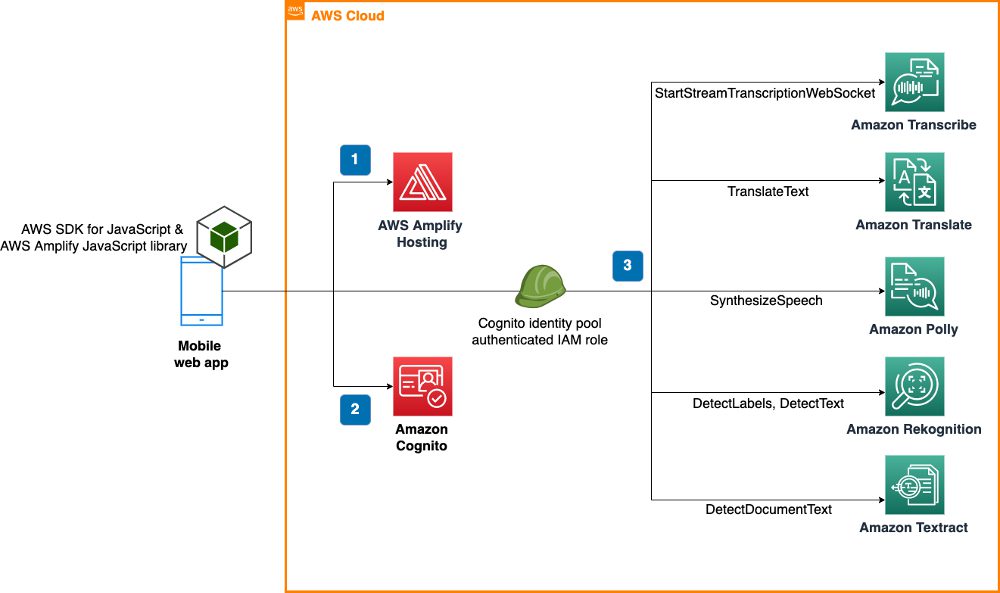

The following diagram illustrates the architecture of the application, assuming that it’s deployed in the cloud using AWS Amplify Hosting.

The solution workflow includes the following steps:

- A mobile browser is used to access the web app—an HTML, CSS, and JavaScript application hosted by AWS Amplify Hosting. The application has been implemented using the SDK for JavaScript and the AWS Amplify JavaScript library.

- The user signs in by entering a user name and a password. Authentication is performed against the Amazon Cognito user pool. After a successful login, the Amazon Cognito identity pool is used to provide the user with the temporary AWS credentials required to access app features.

- While the user explores the different features of the app, the mobile browser interacts with Amazon Transcribe (StartStreamTranscriptionWebSocket operation), Amazon Translate (TranslateText operation), Amazon Polly (SynthesizeSpeech operation), Amazon Rekognition (DetectLabels and DetectText operations), and Amazon Textract (DetectDocumentText operation).
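The sign-in and text extraction steps above could look roughly like the following. This is a sketch under stated assumptions rather than the application's actual code: the helper names are hypothetical, the Region, pool IDs, and token are placeholders, and the response shapes are those documented for the DetectDocumentText and DetectText operations.

```javascript
// Sketch only: shapes follow the AWS SDK for JavaScript v2. Helper names are
// hypothetical, and every identifier passed in is a placeholder.

// Build the options for AWS.CognitoIdentityCredentials. The identity pool
// exchanges the ID token issued by the user pool for temporary AWS credentials.
function buildCredentialsOptions(region, userPoolId, identityPoolId, idToken) {
  const provider = "cognito-idp." + region + ".amazonaws.com/" + userPoolId;
  return {
    IdentityPoolId: identityPoolId,
    Logins: { [provider]: idToken },
  };
}

// Collect the text lines from an Amazon Textract DetectDocumentText response.
function linesFromTextract(response) {
  return (response.Blocks || [])
    .filter((block) => block.BlockType === "LINE")
    .map((block) => block.Text);
}

// Collect the text lines from an Amazon Rekognition DetectText response.
function linesFromRekognition(response) {
  return (response.TextDetections || [])
    .filter((detection) => detection.Type === "LINE")
    .map((detection) => detection.DetectedText);
}
```

In a browser script with the SDK loaded, the first helper would typically feed `AWS.config.credentials = new AWS.CognitoIdentityCredentials(buildCredentialsOptions(...))`, and the two parsers would receive the results of `detectDocumentText({ Document: { Bytes: imageBytes } })` and `detectText({ Image: { Bytes: imageBytes } })`, where `imageBytes` is a hypothetical variable holding the picture taken by the user.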

AWS services have been integrated in the mobile web app by using the SDK for JavaScript. Generally speaking, the SDK for JavaScript provides access to AWS services in either browser scripts or Node.js; for this sample project, the SDK is used in browser scripts. For additional information about how to access AWS services from a browser script, refer to Getting Started in a Browser Script. The SDK for JavaScript is provided as a JavaScript file supporting a default set of AWS services. This file is typically loaded into browser scripts using a
