Improve ML developer productivity with Weights & Biases: A computer vision example on Amazon SageMaker

The content and opinions in this post are those of the third-party author and AWS is not responsible for the content or accuracy of this post.

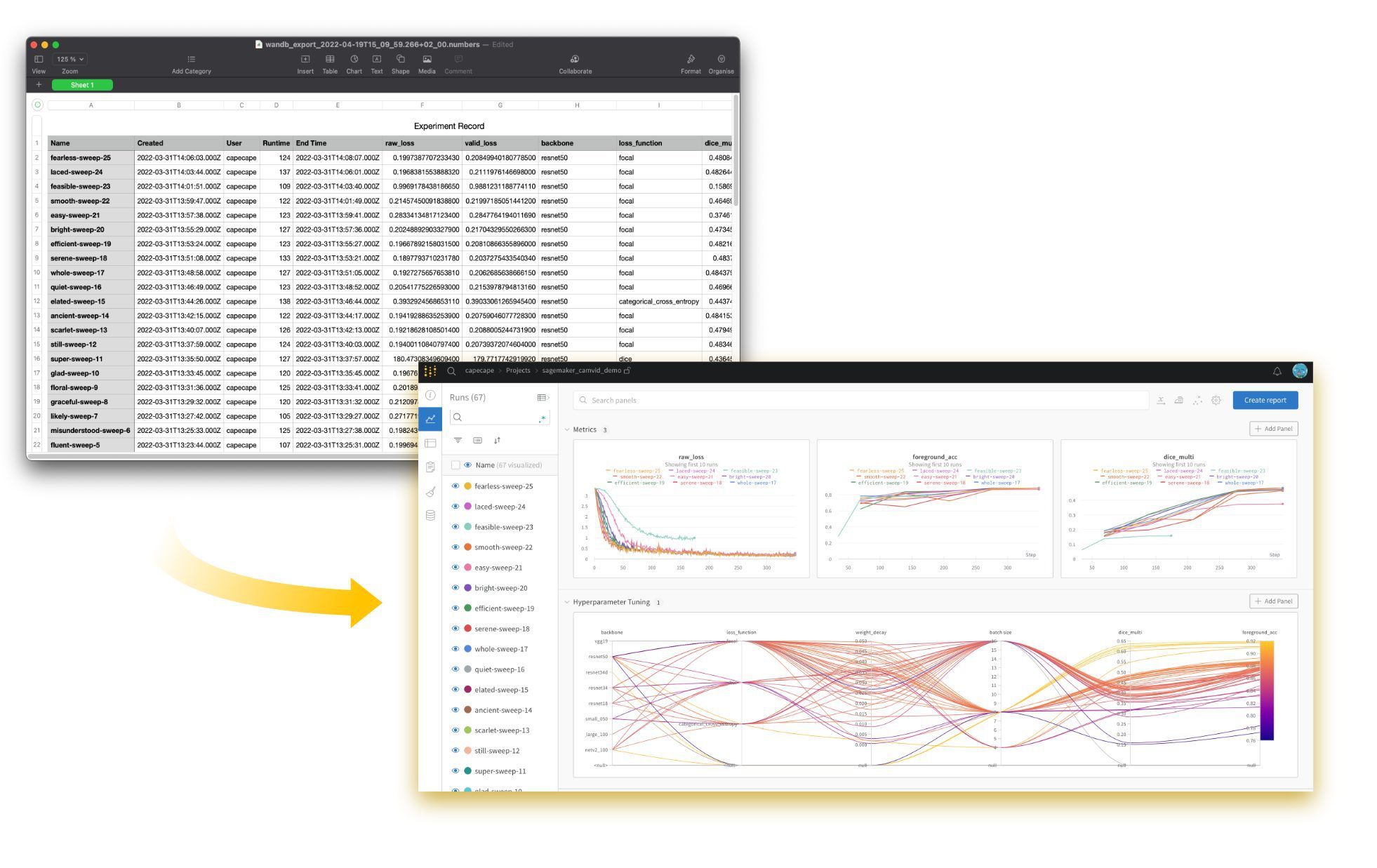

As more organizations use deep learning techniques such as computer vision and natural language processing, the machine learning (ML) developer persona needs scalable tooling around experiment tracking, lineage, and collaboration. Experiment tracking includes metadata such as operating system, infrastructure used, library, and input and output datasets—often tracked on a spreadsheet manually. Lineage involves tracking the datasets, transformations, and algorithms used to create an ML model. Collaboration includes ML developers working on a single project and also ML developers sharing their results across teams and to business stakeholders—a process commonly done via email, screenshots, and PowerPoint presentations.

In this post, we train a model to identify objects for an autonomous vehicle use case using Weights & Biases (W&B) and Amazon SageMaker. We showcase how the joint solution reduces manual work for the ML developer, creates more transparency in the model development process, and enables teams to collaborate on projects.

We run this example on Amazon SageMaker Studio so you can try it out for yourself.

Overview of Weights & Biases

Weights & Biases helps ML teams build better models faster. With just a few lines of code in your SageMaker notebook, you can instantly debug, compare, and reproduce your models—architecture, hyperparameters, git commits, model weights, GPU usage, datasets, and predictions—all while collaborating with your teammates.

W&B is trusted by more than 200,000 ML practitioners from some of the most innovative companies and research organizations in the world. To try it for free, sign up at Weights & Biases, or visit the W&B AWS Marketplace listing.

Getting started with SageMaker Studio

SageMaker Studio is the first fully integrated development environment (IDE) for ML. Studio provides a single web-based interface where ML practitioners and data scientists can build, train, and deploy models with a few clicks, all in one place.

To get started with Studio, you need an AWS account and an AWS Identity and Access Management (IAM) user or role with permissions to create a Studio domain. Refer to Onboard to Amazon SageMaker Domain to create a domain, and to the Studio documentation for an overview of the Studio visual interface and notebooks.

Set up the environment

For this post, we want to run our own code, so we start by importing notebooks from GitHub. We use the following GitHub repo as an example.

You can clone a repository either through the terminal or the Studio UI. To clone a repository through the terminal, open a system terminal (on the File menu, choose New and Terminal) and enter the following command:

To clone a repository from the Studio UI, see Clone a Git Repository in SageMaker Studio.

To get started, choose the 01_data_processing.ipynb notebook. You’re prompted to choose a kernel. This example uses PyTorch, so we choose the pre-built PyTorch 1.10 Python 3.8 GPU optimized image to start our notebook. You can see the app starting, and when the kernel is ready, the instance type and kernel appear at the top right of your notebook.

Our notebook needs some additional dependencies. This repository provides a requirements.txt with the additional dependencies. Run the first cell to install the required dependencies:

You can also create a lifecycle configuration to automatically install the packages every time you start the PyTorch app. See Customize Amazon SageMaker Studio using Lifecycle Configurations for instructions and a sample implementation.

Use Weights & Biases in SageMaker Studio

Weights & Biases (wandb) is a standard Python library. After it’s installed, you add a few lines of code to your training script and you’re ready to log experiments. We have already installed it through our requirements.txt file. You can also install it manually by running pip install wandb.
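To make "a few lines of code" concrete, here is a minimal sketch of a logged run. The project name sagemaker-wandb-demo and the simulated_loss helper are placeholders invented for illustration and are not taken from the example repository.

```python
# Install once per environment (shell): pip install wandb
import math


def simulated_loss(epoch: int) -> float:
    """Stand-in for a real training loss: decays smoothly with the epoch."""
    return math.exp(-0.5 * epoch)


def log_demo_run(project: str = "sagemaker-wandb-demo", epochs: int = 5) -> None:
    """Start a run, log one metric per epoch, and close the run."""
    import wandb  # imported lazily so this file loads before wandb is installed

    # `project` is a hypothetical name; W&B creates the project if it is missing.
    run = wandb.init(project=project, config={"epochs": epochs})
    for epoch in range(epochs):
        run.log({"epoch": epoch, "train_loss": simulated_loss(epoch)})
    run.finish()
```

Calling log_demo_run() from a notebook cell prompts for a W&B API key the first time, then streams the metrics to the project dashboard.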

Case study: Autonomous vehicle semantic segmentation

Dataset

We use the Cambridge-driving Labeled Video Database (CamVid) for this example. It contains a collection of videos with object class semantic labels, complete with metadata. The database provides ground truth labels that associate each pixel with one of 32 semantic classes. We can version our dataset as a wandb.Artifact so that we can reference it later. See the following code:
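A minimal sketch of versioning a local dataset folder, assuming the CamVid files have already been downloaded to data_dir. The artifact name, type label, and project name are illustrative choices, not necessarily the ones used in the notebook.

```python
from pathlib import Path


def log_dataset_artifact(data_dir: str, project: str = "sagemaker-wandb-demo") -> None:
    """Upload a local dataset folder to W&B as a versioned artifact."""
    import wandb  # imported lazily so the helper can be defined without wandb

    root = Path(data_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"Dataset folder not found: {root}")

    run = wandb.init(project=project, job_type="upload")
    artifact = wandb.Artifact(
        name="camvid-dataset",  # hypothetical artifact name
        type="raw_data",        # free-form label used to group artifacts
        description="CamVid images and per-pixel labels",
    )
    artifact.add_dir(str(root))  # add every file under data_dir
    run.log_artifact(artifact)   # first call creates v0; changed contents create a new version
    run.finish()
```

Later runs can then refer to the dataset by name and version, for example camvid-dataset:latest.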

You can follow along in the 01_data_processing.ipynb notebook.

We also log a table of the dataset. Tables are rich and powerful DataFrame-like entities that enable you to query and analyze tabular data. You can understand your datasets, visualize model predictions, and share insights in a central dashboard.

Weights & Biases tables support many rich media formats, like image, audio, and waveforms. For a full list of media formats, refer to Data Types.
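As a rough sketch of how such a table can be assembled, the helper below builds one row per image and overlays the ground truth mask on it. The column names, the three-class label map, and the sample format are assumptions made for illustration.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical subset of class IDs, for illustration only.
CLASS_LABELS: Dict[int, str] = {0: "road", 1: "pedestrian", 2: "bicyclist"}


def build_segmentation_table(samples: Iterable[Tuple[str, object, object]]):
    """Build a wandb.Table with one row per (file name, image, mask) sample.

    `image` and `mask` are expected to be arrays (for example NumPy arrays):
    the image as H x W x 3 and the mask as H x W integer class IDs.
    """
    import wandb  # imported lazily so the helper can be defined without wandb

    table = wandb.Table(columns=["file_name", "image_with_ground_truth"])
    for file_name, image, mask in samples:
        overlay = wandb.Image(
            image,
            masks={"ground_truth": {"mask_data": mask, "class_labels": CLASS_LABELS}},
        )
        table.add_data(file_name, overlay)
    return table
```

Inside an active run, the table is sent to the workspace with run.log({"camvid_table": table}), where the key is any name you choose.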

The following screenshot shows a table with raw images with the ground truth segmentations. You can also view an interactive version of this table.

Train a model

We can now create a model and train it on our dataset. We use PyTorch and fastai to quickly prototype a baseline and then use wandb.Sweeps to optimize our hyperparameters. Follow along in the 02_semantic_segmentation.ipynb notebook. When prompted for a kernel on opening the notebook, choose the same kernel from our first notebook, PyTorch 1.10 Python 3.8 GPU optimized. Your packages are already installed because you’re using the same app.

The model is supposed to learn a per-pixel annotation of a scene captured from the point of view of the autonomous agent. The model needs to categorize or segment each pixel of a given scene into one of 32 relevant categories, such as road, pedestrian, sidewalk, or car. You can choose any of the segmented images in the table to open an interactive view of the segmentation results and categories.

Because the fastai library has integration with wandb, you can simply pass the WandbCallback to the Learner:
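A minimal sketch of that pattern, assuming dls, model, loss_func, and metrics are built elsewhere in the notebook. The function name fit_with_wandb and the project name are hypothetical, and the callback options shown are one reasonable choice rather than the settings used for the reported runs.

```python
def fit_with_wandb(dls, model, loss_func, metrics, epochs=10, lr=1e-3,
                   project="sagemaker-wandb-demo"):
    """Train a fastai Learner while streaming losses and metrics to W&B."""
    # Imported lazily so the helper can be defined before the libraries are installed.
    import wandb
    from fastai.callback.wandb import WandbCallback
    from fastai.vision.all import Learner

    # A W&B run must be active before training starts.
    run = wandb.init(project=project, job_type="train")
    learn = Learner(
        dls,
        model,
        loss_func=loss_func,
        metrics=metrics,
        # log_preds=False skips sample-prediction logging; log_model=True uploads the saved model.
        cbs=WandbCallback(log_preds=False, log_model=True),
    )
    learn.fit_one_cycle(epochs, lr)
    run.finish()
    return learn
```

Passing the callback through cbs attaches it to every fit call on that Learner, so no per-epoch logging code is needed in the training loop.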

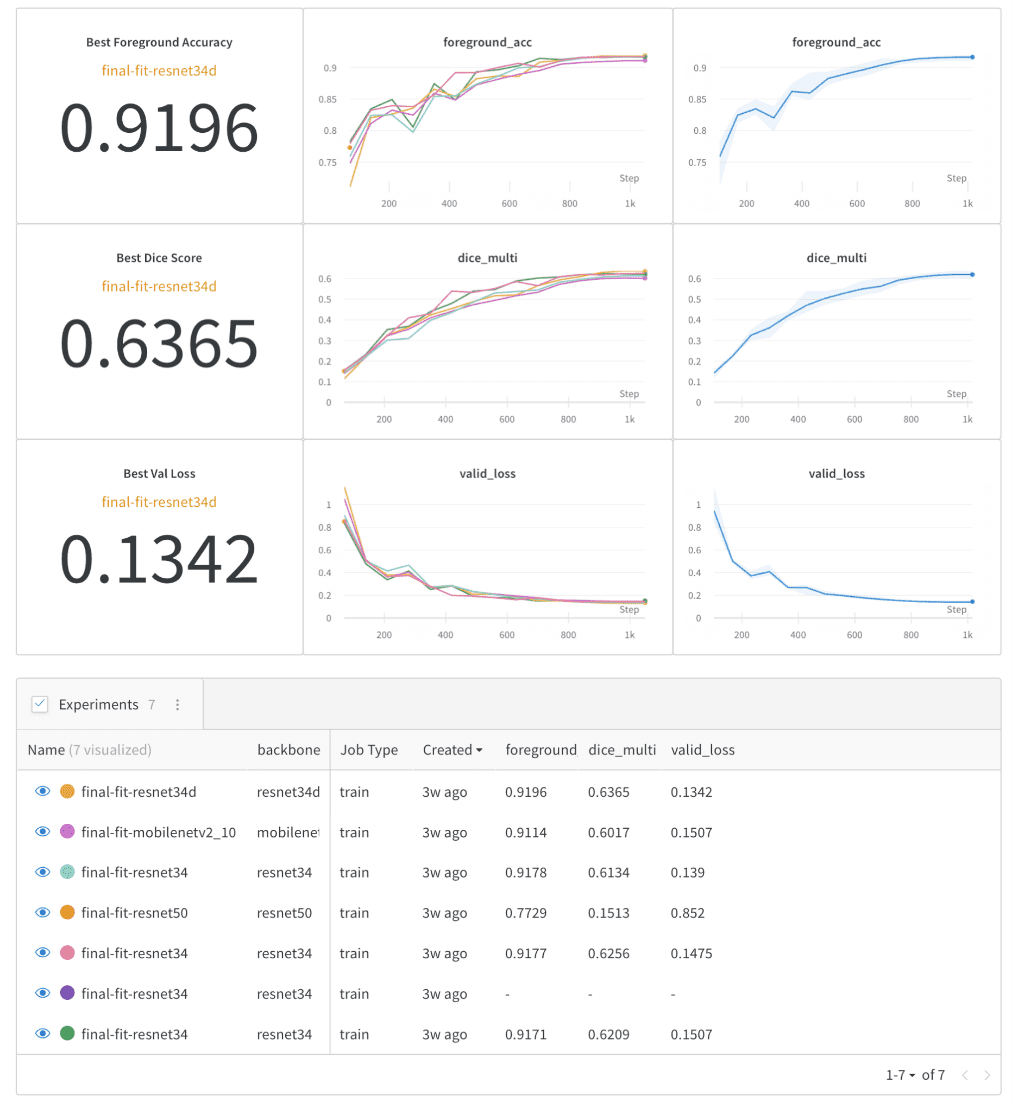

For the baseline experiments, we decided to use a simple architecture inspired by the UNet paper with different backbones from timm. We trained our models with focal loss as the criterion. With Weights & Biases, you can easily create dashboards with summaries of your experiments to quickly analyze training results, as shown in the following screenshot. You can also view this dashboard interactively.

Hyperparameter search with sweeps

To improve the performance of the baseline model, we need to select the best model and the best set of hyperparameters to train. W&B makes this easy for us using sweeps.

We perform a Bayesian hyperparameter search with the goal of maximizing the foreground accuracy of the model on the validation dataset. To perform the sweep, we define the configuration file sweep.yaml. Inside this file, we specify the search method (bayes) and the parameters to search, along with their candidate values. In our case, we try different backbones, batch sizes, and loss functions. We also explore different optimization parameters like learning rate and weight decay. Because these are continuous values, we sample them from a distribution. There are multiple configuration options available for sweeps.
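For illustration, the same structure can be written as a Python dictionary, which mirrors the YAML layout key for key. Every name, candidate value, and range below is an assumption chosen to match the description above, not a copy of the sweep.yaml in the repository.

```python
# Python-dict equivalent of a sweep.yaml file; all names and ranges are illustrative.
sweep_config = {
    "method": "bayes",  # Bayesian search
    "metric": {"name": "foreground_acc", "goal": "maximize"},  # hypothetical metric name
    "parameters": {
        # Discrete choices are listed under "values".
        "backbone": {"values": ["resnet34", "resnet50", "vgg16_bn"]},
        "batch_size": {"values": [8, 16]},
        "loss_function": {"values": ["FocalLossFlat", "CrossEntropyLossFlat"]},
        # Continuous values are sampled from a distribution between min and max.
        "learning_rate": {"distribution": "log_uniform_values", "min": 1e-5, "max": 1e-2},
        "weight_decay": {"distribution": "uniform", "min": 0.0, "max": 0.1},
    },
}


def continuous_parameters(config: dict) -> list:
    """Return the names of parameters sampled from a distribution rather than a list."""
    return sorted(
        name for name, spec in config["parameters"].items() if "distribution" in spec
    )
```

Here continuous_parameters(sweep_config) picks out learning_rate and weight_decay, the two parameters that are sampled rather than enumerated.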

Afterwards, in a terminal, you launch the sweep using the wandb command line:

And then launch a sweep agent on this machine with the following code:
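The wandb library also exposes both steps in Python, which can be convenient inside a notebook. The sketch below assumes a configuration dictionary and a training function are supplied by the caller; run_sweep, example_train_fn, the project name, and the foreground_acc metric key are hypothetical.

```python
def run_sweep(sweep_config: dict, train_fn, project: str = "sagemaker-wandb-demo",
              max_runs: int = 10) -> str:
    """Register a sweep, then run an agent in this process for up to max_runs trials."""
    import wandb  # imported lazily so the helper can be defined without wandb

    # Python counterpart of creating the sweep from the command line.
    sweep_id = wandb.sweep(sweep_config, project=project)
    # Python counterpart of starting an agent for that sweep ID.
    wandb.agent(sweep_id, function=train_fn, project=project, count=max_runs)
    return sweep_id


def example_train_fn() -> None:
    """Hypothetical trial: read the sampled hyperparameters and log the target metric."""
    import wandb

    run = wandb.init()  # inside an agent, the sampled values arrive in run.config
    lr = run.config["learning_rate"]
    # ... train a model with lr here; the metric value below is only a placeholder ...
    run.log({"foreground_acc": 0.0, "learning_rate": lr})
    run.finish()
```

Several agents can be started against the same sweep ID, on one machine or many, and the sweep controller hands each one the next set of hyperparameters.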

When the sweep has finished, we can use a parallel coordinates plot to explore the performance of the models with various backbones and different sets of hyperparameters. Based on that, we can see which model performs the best.

The following screenshot shows the results of the sweeps, including a parallel coordinates chart and parameter correlation charts. You can also view this sweeps dashboard interactively.

We can derive the following key insights from the sweep:

- A lower learning rate and lower weight decay result in better foreground accuracy and Dice scores.

- Batch size has a strong positive correlation with the metrics.

- The VGG-based backbones might not be a good option to train our final model because they’re prone to vanishing gradients. (They’re filtered out because the loss diverged.)

- The ResNet backbones result in the best overall performance with respect to the metrics.

- The ResNet34 or ResNet50 backbone should be chosen for the final model due to their strong performance in terms of metrics.

Data and model lineage

W&B artifacts were designed to make it effortless to version your datasets and models, regardless of whether you want to store your files with W&B or whether you already have a bucket you want W&B to track. After you track your datasets or model files, W&B automatically logs each modification, giving you a complete and auditable history of changes to your files.

In our case, the dataset, models, and different tables generated during training are logged to the workspace. You can quickly view and visualize this lineage by going to the Artifacts page.
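The lineage graph is built from what each run declares as input and output. The sketch below shows both directions, plus tracking files that stay in an existing bucket. All artifact names, the project name, and the function names are hypothetical.

```python
def train_with_lineage(dataset_name: str, model_path: str,
                       project: str = "sagemaker-wandb-demo") -> None:
    """Declare the dataset a run consumes and the model file it produces."""
    import wandb  # imported lazily so the helper can be defined without wandb

    run = wandb.init(project=project, job_type="train")

    # Input edge of the lineage graph: this run depends on the dataset artifact.
    dataset = run.use_artifact(f"{dataset_name}:latest")
    data_dir = dataset.download()  # local folder containing the artifact files
    print(f"Dataset available at {data_dir}")

    # ... training would happen here and write the weights to model_path ...

    # Output edge of the lineage graph: this run produced the model artifact.
    model = wandb.Artifact(name="segmentation-model", type="model")
    model.add_file(model_path)
    run.log_artifact(model)
    run.finish()


def track_bucket_dataset(bucket_uri: str, project: str = "sagemaker-wandb-demo") -> None:
    """Track files that remain in an existing bucket, for example an s3:// URI."""
    import wandb

    run = wandb.init(project=project, job_type="upload")
    artifact = wandb.Artifact(name="camvid-dataset-ref", type="raw_data")
    artifact.add_reference(bucket_uri)  # records paths and checksums; files are not copied
    run.log_artifact(artifact)
    run.finish()
```

With these declarations in place, the Artifacts page can draw the chain from dataset version to training run to model version.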

Interpret model predictions

Weights & Biases is especially useful for assessing model performance: with wandb.Tables, we can visualize where our model performs poorly. In this case, we’re particularly interested in correctly detecting vulnerable road users such as bicycles and pedestrians.

We logged the predicted masks along with the per-class Dice score coefficient into a table. We then filtered by rows containing the desired classes and sorted by ascending order on the Dice score.

In the following table, we first keep only the rows where the Dice score is positive (pedestrians are present in the image). Then we sort in ascending order to identify our worst-detected pedestrians. Keep in mind that a Dice score of 1 means the pedestrian class is segmented perfectly. You can also view this table interactively.
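The filter-and-sort step can be sketched in plain Python. The version below computes a per-class Dice score over flattened masks and assumes that a class absent from both the prediction and the ground truth scores 0, so the positive-score filter drops those rows. The row format and the dice_pedestrian key are hypothetical.

```python
from typing import Dict, List, Sequence


def dice_score(pred: Sequence[int], target: Sequence[int], class_id: int) -> float:
    """Dice = 2 * |P & T| / (|P| + |T|) for one class over flattened masks."""
    pred_hits = [p == class_id for p in pred]
    target_hits = [t == class_id for t in target]
    overlap = sum(p and t for p, t in zip(pred_hits, target_hits))
    total = sum(pred_hits) + sum(target_hits)
    # Assumption: a class missing from both masks scores 0 rather than 1.
    return 2.0 * overlap / total if total else 0.0


def worst_detected(rows: List[Dict], key: str = "dice_pedestrian") -> List[Dict]:
    """Keep rows with a positive score, then sort so the worst predictions come first."""
    return sorted((r for r in rows if r[key] > 0), key=lambda r: r[key])
```

For example, a prediction that marks two pixels as pedestrian when only one of them is correct, and the image contains a single pedestrian pixel, gets a Dice score of 2/3 for that class.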

We can repeat this analysis with other classes of interest, such as bicycles or traffic lights.

This feature is a very good way of identifying images that aren’t labeled correctly and tagging them for re-annotation.

Conclusion

This post showcased the Weights & Biases MLOps platform, how to set up W&B in SageMaker Studio, and how to run an introductory notebook on the joint solution. We then ran through an autonomous vehicle semantic segmentation use case and demonstrated tracking training runs with W&B experiments, hyperparameter optimization using W&B sweeps, and interpreting results with W&B tables.

If you’re interested in learning more, you can access the live W&B report. To try Weights & Biases for free, sign up at Weights & Biases, or visit the W&B AWS Marketplace listing.

About the Authors

Thomas Capelle is a Machine Learning Engineer at Weights & Biases. He is responsible for keeping the www.github.com/wandb/examples repository live and up to date. He also builds content on MLOps, applications of W&B to industries, and fun deep learning in general. Previously he was using deep learning to solve short-term forecasting for solar energy. He has a background in Urban Planning, Combinatorial Optimization, Transportation Economics, and Applied Math.

Durga Sury is an ML Solutions Architect in the Amazon SageMaker Service SA team. She is passionate about making machine learning accessible to everyone. In her 3 years at AWS, she has helped set up AI/ML platforms for enterprise customers. When she isn’t working, she loves motorcycle rides, mystery novels, and hikes with her four-year-old husky.

Karthik Bharathy is the product leader for Amazon SageMaker with over a decade of product management, product strategy, execution and launch experience.
