Deep demand forecasting with Amazon SageMaker

Every business needs the ability to predict the future accurately in order to make better decisions and give the company a competitive advantage. With historical data, businesses can understand trends, make predictions of what might happen and when, and incorporate that information into their future plans, from product demand to inventory planning and staffing. If a forecast is too high, companies may over-invest in products and staff, which results in wasted investment. If the forecast is too low, companies may under-invest, which leads to a shortfall in raw materials and inventory, creating a poor customer experience.

Time series forecasting is a technique that predicts future values of a time series based on historical data. It is useful in multiple fields, including retail, finance, logistics, and healthcare. Demand forecasting uses historical time series data to estimate customer demand over a specific period and to streamline supply-demand decision-making across businesses. Demand forecasting use cases include predicting ticket sales in the transportation industry, stock prices, number of hospital visits, number of customer representatives to hire for multiple locations in the next month, product sales across multiple regions in the next quarter, cloud server usage for the next day for a video streaming service, electricity consumption for multiple regions over the next week, readings from IoT devices and sensors such as energy consumption, and more.

Time series data is categorized as univariate or multi-variate. For example, the total electricity consumption for a single household over a period of time is a univariate time series. When multiple univariate time series are stacked on each other, the result is called a multi-variate time series. For example, the total electricity consumption of 10 different (but correlated) households in a single neighborhood makes up a multi-variate time series dataset.
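The distinction can be illustrated with a minimal sketch. The household names and readings below are made up for illustration and are not taken from the electricity dataset used later in this post.

```python
# Hypothetical hourly readings (kWh) for three households; values are made up.
household_a = [1.2, 1.0, 0.9, 1.4]
household_b = [0.8, 0.7, 0.9, 1.1]
household_c = [2.1, 1.9, 2.0, 2.4]

# A univariate series is a single sequence of observations over time.
univariate = household_a

# Stacking time-aligned univariate series gives a multi-variate series:
# one row per series, one column per time step.
multivariate = [household_a, household_b, household_c]

num_series = len(multivariate)
num_steps = len(multivariate[0])

# Reading one time step across all series yields a vector-valued observation.
observation_at_t2 = [series[2] for series in multivariate]
```

The electricity dataset in this post follows the same layout, with 321 rows instead of three.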

Traditional approaches to time series forecasting include autoregressive integrated moving average (ARIMA) for univariate time series data and vector autoregression (VAR) for multi-variate time series data. These methods often require tedious data preprocessing and feature generation prior to model training. Deep learning (DL) methods address these challenges by automating the feature generation step, including data normalization, lags, multiple time scales, categorical data, and missing-value handling, while offering better prediction power and fast GPU-enabled training and deployment.

In this post, we show you how to deploy a demand forecasting solution using Amazon SageMaker JumpStart. We walk you through an end-to-end solution for a demand forecasting task using three state-of-the-art time series algorithms: LSTNet, Prophet, and SageMaker DeepAR, which are available in GluonTS and Amazon SageMaker. The input data is a multi-variate time series that includes hourly electricity consumption of 321 users from 2012–2014. Next, each algorithm takes the historical multi-variate and correlated time series data to train and produce accurate predictions (multi-variate values) over a prediction interval. For each of the time series algorithms, we have two outputs: a trained model on the hourly electricity consumption data and a SageMaker endpoint that can predict the future (multi-variate) values given a prediction interval.

Alternatively, if you are looking for a fully managed service to deliver highly accurate forecasts, without writing code, we recommend checking out Amazon Forecast. Amazon Forecast is a time-series forecasting service based on machine learning (ML) and built for business metrics analysis. Based on the same technology used at Amazon.com, Amazon Forecast uses machine learning to combine time series data with additional variables to build forecasts.

Solution overview

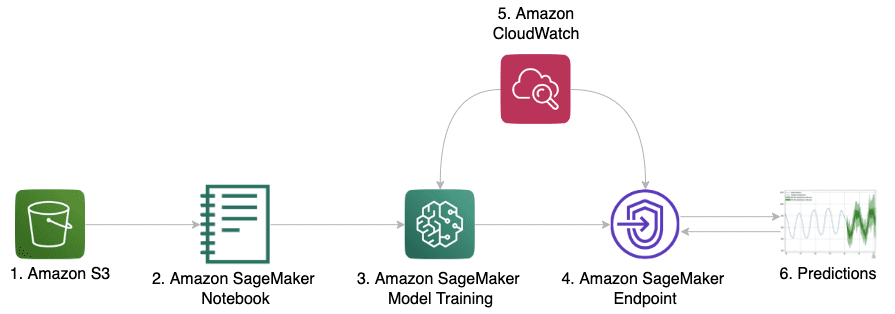

The following diagram shows the architecture for the end-to-end training and deployment process.

The solution workflow is as follows:

- The input data for training is located in an Amazon Simple Storage Service (Amazon S3) bucket.

- The provided SageMaker notebook gets the input data and launches the following steps.

- For each of the LSTNet, Prophet, and SageMaker DeepAR algorithms, train a model and evaluate its results using SageMaker.

- Deploy the trained model and create a SageMaker endpoint, which is an HTTPS endpoint that is capable of producing predictions.

- Monitor the model training and deployment via Amazon CloudWatch.

- The input data for inferencing is located in an S3 bucket. From the SageMaker notebook, send the requests to the SageMaker endpoint and make predictions.

Prerequisites

To try out the solution in your own account, make sure that you have the following in place:

- An AWS account to use this solution. If you don’t have an account, you can sign up for one.

- The solution outlined in this post is part of Amazon SageMaker JumpStart. To run this JumpStart 1P Solution and have the infrastructure deploy to your AWS account, you need to create an active Amazon SageMaker Studio instance (see Onboard to Amazon SageMaker Domain).

When the Studio instance is ready, you can launch Studio and access JumpStart. JumpStart features aren’t available in SageMaker notebook instances, and you can’t access them through SageMaker APIs or the AWS Command Line Interface (AWS CLI).

Launch the solution

To launch the solution, complete the following steps:

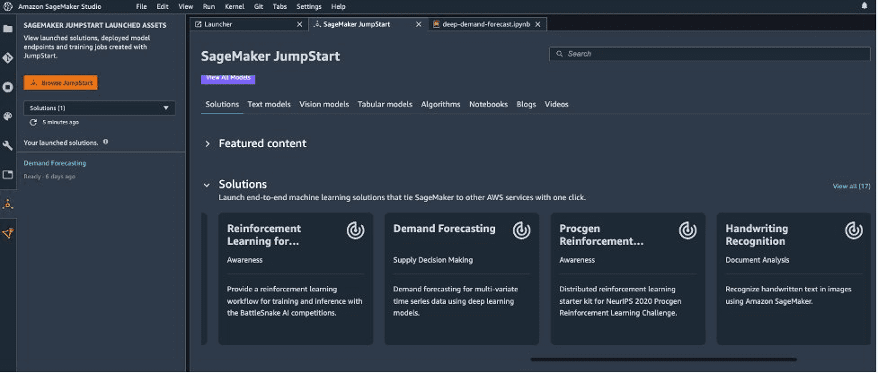

- Open JumpStart by using the JumpStart launcher in the Get Started section or by choosing the JumpStart icon in the left sidebar.

- In the Solutions section, choose Demand Forecasting to open the solution in another Studio tab.

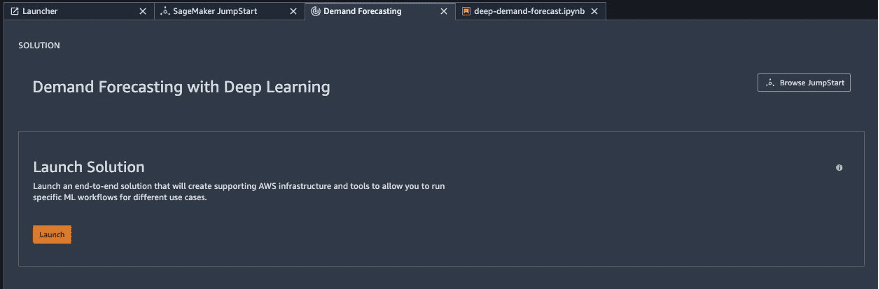

- On the Demand Forecasting tab, choose Launch to deploy the solution resources.

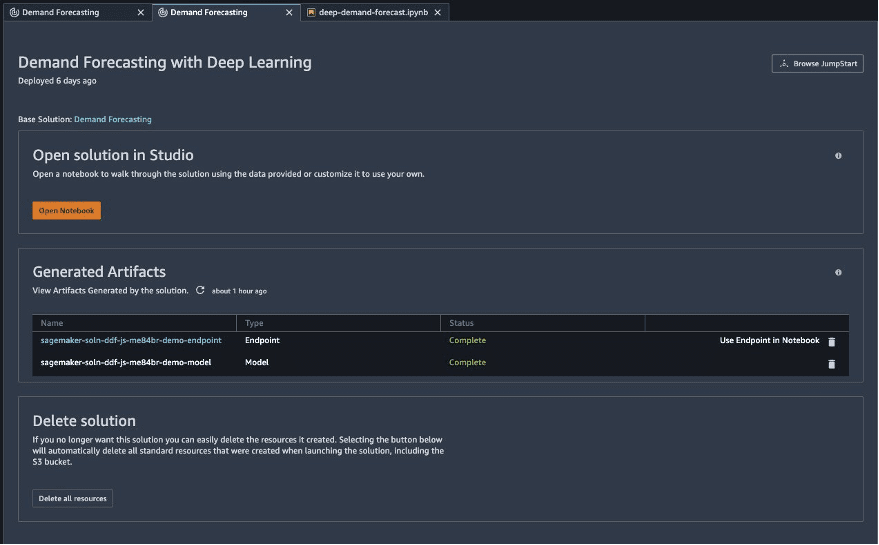

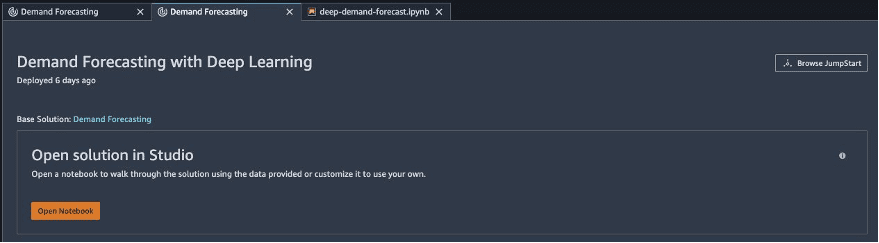

- Another tab opens showing the deploy status and the generated artifacts. When the deployment is finished, an Open Notebook button appears. Choose Open Notebook to open the solution notebook in Studio.

In the following sections, we walk you through the steps of the deep demand forecasting solution.

Data preparation and visualization

The dataset we use here is the multi-variate time series electricity consumption data taken from Dua, D. and Graff, C. (2019). UCI Machine Learning Repository, Irvine, CA: University of California, School of Information and Computer Science. We use a cleaned version of the data containing 321 time series with 1-hour frequency, starting from January 1, 2012 with 26,304 time-steps. We have also provided the exchange rate dataset in case you want to try the solution with another dataset.

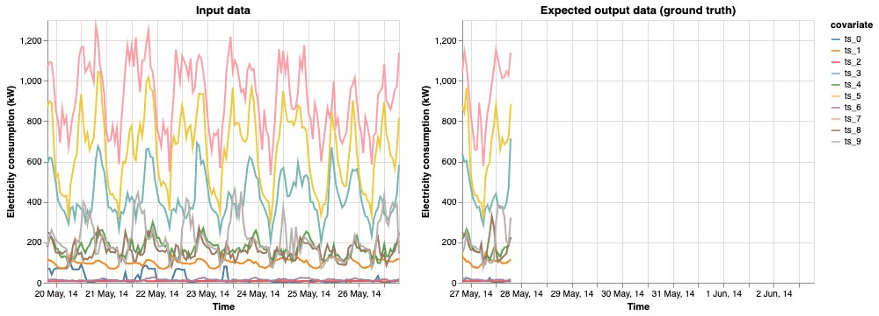

We have provided utilities for creating the dataframe from train and test data. The training data includes hourly electricity consumption values (for the 321 households) from 2012-01-01 00:00:00 to 2014-05-26 19:00:00, and the test data contains values from 2012-01-01 00:00:00 to 2014-06-02 19:00:00 (7 more days of hourly data compared to the training data). To train a time series forecasting model, the CONTEXT_LENGTH defines the length of each input time series, and PREDICTION_LENGTH defines the length of each output time series.
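As a minimal sketch of how these two constants shape a training example, the snippet below slices one series into a context window and the prediction window that follows it. The helper `split_window`, its `end` argument, and the toy series are hypothetical and are not part of the solution's utilities; only the two constant names and their values come from this post.

```python
CONTEXT_LENGTH = 168     # 7 days of hourly history given to the model
PREDICTION_LENGTH = 24   # the next 1 day the model is asked to forecast


def split_window(series, end,
                 context_length=CONTEXT_LENGTH,
                 prediction_length=PREDICTION_LENGTH):
    """Return (context, target), where the context stops just before index `end`."""
    if end - context_length < 0 or end + prediction_length > len(series):
        raise ValueError("window does not fit inside the series")
    context = series[end - context_length:end]
    target = series[end:end + prediction_length]
    return context, target


# Hypothetical series: 10 days of hourly values (0, 1, 2, ...).
series = list(range(240))
context, target = split_window(series, end=200)
```

Here `context` holds the 168 values before index 200 and `target` holds the 24 values starting at index 200, which mirrors the 7-day input and 1-day output described above.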

Because CONTEXT_LENGTH and PREDICTION_LENGTH are set to 168 (7 days) and 24 (the next 1 day), we plot the last 7 days of the training data and the subsequent 1 day of the testing data. The plotted training data and testing data are from 2014-05-19 20:00:00 to 2014-05-26 19:00:00, and from 2014-05-26 20:00:00 to 2014-05-27 02:00:00, respectively. For readability, we plot only 11 of the 321 time series, as shown in the following figure.

Train the models

This section demonstrates training an LSTNet model using GluonTS, a Prophet model using GluonTS, and a SageMaker DeepAR model, each with and without hyperparameter optimization (HPO). For each of these, we first trained the model without HPO, then we trained the model with HPO. We compare model performance with and without HPO using three metrics: RRSE (Root Relative Squared Error), MAPE (Mean Absolute Percentage Error), and sMAPE (symmetric Mean Absolute Percentage Error). For HPO, we use RRSE as the evaluation metric for all three algorithms.
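For reference, the three metrics can be sketched in plain Python as follows. These are common textbook definitions for a single series and are an assumption here: the solution's own evaluation code may aggregate across the 321 series differently, and sMAPE in particular has several variants in the literature. The sample values are made up.

```python
import math


def rrse(actual, predicted):
    """Root relative squared error: forecast error relative to a mean-only baseline."""
    mean_actual = sum(actual) / len(actual)
    squared_error = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    baseline_error = sum((a - mean_actual) ** 2 for a in actual)
    return math.sqrt(squared_error / baseline_error)


def mape(actual, predicted):
    """Mean absolute percentage error (undefined when an actual value is 0)."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def smape(actual, predicted):
    """Symmetric MAPE, using the 2*|a - p| / (|a| + |p|) variant."""
    return sum(
        2 * abs(a - p) / (abs(a) + abs(p)) for a, p in zip(actual, predicted)
    ) / len(actual)


# Hypothetical ground truth and forecast values.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 330.0, 360.0]

scores = {
    "RRSE": rrse(actual, predicted),
    "MAPE": mape(actual, predicted),
    "sMAPE": smape(actual, predicted),
}
```

An RRSE below 1 means the forecast beats a baseline that always predicts the mean of the actual values, which is one reason it is a convenient single objective for tuning.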

Train an optimal LSTNet model using GluonTS

LSTNet is a deep learning model that incorporates a traditional autoregressive linear model in parallel with the non-linear neural network part, which makes the model more robust for time series whose scale changes over time. For information on the mathematics behind LSTNet, see Modeling Long- and Short-Term Temporal Patterns with Deep Neural Networks.

We first train an LSTNet model without HPO. With the hyperparameters defined, we can run the training job. We use GluonTS with MXNet as the backend deep learning framework to define and train our LSTNet model. SageMaker makes it easy to do this with the framework estimators, which have the deep learning frameworks already set up. Here, we create a SageMaker MXNet estimator and pass in our model training script, hyperparameters, and the number and type of training instances we want.

Next, we train an optimal LSTNet model with HPO and further improve the model performance with SageMaker automatic model tuning. SageMaker automatic model tuning, also known as hyperparameter tuning, finds the best version of a model by running many training jobs on your dataset using the algorithm and ranges of hyperparameters that you specify. It then chooses the hyperparameter values that result in a model that performs the best, as measured by a metric that you choose. The best model and its corresponding hyperparameters are selected on the validation data from 2014-05-26 20:00:00 to 2014-06-01 19:00:00 (corresponding to 6 days). Next, we deploy the best model in an endpoint that we can query for prediction. Finally, the best model is evaluated on the holdout test data from 2014-06-01 20:00:00 to 2014-06-02 19:00:00 (corresponding to the next 1 day). The following table compares model performance.
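The split boundaries quoted above can be checked with a short date calculation. This is only a sketch of the arithmetic, using the timestamps stated in this post; the variable and helper names are hypothetical and do not come from the solution notebook.

```python
from datetime import datetime, timedelta

HOUR = timedelta(hours=1)
FMT = "%Y-%m-%d %H:%M:%S"

# Boundaries of the training and test data, as stated in this post.
train_start = datetime.strptime("2012-01-01 00:00:00", FMT)
train_end = datetime.strptime("2014-05-26 19:00:00", FMT)
test_end = datetime.strptime("2014-06-02 19:00:00", FMT)

# The 7 extra days in the test data are split into 6 validation days
# (used to select the best model) and 1 holdout day (used for the final evaluation).
validation_start = train_end + HOUR
validation_end = validation_start + timedelta(days=6) - HOUR
holdout_start = validation_end + HOUR
holdout_end = holdout_start + timedelta(days=1) - HOUR


def hours_inclusive(start, end):
    """Number of hourly time steps from start to end, counting both endpoints."""
    return int((end - start) / HOUR) + 1


validation_hours = hours_inclusive(validation_start, validation_end)
holdout_hours = hours_inclusive(holdout_start, holdout_end)
```

Under these boundaries, the holdout window ends exactly at the last timestamp of the test data, so the validation and holdout periods together account for the 7 additional days.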

| Metrics | LSTNet without HPO | LSTNet with HPO |
| --- | --- | --- |
| RRSE | 0.555 | 0.506 |
| MAPE | 0.318 | 0.301 |
| sMAPE | 0.337 | 0.323 |
| Training Time (minutes) | 10.780 | 57.242 |
| Inference Time (seconds) | 5.202 | 5.340 |

For RRSE, MAPE, and sMAPE, smaller values indicate better predictive performance (training and inference time are reported for reference only). The model trained with HPO improves on all three metrics relative to the model trained without HPO, for example reducing RRSE from 0.555 to 0.506, at the cost of a longer training time.

Train an optimal Prophet model using GluonTS with HPO

Prophet is an algorithm for forecasting time series data based on an additive model where non-linear trends are fit with yearly, weekly, and daily seasonality, plus holiday effects. It works best with time series that have strong seasonal effects and several seasons of historical data. Prophet is robust to missing data and shifts in the trend, and typically handles outliers well. To implement the Prophet algorithm, we use the GluonTS version, which is a thin wrapper for calling the fbprophet package. First, we train a Prophet model without HPO using a SageMaker Estimator. Next, we train an optimal Prophet model with SageMaker automatic model tuning (HPO) to further improve the model performance.

| Metrics | Prophet without HPO | Prophet with HPO |
| --- | --- | --- |
| RRSE | 0.183 | 0.147 |
| MAPE | 0.288 | 0.278 |
| sMAPE | 0.278 | 0.289 |
| Training Time (minutes) | – | 45.633 |
| Inference Time (seconds) | 44.813 | 45.327 |

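On the same test data, HPO tuning lowers RRSE (the tuning objective) from 0.183 to 0.147 and MAPE from 0.288 to 0.278, while sMAPE is slightly higher (0.289 versus 0.278). This indicates that HPO improves the model on the metric it optimizes, although not uniformly across all metrics.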

Train an optimal SageMaker DeepAR model with HPO

The SageMaker DeepAR forecasting algorithm is a supervised learning algorithm for forecasting scalar (one-dimensional) time series using recurrent neural networks (RNN). Classical forecasting methods, such as autoregressive integrated moving average (ARIMA) or exponential smoothing (ETS), fit a single model to each individual time series. They then use that model to extrapolate the time series into the future.

In many applications, however, you have many similar time series across a set of cross-sectional units. For example, you might have time series groupings for demand for different products, server loads, and requests for webpages. For this type of application, you can benefit from training a single model jointly over all of the time series. DeepAR takes this approach. When your dataset contains hundreds of related time series, DeepAR outperforms the standard ARIMA and ETS methods. You can also use the trained model to generate forecasts for new time series that are similar to the ones it has been trained on. For information on the mathematics behind DeepAR, see DeepAR: Probabilistic Forecasting with Autoregressive Recurrent Networks.

Similar to the settings in the previous models, we first train a DeepAR model without HPO. Next, we train an optimal DeepAR model with HPO. Then we deploy the best model in an endpoint that we can query for prediction. The following table compares model performance.
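To illustrate what querying the endpoint can look like, the sketch below builds a request body in the JSON request format documented for the SageMaker DeepAR built-in algorithm (an `instances` list plus a `configuration` object). The helper name `build_deepar_request`, the chosen quantiles, the number of samples, and the sample history are assumptions for illustration; the solution notebook may construct its requests differently.

```python
import json


def build_deepar_request(start, target,
                         quantiles=("0.1", "0.5", "0.9"),
                         num_samples=100):
    """Build a DeepAR inference request for a single time series.

    `start` is the timestamp of the first value in `target`, and `target`
    is the observed history that the forecast should continue from.
    """
    return {
        "instances": [
            {"start": start, "target": list(target)},
        ],
        "configuration": {
            "num_samples": num_samples,
            "output_types": ["mean", "quantiles"],
            "quantiles": list(quantiles),
        },
    }


# Hypothetical short history; a real request would carry the recent hourly values.
history = [12.0, 15.5, 14.2, 13.8]
request = build_deepar_request("2014-05-26 20:00:00", history)
payload = json.dumps(request)
```

The resulting `payload` string is what would typically be sent as the body of an `invoke_endpoint` call with content type `application/json`. Adding more entries to `instances` requests forecasts for several series in one call.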

| Metrics | DeepAR without HPO | DeepAR with HPO |
| --- | --- | --- |
| RRSE | 0.136 | 0.098 |
| MAPE | 0.087 | 0.099 |
| sMAPE | 0.104 | 0.116 |
| Training Time (minutes) | 24.048 | 210.530 |
| Inference Time (seconds) | 68.411 | 72.829 |

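On the same test data, HPO tuning lowers RRSE (the tuning objective) from 0.136 to 0.098, while MAPE and sMAPE are slightly higher with HPO (0.099 versus 0.087, and 0.116 versus 0.104). This indicates that HPO improves the model on the metric it optimizes, although not on every metric.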

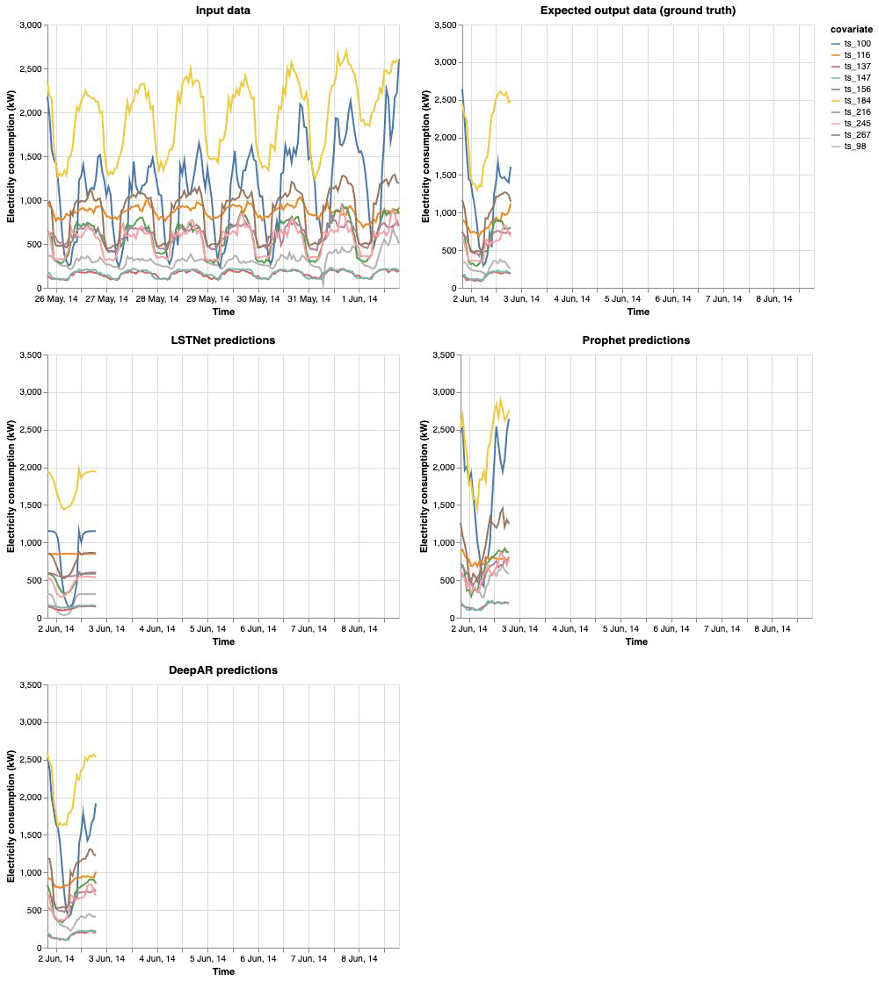

Evaluate model performance of all three algorithms on the same holdout test data

In this section, we compare the performance of the three models trained with HPO. The comparison depends on the input data and could vary for different datasets. The following table compares the three algorithms on the sample electricity data used in this post.

| Metrics | LSTNet with HPO | Prophet with HPO | DeepAR with HPO |
| --- | --- | --- | --- |
| RRSE | 0.506 | 0.147 | 0.098 |
| MAPE | 0.302 | 0.278 | 0.099 |
| sMAPE | 0.323 | 0.289 | 0.116 |
| Training Time (minutes) | 57.242 | 45.633 | 210.530 |
| Inference Time (seconds) | 5.340 | 45.327 | 72.829 |
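One simple way to read this table programmatically is to pick the algorithm with the lowest value for each row. The sketch below copies the numbers from the table above; the dictionary layout and the helper `best_by` are hypothetical and are not part of the solution code.

```python
# Values copied from the comparison table above (models trained with HPO).
results = {
    "LSTNet": {"RRSE": 0.506, "MAPE": 0.302, "sMAPE": 0.323,
               "train_minutes": 57.242, "inference_seconds": 5.340},
    "Prophet": {"RRSE": 0.147, "MAPE": 0.278, "sMAPE": 0.289,
                "train_minutes": 45.633, "inference_seconds": 45.327},
    "DeepAR": {"RRSE": 0.098, "MAPE": 0.099, "sMAPE": 0.116,
               "train_minutes": 210.530, "inference_seconds": 72.829},
}


def best_by(column):
    """Return the algorithm with the smallest value in the given column."""
    return min(results, key=lambda name: results[name][column])


best_per_metric = {m: best_by(m) for m in ("RRSE", "MAPE", "sMAPE")}
fastest_training = best_by("train_minutes")
fastest_inference = best_by("inference_seconds")
```

On this dataset, DeepAR has the lowest error on all three metrics but the longest training and inference times, while LSTNet has the fastest inference, so the best choice depends on how accuracy is weighed against cost and latency.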

The following figures visualize these results.

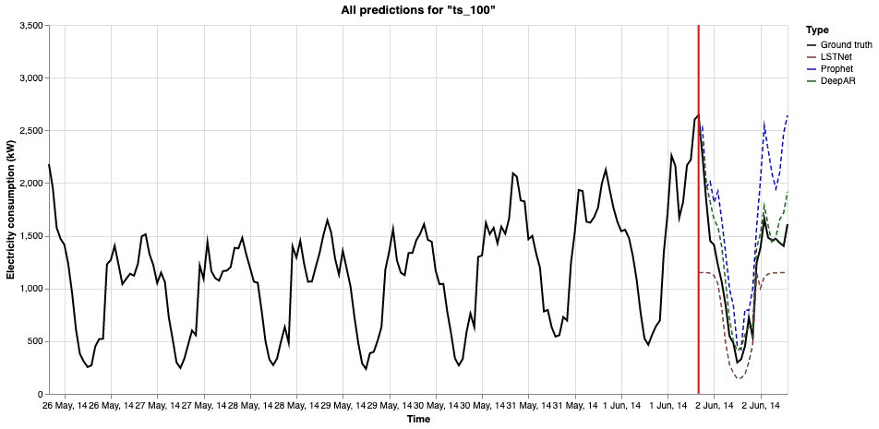

The following figure is another way to visualize the results.

The training and test data (ground truth) are shown as the black solid line (separated by the red vertical line) in the plot. The predictions from the different forecasting algorithms are shown as dashed lines. The closer a dashed line comes to the black solid line, the more accurate the predictions are.

Clean up

When you’re finished with this solution, make sure that you delete all unwanted AWS resources to avoid incurring unintended charges. The solution notebook provides cleanup code. On the solution tab, you can also choose Delete all resources in the Delete solution section.

Conclusion

In this post, we introduced an end-to-end solution for a demand forecasting task using three state-of-the-art time series algorithms: LSTNet, Prophet, and SageMaker DeepAR, which are available in GluonTS and SageMaker. We discussed three training approaches: training an optimal LSTNet model using GluonTS, training an optimal Prophet model using GluonTS, and training an optimal SageMaker DeepAR model with HPO. For each of these, we first trained the model without HPO, and then trained the model with HPO. We demonstrated how the model performance increases with HPO by comparing metrics, namely RRSE, MAPE, and sMAPE.

In this post, we used the electricity data as our input dataset. However, you can change the input and bring your own data to an S3 bucket. You can use that data to train the models and get different performance results and choose the best algorithm accordingly.

On the SageMaker console, open Studio and launch the solution in JumpStart to get started, or you can check out the solution’s GitHub repository to review the code and more information.

About the Authors

Alak Eswaradass is a Senior Solutions Architect at AWS based in Chicago, Illinois. She is passionate about helping customers design cloud architectures utilizing AWS services to solve business challenges. She has a Master’s degree in computer science engineering. Before joining AWS, she worked for different healthcare organizations, and she has in-depth experience architecting complex systems, technology innovation, and research. She hangs out with her daughters and explores the outdoors in her free time.

Dr. Xin Huang is an Applied Scientist for Amazon SageMaker JumpStart and Amazon SageMaker built-in algorithms. He focuses on developing scalable machine learning algorithms. His research interests are in the area of natural language processing, explainable deep learning on tabular data, and robust analysis of non-parametric space-time clustering.
