Identify rooftop solar panels from satellite imagery using Amazon Rekognition Custom Labels

Renewable resources like sunlight provide a sustainable, carbon-neutral way to generate power. Governments in many countries provide incentives and subsidies to households that install solar panels as part of small-scale renewable energy schemes, which has created huge demand for solar panels. For solar and energy companies, reaching potential customers at the right time, through the right channel, and with attractive offers is crucial. These companies are looking for cost-efficient approaches and tools for targeted marketing that proactively reaches potential customers. By identifying, at scale, the suburbs that have low coverage of solar panel installation, they can focus their marketing initiatives on those places and maximize the return on their marketing investment.

In this post, we discuss how you can identify solar panels on rooftops from satellite imagery using Amazon Rekognition Custom Labels.

The problem

High-resolution satellite imagery of urban areas provides an aerial view of rooftops. You can use these images to identify solar panel installations. However, automatically identifying solar panels with high accuracy, at low cost, and in a scalable way is challenging.

With rapid development in computer vision technology, several third-party tools use computer vision to analyze satellite images and identify objects (like solar panels) automatically. However, these tools are expensive and increase the overall cost of marketing. Many organizations have also successfully implemented state-of-the-art computer vision applications to identify the presence of solar panels on the rooftops from the satellite images.

But the reality is that you need to build your own data science teams that have the specific expertise and experience to build a production machine learning (ML) application for your specific use case. It generally takes months for teams to build a computer vision solution that they can use in production. This leads to an increased cost in building and maintaining such a system.

Is there a simpler and cost-effective solution that helps solar companies quickly build effective computer vision models without building a dedicated data science team for that purpose? Yes, Rekognition Custom Labels is the answer to this question.

Solution overview

Rekognition Custom Labels is a feature of Amazon Rekognition that takes care of the heavy lifting of computer vision model development for you, so no computer vision experience is required. You simply provide images with the appropriate labels, train the model, and deploy it, without having to build and fine-tune the model yourself. Rekognition Custom Labels can build highly accurate models from relatively few labeled images, which lets you focus on developing value-added products and applications for your customers.

In this post, we show how to label, train, and build a computer vision model to detect rooftops and solar panels from satellite images. We use Amazon Simple Storage Service (Amazon S3) for storing satellite images, Amazon SageMaker Ground Truth for labeling the images with the appropriate labels of interest, and Rekognition Custom Labels for model training and hosting. To test the model output, we use a Jupyter notebook to run Python code to detect custom labels in a supplied image by calling Amazon Rekognition APIs.

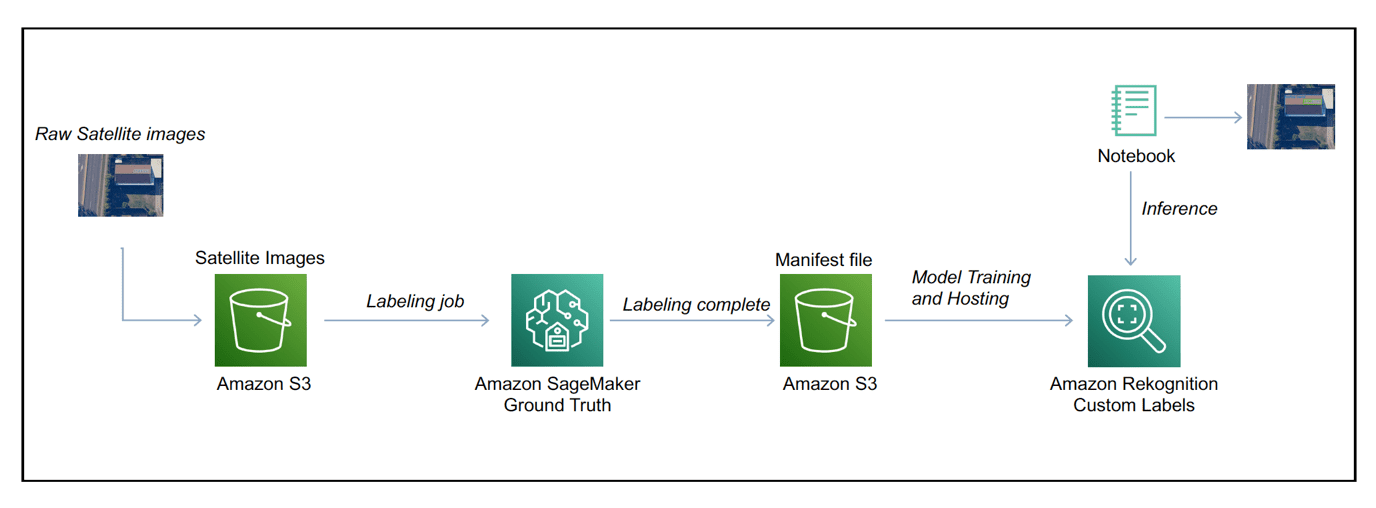

The following diagram illustrates the architecture using AWS services to label the images, and train and host the ML model.

The solution workflow is as follows:

- Store satellite imagery data in Amazon S3 as the input source.

- Use a Ground Truth labeling job to label the images.

- Use Amazon Rekognition to train the model with custom labels.

- Fine-tune the trained model.

- Start the model and analyze the image with the trained model using the Rekognition Custom Labels API.

Store satellite imagery data in Amazon S3 as an input source

The satellite images of rooftops with and without solar panels are captured from satellite imagery data providers and stored in an S3 bucket. For this post, we use images of New South Wales (NSW), Australia, provided by the Spatial Services, Department of Customer Service NSW. We took screenshots of the rooftops from this portal and stored those images in the source S3 bucket. These images are labeled using a Ground Truth labeling job, as explained in the next step.

Use a Ground Truth labeling job to label the images

Ground Truth is a fully managed data labeling service that makes it easy to build highly accurate training datasets for ML tasks. It offers three workforce options:

- Amazon Mechanical Turk, which uses a public workforce to label the data

- Private, which allows you to create a private workforce from internal teams

- Vendor, which uses third-party resources for the labeling task

In this example, we use a private workforce to perform the data labeling job. Refer to Use Amazon SageMaker Ground Truth to Label Data for instructions on creating a private workforce and configuring Ground Truth for a labeling job with bounding boxes.

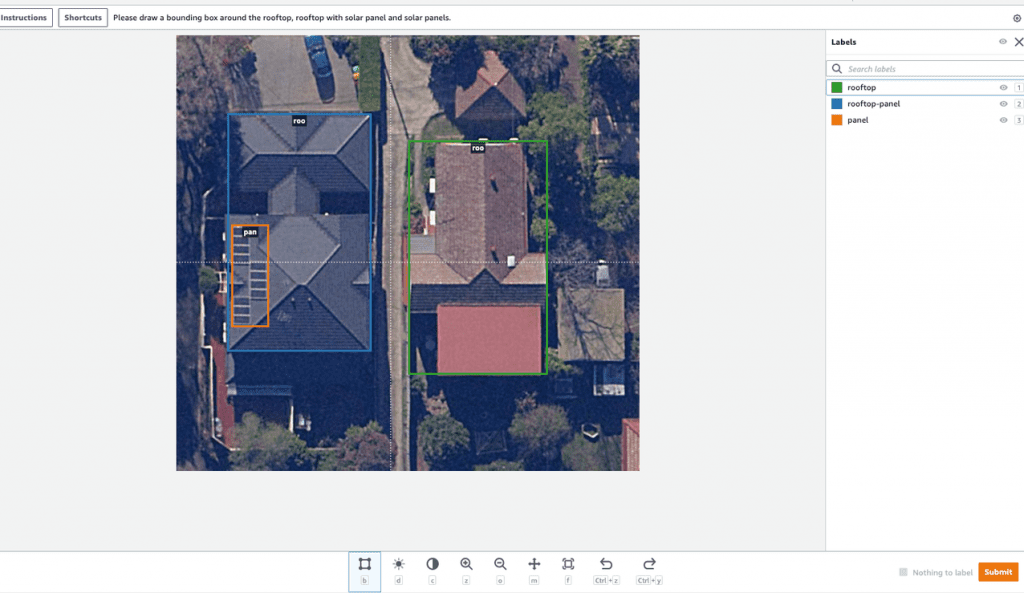

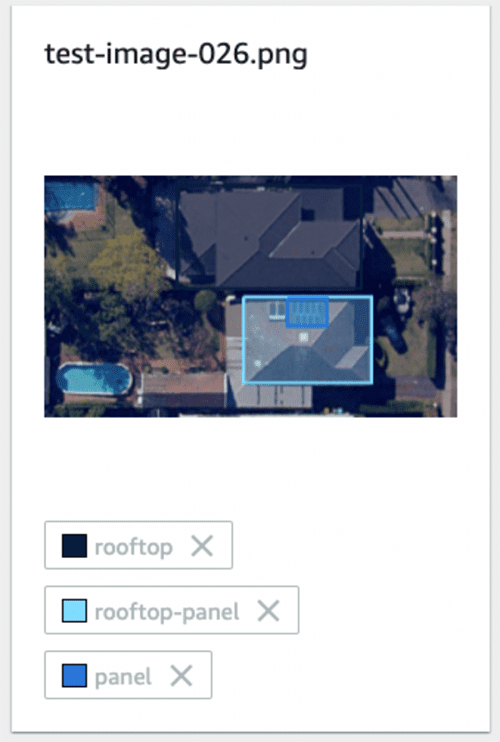

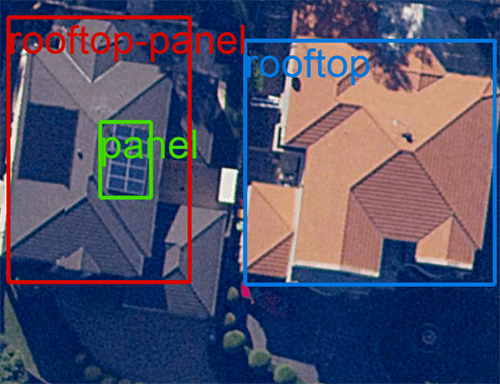

The following is an example image of the labeling job. The labeler can draw bounding boxes of the targets with the selected labels indicated by different colors. We used three labels on the images: rooftop, rooftop-panel, and panel to signify rooftops without solar panels, rooftops with solar panels, and just solar panels, respectively.

When the labeling job is complete, an output.manifest file is generated and stored in the S3 output location that you specified when creating the labeling job. Each line of the manifest file is a JSON object that describes the labeling output for one image.

The output manifest file is what we need for the Amazon Rekognition training job. In the next section, we provide step-by-step instructions to create a high-performance ML model to detect objects of interest.
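To make the manifest structure concrete, the following sketch parses one line in the shape that Ground Truth produces for bounding box jobs. The bucket, image name, label attribute name (`solar-panel-labels`), and all coordinates are invented for illustration and aren't taken from the labeling job in this post.

```python
import json

# Hypothetical manifest line; every value below is made up for illustration.
SAMPLE_LINE = json.dumps({
    "source-ref": "s3://example-bucket/images/rooftop-001.png",
    "solar-panel-labels": {
        "image_size": [{"width": 800, "height": 600, "depth": 3}],
        "annotations": [
            {"class_id": 0, "left": 120, "top": 80, "width": 200, "height": 150},
            {"class_id": 2, "left": 160, "top": 110, "width": 60, "height": 40},
        ],
    },
    "solar-panel-labels-metadata": {
        "class-map": {"0": "rooftop", "2": "panel"},
        "type": "groundtruth/object-detection",
        "human-annotated": "yes",
    },
})


def read_boxes(manifest_line, label_attribute):
    """Return (image URI, [(label name, left, top, width, height), ...])."""
    record = json.loads(manifest_line)
    # The class map translates numeric class IDs into label names.
    class_map = record[label_attribute + "-metadata"]["class-map"]
    boxes = []
    for ann in record[label_attribute]["annotations"]:
        name = class_map[str(ann["class_id"])]
        boxes.append((name, ann["left"], ann["top"], ann["width"], ann["height"]))
    return record["source-ref"], boxes


image_uri, boxes = read_boxes(SAMPLE_LINE, "solar-panel-labels")
print(image_uri)
for box in boxes:
    print(box)
```

In a real manifest, the label attribute name matches the name you gave the labeling job, so you would pass that name instead of the placeholder used here.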

Use Amazon Rekognition to train the model with custom labels

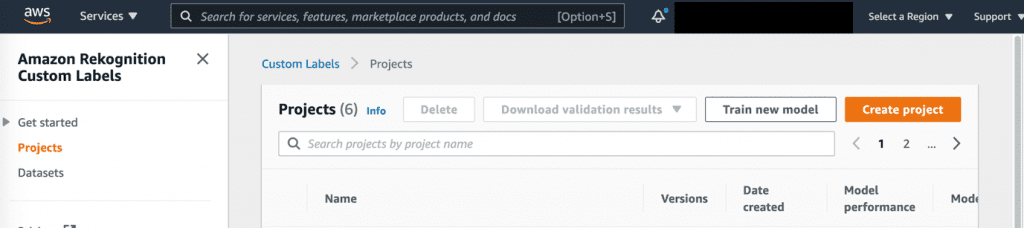

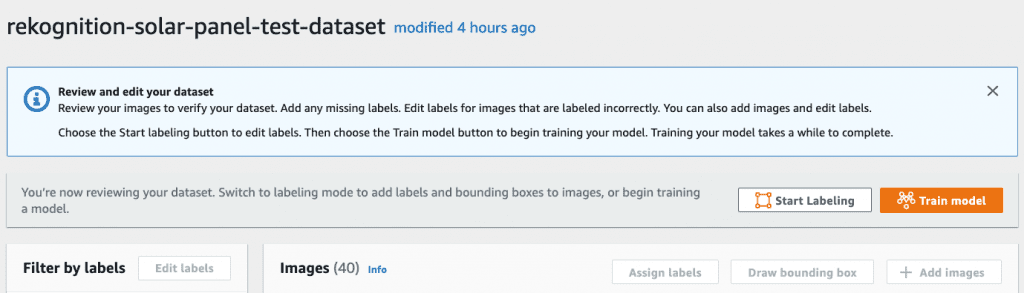

We now create a project for a custom object detection model, and provide the labeled images to Rekognition Custom Labels to train the model.

- On the Amazon Rekognition console, choose Use Custom Labels in the navigation pane.

- In the navigation pane, choose Projects.

- Choose Create project.

- For Project name, enter a unique name.

- Choose Create project.

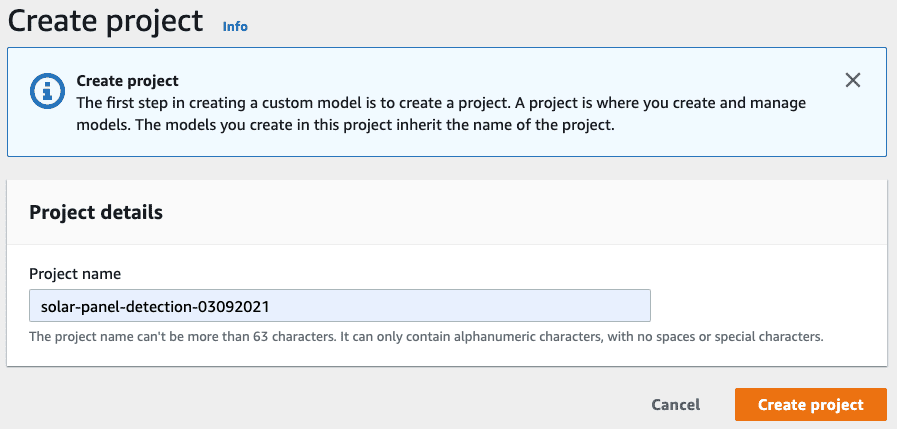

Next, we create a dataset for the training job.

- In the navigation pane, choose Datasets.

- Create a dataset based on the manifest file generated by the Ground Truth labeling job.

We’re now ready to train a new model.

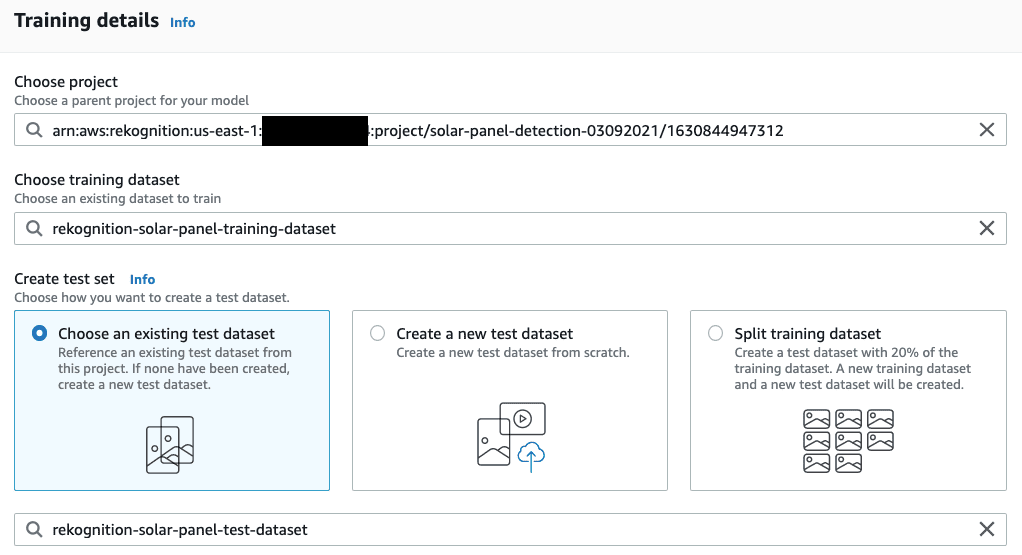

- Select the project you created and choose Train new model.

- Choose the training dataset you created and choose the test dataset.

- Choose Train to start training the model.
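The console steps above also have SDK counterparts. The following is a minimal sketch that uses the boto3 Rekognition client operations `create_project`, `create_dataset`, and `create_project_version`; it isn't the code used in this post. The function names and all arguments (project name, bucket, manifest keys, version name) are placeholders, and the client is passed in as a parameter so that the functions can be exercised without AWS credentials.

```python
def create_project_with_datasets(client, project_name, bucket,
                                 train_manifest_key, test_manifest_key):
    """Create a project and attach training and test datasets built from manifests."""
    project_arn = client.create_project(ProjectName=project_name)["ProjectArn"]
    for dataset_type, key in (("TRAIN", train_manifest_key),
                              ("TEST", test_manifest_key)):
        client.create_dataset(
            ProjectArn=project_arn,
            DatasetType=dataset_type,
            DatasetSource={
                "GroundTruthManifest": {"S3Object": {"Bucket": bucket, "Name": key}}
            },
        )
    return project_arn


def train_model(client, project_arn, version_name, output_bucket, output_prefix):
    """Start training a model version and return the ARN of the new version."""
    response = client.create_project_version(
        ProjectArn=project_arn,
        VersionName=version_name,
        OutputConfig={"S3Bucket": output_bucket, "S3KeyPrefix": output_prefix},
    )
    return response["ProjectVersionArn"]


# Usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   client = boto3.client("rekognition")
#   arn = create_project_with_datasets(client, "solar-rooftops", "example-bucket",
#                                      "train/output.manifest", "test/output.manifest")
#   train_model(client, arn, "v1", "example-bucket", "training-output/")
```

Dataset creation is asynchronous, so in practice you would wait for each dataset to finish creating (for example, by polling `describe_dataset`) before starting training.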

You can create a new test dataset or split the training dataset to use 20% of the training data as the test dataset and the remaining 80% as the training dataset. However, if you split the training dataset, the training and test datasets are randomly selected from the whole dataset every time you train a new model. In this example, we create a separate test dataset to evaluate the trained models.
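If you want an 80/20 split that stays the same between training runs, one option is to split the manifest yourself before creating the datasets. The following sketch does this with a fixed random seed. The function name, the seed value, and the stand-in manifest lines are arbitrary choices for illustration and aren't part of Rekognition Custom Labels.

```python
import random


def split_manifest(lines, test_fraction=0.2, seed=42):
    """Shuffle manifest lines with a fixed seed and return (train, test)."""
    shuffled = list(lines)
    # A seeded generator makes the shuffle, and so the split, repeatable.
    random.Random(seed).shuffle(shuffled)
    test_count = round(len(shuffled) * test_fraction)
    return shuffled[test_count:], shuffled[:test_count]


# Stand-in lines; a real manifest has one full JSON object per line.
manifest_lines = [
    f'{{"source-ref": "s3://example-bucket/img-{i}.png"}}' for i in range(10)
]
train, test = split_manifest(manifest_lines)
print(len(train), len(test))
```

Each half can then be written to its own manifest file and used to create the training and test datasets.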

We collected an additional 50 satellite images and labeled them using Ground Truth. We then used the output manifest file of this labeling job to create the test dataset.

This allows us to compare the evaluation metrics of different versions of the model that are trained based on different input datasets. The first training dataset consists of 160 images; the test dataset has 50 images.

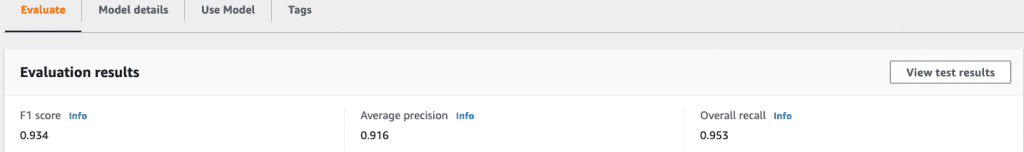

When the model training process is complete, we can access the evaluation metrics on the Evaluate tab on the model page. Our training job was able to achieve an F1 score of 0.934. The model evaluation metrics are reasonably good considering the number of training images we used and the number of images used for validating the model.
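For reference, the F1 score is the harmonic mean of precision and recall. The following sketch shows the calculation; the precision and recall values are illustrative only and aren't the values reported for this model.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Illustrative inputs, not measurements from this post.
print(round(f1_score(0.9, 0.8), 3))
```

Because it is a harmonic mean, the score is pulled toward the lower of the two inputs, so a model can't reach a high F1 score by excelling at only precision or only recall.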

Although the model evaluation metrics are reasonable, it’s important to understand which images the model incorrectly labels, so as to further fine-tune the model’s performance to make it more robust to handle real-world challenges. In the next section, we describe the process of evaluating the images that have inaccurate labels and retraining the model to achieve better performance.

Fine-tune the trained model

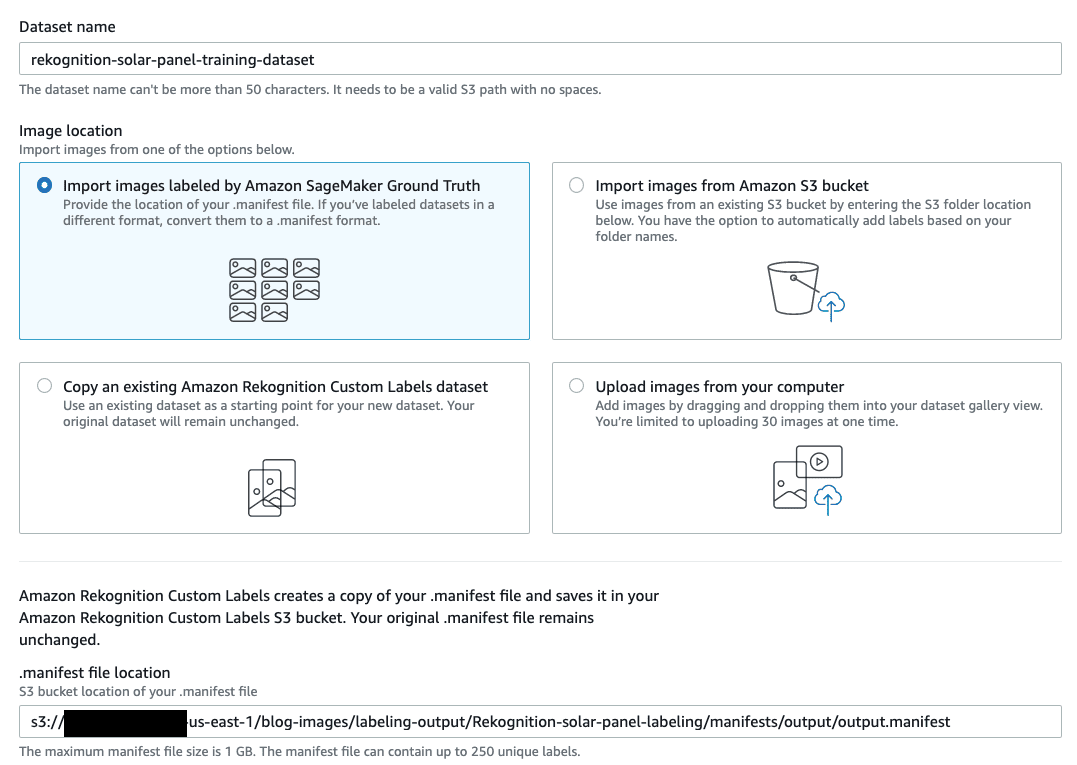

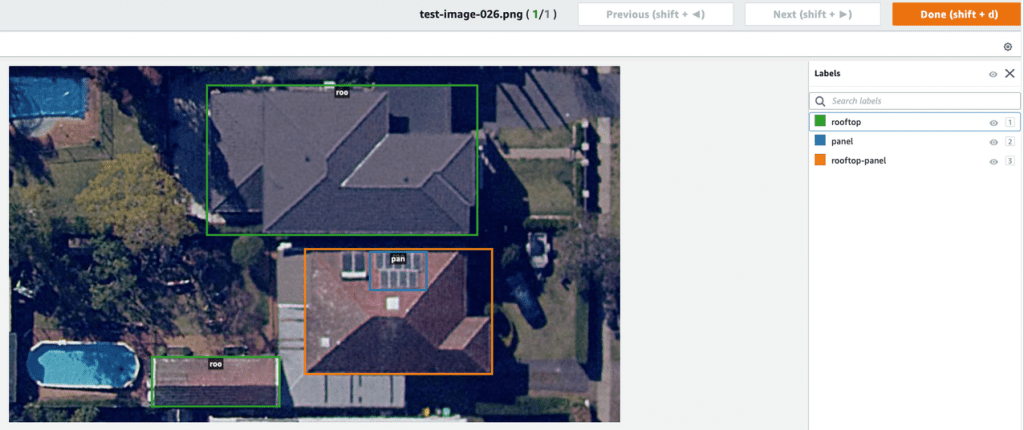

Evaluating incorrect labels inferred by the trained model is a crucial step to further fine-tune the model. To check the detailed test results, we can choose the training job and choose View test results to evaluate the images that the model inaccurately labeled. Evaluating the model performance on test data can help you identify any potential labeling or data source-related issues. For example, the following test image shows an example of false-positive labeling of a rooftop.

As the preceding image shows, the rooftop that the model identified is in fact correct: it's the rooftop of a smaller home built on the property. Based on the source image name, we can go back to the dataset to check the labels. We can do this via the Amazon Rekognition console. In the following screenshot, we can determine that the source image wasn't labeled correctly. The labeler missed labeling that rooftop.

To correct the labeling issue, we don’t need to rerun the Ground Truth job or run an adjustment job on the whole dataset. We can easily verify or adjust individual images via the Rekognition Custom Labels console.

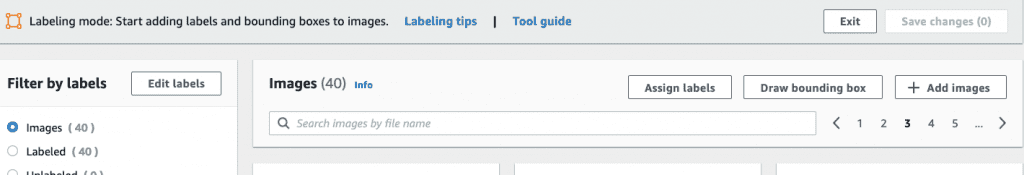

- On the dataset page, choose Start labeling.

- Select the image file that needs adjustment and choose Draw bounding box.

On the labeling page, we can draw or update the bounding boxes on this image.

- Draw the bounding box around the smaller building and label it as rooftop.

- Choose Done to save the changes or choose Next or Previous to navigate through additional images that require adjustments.

In some situations, you might have to provide more images with examples of the rooftops that the model failed to identify correctly. We can collect more images, label them, and retrain the model so that the model can learn the special cases of rooftops and solar panels.

To add more training images to the training dataset, you can create another Ground Truth job if the number of added images is large and you need a labeling workforce team to label them. When the labeling job is finished, we get a new manifest file, which contains the bounding box information for the newly added images. We then need to manually merge the manifest file of the newly added images with the existing manifest file. We use the combined manifest file to create a new training dataset on the Rekognition Custom Labels console and train a more robust model.
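Because each manifest line is an independent JSON object, the merge step can be scripted. The following is a minimal sketch that concatenates two manifests and drops repeated images. It assumes both labeling jobs used the same label attribute name and label set; the function name and sample lines are hypothetical.

```python
import json


def merge_manifests(existing_lines, new_lines):
    """Concatenate two manifests, keeping the first entry seen for each image."""
    merged, seen = [], set()
    for line in list(existing_lines) + list(new_lines):
        line = line.strip()
        if not line:
            continue  # skip blank lines, such as a trailing newline
        source = json.loads(line)["source-ref"]
        if source not in seen:
            seen.add(source)
            merged.append(line)
    return merged


# Stand-in lines with only the image reference; real lines also carry labels.
existing = [
    '{"source-ref": "s3://example-bucket/a.png"}',
    '{"source-ref": "s3://example-bucket/b.png"}',
]
added = [
    '{"source-ref": "s3://example-bucket/b.png"}',
    '{"source-ref": "s3://example-bucket/c.png"}',
    "",
]
combined = merge_manifests(existing, added)
print(len(combined))
```

Keeping the first entry means that labels from the existing manifest take precedence when an image appears in both files; reverse the argument order if the newer labels should win.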

Another option is to add the images directly to the current training dataset if the number of new images isn’t large and one person is sufficient to finish the labeling task on the Amazon Rekognition console. In this project, we directly add another 30 images to the original training dataset and perform labeling on the console.

After we complete the label verification and add more images of different rooftop and panel types, we have a second model trained with 190 training images that we evaluate on the same test dataset. The second version of the trained model achieved an F1 score of 0.964, which is an improvement from the earlier score of 0.934. Based on your business requirement, you can further fine-tune the model.

Deploy the model and analyze the images using the Rekognition Custom Labels API

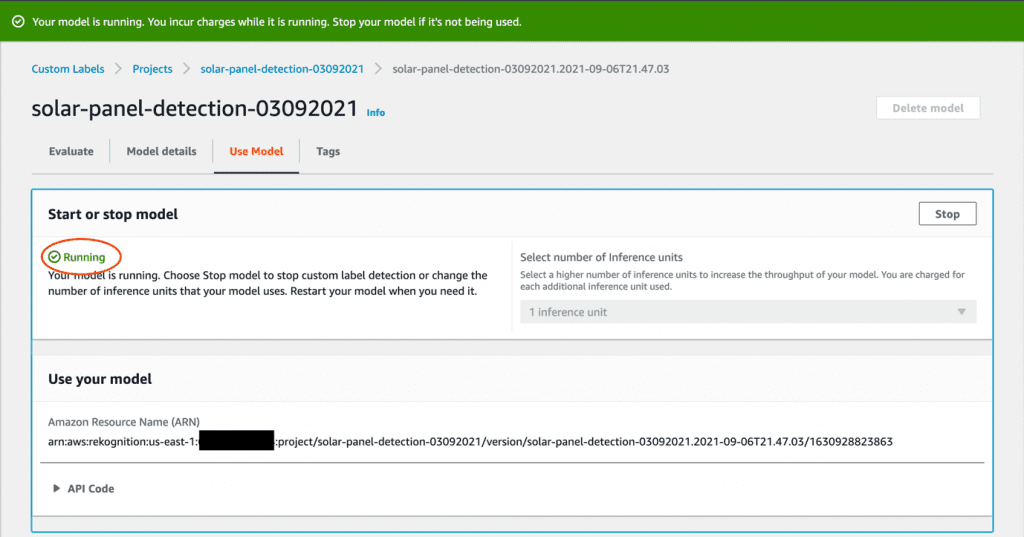

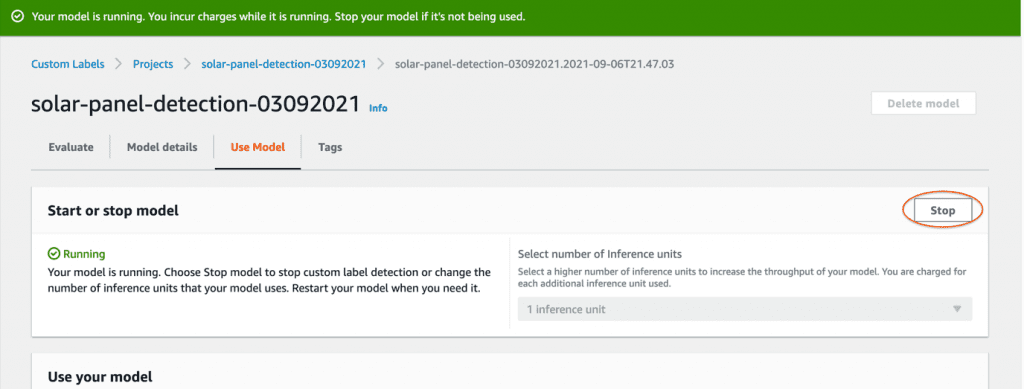

Now that we have trained a model with satisfactory evaluation results, we deploy the model to an endpoint for real-time inference. We analyze a few images using Python code via the Amazon Rekognition API on this deployed model. After you start the model, its status shows as Running.
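Starting the model can also be done through the SDK. The following sketch calls the boto3 Rekognition operations `start_project_version` and `describe_project_versions` and polls until the model leaves the STARTING state. It is an illustration under assumptions rather than the sample code from the console: the function name, polling interval, and retry limit are arbitrary, and the client is passed in so the function can be tested without AWS access.

```python
import time


def start_model(client, project_arn, version_name, model_arn,
                min_inference_units=1, poll_seconds=30, max_polls=60):
    """Start a model version and return its last observed status."""
    client.start_project_version(
        ProjectVersionArn=model_arn, MinInferenceUnits=min_inference_units
    )
    status = "STARTING"
    for _ in range(max_polls):
        descriptions = client.describe_project_versions(
            ProjectArn=project_arn, VersionNames=[version_name]
        )["ProjectVersionDescriptions"]
        status = descriptions[0]["Status"]
        if status != "STARTING":
            break  # typically RUNNING on success or FAILED on error
        time.sleep(poll_seconds)
    return status


# Usage (requires boto3 and AWS credentials; ARNs are placeholders):
#   import boto3
#   client = boto3.client("rekognition")
#   print(start_model(client, "<project ARN>", "<version name>", "<model ARN>"))
```

The function returns whatever status it last observed, so the caller should check for `RUNNING` before sending images for analysis.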

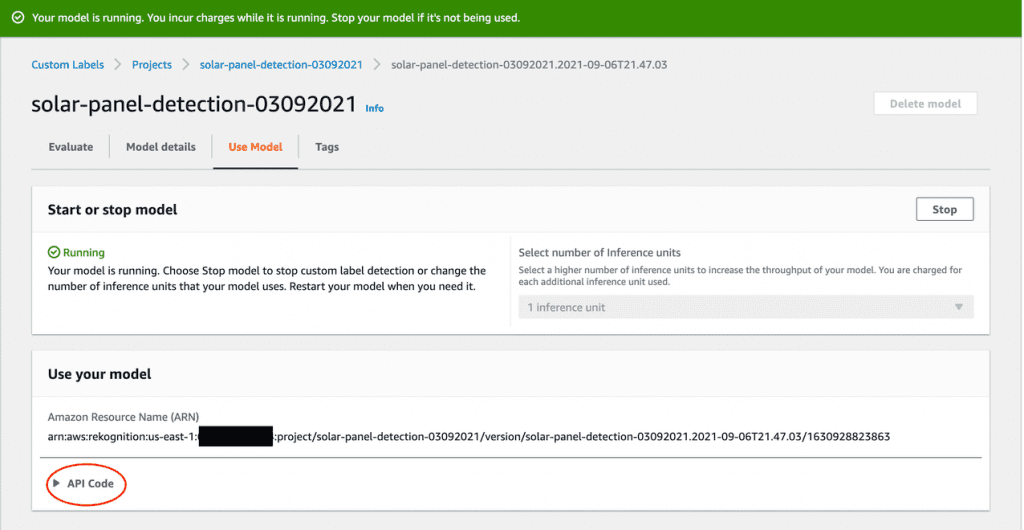

Now the model is ready to detect the labels on the new satellite images. We can test the model by running the provided sample Python API code. On the model page, choose API Code.

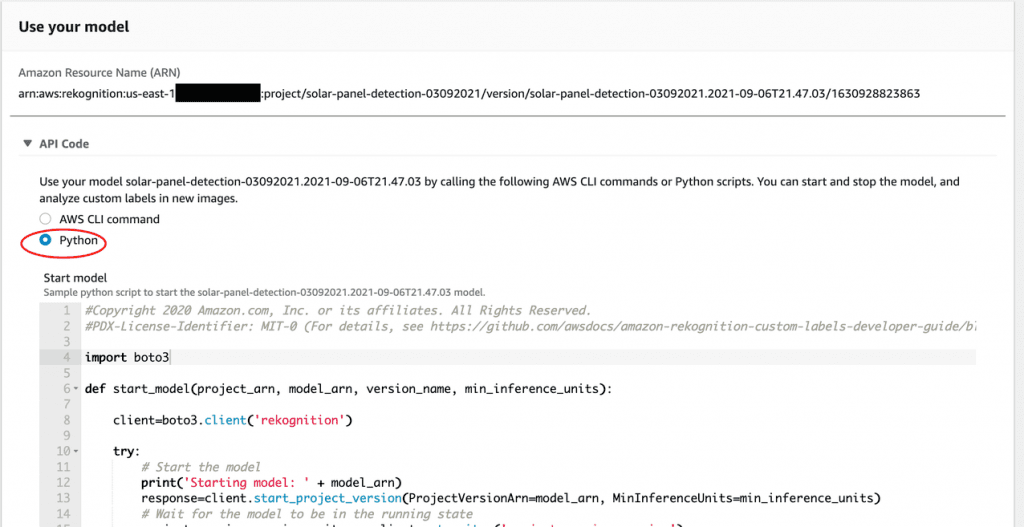

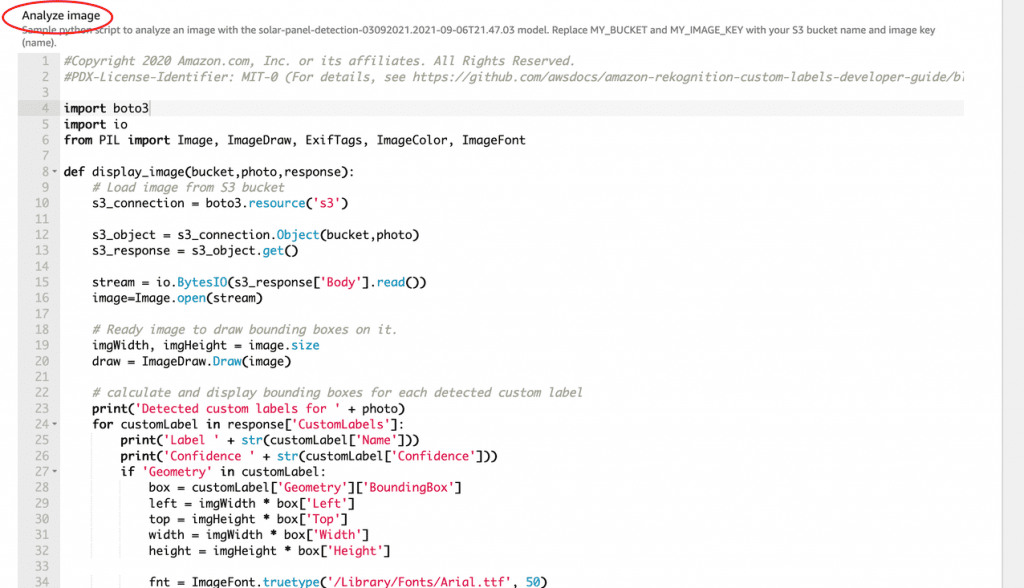

Select Python to review the sample code to start the model, analyze images, and stop the model.

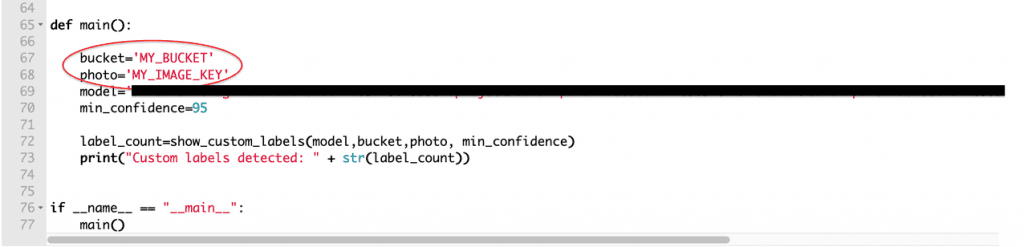

Copy the Python code in the Analyze image section into a Jupyter notebook, which can run on your laptop.

To set up the environment to run the code, we need to install the AWS SDKs that we want to use and configure the security credentials to access the AWS resources. For instructions, refer to Set Up the AWS CLI and AWS SDKs.

Upload a test image to an S3 bucket. In the Analyze image Python code, substitute the variable MY_BUCKET with the bucket name that has the test image and replace MY_IMAGE_KEY with the file name of the test image.
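For orientation, the core of the analysis is a single call to the `detect_custom_labels` operation. The following sketch isn't the console's sample code; it shows the call and how the returned bounding boxes, which are expressed as ratios of the image size, can be converted to pixel coordinates. The function names, the confidence threshold, and the image dimensions in the usage comment are assumptions.

```python
def detect_labels(client, model_arn, bucket, image_key, min_confidence=50):
    """Run the deployed model on an image stored in S3 and return its labels."""
    response = client.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": image_key}},
        MinConfidence=min_confidence,
    )
    return response["CustomLabels"]


def to_pixel_box(bounding_box, image_width, image_height):
    """Convert a ratio-based bounding box to pixel (left, top, right, bottom)."""
    left = bounding_box["Left"] * image_width
    top = bounding_box["Top"] * image_height
    right = left + bounding_box["Width"] * image_width
    bottom = top + bounding_box["Height"] * image_height
    return round(left), round(top), round(right), round(bottom)


# Usage (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   client = boto3.client("rekognition")
#   for label in detect_labels(client, "<model ARN>", "MY_BUCKET", "MY_IMAGE_KEY"):
#       box = label["Geometry"]["BoundingBox"]
#       print(label["Name"], label["Confidence"], to_pixel_box(box, 800, 600))
```

Each returned label carries a `Name`, a `Confidence` score, and, for object detection models, a `Geometry` entry that contains the bounding box.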

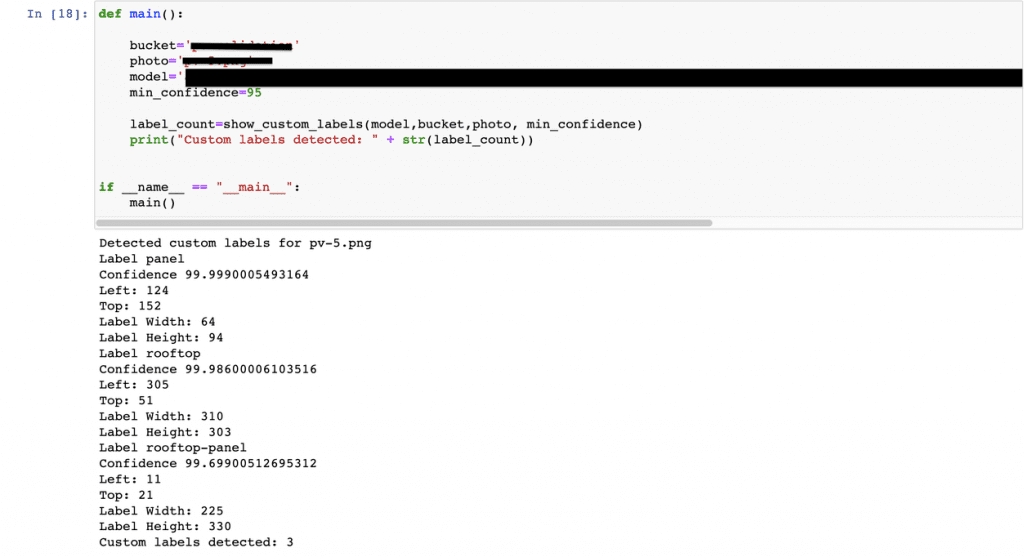

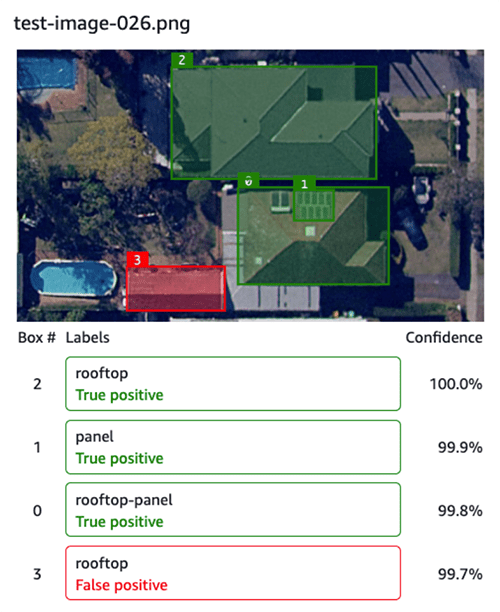

The following screenshot shows a sample response of running the Python code.

The following output image shows that the model has successfully detected three labels: rooftop, rooftop-panel, and panel.
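To connect the detections back to the marketing use case, the labels can be aggregated into a rough coverage figure. The following sketch is only one way to do it: it assumes that each `rooftop` detection is a house without panels and each `rooftop-panel` detection is a house with panels, as the labels are defined in this post. The function name, threshold, and sample detections are made up.

```python
from collections import Counter


def panel_coverage(custom_labels, min_confidence=50.0):
    """Return the share of detected rooftops that have panels, or None if no rooftops."""
    counts = Counter(
        label["Name"]
        for label in custom_labels
        if label["Confidence"] >= min_confidence
    )
    with_panels = counts["rooftop-panel"]
    without_panels = counts["rooftop"]
    total = with_panels + without_panels
    return with_panels / total if total else None


# Made-up detections in the shape of the CustomLabels response field.
sample_labels = [
    {"Name": "rooftop", "Confidence": 92.1},
    {"Name": "rooftop-panel", "Confidence": 88.0},
    {"Name": "panel", "Confidence": 75.5},
    {"Name": "rooftop", "Confidence": 40.0},  # below the threshold, ignored
    {"Name": "rooftop", "Confidence": 81.3},
]
print(panel_coverage(sample_labels))
```

Running this over all images for a suburb and comparing the results gives a simple ranking of areas by solar panel coverage.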

Clean up

After testing, we can stop the model to avoid incurring unnecessary charges.
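Stopping the model is a single SDK call as well. The following sketch wraps the boto3 `stop_project_version` operation; the function name is hypothetical and the model ARN is a placeholder.

```python
def stop_model(client, model_arn):
    """Request that a running model version be stopped and return its status."""
    response = client.stop_project_version(ProjectVersionArn=model_arn)
    return response["Status"]


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   client = boto3.client("rekognition")
#   print(stop_model(client, "<model ARN>"))
```

The call returns before the model has fully stopped, so the status it reports is usually a transitional one.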

Conclusion

In this post, we showed you how to detect rooftops and solar panels from the satellite imagery by building custom computer vision models with Rekognition Custom Labels. We demonstrated how Rekognition Custom Labels manages the model training by taking care of the deep learning complexities behind the scenes. We also demonstrated how to use Ground Truth to label the training images at scale. Furthermore, we discussed mechanisms to improve model accuracy by correcting the labeling of the images on the fly and retraining the model with the dataset. Power utility companies can use this solution to detect houses without solar panels to send offers and promotions to achieve efficient targeted marketing.

To learn more about how Rekognition Custom Labels can help your business, visit Amazon Rekognition Custom Labels or contact AWS Sales.

About the Authors

Melanie Li is a Senior AI/ML Specialist TAM at AWS based in Sydney, Australia. She helps enterprise customers to build solutions leveraging the state-of-the-art AI/ML tools on AWS and provides guidance on architecting and implementing machine learning solutions with best practices. In her spare time, she loves to explore nature outdoors and spend time with family and friends.

Santosh Kulkarni is a Solutions Architect at Amazon Web Services. He works closely with enterprise customers to accelerate their Cloud journey. He is also passionate about building large-scale distributed applications to solve business problems using his knowledge in Machine Learning, Big Data, and Software Development.

Dr. Baichuan Sun is a Senior Data Scientist at AWS AI/ML. He is passionate about solving strategic business problems with customers using data-driven methodology on the cloud, and he has been leading projects in challenging areas including robotics computer vision, time series forecasting, price optimization, predictive maintenance, pharmaceutical development, product recommendation system, etc. In his spare time he enjoys traveling and hanging out with family.
