Tips to improve your Amazon Rekognition Custom Labels model

In this post, we discuss best practices to improve the performance of your computer vision models using Amazon Rekognition Custom Labels. Rekognition Custom Labels is a fully managed service to build custom computer vision models for image classification and object detection use cases. Rekognition Custom Labels builds on the pre-trained models in Amazon Rekognition, which are already trained on tens of millions of images across many categories. Instead of thousands of images, you can get started with a small set of training images (a few hundred or fewer) that are specific to your use case. Rekognition Custom Labels abstracts away the complexity involved in building a custom model. It automatically inspects the training data, selects the right ML algorithms, selects the instance type, trains multiple candidate models with various hyperparameter settings, and outputs the best trained model. Rekognition Custom Labels also provides an easy-to-use interface from the AWS Management Console for managing the entire ML workflow, including labeling images, training the model, deploying the model, and visualizing the test results.

There are times when a model’s accuracy isn’t as high as you need, and you don’t have many options to adjust the configuration parameters of the model. Behind the scenes, multiple factors play a key role in building a high-performing model, such as the following:

- Picture angle

- Image resolution

- Image aspect ratio

- Light exposure

- Clarity and vividness of background

- Color contrast

- Sample data size

The general steps to train a production-grade Rekognition Custom Labels model are as follows:

1. Review taxonomy – This defines the list of attributes or items that you want to identify in an image.

2. Collect relevant data – This is the most important step, where you collect images that resemble what you would see in a production environment. This could involve images of objects with varying backgrounds, lighting, or camera angles. You then create training and testing datasets by splitting the collected images. Include only real-world images in the testing dataset, and don’t include any synthetically generated images. Annotations of the data you collected are crucial for the model performance. Make sure the bounding boxes are tight around the objects and the labels are accurate. We discuss some tips that you can consider when building an appropriate dataset later in this post.

3. Review training metrics – Use the preceding datasets to train a model and review the training metrics for F1 score, precision, and recall. We discuss how to analyze the training metrics later in this post.

4. Evaluate the trained model – Use a set of unseen images (not used for training the model) with known labels to evaluate the predictions. Always perform this step to make sure that the model performs as expected in a production environment.

5. Re-training (optional) – Training any machine learning model is generally an iterative process, and a computer vision model is no different. Review the results from Step 4 to see whether more images need to be added to the training data, and repeat Steps 3–5 as needed.

In this post, we focus on the best practices around collecting relevant data (Step 2) and evaluating your trained metrics (Step 3) to improve your model performance.

Collect relevant data

This is the most critical stage of training a production-grade Rekognition Custom Labels model. Specifically, there are two datasets: training and testing. Training data is used for training the model, and you need to spend the effort building an appropriate training set. Rekognition Custom Labels models are optimized for F1 score on the testing dataset to select the most accurate model for your project. Therefore, it’s essential to curate a testing dataset that resembles the real world.
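As a minimal sketch of splitting collected images into training and testing datasets, the following example holds out a fraction of each label so that every label appears in both sets. The function name `stratified_split`, the `(image_id, label)` input shape, and the 20% test fraction are assumptions made for illustration, not part of the service. Apply it only to real-world images, so that no synthetic images end up in the testing dataset.

```python
import random
from collections import defaultdict


def stratified_split(labeled_images, test_fraction=0.2, seed=0):
    """Split (image_id, label) pairs into train and test lists, label by label."""
    by_label = defaultdict(list)
    for image_id, label in labeled_images:
        by_label[label].append(image_id)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label, image_ids in sorted(by_label.items()):
        rng.shuffle(image_ids)
        # Hold out at least one image per label so every label is tested.
        n_test = max(1, round(len(image_ids) * test_fraction))
        test += [(image_id, label) for image_id in image_ids[:n_test]]
        train += [(image_id, label) for image_id in image_ids[n_test:]]
    return train, test


# Hypothetical file names, used only to exercise the function.
images = [(f"living_room_{n}.jpg", "living_room") for n in range(20)]
images += [(f"bedroom_{n}.jpg", "bedroom") for n in range(20)]
train, test = stratified_split(images)
print(len(train), len(test))
```

Splitting per label rather than over the whole pool keeps rare labels from disappearing from the testing dataset by chance.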

Number of images

We recommend having a minimum of 15–20 images per label. Having more images, with more variations that reflect your use case, improves model performance.

Balanced dataset

Ideally, each label in the dataset should have a similar number of samples. There shouldn’t be a massive disparity in the number of images per label. For example, a dataset with 1,000 images for one label and 50 images for another is imbalanced. We recommend avoiding a lopsided ratio of 1:50 between the label with the fewest images and the label with the most images.
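The image-count and balance guidance above can be checked before training. The following sketch assumes you can produce a flat list with one label name per image; the function name `check_label_balance` and the two constants are illustrative choices that encode the numbers from this post.

```python
from collections import Counter

MIN_IMAGES_PER_LABEL = 15  # lower end of the 15-20 images recommended above
MAX_IMBALANCE_RATIO = 50   # the 1:50 ratio this post advises against


def check_label_balance(labels):
    """Summarize per-label image counts for a list with one label per image."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must contain at least one entry")
    ratio = max(counts.values()) / min(counts.values())
    return {
        "counts": dict(counts),
        "under_minimum": sorted(
            label for label, n in counts.items() if n < MIN_IMAGES_PER_LABEL
        ),
        "imbalance_ratio": ratio,
        "too_imbalanced": ratio >= MAX_IMBALANCE_RATIO,
    }


# Hypothetical dataset: two well-populated labels and one sparse label.
labels = ["living_room"] * 1000 + ["bedroom"] * 50 + ["patio"] * 10
report = check_label_balance(labels)
print(report["under_minimum"], report["imbalance_ratio"], report["too_imbalanced"])
```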

Varying types of images

Include images in the training and test datasets that resemble what you will be using in the real world. For example, if you want to classify images of living rooms vs. bedrooms, you should include empty and furnished images of both rooms.

The following is an example image of a furnished living room.

In contrast, the following is an example of an unfurnished living room.

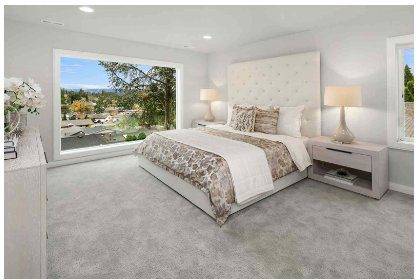

The following is an example image of a furnished bedroom.

The following is an example image of an unfurnished bedroom.

Varying backgrounds

Include images with different backgrounds. Images with natural context can provide better results than images with a plain background.

The following is an example image of the front yard of a house.

The following is an example image of the front yard of a different house with a different background.

Varying lighting conditions

Include images with varying lighting so that they cover the different lighting conditions that occur during inference (for example, with and without flash). You can also include images with varying saturation, hue, and brightness.

The following is an example image of a flower under normal light.

In contrast, the following image is of the same flower under bright light.

Varying angles

Include images taken from various angles of the object. This helps the model learn different characteristics of the objects.

The following images are of the same bedroom from different angles.

There could be occasions where it’s not possible to acquire images of varying types. In those scenarios, synthetic images can be generated as part of the training dataset. For more information about common image augmentation techniques, refer to Data Augmentation.

Add negative labels

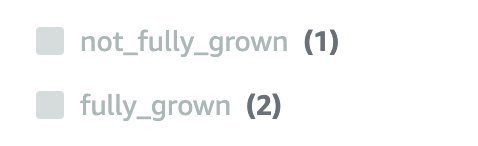

For image classification, adding negative labels can help increase model accuracy. For example, you can add a negative label, which doesn’t match any of the required labels. The following image represents the different labels used to identify fully grown flowers.

Adding the negative label not_fully_grown helps the model learn characteristics that aren’t part of the fully_grown label.

Handling label confusion

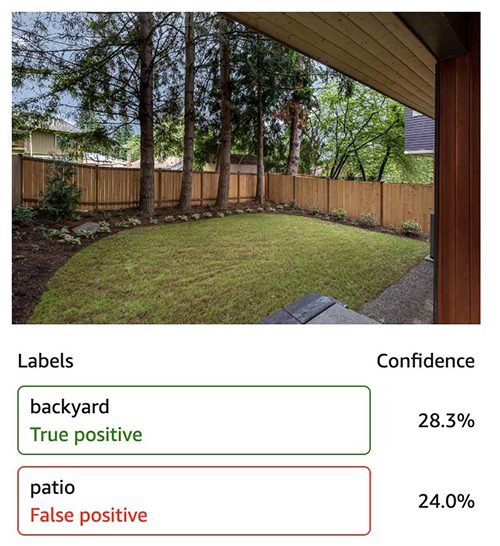

Analyze the results on the test dataset to recognize any patterns that are missed in the training or testing dataset. Sometimes it’s easy to spot such patterns by visually examining the images. In the following image, the model is struggling to resolve between a backyard vs. patio label.

In this scenario, adding more images to these labels in the dataset and also redefining the labels so that each label is distinct can help increase the accuracy of the model.
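Besides visual inspection, counting which label pairs get mixed up can point to the labels that need more images or a sharper definition. The following is a small sketch that assumes you have collected the expected and predicted label for each test image yourself; `most_confused_pairs` is a hypothetical helper, not a Rekognition Custom Labels feature.

```python
from collections import Counter


def most_confused_pairs(true_labels, predicted_labels, top_n=3):
    """Return the most frequent (expected, predicted) mismatches."""
    confusions = Counter(
        (expected, predicted)
        for expected, predicted in zip(true_labels, predicted_labels)
        if expected != predicted
    )
    return confusions.most_common(top_n)


# Made-up results for six test images.
true_labels = ["backyard", "backyard", "patio", "patio", "front_yard", "backyard"]
predicted = ["patio", "patio", "backyard", "patio", "front_yard", "backyard"]
print(most_confused_pairs(true_labels, predicted))
# [(('backyard', 'patio'), 2), (('patio', 'backyard'), 1)]
```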

Data augmentation

Inside Rekognition Custom Labels, we perform various data augmentations for model training, including random cropping of the image, color jittering, random Gaussian noise, and more. Based on your specific use cases, it might also be beneficial to add more explicit data augmentations to your training data. For example, if you’re interested in detecting animals in both color and black and white images, you could potentially get better accuracy by adding black and white and color versions of the same images to the training data.

We don’t recommend augmentations on testing data unless the augmentations reflect your production use cases.
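To illustrate the black and white example, the following sketch converts RGB pixels to gray using the common ITU-R BT.601 luma weights. It works on plain rows of `(r, g, b)` tuples so that it has no dependencies; in practice you would more likely use an imaging library to read and write the files. The function name `to_grayscale` is an assumption for this example, and the output is intended for the training dataset only.

```python
def to_grayscale(pixels):
    """Convert rows of (r, g, b) tuples into rows of gray (y, y, y) tuples."""
    gray_rows = []
    for row in pixels:
        gray_row = []
        for r, g, b in row:
            # Integer form of the BT.601 weights 0.299, 0.587, and 0.114.
            y = (299 * r + 587 * g + 114 * b) // 1000
            gray_row.append((y, y, y))
        gray_rows.append(gray_row)
    return gray_rows


# A tiny 1x3 "image": pure red, white, and black.
tiny_image = [[(255, 0, 0), (255, 255, 255), (0, 0, 0)]]
print(to_grayscale(tiny_image))
```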

Review training metrics

F1 score, precision, recall, and assumed threshold are the metrics that are generated as an output of training a model using Rekognition Custom Labels. The models are optimized for the best F1 score based on the testing dataset that is provided. The assumed threshold is also generated based on the testing dataset. You can adjust the threshold based on your business requirement in terms of precision or recall.

Because the assumed thresholds are set on the testing dataset, an appropriate test set should reflect the real-world production use case. If the test dataset isn’t representative of the use case, you may see artificially high F1 scores and poor model performance on your real-world images.
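To see how moving the threshold trades precision against recall, the following sketch recomputes the three metrics for a single label at two thresholds. The input shape (a confidence from 0 to 100 paired with whether the image truly has the label) and the sample values are made up for illustration. The sketch uses the standard definitions of the metrics and is not the service’s internal evaluation code.

```python
def metrics_at_threshold(predictions, threshold):
    """Compute (precision, recall, F1) for one label.

    predictions: list of (confidence, is_actual_positive) pairs.
    """
    tp = sum(1 for conf, actual in predictions if conf >= threshold and actual)
    fp = sum(1 for conf, actual in predictions if conf >= threshold and not actual)
    fn = sum(1 for conf, actual in predictions if conf < threshold and actual)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return precision, recall, f1


# Hypothetical confidences for six test images of one label.
predictions = [
    (95, True), (80, True), (70, False),
    (60, True), (40, False), (30, True),
]
for threshold in (50, 75):
    precision, recall, f1 = metrics_at_threshold(predictions, threshold)
    print(threshold, round(precision, 2), round(recall, 2), round(f1, 2))
```

With this sample data, raising the threshold from 50 to 75 removes the one false positive but also drops a true positive, so precision rises while recall falls.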

These metrics are helpful when performing an initial evaluation of the model. For a production-grade system, we recommend evaluating the model against an external dataset (500–1,000 unseen images) representative of the real world. This helps evaluate how the model would perform in a production system and also identify any missing patterns and correct them by retraining the model. If you see a mismatch between F1 scores and external evaluation, we suggest you examine whether your test data is reflecting the real-world use case.
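An external evaluation for an image classification model can be sketched as follows. The example assumes a running model version and a client created with `boto3.client("rekognition")`; it calls the `DetectCustomLabels` API and compares the highest-confidence label with the expected one. The helper names, the stub client, and the sample bytes are hypothetical and exist only so that the sketch runs without AWS access.

```python
def top_label(client, project_version_arn, image_bytes, min_confidence=0):
    """Return the highest-confidence custom label name, or None if none returned."""
    response = client.detect_custom_labels(
        ProjectVersionArn=project_version_arn,
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    labels = response["CustomLabels"]
    if not labels:
        return None
    return max(labels, key=lambda label: label["Confidence"])["Name"]


def external_accuracy(client, project_version_arn, samples, min_confidence=0):
    """samples: iterable of (image_bytes, expected_label) pairs."""
    samples = list(samples)
    correct = sum(
        1
        for image_bytes, expected in samples
        if top_label(client, project_version_arn, image_bytes, min_confidence)
        == expected
    )
    return correct / len(samples)


class StubClient:
    """Offline stand-in for the Rekognition client (assumption for this demo)."""

    def detect_custom_labels(self, ProjectVersionArn, Image, MinConfidence):
        name = "patio" if Image["Bytes"] == b"patio-image" else "backyard"
        return {"CustomLabels": [{"Name": name, "Confidence": 90.0}]}


samples = [
    (b"patio-image", "patio"),
    (b"yard-image", "backyard"),
    (b"deck-image", "patio"),
]
accuracy = external_accuracy(StubClient(), "hypothetical-arn", samples)
print(round(accuracy, 2))
```

To run this against a real model, replace `StubClient()` with a Rekognition client and pass the ARN of your own model version. Images that the model gets wrong are good candidates to review for missing patterns.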

Conclusion

In this post, we walked you through the best practices for improving Rekognition Custom Labels models. We encourage you to learn more about Rekognition Custom Labels and try it out for your business-specific datasets.

About the authors

Amit Gupta is a Senior AI Services Solutions Architect at AWS. He is passionate about enabling customers with well-architected machine learning solutions at scale.

Yogesh Chaturvedi is a Solutions Architect at AWS with a focus in computer vision. He works with customers to address their business challenges using cloud technologies. Outside of work, he enjoys hiking, traveling, and watching sports.

Hao Yang is a Senior Applied Scientist on the Amazon Rekognition Custom Labels team. His main research interests are object detection and learning with limited annotations. Outside of work, Hao enjoys watching films, photography, and outdoor activities.

Pashmeen Mistry is the Senior Product Manager for Amazon Rekognition Custom Labels. Outside of work, Pashmeen enjoys adventurous hikes, photography, and spending time with his family.
