Set up enterprise-level cost allocation for ML environments and workloads using resource tagging in Amazon SageMaker

As businesses and IT leaders look to accelerate the adoption of machine learning (ML), there is a growing need to understand spend and cost allocation for your ML environment to meet enterprise requirements. Without proper cost management and governance, your ML spend may lead to surprises in your monthly AWS bill. Amazon SageMaker is a fully managed ML platform in the cloud that equips our enterprise customers with tools and resources to establish cost allocation measures and improve visibility into detailed cost and usage by your teams, business units, products, and more.

In this post, we share tips and best practices regarding cost allocation for your SageMaker environment and workloads. Across almost all AWS services, SageMaker included, applying tags to resources is a standard way to track costs. These tags can help you track, report, and monitor your ML spend through out-of-the-box solutions like AWS Cost Explorer and AWS Budgets, as well as custom solutions built on the data from AWS Cost and Usage Reports (CURs).

Cost allocation tagging

Cost allocation on AWS is a three-step process:

- Attach cost allocation tags to your resources.

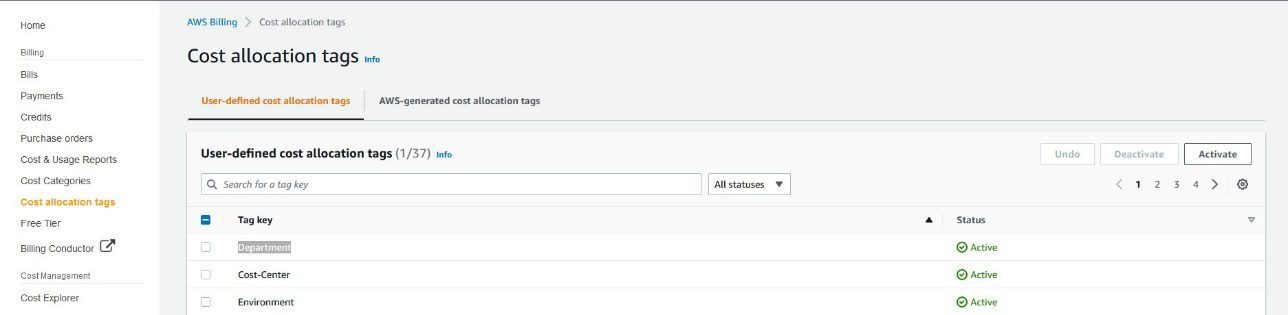

- Activate your tags in the Cost allocation tags section of the AWS Billing console.

- Use the tags to track and filter for cost allocation reporting.

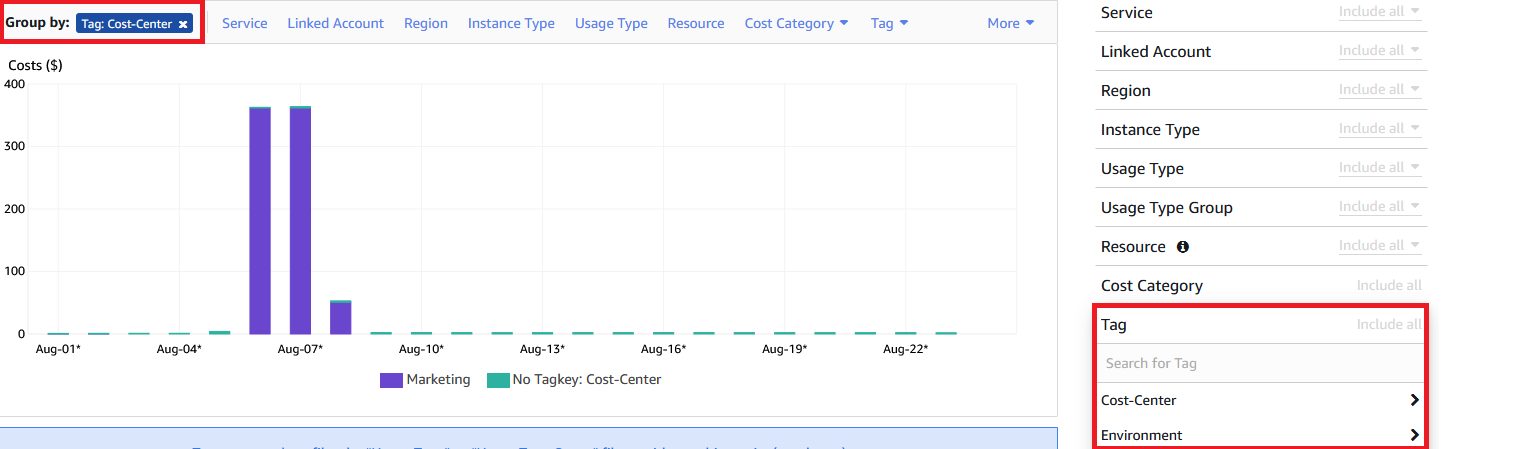

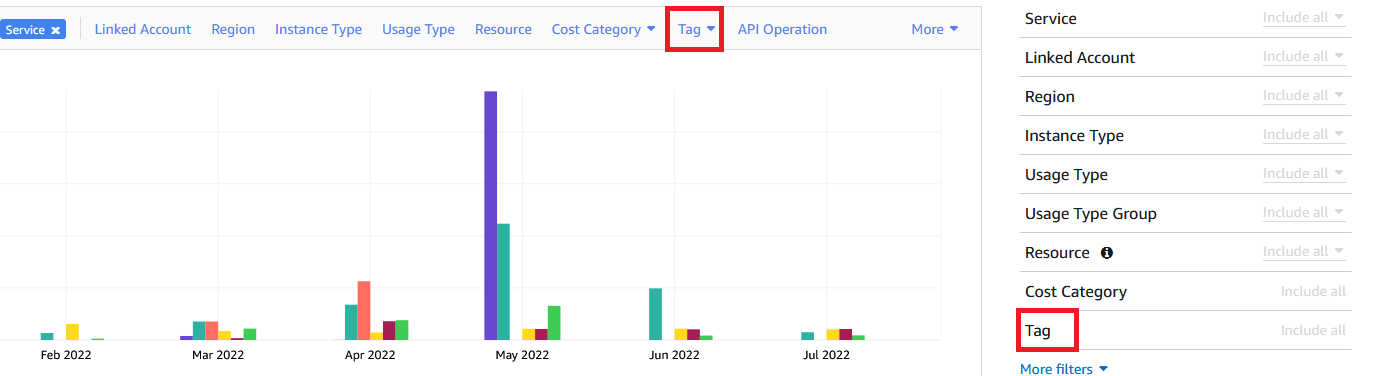

After you create and attach tags to resources, they appear in the AWS Billing console’s Cost allocation tags section under User-defined cost allocation tags. It can take up to 24 hours for tags to appear after they’re created. You then need to activate these tags for AWS to start tracking them for your resources. Typically, after a tag is activated, it takes about 24–48 hours for the tags to show up in Cost Explorer. The easiest way to check if your tags are working is to look for your new tag in the tags filter in Cost Explorer. If it’s there, then you’re ready to use the tags for your cost allocation reporting. You can then choose to group your results by tag keys or filter by tag values, as shown in the following screenshot.
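The activation step can also be scripted instead of done in the console. The following is a minimal sketch, assuming boto3 is installed and the code runs with credentials for the payer account; the tag keys `cost-center` and `environment` are placeholders, and a key can only be activated after it has appeared in the Billing console.

```python
from typing import Dict, List


def build_activation_request(tag_keys: List[str]) -> Dict[str, list]:
    """Build the request body that marks each tag key as Active."""
    return {
        "CostAllocationTagsStatus": [
            {"TagKey": key, "Status": "Active"} for key in tag_keys
        ]
    }


def activate_cost_allocation_tags(tag_keys: List[str]) -> dict:
    """Send the activation request through the Cost Explorer API."""
    import boto3  # imported lazily so the builder above works without boto3

    ce = boto3.client("ce")
    return ce.update_cost_allocation_tags_status(
        **build_activation_request(tag_keys)
    )


# Placeholder tag keys; replace them with the keys you attached to resources.
request = build_activation_request(["cost-center", "environment"])
```

Calling `activate_cost_allocation_tags(["cost-center", "environment"])` sends the request; the response lists any keys that could not be updated.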

One thing to note: if you use AWS Organizations and have linked AWS accounts, tags can only be activated in the primary payer account. Optionally, you can also enable CURs for those accounts, which deliver cost allocation reports as CSV files with your usage and costs grouped by your active tags. This gives you more detailed tracking of your costs and makes it easier to set up your own custom reporting solutions.

Tagging in SageMaker

At a high level, tagging SageMaker resources can be grouped into two buckets:

- Tagging the SageMaker notebook environment, either Amazon SageMaker Studio domains and domain users, or SageMaker notebook instances

- Tagging SageMaker-managed jobs (labeling, processing, training, hyperparameter tuning, batch transform, and more) and resources (such as models, work teams, endpoint configurations, and endpoints)

We cover these in more detail in this post and provide some solutions on how to apply governance control to ensure good tagging hygiene.

Tagging SageMaker Studio domains and users

Studio is a web-based, integrated development environment (IDE) for ML that lets you build, train, debug, deploy, and monitor your ML models. You can launch Studio notebooks quickly, and dynamically dial up or down the underlying compute resources without interrupting your work.

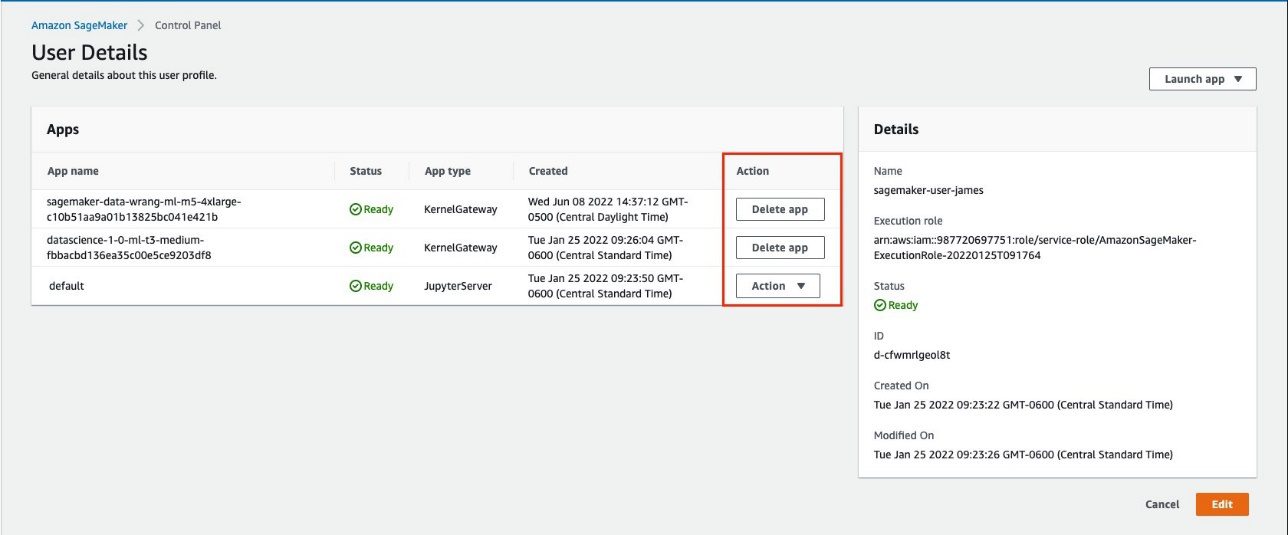

To automatically tag these dynamic resources, you need to assign tags to the SageMaker domain and to the domain users who are provisioned access to those resources. You can specify these tags in the tags parameter of create-domain or create-user-profile during domain or profile creation, or you can add them later using the add-tags API. Studio automatically copies and assigns these tags to the Studio notebooks created in the domain or by the specific users. You can also add tags to SageMaker domains by editing the domain settings in the Studio Control Panel.

The following is an example of assigning tags to the profile during creation.
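The original code listing is not reproduced here, so the following is a minimal sketch of what such a call might look like with boto3, assuming the domain already exists. The domain ID, profile name, and tag values are hypothetical.

```python
from typing import Dict, List


def to_sagemaker_tags(tags: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert a plain dict into the Key/Value list the SageMaker API expects."""
    return [{"Key": key, "Value": value} for key, value in tags.items()]


def create_tagged_user_profile(
    domain_id: str, user_profile_name: str, tags: Dict[str, str]
) -> dict:
    """Create a Studio user profile with cost allocation tags attached."""
    import boto3  # imported lazily so the helper above works without boto3

    sm = boto3.client("sagemaker")
    return sm.create_user_profile(
        DomainId=domain_id,
        UserProfileName=user_profile_name,
        Tags=to_sagemaker_tags(tags),
    )


# Hypothetical values, for illustration only.
profile_tags = to_sagemaker_tags(
    {"cost-center": "ML-Marketing", "environment": "dev"}
)
```

A call such as `create_tagged_user_profile("d-xxxxxxxxxxxx", "data-scientist-1", {"cost-center": "ML-Marketing"})` would then create the profile, where both identifiers are placeholders.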

To tag existing domains and users, use the add-tags API. The tags are then applied to any new notebooks. To have these tags applied to your existing notebooks, you need to restart the Studio apps (Kernel Gateway and Jupyter Server) belonging to that user profile. This won’t cause any loss in notebook data. Refer to Shut Down and Update SageMaker Studio and Studio Apps to learn how to delete and restart your Studio apps.
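As a sketch of tagging an existing user profile, the helper below looks up the profile ARN and passes it to `add_tags`. It assumes a boto3 SageMaker client is supplied by the caller; the function name and the example identifiers are illustrative.

```python
from typing import Dict, List


def tag_existing_user_profile(
    sm_client,
    domain_id: str,
    user_profile_name: str,
    tags: List[Dict[str, str]],
) -> dict:
    """Attach tags to a user profile that was created without them.

    sm_client is expected to be boto3.client("sagemaker").
    """
    profile = sm_client.describe_user_profile(
        DomainId=domain_id, UserProfileName=user_profile_name
    )
    # add_tags works on any taggable SageMaker resource identified by ARN.
    return sm_client.add_tags(
        ResourceArn=profile["UserProfileArn"], Tags=tags
    )
```

For example, `tag_existing_user_profile(boto3.client("sagemaker"), "d-xxxxxxxxxxxx", "data-scientist-1", [{"Key": "cost-center", "Value": "ML-Marketing"}])`, with placeholder identifiers. The same pattern applies to a domain by using `describe_domain` and its `DomainArn`.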

Tagging SageMaker notebook instances

In the case of a SageMaker notebook instance, tagging is applied to the instance itself. The tags are assigned to all resources running in the same instance. You can specify tags programmatically using the tags parameter in the create-notebook-instance API or add them via the SageMaker console during instance creation. You can also add or update tags anytime using the add-tags API or via the SageMaker console.

Note that this excludes SageMaker managed jobs and resources such as training and processing jobs because they’re in the service environment rather than on the instance. In the next section, we go over how to apply tagging to these resources in greater detail.

Tagging SageMaker managed jobs and resources

For SageMaker managed jobs and resources, tags must be passed in the tags attribute of each API request. An SKLearnProcessor example is illustrated in the following code. You can find more examples of how to assign tags to other SageMaker managed jobs and resources on the GitHub repo.
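The original listing is not reproduced here. The sketch below shows one way to pass tags to an `SKLearnProcessor`, assuming version 2 of the SageMaker Python SDK; the framework version, instance type, and tag values are illustrative choices, not values from the original post.

```python
from typing import Any, Dict


def processor_kwargs(role_arn: str, tags: Dict[str, str]) -> Dict[str, Any]:
    """Assemble constructor arguments, including the tags for the job."""
    return {
        "framework_version": "1.2-1",     # illustrative scikit-learn version
        "role": role_arn,
        "instance_type": "ml.m5.xlarge",  # illustrative instance type
        "instance_count": 1,
        # These tags are attached to every processing job the processor starts.
        "tags": [{"Key": k, "Value": v} for k, v in tags.items()],
    }


def build_tagged_processor(role_arn: str, tags: Dict[str, str]):
    """Create the processor; requires the sagemaker package and AWS credentials."""
    from sagemaker.sklearn.processing import SKLearnProcessor

    return SKLearnProcessor(**processor_kwargs(role_arn, tags))


# Hypothetical role ARN and tag values.
kwargs = processor_kwargs(
    "arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    {"cost-center": "TF2WorkflowProcessing"},
)
```

Calling `run(...)` on the processor returned by `build_tagged_processor` then starts a processing job that carries these tags.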

Tagging SageMaker pipelines

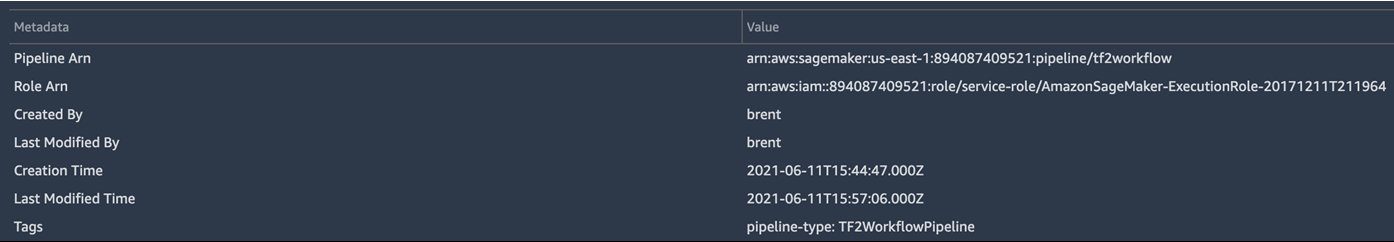

In the case of SageMaker pipelines, you can tag the entire pipeline as a whole instead of each individual step. The SageMaker pipeline automatically propagates the tags to each pipeline step. You still have the option to add additional, separate tags to individual steps if needed. In the Studio UI, the pipeline tags appear in the metadata section.

To apply tags to a pipeline, use the SageMaker Python SDK:
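The original listing is not reproduced here. As a sketch, assuming `pipeline` is a `sagemaker.workflow.pipeline.Pipeline` object you have already defined, the tags can be passed when the pipeline is created or updated; the helper name is illustrative.

```python
from typing import Dict, List


def upsert_tagged_pipeline(
    pipeline, role_arn: str, tags: List[Dict[str, str]]
) -> dict:
    """Create or update the pipeline definition and attach pipeline-level tags.

    pipeline is expected to be a sagemaker.workflow.pipeline.Pipeline.
    """
    return pipeline.upsert(role_arn=role_arn, tags=tags)
```

For example, `upsert_tagged_pipeline(pipeline, role_arn, [{"Key": "cost-center", "Value": "TF2Workflow"}])`, where the tag value is a placeholder.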

Enforce tagging using IAM policies

Although tagging is an effective mechanism for implementing cloud management and governance strategies, enforcing the right tagging behavior can be challenging if you just leave it to the end-users. How do you prevent ML resource creation if a specific tag is missing, how do you ensure the right tags are applied, and how do you prevent users from deleting existing tags?

You can accomplish this using AWS Identity and Access Management (IAM) policies. The following code is an example of a policy that prevents SageMaker actions such as CreateDomain or CreateNotebookInstance if the request doesn’t contain the environment key and one of the list values. The ForAllValues modifier with the aws:TagKeys condition key indicates that only the key environment is allowed in the request. This stops users from including other keys, such as accidentally using Environment instead of environment.
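The original policy listing is not reproduced here. The sketch below builds a policy document of the kind described, as a Python dictionary serialized to JSON. It is written as an Allow statement with conditions, which only enforces tagging if no other policy grants these actions unconditionally. The allowed values `dev`, `staging`, and `production` and the statement ID are assumptions.

```python
import json
from typing import Any, Dict, List

# Hypothetical list of accepted values for the environment tag.
ALLOWED_ENVIRONMENTS = ["dev", "staging", "production"]


def build_tag_enforcement_policy(allowed_values: List[str]) -> Dict[str, Any]:
    """Allow resource creation only when the request is tagged correctly."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RequireEnvironmentTagOnCreate",
                "Effect": "Allow",
                "Action": [
                    "sagemaker:CreateDomain",
                    "sagemaker:CreateNotebookInstance",
                ],
                "Resource": "*",
                "Condition": {
                    # The request must carry environment=<one of the values>.
                    "StringEquals": {
                        "aws:RequestTag/environment": list(allowed_values)
                    },
                    # Every tag key in the request must be exactly "environment".
                    "ForAllValues:StringEquals": {
                        "aws:TagKeys": ["environment"]
                    },
                },
            }
        ],
    }


policy_json = json.dumps(
    build_tag_enforcement_policy(ALLOWED_ENVIRONMENTS), indent=2
)
```

The resulting `policy_json` string can be pasted into the IAM console or passed to an IAM API call after you review it against your own permission model.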

Tag policies and service control policies (SCPs) can also be a good way to standardize creation and labeling of your ML resources. For more information about how to implement a tagging strategy that enforces and validates tagging at the organization level, refer to Cost Allocation Blog Series #3: Enforce and Validate AWS Resource Tags.

Cost allocation reporting

You can view the tags by filtering the views on Cost Explorer, viewing a monthly cost allocation report, or by examining the CUR.

Visualizing tags in Cost Explorer

Cost Explorer is a tool that enables you to view and analyze your costs and usage. You can explore your usage and costs using the main graph: the Cost Explorer cost and usage reports. For a quick video on how to use Cost Explorer, check out How can I use Cost Explorer to analyze my spending and usage?

With Cost Explorer, you can filter how you view your AWS costs by tags. Group by lets you break down results by a tag key such as Environment, Deployment, or Cost Center. The tag filter lets you narrow results to specific tag values, such as Production and Staging. Keep in mind that you must run the resources after adding and activating tags; otherwise, Cost Explorer won’t have any usage data and the tag value won’t be displayed as a filter or group by option.

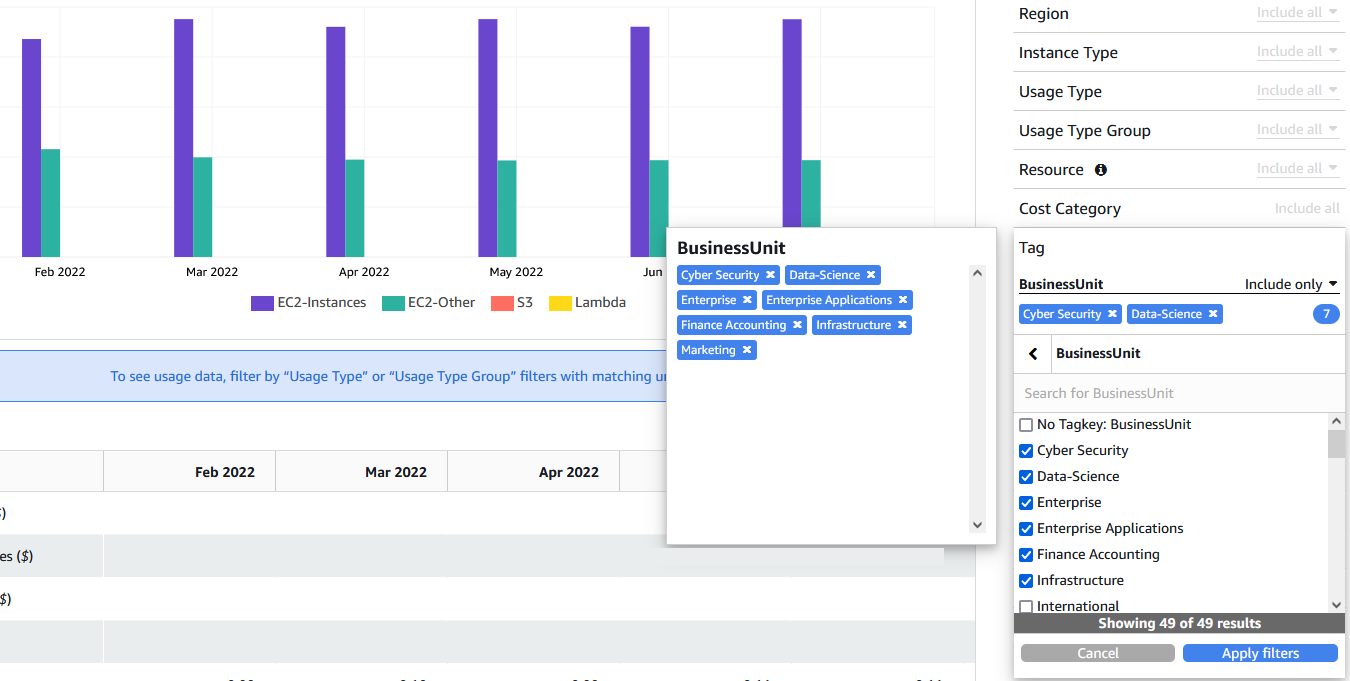

The following screenshot is an example of filtering by all values of the BusinessUnit tag.

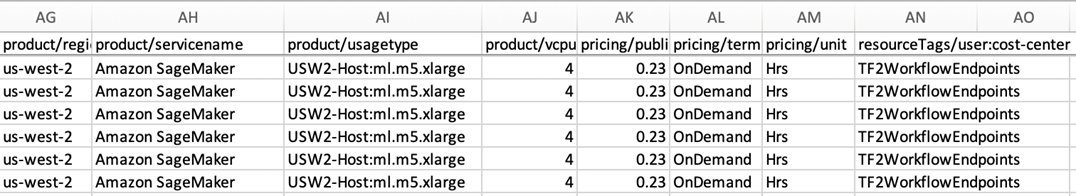
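The same grouping is available programmatically through the Cost Explorer API. The following is a minimal sketch, assuming boto3 and an activated `BusinessUnit` tag; the date range is a placeholder, and the service name used in the filter should be checked against the values shown in your own Cost Explorer.

```python
from typing import Any, Dict


def build_tag_cost_query(start: str, end: str, tag_key: str) -> Dict[str, Any]:
    """Build a monthly cost query for SageMaker, grouped by one tag key.

    start and end are YYYY-MM-DD strings; the end date is exclusive.
    """
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
        "Filter": {
            # Assumed service name; verify it in your Cost Explorer filters.
            "Dimensions": {"Key": "SERVICE", "Values": ["Amazon SageMaker"]}
        },
    }


def get_costs_by_tag(start: str, end: str, tag_key: str) -> dict:
    """Run the query; requires boto3 and Cost Explorer permissions."""
    import boto3

    ce = boto3.client("ce")
    return ce.get_cost_and_usage(**build_tag_cost_query(start, end, tag_key))


query = build_tag_cost_query("2023-01-01", "2023-02-01", "BusinessUnit")
```

Each group in the response corresponds to one value of the tag, which mirrors the Group by view in the console.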

Examining tags in the CUR

The Cost and Usage Report contains the most comprehensive set of cost and usage data available. The report contains line items for each unique combination of AWS product, usage type, and operation that your AWS account uses. You can customize the CUR to aggregate the information either by the hour or by the day. A monthly cost allocation report is one way to set up cost allocation reporting. You can set up a monthly cost allocation report that lists the AWS usage for your account by product category and linked account user. The report contains the same line items as the detailed billing report and additional columns for your tag keys. You can set it up and download your report by following the steps in Monthly cost allocation report.

The following screenshot shows how user-defined tag keys show up in the CUR. User-defined tag keys have the prefix user, such as user:Department and user:CostCenter. AWS-generated tag keys have the prefix aws.

Visualize the CUR using Amazon Athena and Amazon QuickSight

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run. To integrate Athena with CURs, refer to Querying Cost and Usage Reports using Amazon Athena. You can then build custom queries to query CUR data using standard SQL. The following screenshot is an example of a query to filter all resources that have the value TF2WorkflowTraining for the cost-center tag.

In the following example, we’re trying to figure out which resources are missing values under the cost-center tag.
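The original queries were shown as screenshots that are not reproduced here. The sketch below builds comparable SQL strings in Python. The table name `cur_db.cur_table` is hypothetical, and the column names follow the usual Athena CUR naming (a `cost-center` tag typically becomes `resource_tags_user_cost_center`), so verify them against your own table schema.

```python
def tag_value_query(table: str, tag_column: str, tag_value: str) -> str:
    """SQL for the cost of every resource carrying a given tag value."""
    escaped = tag_value.replace("'", "''")  # escape quotes in the literal
    return (
        "SELECT line_item_resource_id, "
        "SUM(line_item_unblended_cost) AS cost "
        f"FROM {table} "
        f"WHERE {tag_column} = '{escaped}' "
        "GROUP BY line_item_resource_id "
        "ORDER BY cost DESC"
    )


def missing_tag_query(table: str, tag_column: str) -> str:
    """SQL for SageMaker resources that have no value for the tag."""
    return (
        "SELECT line_item_resource_id, "
        "SUM(line_item_unblended_cost) AS cost "
        f"FROM {table} "
        "WHERE line_item_product_code = 'AmazonSageMaker' "
        f"AND ({tag_column} IS NULL OR {tag_column} = '') "
        "GROUP BY line_item_resource_id "
        "ORDER BY cost DESC"
    )


# Hypothetical table name; the tag value comes from the example in the text.
TABLE = "cur_db.cur_table"
COLUMN = "resource_tags_user_cost_center"
by_value_sql = tag_value_query(TABLE, COLUMN, "TF2WorkflowTraining")
untagged_sql = missing_tag_query(TABLE, COLUMN)
```

Both strings can be pasted into the Athena query editor. The table and column names are interpolated as trusted identifiers, so do not build them from user input.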

More information and example queries can be found in the AWS CUR Query Library.

You can also feed CUR data into Amazon QuickSight, where you can slice and dice it any way you’d like for reporting or visualization purposes. For instructions on ingesting CUR data into QuickSight, see How do I ingest and visualize the AWS Cost and Usage Report (CUR) into Amazon QuickSight.

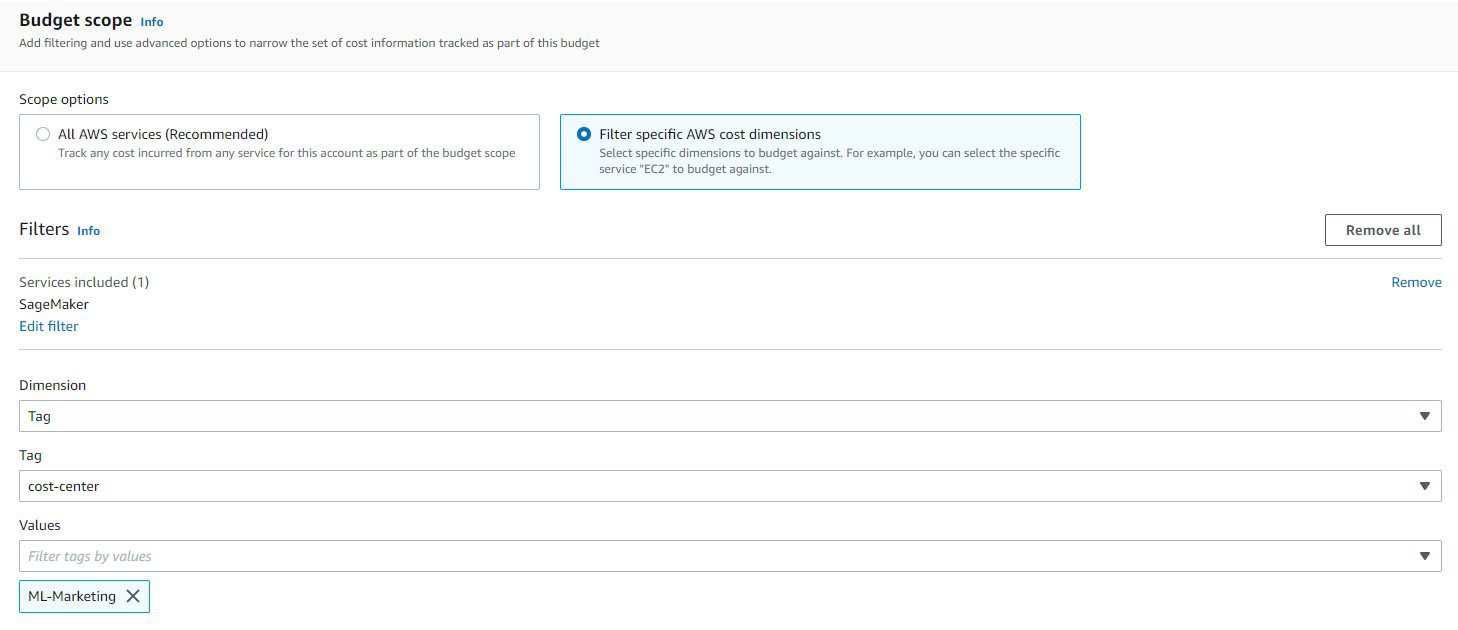

Budget monitoring using tags

AWS Budgets is an excellent way to provide an early warning if spend spikes unexpectedly. You can create custom budgets that alert you when your ML costs and usage exceed (or are forecasted to exceed) your user-defined thresholds. With AWS Budgets, you can monitor your total monthly ML costs or filter your budgets to track costs associated with specific usage dimensions. For example, you can set the budget scope to include SageMaker resource costs tagged as cost-center: ML-Marketing, as shown in the following screenshot. For additional dimensions and detailed instructions on how to set up AWS Budgets, refer to the AWS Budgets documentation.
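A tag-scoped budget like this one can also be created through the AWS Budgets API. The following is a minimal sketch, assuming boto3; the budget name, the 1,000 USD limit, the 80 percent threshold, and the email address are placeholders. The tag filter uses the `user:<key>$<value>` form.

```python
from typing import Any, Dict, List


def build_tagged_budget(
    name: str, monthly_limit_usd: int, tag_key: str, tag_value: str
) -> Dict[str, Any]:
    """Describe a monthly cost budget scoped to one tag key/value pair."""
    return {
        "BudgetName": name,
        "BudgetLimit": {"Amount": str(monthly_limit_usd), "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
        "CostFilters": {"TagKeyValue": [f"user:{tag_key}${tag_value}"]},
    }


def build_forecast_alert(threshold_pct: float, email: str) -> List[Dict[str, Any]]:
    """Notify by email when forecasted spend passes a percentage of the limit."""
    return [
        {
            "Notification": {
                "NotificationType": "FORECASTED",
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": threshold_pct,
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
        }
    ]


def create_budget(account_id: str, budget: dict, alerts: list) -> dict:
    """Create the budget; requires boto3 and AWS Budgets permissions."""
    import boto3

    return boto3.client("budgets").create_budget(
        AccountId=account_id, Budget=budget, NotificationsWithSubscribers=alerts
    )


# Placeholder values for illustration.
budget = build_tagged_budget("ml-marketing-monthly", 1000, "cost-center", "ML-Marketing")
alerts = build_forecast_alert(80.0, "ml-team@example.com")
```

Passing `budget` and `alerts` to `create_budget` with your 12-digit account ID creates the budget and its alert in one call.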

With budget alerts, you can send notifications when your budget limits are (or are about to be) exceeded. These alerts can also be posted to an Amazon Simple Notification Service (Amazon SNS) topic. An AWS Lambda function that subscribes to the SNS topic is then invoked, and any programmatically implementable actions can be taken.
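As a sketch of the Lambda side of this flow, the handler below reads budget alerts from the SNS event that invokes it. What happens after that is up to you; here the alert is only logged, and the function names are illustrative.

```python
from typing import Any, Dict, List


def extract_budget_alerts(event: Dict[str, Any]) -> List[Dict[str, str]]:
    """Pull the subject and message out of each SNS record in the event."""
    alerts = []
    for record in event.get("Records", []):
        if record.get("EventSource") != "aws:sns":
            continue  # ignore records that did not come from SNS
        sns = record["Sns"]
        alerts.append(
            {"subject": sns.get("Subject") or "", "message": sns["Message"]}
        )
    return alerts


def handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Lambda entry point: log each alert, then report how many were seen."""
    alerts = extract_budget_alerts(event)
    for alert in alerts:
        # Replace this print with your own action, such as stopping
        # idle notebook instances or opening a ticket.
        print(f"Budget alert: {alert['subject']} | {alert['message']}")
    return {"alerts_handled": len(alerts)}
```

Keeping the parsing in `extract_budget_alerts` separate from the handler makes it easy to test the logic locally with a sample event before deploying.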

AWS Budgets also lets you configure budget actions, which are steps that you can take when a budget threshold is exceeded (actual or forecasted amounts). This level of control allows you to reduce unintentional overspending in your account. You can configure specific responses to cost and usage in your account that will be applied automatically or through a workflow approval process when a budget target has been exceeded. This is a really powerful solution to ensure that your ML spend is consistent with the goals of the business. You can select what type of action to take. For example, when a budget threshold is crossed, you can move specific IAM users from admin permissions to read-only. For customers using Organizations, you can apply actions to an entire organizational unit by moving them from admin to read-only. For more details on how to manage cost using budget actions, refer to How to manage cost overruns in your AWS multi-account environment – Part 1.

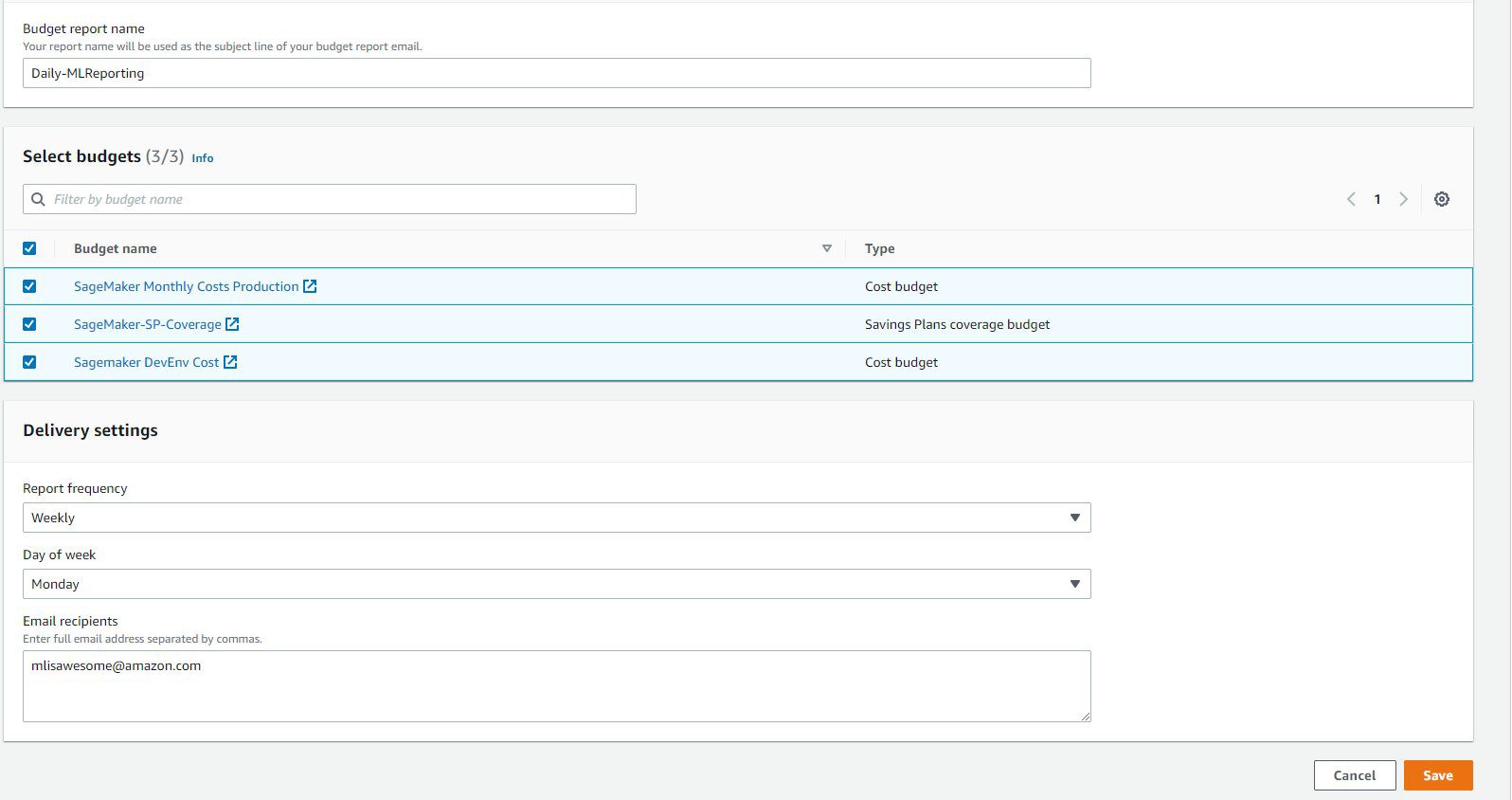

You can also set up a report to monitor the performance of your existing budgets and deliver that report to up to 50 email addresses. With AWS Budgets reports, you can combine all SageMaker-related budgets into a single report. This feature enables you to track your SageMaker footprint from a single location, as shown in the following screenshot. You can opt to receive these reports on a daily, weekly, or monthly cadence (we chose Weekly for this example), and choose the day of the week when you want to receive them.

This feature is useful to keep your stakeholders up to date with your SageMaker costs and usage, and help them see when spend isn’t trending as expected.

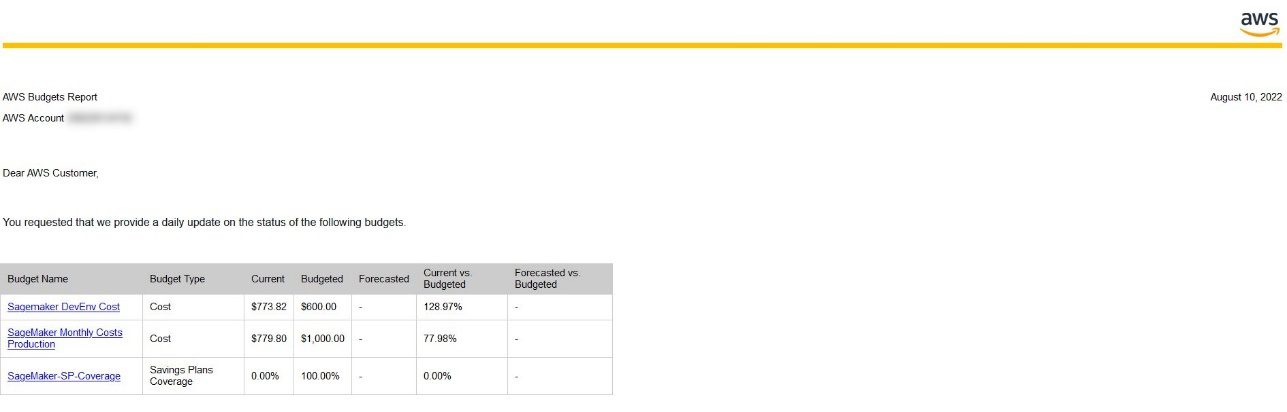

After you set up this configuration, you should receive an email similar to the following.

Conclusion

In this post, we showed how you can set up cost allocation tagging for SageMaker and shared tips on tagging best practices for your SageMaker environment and workloads. We then discussed different reporting options like Cost Explorer and the CUR to help you improve visibility into your ML spend. Lastly, we demonstrated AWS Budgets and the budget summary report to help you monitor the ML spend of your organization.

For more information about applying and activating cost allocation tags, see User-Defined Cost Allocation Tags.

About the authors

Sean Morgan is an AI/ML Solutions Architect at AWS. He has experience in the semiconductor and academic research fields, and uses his experience to help customers reach their goals on AWS. In his free time, Sean is an active open-source contributor and maintainer, and is the special interest group lead for TensorFlow Add-ons.

Brent Rabowsky focuses on data science at AWS, and leverages his expertise to help AWS customers with their own data science projects.

Nilesh Shetty is a Senior Technical Account Manager at AWS, where he helps enterprise support customers streamline their cloud operations on AWS. He is passionate about machine learning and has experience working as a consultant, architect, and developer. Outside of work, he enjoys listening to music and watching sports.

James Wu is a Senior AI/ML Specialist Solution Architect at AWS, helping customers design and build AI/ML solutions. James’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. Prior to joining AWS, James was an architect, developer, and technology leader for over 10 years, including 6 years in engineering and 4 years in the marketing and advertising industries.
