Deploy a machine learning inference data capture solution on AWS Lambda

Monitoring machine learning (ML) predictions can help improve the quality of deployed models. Capturing the data from inferences made in production can enable you to monitor your deployed models and detect deviations in model quality. Early and proactive detection of these deviations enables you to take corrective actions, such as retraining models, auditing upstream systems, or fixing quality issues.

AWS Lambda is a serverless compute service that can provide real-time ML inference at scale. In this post, we demonstrate a sample data capture feature that can be deployed to a Lambda ML inference workload.

In December 2020, Lambda introduced support for container images as a packaging format. This feature increased the deployment package size limit from 250 MB (the unzipped limit for .zip archives) to 10 GB. Prior to this feature launch, the package size constraint made it difficult to deploy ML frameworks like TensorFlow or PyTorch to Lambda functions. After the launch, the increased package size limit made ML a viable and attractive workload to deploy to Lambda. In 2021, ML inference was one of the fastest growing workload types in the Lambda service.

Amazon SageMaker, Amazon’s fully managed ML service, contains its own model monitoring feature. However, the sample project in this post shows how to perform data capture for use in model monitoring for customers who use Lambda for ML inference. The project uses Lambda extensions to capture inference data in order to minimize the impact on the performance and latency of the inference function. Using Lambda extensions also minimizes the impact on function developers. By integrating via an extension, the monitoring feature can be applied to multiple functions and maintained by a centralized team.

Overview of solution

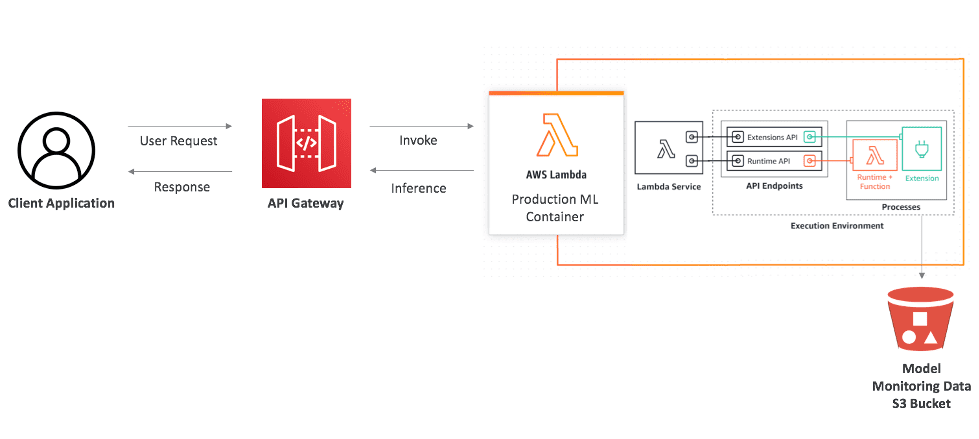

This project contains source code and supporting files for a serverless application that provides real-time inferencing using a distilbert-base, pretrained question answering model. The project uses the Hugging Face question and answer natural language processing (NLP) model with PyTorch to perform natural language inference tasks. The project also contains a solution to perform inference data capture for the model predictions. The Lambda function writer can determine exactly which data from the inference request input and the prediction result to send to the extension. In this solution, we send the input and the answer from the model to the extension. The extension then periodically sends the data to an Amazon Simple Storage Service (Amazon S3) bucket. We build the data capture extension as a container image using a makefile. We then build the Lambda inference function as a container image and add the extension container image as a container image layer. The following diagram shows an overview of the architecture.
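The hand-off from the inference function to the extension can be pictured with a short Python sketch. It assumes the extension exposes a local HTTP listener; the URL, the record fields, and the helper names are hypothetical and are not taken from the sample project.

```python
import json
import time
import urllib.error
import urllib.request

# Hypothetical endpoint: assumes the extension runs a local HTTP listener.
EXTENSION_URL = "http://localhost:8080/capture"


def build_capture_record(question, context, answer):
    """Select the fields from the request and the prediction to capture."""
    return {
        "timestamp": time.time(),
        "input": {"question": question, "context": context},
        "prediction": answer,
    }


def send_to_extension(record, url=EXTENSION_URL, timeout=0.2):
    """Post one record to the extension; never let capture break inference."""
    body = json.dumps(record).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout):
            return True
    except (urllib.error.URLError, OSError):
        # Capture is best effort: a failed hand-off is reported, not raised.
        return False
```

In a handler, the function would call build_capture_record with only the fields it wants monitored and pass the result to send_to_extension just before returning the prediction. The short timeout and the swallowed errors reflect the goal that data capture should not slow down or fail the inference itself.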

Lambda extensions are a way to augment Lambda functions. In this project, we use an external Lambda extension to log the inference request and the prediction from the inference. The external extension runs as a separate process in the Lambda runtime environment, which reduces the impact on the inference function. However, the extension shares resources such as CPU, memory, and storage with the Lambda function. We recommend allocating enough memory to the Lambda function to ensure optimal resource availability. (In our testing, we allocated 5 GB of memory to the inference Lambda function and saw optimal resource availability and inference latency.) When an inference is complete, the Lambda service returns the response immediately and doesn’t wait for the extension to finish logging the request and response to the S3 bucket. With this pattern, the monitoring extension doesn’t affect the inference latency. To learn more about Lambda extensions, check out this video series.
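The periodic upload can be sketched as a small buffer inside the extension process. This is a minimal illustration under stated assumptions, not the sample project's code: the class name, the JSON Lines format, the object key layout, and the 60-second default interval are all hypothetical, and the upload callable stands in for whatever writes to Amazon S3.

```python
import json
import time


class CaptureBuffer:
    """Collect capture records and flush them in batches."""

    def __init__(self, upload, flush_interval_seconds=60.0, clock=time.monotonic):
        self._upload = upload  # callable(key: str, body: bytes), e.g. an S3 put
        self._interval = flush_interval_seconds
        self._clock = clock
        self._records = []
        self._last_flush = clock()

    def add(self, record):
        """Buffer one record and flush if the interval has elapsed."""
        self._records.append(record)
        if self._clock() - self._last_flush >= self._interval:
            self.flush()

    def flush(self):
        """Upload all buffered records as one JSON Lines object."""
        self._last_flush = self._clock()
        if not self._records:
            return None
        # Hypothetical key layout: one object per flush, named by timestamp.
        key = f"capture/{int(time.time() * 1000)}.jsonl"
        body = "\n".join(json.dumps(r) for r in self._records).encode("utf-8")
        self._upload(key, body)
        # Records are dropped only after the upload call returns.
        self._records = []
        return key
```

In an extension, upload could wrap a boto3 S3 client call such as put_object(Bucket=..., Key=key, Body=body). Because the execution environment can be shut down between invocations, the extension would also want to call flush() when it receives the SHUTDOWN event from the Lambda Extensions API.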

Project contents

This project uses the AWS Serverless Application Model (AWS SAM) command line interface (CLI). This command-line tool allows developers to initialize and configure applications; package, build, and test locally; and deploy to the AWS Cloud.

You can download the source code for this project from the GitHub repository.

This project includes the following files and folders:

- app/app.py – Code for the application’s Lambda function, including the code for ML inferencing.

- app/Dockerfile – The Dockerfile to build the container image that packages the inference function, the model downloaded from Hugging Face, and the Lambda extension built as a layer. In contrast to .zip functions, layers can’t be attached to container-packaged Lambda functions at function create time. Instead, we build the layer and copy its contents into the container image.

- Extensions – The model monitor extension files. This Lambda extension is used to log the input to the inference function and the corresponding prediction to an S3 bucket.

- app/model – The model downloaded from Hugging Face.

- app/requirements.txt – The Python dependencies to be installed into the container.

- events – Invocation events that you can use to test the function.

- template.yaml – A descriptor file that defines the application’s AWS resources.

The application uses several AWS resources, including Lambda functions and an Amazon API Gateway API. These resources are defined in the template.yaml file in this project. You can update the template to add AWS resources through the same deployment process that updates your application code.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- The AWS SAM CLI installed

- Docker community edition installed

- Python 3.8.x

Deploy the sample application

To build your application for the first time, complete the following steps:

- Run the following code in your shell (this also builds the extension):

- Build a Docker image of the model monitor application. The build contents reside in the .aws-sam directory:

- Tag your container for deployment to Amazon Elastic Container Registry (Amazon ECR):

- Log in to Amazon ECR:

- Create a repository in Amazon ECR:

aws ecr create-repository --repository-name serverless-ml-model-monitor --image-scanning-configuration scanOnPush=true --region us-east-1

- Push the container image to Amazon ECR:

- Uncomment line #1 in app/Dockerfile and edit it to point to the correct ECR repository image, then uncomment lines #6 and #7 in app/Dockerfile:

- Build the application again:

We build again because Lambda doesn’t support Lambda layers directly for the container image packaging type. We need to first build the model monitoring component as a container image, upload it to Amazon ECR, and then use that image in the model monitoring application as a container layer.

- Finally, deploy the Lambda function, API Gateway, and extension:

This command packages and deploys your application to AWS with a series of prompts:

- Stack name: The name of the deployed AWS CloudFormation stack. This should be unique to your account and Region, and a good starting point would be something matching your project name.

- AWS Region: The AWS Region to which you deploy your application.

- Confirm changes before deploy: If set to yes, any change sets are shown to you before running for manual review. If set to no, the AWS SAM CLI automatically deploys application changes.

- Allow AWS SAM CLI IAM role creation: Many AWS SAM templates, including this example, create AWS Identity and Access Management (IAM) roles required for the Lambda function(s) included to access AWS services. By default, these are scoped down to the minimum required permissions. To deploy a CloudFormation stack that creates or modifies IAM roles, the CAPABILITY_IAM value for capabilities must be provided. If permission isn’t provided through this prompt, to deploy this example you must explicitly pass --capabilities CAPABILITY_IAM to the sam deploy command.

- Save arguments to samconfig.toml: If set to yes, your choices are saved to a configuration file inside the project so that in the future, you can just run sam deploy without parameters to deploy changes to your application.

You can find your API Gateway endpoint URL in the output values displayed after deployment.
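The deployment steps above can be summarized as a small Python helper that assembles the shell commands in order. Treat it as a hedged reconstruction rather than the authors' exact commands: the repository name and Region come from the create-repository step, while the local image name, the latest tag, the build context, the account ID placeholder, and the use of sam deploy --guided are assumptions.

```python
import subprocess

REGION = "us-east-1"                        # Region used in the walkthrough
REPOSITORY = "serverless-ml-model-monitor"  # repository name from the walkthrough


def deployment_commands(account_id, tag="latest", build_context="."):
    """Return the shell commands for the walkthrough, in order."""
    registry = f"{account_id}.dkr.ecr.{REGION}.amazonaws.com"
    local_image = f"{REPOSITORY}:{tag}"   # assumed local image name
    remote_image = f"{registry}/{REPOSITORY}:{tag}"
    return [
        "sam build",
        f"docker build -t {local_image} {build_context}",
        f"docker tag {local_image} {remote_image}",
        f"aws ecr get-login-password --region {REGION} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"aws ecr create-repository --repository-name {REPOSITORY} "
        f"--image-scanning-configuration scanOnPush=true --region {REGION}",
        f"docker push {remote_image}",
        # Edit app/Dockerfile by hand before running the last two commands.
        "sam build",
        "sam deploy --guided",
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command in commands:
        print(f"$ {command}")
        if not dry_run:
            subprocess.run(command, shell=True, check=True)


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID.
    run(deployment_commands(account_id="123456789012"))
```

By default the script only prints the commands. To execute them, one option is to run the first six with dry_run=False, edit app/Dockerfile as described in the steps, and then run the remaining two.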

Test the application

To test the application, use Postman or curl to send a request to the API Gateway endpoint. For example:
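As an alternative to Postman or curl, the request can be scripted with the Python standard library. The sketch below assumes the function accepts a JSON body with question and context fields over POST; those field names, the helper names, and the example URL shape are hypothetical, so adjust them to match the deployed API.

```python
import json
import urllib.request


def build_inference_request(endpoint_url, question, context):
    """Build a POST request carrying the question and its context as JSON."""
    payload = json.dumps({"question": question, "context": context}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(endpoint_url, question, context, timeout=30):
    """Send the request and return the raw response body as text."""
    request = build_inference_request(endpoint_url, question, context)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling ask with the endpoint URL from the deployment output, a question, and a context passage returns the response body. The first request after deployment can be slow while the container image and model load.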

The response contains the answer that the ML model inferred from the context for your question.

After a few minutes, you should see a file with the input and the inference logged in the S3 bucket whose name begins with nlp-qamodel-model-monitoring-modelmonitorbucket-.

Clean up

To delete the sample application that you created, use the AWS CLI:
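The same cleanup can also be scripted with boto3 instead of the AWS CLI. This is a minimal sketch: the function name is hypothetical, the stack name is whatever you entered at the Stack name prompt, and the optional client parameter exists only so the function can be exercised with a stub.

```python
def delete_stack(stack_name, client=None):
    """Delete a CloudFormation stack and wait until the deletion completes."""
    if client is None:
        import boto3  # imported lazily so a stub client can be used in tests
        client = boto3.client("cloudformation")
    client.delete_stack(StackName=stack_name)
    # Block until CloudFormation reports the stack as deleted.
    client.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```

Stack deletion can fail if the capture bucket still contains objects, so you may need to empty the bucket first. The Amazon ECR repository was created outside the stack and has to be removed separately.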

Conclusion

In this post, we implemented a model monitoring feature as a Lambda extension and deployed it to a Lambda ML inference workload. We showed how to build and deploy this solution to your own AWS account. Finally, we showed how to run a test to verify the functionality of the monitor.

Please provide any thoughts or questions in the comments section. For more serverless learning resources, visit Serverless Land.

About the Authors

Dan Fox is a Principal Specialist Solutions Architect in the Worldwide Specialist Organization for Serverless. Dan works with customers to help them leverage serverless services to build scalable, fault-tolerant, high-performing, cost-effective applications. Dan is grateful to be able to live and work in lovely Boulder, Colorado.

Newton Jain is a Senior Product Manager responsible for building new experiences for machine learning, high performance computing (HPC), and media processing customers on AWS Lambda. He leads the development of new capabilities to increase performance, reduce latency, improve scalability, enhance reliability, and reduce cost. He also assists AWS customers in defining an effective serverless strategy for their compute-intensive applications.

Diksha Sharma is a Solutions Architect and a Machine Learning Specialist at AWS. She helps customers accelerate their cloud adoption, particularly in the areas of machine learning and serverless technologies. Diksha deploys customized proofs of concept that show customers the value of AWS in meeting their business and IT challenges. She enables customers in their knowledge of AWS and works alongside customers to build out their desired solution.

Veda Raman is a Senior Specialist Solutions Architect for machine learning based in Maryland. Veda works with customers to help them architect efficient, secure, and scalable machine learning applications. Veda is interested in helping customers leverage serverless technologies for machine learning.

Josh Kahn is the Worldwide Tech Leader for Serverless and a Principal Solutions Architect. He leads a global community of serverless experts at AWS who help customers of all sizes, from start-ups to the world’s largest enterprises, to effectively use AWS serverless technologies.
