2023 in review: many reasons to celebrate

2023 was a busy year for the Open Source Initiative (OSI), as we celebrated the 25th Anniversary of Open Source while looking toward present and future challenges and opportunities. Our work revolved around three main areas: licensing and legal, policy and standards, and advocacy and outreach. As the steward of the Open Source Definition, we have made licensing and legal work part of our core program since our founding. We serve as an anchor for open community consensus on what constitutes Open Source, and we protect the Open Source principles by enforcing the marks “Certified Open Source” and “Open Source Approved License”. Under policy and standards, we monitored policy and standards-setting organizations and supported legislators and policymakers by educating them about the Open Source ecosystem, its role in innovation, and its value for an open future. Lastly, under advocacy and outreach, we are leading global conversations with non-profits, developers, and lawyers to improve the understanding of Open Source principles and practice, and we investigate the impact of ongoing debates around Open Source, from artificial intelligence to cybersecurity.

Highlights

| Website | Membership | Newsletter |
|---|---|---|
| ~2M visitors / year (stable YoY) | ~2,800 members (+136% YoY) | ~10,100 subscribers (+25% YoY) |

We surpassed 2,500 members and 10,000 subscribers.

| Events | Workshop | Keynote | Talk | Webinar |
|---|---|---|---|---|
| 36 | 6 | 12 | 24 | 18 |

Licensing and Legal

The License Review working group continued to examine and improve the license review process and created a systematic, well-ordered database of all the licenses submitted to the OSI for approval since the organization’s founding. The OSI also worked toward establishing an open governance model for ClearlyDefined, an open source project whose mission is to create a global database of licensing metadata for every software component ever published. This year, GitHub added 17.5 million package licenses sourced from ClearlyDefined to its database, expanding license coverage for packages that appear in the dependency graph, dependency insights, dependency review, and a repository’s software bill of materials (SBOM).

OSI Approved Licenses

We provide a venue for the community to discuss Open Source licenses and we maintain the OSI Approved Licenses database.

ClearlyDefined

We aim to crowdsource a global database of licensing metadata for every software component ever published for the benefit of all.

List of articles:

- Most popular licenses for each language in 2023

- License Consistency Working Group begins license review

- How the OSI checks if new licenses comply with the Open Source Definition

- The Approved Open Source Licenses never looked better

- Webinar: ClearlyDefined proceeding towards a clear governance structure

- What Is Open Governance? Drafting a charter for an Open Source project

- Diving in to Open Source supply chain; connecting and collaborating with communities

- Open Source Approved License® registry project underway with help of intern, Giulia Dellanoce

- ClearlyDefined gets a new community manager with a vision toward the future

- The License Review working group asks for community input on its recommendations

Policy and Standards

The OSI’s senior policy directors, Deb Bryant and Simon Phipps, have been busy tracking policies affecting Open Source software, mostly in the US and Europe, and bringing different stakeholders together to voice their opinions. In particular, we are tracking the Securing Open Source Software Act in the US and the Cyber Resilience Act in Europe. In 2023, the OSI joined the Digital Public Goods Alliance and launched the Open Policy Alliance with 20 initial members, including the Apache Software Foundation, the Eclipse Foundation, and the Python Software Foundation.

Open Policy Alliance

We are bringing non-profit organizations together to participate in educating and informing public policy decisions related to Open Source software, content, research, and education.

List of articles:

- Diverse Open Source uses highlight need for precision in Cyber Resilience Act

- Convening public benefit and charitable foundations working in open domains

- Modern EU policies need the voices of the fourth sector

- Open Policy Alliance: A new program to amplify underrepresented voices in public policy development

- OSI’s comments to US Patent and Trademark Office

- OSI calls for revision of disclosure rules in CRA

- Action needed to protect against patent trolls

- Regulatory language cannot be the same for all software

- Why open video is vital for Open Source

- Another issue with the Cyber Resilience Act: European standards bodies are inaccessible to Open Source projects

- The Cyber Resilience Act introduces uncertainty and risk leaving Open Source projects confused

- The vital role of Open Source maintainers facing the Cyber Resilience Act

- Open Source ensures code remains a part of culture

- Why the European Commission must consult the Open Source communities

- Recap/Summary of the Digital Market Act workshop in Brussels

- Why Open Source should be exempt from Standard-Essential Patents

- Open Source Initiative joins the Digital Public Goods Alliance

- 2023, governments scrutinize Open Source

- The ultimate list of reactions to the Cyber Resilience Act

- What is the Cyber Resilience Act and why it’s dangerous for Open Source

Advocacy and Outreach

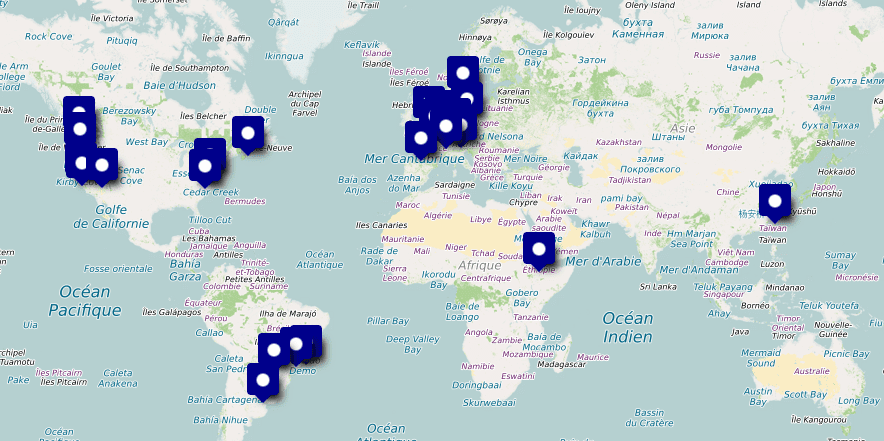

The OSI celebrated the 25th anniversary of Open Source in partnership with 36 conferences around the world, with a combined attendance of over 125,000 people. Over the year, our focus shifted from reviewing the past of Free and Open Source software to exploring the future of Open Source in this new era of AI. We organized several online and in-person activities as part of Deep Dive AI, an open multi-stakeholder process to define Open Source AI. We also organized the License Clinic, a workshop tailored for the US federal government. Finally, we launched Opensource.net as a new home for the community of writers and editors of the project formerly known as Opensource.com.

25 years of Open Source

We celebrated the 25th Anniversary by sharing the rich and interconnected history of the Free Software and Open Source movements and we explored the challenges and opportunities ahead, from AI to cybersecurity.

Deep Dive: Defining Open Source AI

We are bringing together global experts to establish a shared set of principles that can recreate, for AI practitioners, the permissionless, pragmatic, and simplified collaboration that the Open Source Definition enabled for software.

Opensource.net

We gave a new home to the community of contributors from opensource.com. The new platform supports healthy dialog and informed education on Open Source software topics.

List of articles:

- Navigating what’s next: Takeaways from the Digital Public Goods Alliance Meeting

- Open Source AI: Establishing a common ground

- DPGA members engage in Open Source AI Definition workshop

- Closing the 2023 rounds of Deep Dive AI with first draft piece of the Definition of Open Source AI

- Nerdearla reflects on openness and inclusivity

- Three highlights from Open Source Summit Europe 2023

- Opensource.com community finds new life with the Open Source Initiative

- To trust AI, it must be open and transparent. Period.

- Open Source Initiative Hosts 2nd Deep Dive AI Event, Aims to Define ‘Open Source’ for AI

- Driving the global conversation about “Open Source AI”

- COSCUP unveiled

- Celebrating 25 years of Open Source at Campus Party

- Takeaways from the “Defining Open AI” community workshop

- Meta’s LLaMa 2 license is not Open Source

- Three takeaways from Data + AI Summit

- Towards a definition of “Open Artificial Intelligence”: First meeting recap

- Digital activists and open movement leaders share their perspective with Open Future in new research report, “Shifting tides: the open movement at a turning point”

- Now is the time to define Open Source AI

- The AI renaissance and why Open Source matters

- The importance of Open Source AI and the challenges of liberating data

- Things I learned at Brussels to the Bay: AI governance in the world

- Why advocacy and outreach matter

- 2023 State of Open Source Report: key findings and analysis

- OSI to hold License Clinic

- The 2023 State of Open Source Report confirms security as top issue

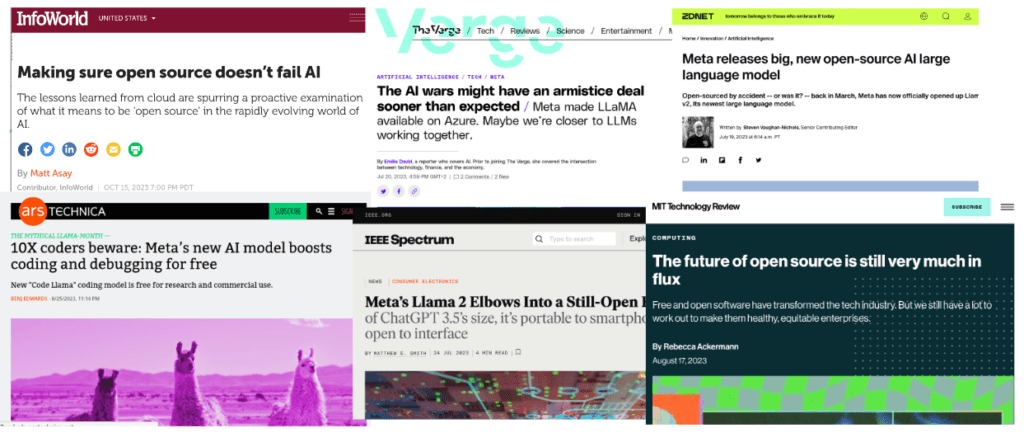

Press mentions

In 2023, the Open Source Initiative was cited 100 times in the press worldwide, helping to educate the public and counter misinformation. Our work was featured in The Verge, TechCrunch, ZDNET, InfoWorld, Ars Technica, IEEE Spectrum, and MIT Technology Review, among other top media outlets.

List of select articles:

- Applying the lessons of open source to generative AI — InfoWorld

- What is the EU’s Cyber Resilience Act (CRA)? — ITPro

- Open Source AI and the Llama 2 Kerfuffle — The New Stack

- The future of open source is still very much in flux — MIT Technology Review

- 7 Takeaways On The State And Future Of OSS Disruption — Forbes

- Meta’s Llama 2 Elbows Into a Still-Open Field — IEEE Spectrum

- Meta can call Llama 2 open source as much as it likes, but that doesn’t mean it is — The Register

- So, that’s the end of OpenAI’s ChatGPT moat — Business Insider

- The AI wars might have an armistice deal sooner than expected — The Verge

- Open source licenses need to leave the 1980s and evolve to deal with AI — The Register

- EU’s Cyber Resilience Act contains a poison pill for open source developers — The Register

Events

The Open Source Initiative contributed keynotes, panels, talks, and other activities to 36 events worldwide throughout 2023, including the top tech and open source conferences, with a combined attendance of over 125,000 people.

List of events:

- EU Open Source Policy Summit

- FOSDEM

- State of Open Con

- SCALE 20x

- FOSS Backstage

- ORT Community Days

- Open Source License Clinic

- FOSS North

- Web Summit Rio

- Open Source Summit NA

- OpenInfra Summit

- Data + AI Summit

- FOSSY

- Campus Party Brazil

- Open Source Congress

- COSCUP

- North Bay Python

- Diana Initiative

- Black Hat US

- Ai4

- DEFCON

- NextCloud Conference

- Open Source Summit EU

- Nerdearla

- Deep Dive: AI Webinar Series

- Community Leadership Summit

- All Things Open

- EclipseCon

- Latinoware

- Linux Foundation Member Summit

- Community over Code

- SFScon

- Digital Public Goods Alliance Meeting

- Open Source Experience

- AI.dev

EU Open Source Policy Summit

In Support of Sound Public Policy: How to Avoid Unintended Consequences to OSS in Lawmaking

Deborah Bryant, James Lovegrove, Maarten Aertse, Simon Phipps

As Open Source has taken on monumental importance in digital markets, becoming the main model for software development, its exposure to regulatory risk has increased. In just the last few years we have seen policymakers, often despite their best intentions, unintentionally targeting Open Source developers, repositories, or the innovation model itself. For example, the Copyright Directive and, more recently, the AI Act and the Cyber Resilience Act have all created unintended regulatory risk for OSS. In this panel, we will discuss the status of ongoing files, but also take a few steps back and suggest how policymakers can avoid these unintended consequences. How should developers and communities be considered in the legislative process? How does the very horizontal Open Source ecosystem fit into the EU system of vertical multistakeholderism? What is the responsibility of Open Source experts to engage earlier with policymakers?

FOSDEM

Celebrating 25 years of Open Source: Past, Present, and Future

Nick Vidal

February 2023 marks the 25th Anniversary of Open Source. This is a huge milestone for the whole community to celebrate! In this session, we’ll travel back in time to understand our rich journey so far, and look forward towards the future to reimagine a new world where openness and collaboration prevail. Come along and celebrate with us this very special moment!

The open source software label was coined at a strategy session held on February 3rd, 1998, in Palo Alto, California. That same month, the Open Source Initiative (OSI) was founded as a general educational and advocacy organization to raise awareness of the superiority of an open development process and to encourage its adoption. One of the first tasks undertaken by the OSI was to draft the Open Source Definition (OSD). To this day, the OSD is considered a gold standard of open source licensing.

In this session, we’ll cover the rich and interconnected history of the Free Software and Open Source movements, and demonstrate how, against all odds, open source has come to “win” the world. But have we really won? Open source has always faced an extraordinary uphill battle: from misinformation and FUD (Fear, Uncertainty, and Doubt) constantly spread by the most powerful corporations, to issues around sustainability and inclusion.

We’ll navigate this rich history of open source and dive right into its future, exploring the many challenges and opportunities ahead, including its key role in fostering collaboration and innovation in emerging areas such as ML/AI and cybersecurity. We’ll share an interactive timeline during the presentation and throughout the year, inviting the audience and the community at large to share their open source stories and dreams with each other.

Open Source Initiative – Changes to License Review Process

Pamela Chestek

The Open Source Initiative is working on making improvements to its license review process and has a set of recommendations for changes it is considering making, available [link to be provided]. This session will review the proposed changes and also take feedback from the participants on what it got right, what it got wrong, and what it might have missed.

The License Review Working Group of the Open Source Initiative was created to improve the license review process. In the past, the process has been criticized as unpredictable, difficult to navigate, and reliant on undisclosed requirements. The Working Group developed a set of recommendations for revising the process for reviewing and approving or rejecting licenses submitted to the OSI. The recommendations include separate review standards for new and legacy licenses, a revised group of license categories, and some specific requirements for license submissions. The recommendations are available [link to be provided] and the OSI is in the feedback stage of its process, seeking input on the recommendations. The session will review the proposed changes and also take feedback from the participants on what it got right, what it got wrong, and what it might have missed.

The role of Open Infrastructure in digital sovereignty

Thierry Carrez

Pandemics and wars have woken up countries and companies to the strategic vulnerabilities in their infrastructure dependencies, with digital sovereignty now being a top concern, especially in Europe.

In this short talk, Thierry Carrez, General Manager of the Open Infrastructure Foundation, will explore the critical role open source plays in enabling digital sovereignty. In particular, he will explore how Open Infrastructure (open source solutions for providing infrastructure), with its interoperability, transparency, and independence properties, is essential to achieving data and computing sovereignty.

SCALE 20x

Defining an Open Source AI

Stefano Maffulli

The traditional view of open source code implementing AI algorithms may not be sufficient to guarantee the inspectability, modifiability, and replicability of AI systems. The Open Source Initiative is leading an exploration of the world of AI and Open Source, probing the boundaries between data and software to discover how concepts such as copying, distributing, and modifying source code apply in the context of AI.

AI systems are already deciding who stays in jail or which customers deserve credit to buy a house. More kinds of “autonomous” systems are appearing so fast that government regulators are rushing to define policies.

Artificial Intelligence/Machine learning, explained at a high level, is a type of complex system that combines code to create and train/tune models, and data used for training and validation to generate artifacts. The most common tools are implemented with open source software like TensorFlow or PyTorch. But from a practical perspective, these packages are not sufficient to enable a user to exercise their rights to run, study, modify and redistribute a “machine learning system.” What’s the definition of open source in the context of AI/ML? Where is the boundary between data and software? How do we apply copyleft to software that can identify your cats in your collection of pictures?

FOSS Backstage

Securing OSS across the whole supply chain and beyond

Nick Vidal

As we celebrate the triumph of open source software on its 25th anniversary, at the same time we have to acknowledge the great responsibility that its pervasiveness entails. Open source has become a vital component of a working society and there’s a pressing need to secure it across the whole supply chain and beyond. In this session, we’ll take the opportunity to look at three major advancements in open source security, from SBOMs and Sigstore to Confidential Computing.

Open source plays a vital role in modern society given its pervasiveness in the Cloud, mobile devices, IoT, and critical infrastructure. Securing it at every step in the supply chain and beyond is of the utmost importance.

As we prepare for the “next Log4Shell”, several technologies are emerging on the horizon, among them SBOMs, Sigstore, and Confidential Computing. In this session, we’ll explore these technologies in detail.

SBOMs (Software Bills of Materials) allow developers to track the dependencies of their software and ensure that they are using secure and reliable packages, while Sigstore allows developers to verify the authenticity and integrity of open source packages, ensuring that the code has not been tampered with or compromised.
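To make the dependency-tracking idea concrete, here is a minimal sketch, assuming the CycloneDX JSON format (one of several SBOM standards; the session does not name a specific one). The `Dependency` type, the `make_sbom` helper, and the sample packages are hypothetical; real SBOMs are normally generated by build or scanning tools and carry far more detail.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    """One third-party package (hypothetical input format for this sketch)."""
    name: str
    version: str
    purl: str  # Package URL, e.g. "pkg:pypi/requests@2.31.0"


def make_sbom(deps: list[Dependency]) -> dict:
    """Build a minimal CycloneDX-style SBOM as a plain dict.

    Only a few fields are filled in; a production SBOM would also record
    hashes, licenses, suppliers, and the relationships between components.
    """
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": [
            {
                "type": "library",
                "name": d.name,
                "version": d.version,
                "purl": d.purl,
            }
            # Sort so the output is deterministic regardless of input order.
            for d in sorted(deps, key=lambda d: (d.name, d.version))
        ],
    }


if __name__ == "__main__":
    deps = [
        Dependency("requests", "2.31.0", "pkg:pypi/requests@2.31.0"),
        Dependency("idna", "3.4", "pkg:pypi/idna@3.4"),
    ]
    print(json.dumps(make_sbom(deps), indent=2))
```

Having a machine-readable inventory like this is the prerequisite for the checks the talk alludes to, such as matching each listed component against known-vulnerability data.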

Confidential Computing, on the other hand, protects code and data in use by performing computation in a hardware-based, attested Trusted Execution Environment, ensuring that sensitive code and data cannot be accessed or tampered with by unauthorized parties, even if an attacker were to gain access to the computing infrastructure.

SBOMs, Sigstore, and Confidential Computing provide a powerful combination to address security concerns and ensure the integrity and safety of open source software and data. They focus on “security first,” rather than perpetuating existing approaches that have typically attempted to bolt on security measures after development, or that rely on multiple semi-connected processes throughout development to provide marginal improvements to the overall security of an application and its deployment.

As we celebrate the 25th anniversary of open source, these three emerging technologies represent a step forward in securing OSS across the whole supply chain and beyond. We foresee them playing a key role in minimizing the risk of vulnerabilities and protecting software and data against potential attacks, providing greater assurances for society as a whole.

Podcast: SustainOSS

Nick Vidal, Richard Littauer

ORT Community Days

Presenting ClearlyDefined

Nick Vidal

Nick Vidal, the new community manager for ClearlyDefined, will provide a brief background on the project and later focus on gathering feedback from the audience as to the next steps for how ClearlyDefined can best serve the community.

Open Source License Clinic

Open Source Licenses 201

Pam Chestek

This essential Clinic session is an advanced primer on open source licenses: why one should care about them, which are most commonly used, and why. It also offers insights into the OSI license review process, who is involved in considering and approving new licenses against the Open Source Definition, and which licenses have been approved in the last five years. Topics include challenges, successes, best practices, operational policies, and resources. The briefing is followed by an expert panel discussion.

SBOM This, and SBOM That

Aeva Black

Just a few years ago, the notion of a Software Bill of Materials (SBOM) was centered around open source licenses. How has it changed, and why is it increasingly being called out by governments around the world as a key component of software transparency? The presenter will share a history of the SBOM, its evolution, and its role in cybersecurity today. The session will be followed by a Q&A.

Are AI Models the New Open Source Projects?

Justin Colannino

Communities of machine learning developers are working together and creating thousands of powerful ML models under open source and other public licenses. But these licenses are for software, and ML is different. This briefing discusses how to square ML with open source software licenses and collaboration practices, followed by a panel discussion on the implications that ML and its growing communities have for the future of open source software development.

Alternative Licenses

Luis Villa

The past several years have seen an increase in the number of software licenses which appear to nod to open source software (OSS) licenses – those conforming with the Open Source Definition (OSD) – but are developed to meet different objectives, often withholding some benefits of OSS. What are the emerging patterns in the creation of new licensing strategies? The briefing offers a look at the current landscape and provides an opportunity to answer questions and discuss concerns.

FOSS North

Speaking Up For FOSS Now Everyone Uses It

Simon Phipps

At 40 years old, FOSS has become a full citizen in modern society. By popularising and catalysing the pre-existing concepts from the free software movement, open source has moved to the heart of the connected technology revolution over the last 25 years. In Europe, it now drives nearly 100 billion euros of GDP. Unsurprisingly, it is now the focus of much political attention from all directions, including regulators and detractors. Today everyone wants to be FOSS, including many who really don’t but want the cachet.

In 2022, the mounting wave broke and legislation affecting our movement cascaded into view in the USA and Europe. In Europe, the DSA, Data Act, AI Act, CRA, PLD, and several more major legislative works emerged from the Digital Agenda. Despite its apparent awareness of open source, this legislation appeared ill-suited for the reality of our communities. Why is that? Where do standards come into this? Where is this heading?

Simon Phipps is currently director of standards and EU policy for the Open Source Initiative, where he was previously a member of the board of directors and board President. He has also served as a director at The Document Foundation, the UK’s Open Rights Group and other charities and non-profits. Prior to that, he ran one of the first OSPOs at Sun Microsystems, was one of the founders of IBM’s Java business, worked on video conference software and standards at IBM and was involved with workstation and networking software at Unisys/Burroughs. A European rendered stateless by British politics, he lives in the UK.

Web Summit Rio

Open Source Summit NA

OmniBOR: Bringing the Receipts for Supply Chain Security

Aeva Black, Ed Warnicke

Supply chain requirements got you down? Getting an endless array of false positives from your ‘SBOM scanners’? Spending more of your time proving you don’t have a ‘false positive’ from your scanners than fixing real vulnerabilities in your code? There has to be a better way. There is. Come hear from Aeva and Ed about a new way to capture the full artifact dependency graph of your software, not as a ‘scan’ after the fact, but as an output of your build tools themselves. Find out when this feature is coming to a build tool near you.
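OmniBOR identifies artifacts by gitoids, the same content hashes Git uses for blob objects, so a build tool can record exactly which inputs went into each output. The sketch below assumes the SHA-1 variant only; `gitoid_sha1` and `input_manifest` are hypothetical helpers, and the manifest here imitates the idea in spirit rather than OmniBOR's actual on-disk format.

```python
import hashlib


def gitoid_sha1(content: bytes) -> str:
    """Return the Git-style blob hash of `content`.

    Git hashes a blob as: b"blob " + decimal byte length + NUL + raw bytes,
    so the result matches `git hash-object` for the same bytes.
    """
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


def input_manifest(inputs: list[bytes]) -> list[str]:
    """Sorted gitoids of the inputs used to build one output artifact.

    Hypothetical: this only illustrates how a build step could record,
    at build time, the exact content-addressed inputs behind an artifact.
    """
    return sorted(gitoid_sha1(blob) for blob in inputs)


if __name__ == "__main__":
    # The empty blob has a well-known Git object ID.
    print(gitoid_sha1(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
    print(input_manifest([b"int main(void) { return 0; }\n", b"#pragma once\n"]))
```

Because identifiers are derived from content alone, the same input always yields the same ID, which is what lets a recorded graph be checked later instead of re-scanned.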

OpenInfra Summit

Rising to the Supply Chain Security Challenge in Global Open Source Communities

Aeva Black

Policy-makers around the world are debating how best to secure the open source components in software supply chains critical to national infrastructure. For individuals who are not steeped in open source communities’ culture, it can seem logical to apply paradigms designed to model the commercial supply chain of physical goods – but this could lead to catastrophic results for open source projects, where liability is expressly disclaimed in the license and contributors are often unpaid volunteers willing to share their time and ingenuity. What, then, should each of us do?

The OpenInfra community was an early leader in defining secure build practices for a large open source project. Comparable processes are now recommended for all open source projects, and are reflected in frameworks published by the OpenSSF… but even more might soon be necessary.

FOSSY

Keeping Open Source in the Public Interest

Stefano Maffulli

Following an explosion of growth in open collaboration to solve the world’s most urgent problems during the 2020 global Covid-19 pandemic, open source software moved from the mainstream to the world’s main stage. In 2022, the UN-endorsed Digital Public Goods (DPG) Alliance began formally certifying open source software as DPGs; the European Union wrote open source into its road map; and both the EU and the US began crafting cybersecurity legislation in support of secure software, not targeting OSS as a specific concern but rather protecting and investing in it as critical to their own and their citizens’ interests.

The OSI has recognized these important sea changes in the environment, including unprecedented interest in open source in public arenas. Stefano Maffulli’s briefing will provide an overview of important trends in Open Source Software in public policy, philanthropy, and research, and talk about a new initiative at the OSI designed to bring open voices to the discussion.

Workshop – Defining Open Source AI

Stefano Maffulli

Join this impromptu meeting to share your thoughts on what it means for Artificial Intelligence and Machine Learning systems to be “open”. The Open Source Initiative will host this lunch break to hear from the FOSSY participants what they think should be the shared set of principles that can recreate the permissionless, pragmatic, and simplified collaboration for AI practitioners, similar to what the Open Source Definition has done.

Campus Party Brazil

25 years of Open Source

Nick Vidal, Bruno Souza

Link to video recording (Portuguese)

This year we celebrate 25 years of Open Source. This is a huge milestone for the whole community! In this session, we will travel back in time to understand our rich history and how, despite all the battles fought, we came to conquer the world, and are now present in every corner, from the Web to the Cloud. Then we will dive straight into the future, exploring the many challenges and opportunities ahead, including its fundamental role in promoting collaboration and innovation in emerging areas such as Artificial Intelligence and Cybersecurity. We will share an interactive timeline during the presentation and invite the audience and the community at large to share their open source stories and dreams with one another.

The future of Artificial Intelligence: Sovereignty and Privacy with Open Source

Nick Vidal, Aline Deparis

Link to video recording (Portuguese)

The future of Artificial Intelligence is being defined at this very moment, in an incredible battle between large companies and a community of entrepreneurs, developers, and researchers around the world. There are two paths we can take: one in which code, models, and data are proprietary and heavily regulated, or another in which code, models, and data are open. One path will lead to the monopolization of AI by a few large corporations, where end users will have limited power and privacy, while the other will democratize AI, allowing any individual to study, adapt, contribute, innovate, and build businesses on top of these foundations with full control and respect for privacy.

Open Source Congress

Panel: Does AI Change Everything? What is Open? Liability, Ethics, Values?

Joanna Lee, The Linux Foundation; Satya Mallick, OpenCV; Mohamed Nanabhay, Mozilla Ventures; Stefano Maffulli, OSI

The rapid advancements in artificial intelligence (AI) have ushered in a new era of possibilities and challenges across various sectors of society. As AI permeates our lives, it is crucial to foster a comprehensive understanding of its implications. The panel will bring together experts from diverse backgrounds to engage in a thought-provoking dialogue on the current challenges for AI in open source. Panelists will address the critical challenges facing the ecosystem, including the need to align on a definition of open AI and how to foster collaboration between and among open source foundations; they will also explore avenues for improvement and identify current cross-foundation initiatives, all to improve the state of open source AI.

Defining “Open” AI/ML

Stefano Maffulli

Join this impromptu meeting to share your thoughts on what it means for Artificial Intelligence and Machine Learning systems to be “open”. The Open Source Initiative will host this session to hear from the Open Source Congress participants what they think should be the shared set of principles that can recreate the permissionless, pragmatic, and simplified collaboration for AI practitioners, similar to what the Open Source Definition has done.

COSCUP


The Yin and Yang of Open Source: Unveiling the Dynamics of Collaboration, Diversity, and Cultural Transformation

Paloma Oliveira

“The Yin and Yang of Open Source” is a captivating exploration of the intricate relationship between collaboration, diversity, and open source culture. Looking into its rich history, benefits, challenges, and current issues, with a particular focus on its influence in cultural transformation, the talk aims to inspire a deeper appreciation for the immense power of free and open source philosophy and practical application. It emphasizes the importance of responsible practices and the creation of inclusive communities, urging us to embrace this transformative force and actively contribute to a future that is more inclusive and collaborative.

North Bay Python

Nextcloud Conference

The Fourth Sector: an often overlooked and misunderstood sector in the European worldview

Simon Phipps

Simon Phipps is known for his time at Sun Microsystems, where he took over leadership of Sun’s open source program and ran one of the first OSPOs. During the 2000s, most of Sun’s core software was released under open source licenses, including Solaris and Java (earlier, in the 1990s, he had helped co-establish IBM’s Java business). When Sun was broken up in 2010, he was freed to focus purely on open source and dedicated time to re-imagining the Open Source Initiative (OSI), the non-profit organization that acts as steward of the canonical list of open source licenses and the Open Source Definition. Today Simon leads OSI’s work educating European policymakers about the needs of the open source community.

Open Source Summit EU


Keynote: The Evolving OSPO

Nithya Ruff

The OSPO or open source program office has become a well-established institution for driving open source strategy and operations inside companies and other institutions. And 2023 has been a year of strong change and growth for OSPOs everywhere. This keynote will take a look at new challenges and opportunities that face OSPOs today.

Panel Discussion: Why Open Source AI Matters: The EU Community & Policy Perspective

Justin Colannino; Astor Nummelin Carlberg; Ibrahim Haddad; Sachiko Muto; Stefano Maffulli

Nerdearla


Celebrating 25 years of Open Source

Nick Vidal

February 2023 marks the 25th Anniversary of Open Source. This is a huge milestone for the whole community to celebrate! In this session, we’ll travel back in time to understand our rich journey so far, and look forward towards the future to reimagine a new world where openness and collaboration prevail. Come along and celebrate with us this very special moment! The open source software label was coined at a strategy session held on February 3rd, 1998 in Palo Alto, California. That same month, the Open Source Initiative (OSI) was founded as a general educational and advocacy organization to raise awareness and adoption for the superiority of an open development process. One of the first tasks undertaken by OSI was to draft the Open Source Definition (OSD). To this day, the OSD is considered a gold standard of open-source licensing. In this session, we’ll cover the rich and interconnected history of the Free Software and Open Source movements, and demonstrate how, against all odds, open source has come to “win” the world. But have we really won? Open source has always faced an extraordinary uphill battle: from misinformation and FUD (Fear, Uncertainty, and Doubt) constantly spread by the most powerful corporations, to issues around sustainability and inclusion. We’ll navigate this rich history of open source and dive right into its future, exploring the many challenges and opportunities ahead, including its key role in fostering collaboration and innovation in emerging areas such as ML/AI and cybersecurity. We’ll share an interactive timeline during the presentation and throughout the year, inviting the audience and the community at large to share their open source stories and dreams with each other.

Deep Dive: AI Webinar Series


The Turing Way Fireside Chat: Who is building Open Source AI?

Jennifer Ding, Arielle Bennett, Anne Steele, Kirstie Whitaker, Marzieh Fadaee, Abinaya Mahendiran, David Gray Widder, Mophat Okinyi

Facilitated by Jennifer Ding and Arielle Bennett of The Turing Way and the Alan Turing Institute, this panel will feature highlights from Abinaya Mahendiran (Nunnari Labs), Marzieh Fadaee (Cohere for AI), David Gray Widder (Cornell Tech), and Mophat Okinyi (African Content Moderators Union). As part of conversations about defining open source AI hosted by the Open Source Initiative (OSI), The Turing Way is hosting a panel discussion centering key communities who are part of building AI today, whose contributions are often overlooked. Through a conversation with panellists from content moderation, data annotation, and data governance backgrounds, we aim to highlight different kinds of contributors whose work is critical to the Open Source AI ecosystem, but who are often left out of governance decisions or excluded from the benefits of the AI value chain. We will focus on these different forms of work and how each is recognised and rewarded within the open source ecosystem, with an eye to what is happening now in the AI space. In the spirit of an AI openness that promotes expanding diverse participation, democratising governance, and inviting more people to shape and benefit from the future of AI, we will frame a conversation that highlights current best practices as well as legal, social, and cultural barriers. We hope this multi-domain, multi-disciplinary discussion can emphasise the importance of centering the communities who are integral to AI production in conversations, considerations, and definitions of “Open Source AI.”

Operationalising the SAFE-D principles for Open Source AI

Kirstie Whitaker

The SAFE-D principles (Leslie, 2019) were developed at the Alan Turing Institute, the UK’s national institute for data science and artificial intelligence. They have been operationalised within the Turing’s Research Ethics (TREx) institutional review process. In this panel we will advocate for the definition of Open Source AI to include reflections on each of these principles and present case studies of how AI projects are embedding these normative values in the delivery of their work.

The SAFE-D approach is anchored in the following five normative goals:

* **Safety and Sustainability** involve ensuring the responsible development, deployment, and use of a data-intensive system. From a technical perspective, this requires the system to be secure, robust, and reliable. From a social sustainability perspective, it requires the data practices behind the system’s production and use to be informed by ongoing consideration of the risk of exposing affected rights-holders to harms, continuous reflection on project context and impacts, ongoing stakeholder engagement and involvement, and change monitoring of the system from its deployment through to its retirement or deprovisioning.

  * Our recommendation: Open source AI must be safe and sustainable, and open ways of working ensure that “many eyes make all bugs shallow”. Having a broad and engaged community involved throughout the AI workflow keeps infrastructure more secure and keeps the purpose of the work aligned with the needs of the impacted stakeholders.

* **Accountability** can include specific forms of process transparency (e.g., as enacted through process logs or external auditing) that may be necessary for mechanisms of redress, or broader processes of responsible governance that seek to establish clear roles of responsibility where transparency may be inappropriate (e.g., confidential projects).

  * Our recommendation: Open source AI should have clear accountability documentation and processes for raising concerns. These are already common practice in open source communities, including through codes of conduct and requests for comment for extensions or breaking changes.

* **Fairness and Non-Discrimination** are inseparably connected with sociolegal conceptions of equity and justice, which may emphasize a variety of features such as equitable outcomes or procedural fairness through bias mitigation, but also social and economic equality, diversity, and inclusiveness.

  * Our recommendation: Open source AI should clearly communicate how the AI model and workflow consider equity and justice. We hope that the open source AI community will embed existing tools for bias reporting into an interoperable open source AI ecosystem.

* **Explainability and Transparency** are key conditions for autonomous and informed decision-making in situations where data processing interacts with or influences human judgement and decision-making. Explainability goes beyond the ability to merely interpret the outcomes of a data-intensive system; it also depends on the ability to provide an accessible and relevant information base about the processes behind the outcome.

  * Our recommendation: Open source AI should build on the strong history of transparency that is the foundation of the definition of open source: access to the source code, data, and documentation. We are confident that current open source ways of working will enhance transparency and explainability across the AI ecosystem.

* **Data quality, integrity, protection and privacy** must all be established before we can be confident that data-intensive systems and models have been developed on secure grounds.

  * Our recommendation: Even where data cannot be made openly available, there should be accountability and transparency around how the data is gathered and used.

The agenda for the session will be:

1. Prof David Leslie will give an overview of the SAFE-D principles.

2. Victoria Kwan will present how the SAFE-D principles have been operationalised for institutional review processes.

3. Dr Kirstie Whitaker will propose how the institutional process can be adapted for decentralised adoption through a shared definition of Open Source AI.

The final 20 minutes will be a panel responding to questions and comments from the audience.

Commons-based data governance

Alek Tarkowski, Zuzanna Warso

Issues related to data governance (its openness, provenance, transparency) have traditionally been outside the scope of open source frameworks. Yet the development of machine learning models shows that concerns over data governance should be in the scope of any approach that aims to govern open-source AI in a holistic way. In this session, I would like to discuss issues such as:

– the need for openly licensed / commons-based data sources;

– the feasibility of a requirement to openly share any data used in the training of open-source models;

– transparency and provenance requirements that could be part of an open-source AI framework.
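As one illustration of what a provenance and transparency requirement could look like in practice, the sketch below records, for each training data source, its origin, a declared license and a content hash, and serializes them into a manifest. The record fields (`source`, `license_id`, `sha256`) and the manifest shape are hypothetical assumptions for illustration; they are not part of any OSI framework or of the speakers' proposal.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass
class DataSourceRecord:
    """Hypothetical provenance entry for one training data source."""
    source: str      # where the data came from (URL, archive name, etc.)
    license_id: str  # declared license, e.g. an SPDX identifier
    sha256: str      # content hash, so others can verify they hold the same bytes


def record_source(source: str, license_id: str, content: bytes) -> DataSourceRecord:
    # Hash the raw bytes so the exact data used for training is identifiable.
    digest = hashlib.sha256(content).hexdigest()
    return DataSourceRecord(source=source, license_id=license_id, sha256=digest)


def build_manifest(records: list[DataSourceRecord]) -> str:
    # Serialize deterministically so the manifest itself can be diffed and hashed.
    return json.dumps([asdict(r) for r in records], indent=2, sort_keys=True)


if __name__ == "__main__":
    records = [
        record_source("example-corpus-a", "CC-BY-4.0", b"first document\n"),
        record_source("example-corpus-b", "CC0-1.0", b"second document\n"),
    ]
    print(build_manifest(records))
```

Publishing such a manifest does not require publishing the data itself, which is one way transparency could be separated from open sharing.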

Preempting the Risks of Generative AI: Responsible Best Practices for Open-Source AI Initiatives

Monica Lopez, PhD

As artificial intelligence (AI) has proliferated across many industries and use cases, changing the way we work, interact and live with one another, AI-enabled technology poses two intersecting challenges to address: the influencing of our beliefs and the engendering of new means for nefarious intent. Such challenges resulting from human psychological tendencies can inform the type of governance needed to ensure safe and reliable generative AI development, particularly in the domain of open-source content.

The formation of human beliefs from a subset of available data from the environment is critical for survival. While beliefs can change with the introduction of new data, the context in which such data emerge and the way in which they are communicated both matter. Our live, dynamic interactions with each other underpin our exchange of information and development of beliefs. Generative AI models are not live systems, and their internal architecture is incapable of understanding the environment to evaluate information. Given this limitation, and the use of AI as a tool by malicious actors to commit crimes, deception (the strategies humans use to manipulate others, withhold the truth, and create false impressions for personal gain) becomes further amplified by impersonal, automated means.

With the public release in November 2022 of large language models (LLMs) and other multimodal generative AI systems, we now have mass availability of tools capable of blurring the line between reality and fiction and of outputting disturbing and dangerous content. Moreover, open-source AI efforts, while laudable in their goals of creating a democratized technology, speeding up collaboration, fighting AI bias, encouraging transparency, and generating community norms and AI standards through standards bodies to encourage fairness, have highlighted the dangers of weak model traceability and the complex nature of data and algorithm provenance (e.g., PoisonGPT, WormGPT). Furthermore, regulation of the development and use of these generative systems remains incomplete and in draft form (e.g., the European Union AI Act) or exists only as voluntary commitments to responsible governance (e.g., Voluntary Commitments by Leading United States’ AI Companies to Manage AI Risks).

The above calls for a reexamination and subsequent integration of human psychology, AI ethics, and AI risk management for the development of AI policy within the open-source AI space. We propose a three-tiered solution founded on a human-centered approach that advocates human well-being and enhancement of the human condition: (1) a clarification of human beliefs and the transference of expectations onto machines as a mechanism for supporting deception with AI systems; (2) the use of (1) to re-evaluate ethical considerations such as transparency, fairness, and accountability, and their individual requirements for open-source code LLMs; and (3) a resulting set of technical recommendations that improve risk management protocols (i.e., independent audits with holistic evaluation strategies) to overcome both the problems of evaluation methods for LLMs and the rigidity and mutability of human beliefs.

The goal of this three-tiered solution is to preserve human control and fill the gap of current draft legislation and voluntary commitments, balancing the vulnerabilities of human evaluative judgement with the strengths of human technical innovation.

Data privacy in AI

Michael Meehan

Data privacy in AI is something everyone needs to plan for. As AI technology continues to advance, it is becoming increasingly important to protect the personal information that is used to train and power these systems, and to ensure that companies are using personal information properly. First, understand that AI systems can inadvertently leak the data used to train them as they produce results. This talk will give an overview of how and why this happens. Second, ensure that you have proper rights to use the data fed into your AI. This is not always a simple task, and the stakes are high. This talk will go into detail about circumstances where the initial rights were not proper, and the sometimes-catastrophic results of that. Third, consider alternatives to using real personal information to train models. One particularly appealing approach is to use the personal data to create statistically-similar synthetic data, and use that synthetic data to train your AI systems. These considerations are important to help protect personal information, or other sensitive information, from being leaked through AI. They will help to ensure that AI technology can be used safely and responsibly, and that the benefits of AI can be enjoyed with fewer risks.
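To make the idea of statistically-similar synthetic data concrete, here is a deliberately simple sketch, assuming purely numeric tabular data. It fits a mean and standard deviation per column and samples new rows from independent normal distributions. This is an illustrative assumption, not the speaker's method: it preserves only per-column statistics (not correlations between columns), and on its own it gives no formal privacy guarantee.

```python
import random
import statistics


def fit_columns(rows: list[list[float]]) -> list[tuple[float, float]]:
    """Return (mean, stdev) for each column of a numeric table (needs at least 2 rows)."""
    columns = list(zip(*rows))
    return [(statistics.fmean(col), statistics.stdev(col)) for col in columns]


def sample_synthetic(
    params: list[tuple[float, float]], n: int, seed: int = 0
) -> list[list[float]]:
    """Draw n synthetic rows, sampling each column independently from N(mean, stdev)."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]


if __name__ == "__main__":
    # Toy "personal" data: (age, annual income in thousands).
    real = [[34, 52.0], [29, 48.5], [45, 71.2], [38, 60.3], [52, 80.1]]
    params = fit_columns(real)
    synthetic = sample_synthetic(params, n=1000)
    # Column means of the synthetic table should be close to those of the real table.
    print(params)
    print([round(statistics.fmean(col), 1) for col in zip(*synthetic)])
```

Practical synthetic-data tools model joint distributions and are usually combined with privacy testing; this sketch only shows the basic fit-then-sample shape.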

Perspectives on Open Source Regulation in the upcoming EU AI Act

Katharina Koerner

This presentation will delve into the legal perspectives surrounding the upcoming EU AI Act, with a specific focus on the role of open source, non-profit, and academic research and development in the AI ecosystem. The session will cover crucial topics such as defining open data and AI/ML systems, copyrightability of AI outputs, control over code and data, data privacy, and fostering fair competition while encouraging open innovation. Drawing from existing and upcoming AI regulations globally, we will present recommendations to facilitate the growth of an open ecosystem while safeguarding ethical and accountable AI practices. Join this session for an insightful exploration of the legal landscape shaping the future of open source.

What You Will Learn in the Presentation:

The key problems faced by open source projects under the draft EU AI Act.

The significance of clear definitions and exemptions for open source AI components.

The need for effective coordination and governance to support open source development.

The challenges in implementing the R&D exception for open source AI.

The importance of proportional requirements for “foundation models” to encourage open source innovation and competition.

Recommendations to address the concerns of open source platform providers and ensure an open and thriving AI ecosystem under the AI Act.

Data Cooperatives and Open Source AI

Tarunima Prabhakar, Siddharth Manohar

Data Cooperatives have been proposed as a possible remedy for the current power disparity between the citizens/internet users who generate data and the corporations that process it. But these cooperatives may also evolve to develop their own AI models based on the pooled data. The move to develop machine learning may be driven by a need to make the cooperative sustainable or to address a need of the people pooling the data. The cooperative may consider ‘opening’ its machine learning model even if the data is not open. In this talk we will use Uli, our ongoing project to respond to gendered abuse in Indian languages, as a case study to describe the interplay between community-pooled data and open source AI. Uli relies on instances of abuse annotated by activists and researchers at the receiving end of gendered abuse. This crowdsourced data has been used to train a machine learning model to detect abuse in Indian languages. While the data and the machine learning model were made open source for the beta release, in subsequent iterations the team is considering limiting the data that is opened. This is, in part, a recognition that the project is compensating for the lack of adequate attention to non-anglophone languages by trust and safety teams across platforms. This talk will explore the different models the team is considering for licensing the data and the machine learning models built on it, and the tradeoffs between economic sustainability and public good creation in each.

Fairness & Responsibility in LLM-based Recommendation Systems: Ensuring Ethical Use of AI Technology

Rohan Singh Rajput

The advent of Large Language Models (LLMs) has opened a new chapter in recommendation systems, enhancing their efficacy and personalization. However, as these AI systems grow in complexity and influence, issues of fairness and responsibility become paramount. This session addresses these crucial aspects, providing an in-depth exploration of ethical concerns in LLM-based recommendation systems, including algorithmic bias, transparency, privacy, and accountability. We’ll delve into strategies for mitigating bias, ensuring data privacy, and promoting responsible AI usage. Through case studies, we’ll examine real-world implications of unfair or irresponsible AI practices, along with successful instances of ethical AI implementations. Finally, we’ll discuss ongoing research and emerging trends in the field of ethical AI. Ideal for AI practitioners, data scientists, and ethicists, this session aims to equip attendees with the knowledge to implement fair and responsible practices in LLM-based recommendation systems.
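One common way to make algorithmic bias in recommendations measurable, offered here as an assumption rather than the talk's own method, is to compare how much ranking exposure each group of items receives. The sketch below weights positions with the logarithmic discount used in DCG and uses hypothetical group labels; a real audit would choose groups, discounts and target shares to fit the system.

```python
import math
from collections import defaultdict


def group_exposure(ranking: list[str], group_of: dict[str, str]) -> dict[str, float]:
    """Share of total position-discounted exposure received by each item group.

    Position i (0-based) gets weight 1 / log2(i + 2), the same discount used in DCG.
    """
    exposure: dict[str, float] = defaultdict(float)
    for position, item in enumerate(ranking):
        exposure[group_of[item]] += 1.0 / math.log2(position + 2)
    total = sum(exposure.values())
    return {group: value / total for group, value in exposure.items()}


if __name__ == "__main__":
    # Hypothetical top-5 list from an LLM-based recommender, items tagged by provider size.
    ranking = ["a", "b", "c", "d", "e"]
    group_of = {"a": "large", "b": "large", "c": "large", "d": "small", "e": "small"}
    shares = group_exposure(ranking, group_of)
    # A large gap between a group's exposure share and its share of relevant items can flag bias.
    print({g: round(s, 3) for g, s in shares.items()})
```

Tracking such a metric over time is one possible input to the mitigation strategies the session discusses.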

Challenges welcoming AI in openly-developed open source projects

Thierry Carrez, Davanum Srinivas, Diane Mueller

Openly-developed open source projects are projects that are developed in a decentralized manner, fully harnessing the power of communities by going beyond open source to also require open development, open design and open community (the 4 opens). This open approach to innovation has led to the creation of very popular open source infrastructure technologies like OpenStack or Kubernetes.

With the rise of generative solutions and LLMs, we are expecting more and more code to be produced, directly or indirectly, by AI. Expected efficiencies may save millions of dollars. But at what cost? How is that going to affect the 4 opens? What are the challenges in welcoming AI in our open communities?

This webinar will explore questions such as:

– Can AI-generated code be accepted in projects under an open source license?

– How can we expect open design processes to evolve in an AI world?

– Is it possible to avoid the burden simply shifting from code authoring to code reviewing?

– What does open community mean with AI-powered participants? Is there a risk of creating a second class of community members?

Opening up ChatGPT: a case study in operationalizing openness in AI

Andreas Liesenfeld, Mark Dingemanse

Openness in AI is necessarily a multidimensional and therefore graded notion. We present work on tracking openness, transparency and accountability in current instruction-tuned large language models. Our aim is to provide evidence-based judgements of openness for over ten specific features, from source code to training data to model weights and from licensing to scientific documentation and API access. The features are grouped in three broad areas (availability, documentation, and access methods). The openness judgements can be used individually by potential users to make informed decisions for or against deployment of a particular architecture or model. They can also be used cumulatively to derive overall openness scores (tracked at https://opening-up-chatgpt.github.io). This approach allows us to efficiently point out questionable uses of the term “open source” (for instance, Meta’s Llama2 emerges as the least open of all ‘open’ models) and to incentivise developers to consider openness and transparency throughout the model development and deployment cycle (for instance, the BLOOMZ model stands out as a paragon of openness). While our focus is on LLM+RLHF architectures, the overall approach of decomposing openness into its most relevant constituent features is of general relevance to the question of how to define “open” in the context of AI and machine learning. In the spirit of open research, the framework and source code underlying our openness judgements and live tracker are themselves open source.
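The individual-plus-cumulative use of openness judgements can be sketched as follows. The feature names, the open/partial/closed scale and the 1 / 0.5 / 0 point values are assumptions for illustration only; the actual features and scoring used by the tracker at https://opening-up-chatgpt.github.io may differ.

```python
from enum import Enum


class Openness(Enum):
    """Hypothetical three-level judgement for a single feature."""
    OPEN = 1.0
    PARTIAL = 0.5
    CLOSED = 0.0


# Hypothetical subset of features, grouped into the three broad areas named in the abstract.
AREAS = {
    "availability": ["source_code", "training_data", "model_weights"],
    "documentation": ["scientific_paper", "model_card"],
    "access": ["license", "api_access"],
}


def openness_score(judgements: dict[str, Openness]) -> tuple[float, dict[str, float]]:
    """Return the overall score and a per-area breakdown (sum of feature points)."""
    per_area = {
        area: sum(judgements[feature].value for feature in features)
        for area, features in AREAS.items()
    }
    return sum(per_area.values()), per_area


if __name__ == "__main__":
    model = {
        "source_code": Openness.OPEN,
        "training_data": Openness.PARTIAL,
        "model_weights": Openness.OPEN,
        "scientific_paper": Openness.OPEN,
        "model_card": Openness.PARTIAL,
        "license": Openness.CLOSED,
        "api_access": Openness.OPEN,
    }
    total, breakdown = openness_score(model)
    print(total, breakdown)
```

Because the per-feature judgements are kept alongside the total, a user can still inspect, say, whether training data is available, rather than relying on the aggregate alone.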

Open source AI between enablement, transparency and reproducibility

Ivo Emanuilov, Jutta Suksi

Open source AI is a misnomer. AI, notably in the form of machine learning (ML), is not programmed to perform a task but to learn a task on the basis of available data. The learned model is simply a new algorithm trained to perform a specific task, but it is not a computer program proper and does not fit squarely into the protectable subject matter scope of most open source software licences. Making available the training script or the model’s ‘source code’ (eg, neural weights), therefore, does not guarantee compliance with the OSI definition of open source as it stands because AI is a collection of data artefacts spread across the ML pipeline.

The ML pipeline is formed by processes and artifacts that focus on and reflect the extraction of patterns, trends and correlations from billions of data points. Unlike conventional software, where the emphasis is on the unfettered downstream availability of source code, in ML it is transparency about the mechanics of this pipeline that takes centre stage.

Transparency is instrumental for promoting use maximisation and mitigating the risk of closure as fundamental tenets of the OSS definition. Instead of focusing on single computational artefacts (eg, the training and testing data sets, or the machine learning model), a definition of open source AI should zoom in on the ‘recipe’, ie the process of making a reproducible model. Open source AI should be less interested in the specific implementations protected by the underlying copyright in source code and much more engaged with promoting public disclosure of details about the process of ‘AI-making’.

The definition of open source software has been difficult to apply to other subject matter, so it is not surprising that AI, as a fundamentally different form of software, may similarly require another definition. In our view, any definition of open source AI should therefore focus not solely on releasing neural network weights, training script source code, or training data, important as they may be, but on the functioning of the whole pipeline such that the process becomes reproducible. To this end, we propose a definition of open source AI which is inspired by the written description and enablement requirement in patent law. Under that definition, to qualify as open source AI, the public release should disclose details about the process of making AI that are sufficiently clear and complete for it to be carried out by a person skilled in machine learning.
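To illustrate the claim that a sufficiently complete “recipe” makes the process reproducible, the toy sketch below trains a one-parameter linear model with stochastic gradient descent. Everything in the hypothetical `Recipe` (data, seed, learning rate, epochs) stands in for disclosed details such as training data and optimisation procedure; the only point shown is that anyone holding the same recipe obtains the same model. Real ML pipelines also need pinned software versions and deterministic hardware kernels, which this sketch does not address.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    """Hypothetical disclosure: everything needed to re-run training."""
    data: tuple[tuple[float, float], ...]  # (x, y) training pairs
    seed: int                              # controls initialisation and shuffling
    learning_rate: float
    epochs: int


def train(recipe: Recipe) -> float:
    """Fit y = w * x by stochastic gradient descent on squared error."""
    rng = random.Random(recipe.seed)
    w = rng.uniform(-1.0, 1.0)  # seeded random initialisation
    examples = list(recipe.data)
    for _ in range(recipe.epochs):
        rng.shuffle(examples)  # seeded shuffling keeps the example order reproducible
        for x, y in examples:
            gradient = 2 * (w * x - y) * x
            w -= recipe.learning_rate * gradient
    return w


if __name__ == "__main__":
    recipe = Recipe(
        data=((1.0, 2.1), (2.0, 3.9), (3.0, 6.2)), seed=42, learning_rate=0.01, epochs=200
    )
    # Two independent runs of the same recipe produce identical weights.
    print(train(recipe) == train(recipe), round(train(recipe), 3))
```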

This definition is obviously subject to further development and refinement in light of the features of the process that may have to be released (eg, model architecture, optimisation procedure, training data etc.). Some of these artefacts may be covered by exclusive IP rights (notably, copyright), others may not. This creates a fundamental challenge with licensing AI in a single package.

One way to deal with this conundrum is to apply the unitary approach known from the European case law on video games (eg, the ECJ Nintendo case) whereby if we can identify one expressive element that attracts copyright protection (originality), this element would allow us to extend protection to the work as a whole. Alternatively, we can adopt the more pragmatic and technically correct approach to AI as a process embedding a heterogeneous collection of artefacts. In this case, any release on open source terms that ensures enablement, reproducibility and downstream availability would have to take the form of a hybrid licence which grants cumulatively enabling rights over code, data, and documentation.

In this session, we discuss these different approaches and how the way we define open source AI and the objectives pursued with this definition may predetermine which licensing approach should apply.

Federated Learning: A Paradigm Shift for Secure and Private Data Analysis

Dimitris Stripelis

Introduction

There are situations where data relevant to a machine learning problem are distributed across multiple locations that cannot share the data due to regulatory, competitiveness, security, or privacy reasons. Federated Learning (FL) is a promising approach to learning a joint machine learning model over all the available data across silos without transferring data to a centralized location. Federated Learning was originally introduced by Google in 2017 for next-word prediction on edge devices [1]. Recently, Federated Learning has witnessed vast applicability across multiple disciplines, especially in healthcare, finance, and manufacturing.

Federated Learning Training

Typically, a federated environment consists of a centralized server and a set of participating devices. Instead of sending their raw data to the central server, devices only send local model parameters trained over their private data. This computational approach significantly changes how machine learning and deep learning models are traditionally trained. Compared to centralized machine learning, where data need to be aggregated in a central location, Federated Learning allows data to stay at their original location, improving data security and reducing associated data privacy risks. When Federated Learning is used to train models across multiple edge devices (e.g., mobile phones, sensors, and the like), it is known as cross-device FL; when applied across organizations, it is known as cross-silo FL.
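The training loop described above can be sketched with federated averaging (FedAvg), a widely used aggregation scheme, reduced here to a toy one-parameter model. The client datasets, model and hyperparameters are hypothetical. The sketch simulates everything in one process, but `server_aggregate` only receives (weight, sample count) pairs, never raw data, mirroring the property described in the text.

```python
from collections.abc import Sequence

Dataset = Sequence[tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Client side: refine the global weight on local data (model: y = w * x)."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x
    return w


def server_aggregate(updates: list[tuple[float, int]]) -> float:
    """Server side: average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def federated_round(w_global: float, clients: list[Dataset]) -> float:
    # In a real deployment each local_update runs on its own device or silo;
    # only the resulting (weight, sample count) pair is sent to the server.
    updates = [(local_update(w_global, data), len(data)) for data in clients]
    return server_aggregate(updates)


if __name__ == "__main__":
    # Three hypothetical silos whose data all roughly follow y = 3x.
    clients = [
        [(1.0, 3.1), (2.0, 5.9)],
        [(1.5, 4.4), (3.0, 9.2), (0.5, 1.6)],
        [(2.5, 7.4)],
    ]
    w = 0.0
    for _ in range(20):
        w = federated_round(w, clients)
    print(round(w, 2))  # approximately 3.0
```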

Secure and Private Federated Learning

Federated Learning addresses some data privacy concerns by ensuring that sensitive data never leaves the user’s device. Individual data remains on the device, significantly reducing the risk of data leakage, while users actively participate in the data analysis process and maintain control over their personal information. However, Federated Learning is not always secure and private out of the box. The federated model can still leak sensitive information if not adequately protected [3], and an eavesdropper or adversary can still intercept the federated training procedure through the communication channels. To alleviate this, Federated Learning has to be combined with privacy-preserving and secure data analysis mechanisms, such as Differential Privacy [4] and Secure Aggregation [5] protocols. Differential Privacy can ensure that sensitive personal information is still protected even under unauthorized access, while Secure Aggregation protocols enable model aggregation even under collusion attacks.
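The core idea behind many Secure Aggregation protocols is pairwise masking: each pair of clients shares a random mask that one adds and the other subtracts, so each masked update looks random to the server while the masks cancel in the sum. This is a simplified sketch of that idea only, assuming integer-encoded updates and a shared modulus; it omits the key agreement, dropout recovery and other machinery real protocols require, and it does not by itself provide Differential Privacy.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo this value (assumed integer encoding)


def pairwise_masks(n_clients: int, seed: int = 0) -> list[int]:
    """Net mask per client: for each pair (i, j) with i < j, i adds m_ij and j subtracts it.

    In a real protocol each pair derives m_ij from a shared secret; one RNG stands in here.
    """
    rng = random.Random(seed)
    net = [0] * n_clients
    for i in range(n_clients):
        for j in range(i + 1, n_clients):
            m = rng.randrange(MODULUS)
            net[i] = (net[i] + m) % MODULUS
            net[j] = (net[j] - m) % MODULUS
    return net


def mask_update(update: int, mask: int) -> int:
    """What a client actually sends: its update hidden behind its net mask."""
    return (update + mask) % MODULUS


def aggregate(masked: list[int]) -> int:
    """Server sums the masked values; the pairwise masks cancel out."""
    return sum(masked) % MODULUS


if __name__ == "__main__":
    updates = [17, 42, 5, 100]  # hypothetical integer-encoded client updates
    masks = pairwise_masks(len(updates), seed=7)
    masked = [mask_update(u, m) for u, m in zip(updates, masks)]
    print(masked)             # individually uninformative to the server
    print(aggregate(masked))  # equals sum(updates) = 164
```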

Conclusion

In a data-driven world, prioritizing data privacy and secure data analysis is not just a responsibility but a necessity. Federated Learning emerges as a game-changer in this domain, empowering organizations to gain insights from decentralized data sources while safeguarding data privacy. By embracing Federated Learning, we can build a future where data analysis and privacy coexist harmoniously, unlocking the full potential of data-driven innovations while respecting the fundamental rights of privacy.

Should OpenRAIL licenses be considered OS AI Licenses?

Daniel McDuff, Danish Contractor, Luis Villa, Jenny Lee

Advances in AI have been enabled in part by open source (OS), which has permeated ML research both in academia and industry. However, there are growing concerns about the influence and scale of AI models (e.g., LLMs) on people and society. While openness is a core value for innovation in the field, openness is not enough and does not address the risks of harm that might exist when AI is used negligently or maliciously. A growing category of licenses are open responsible AI licenses (https://www.licenses.ai/ai-licenses), which include behavioral-use clauses; these include high-profile projects such as Llama2 (https://ai.meta.com/llama/) and Bloom (https://bigscience.huggingface.co/blog/bloom). In this proposed session the panelists would discuss whether OpenRAIL (https://huggingface.co/blog/open_rail) licenses should be considered OS AI licenses.

Topics will include: whether the definition of OS is adequate for AI systems; whether OS for AI systems requires open-sourcing every aspect of the model (data, model, source) and whether that is feasible; how data use requirements could be included in such a definition; and, therefore, whether the inclusion of behavioral-use restrictions is at odds with any future definition of OS AI. In responding to these questions the panelists will discuss how the components of AI systems (e.g., data, models, source code, applications) each have different properties and whether this is part of the motivation for a new form of licensing. The speakers have their own experience of building, distributing and deploying AI systems and will provide examples of these considerations in practice.

Copyright — Right Answer for Open Source Code, Wrong Answer for Open Source AI?

McCoy Smith

Open source has always found its legal foundation primarily in copyright. Although many codes of behavior around open source have been adopted and promulgated by various open source communities, in the end it is the license attached to any piece of open source that dictates how it may be used and what obligations a user must abide by in order to remain legally compliant.

Artificial Intelligence is raising, and will continue to raise, profound questions about how copyright law applies (or does not apply) to the process of ingesting training content, processing that content to extract information used to generate output, what that information is, and the nature of the output produced.

Much debate, and quite a bit of litigation, has recently arisen around questions raised by the input phase of training Artificial Intelligence, and around the extent to which the creators of materials used in that input phase have any right, moral or legal, to object to that training. At the same time, whether the output of AI can be the subject matter of copyright or patent protection is also being tested in various jurisdictions, with clashing results. What occurs between input and output remains unresolved, as does the question of whether any legal regime can guarantee that legal, normative rules control how those processes are used, in the way that copyright and copyright licensing do for open source at present.

The presentation will discuss these issues in depth with a lens toward testing whether copyright — or any other intellectual property regime — really can be useful in keeping AI “open.”

Should we use open source licenses for ML/AI models?

Mary Hardy

The number of open source AI models is increasing exponentially, and the variety of open source licenses chosen for them is substantial. Can all OSI-approved licenses be applied uniformly to the various components of AI?

During the session, open source attorney Mary Hardy will explore present and future questions about open ML model licenses, including:

Why is AFL-3.0 so popular?

What about Apache-2.0? GPL-2.0/3.0?

What are the implications of licensing modifications under a different OS license from the one covering the checkpoint used as their basis?

Is a new license that explicitly considers ML model weights needed?

Covering your bases with IP Indemnity

Justin Dorfman, Tammy Zhu, Samantha Mandell

When working with LLM providers that don’t make their models public (Anthropic, OpenAI, etc.), it’s nearly impossible to know whether any copyleft code was used in training. So how do you bring AI developer tools to market without risking legal jeopardy? I asked Sourcegraph’s head of Legal, Tammy Zhu, to teach me how we protect ourselves from failing to comply with attribution requirements.

The Ideology of FOSS and AI: What “Open” means relating to platforms and black box systems

Mike Nolan

The initial conception of Free and Open Source Software was developed at a time when software was bundled into discrete packages run on machines owned and operated by a single individual. The early FOSS movement used licensing and copyright law to provide better autonomy and control over these software systems. Today, our software systems often operate as platforms, monopolizing access between networks and resources and profiting greatly from that monopoly.

In this talk, listeners will learn more about the ideological foundations of FOSS and the blind spots that have developed in our community as software has transitioned from individual discrete packages into deeply interconnected systems that gate access to critical resources for many. We will delve into what autonomy might mean in a world where the deployment of technology inherently affects so many people. Finally, we will examine the flaws in conventional open source approaches to providing autonomy and what other tools we may have at our disposal to ensure better community governance of this increasingly pervasive technology.

Community Leadership Summit

All Things Open

Celebrating 25 years of Open Source

Nick Vidal

This year marks the 25th Anniversary of Open Source, a huge milestone for the whole community to celebrate! In this session, we’ll travel back in time to understand our rich journey so far, and look toward the future to reimagine a new world where openness and collaboration prevail. Come along and celebrate this very special moment with us!

The open source software label was coined at a strategy session held on February 3rd, 1998 in Palo Alto, California. That same month, the Open Source Initiative (OSI) was founded as a general educational and advocacy organization to raise awareness of the superiority of an open development process and to promote its adoption. One of the first tasks undertaken by OSI was to draft the Open Source Definition (OSD). To this day, the OSD is considered a gold standard of open source licensing.

In this session, we’ll cover the rich and interconnected history of the Free Software and Open Source movements, and demonstrate how, against all odds, open source has come to “win” the world. But have we really won? Open source has always faced an extraordinary uphill battle, from misinformation and FUD (fear, uncertainty, and doubt) constantly spread by the most powerful corporations, to issues around sustainability and inclusion. We’ll navigate this rich history and dive into its future, exploring the many challenges and opportunities ahead, including open source’s key role in fostering collaboration and innovation in emerging areas such as Artificial Intelligence. We’ll share an interactive timeline during the presentation and throughout the year, inviting the audience and the community at large to share their open source stories and dreams with each other.

Open Source 201

Pamela Chestek

This essential session is an advanced primer on open source licenses: why one should care about them, which are most commonly used, and why. It also offers insights into the OSI license review process, who is involved in considering and approving new licenses against the Open Source Definition, and which licenses have been approved in the last five years. Topics include challenges, successes, best practices, operational policies, and resources.

Open Source and Public Policy

Deb Bryant, Stephen Jacobs, Patrick Masson, Ruth Suehle, Greg Wallace

New regulations in the software industry and adjacent areas such as AI, open science, open data, and open education are on the rise around the world. Cybersecurity, the societal impact of AI, data, and privacy are paramount issues for legislators globally. At the same time, the COVID-19 pandemic drove collaborative development to unprecedented levels and took Open Source software, open research, open content, and open data from the mainstream to the main stage, creating tension between public benefit and citizen safety and security as legislators struggle to balance open collaboration with protecting citizens.

Historically, the open source software community and the foundations supporting its work have not engaged in policy discussions. Moving forward, developing these important public policies thoughtfully, without harming our complex ecosystems, requires an understanding of how those ecosystems operate. To that end, ensuring that stakeholders who have historically lacked representation in those discussions have a voice becomes paramount.

Please join our open discussion with open policy stakeholders working constructively on current open policy topics. Our panelists will provide a view into how OSS foundations and other open domain allies are now rising to this new challenge, as well as seizing the opportunity to influence positive changes for the public’s benefit.

Topics: Public Policy, Open Science, Open Education, current legislation in the US and EU, US interest in OSS sustainability, intro to the Open Policy Alliance

Panel: Open Source Compliance & Security

Aeva Black, Brian Dussault, Madison Oliver, Alexander Beaver

The goal of this panel is to cover all things supply chain, from SBOMs in general to other technologies and approaches in particular, exploring four different perspectives: CISA (Cybersecurity and Infrastructure Security Agency) and the US government’s latest efforts to secure open source; GitHub, the largest open source developer platform; Stacklok, one of the most exciting startups in this space, led by the founders of Kubernetes and Sigstore; and Rochester Institute of Technology, one of the leading universities in the US.

Open Source AI definition (workshop)

Mer Joyce, Stefano Maffulli

The Open Source Initiative (OSI) continues the work of exploring complexities surrounding the development and use of artificial intelligence in this in-person session, part of the Deep Dive: AI – Defining Open Source AI 2023 series. The goal is to collaboratively establish a clear and defensible definition of “Open Source AI.” This will be an interactive session in which every participant has an active role. OSI will share an early draft of the Open Source AI Definition and, with the help of a facilitator, we will collect feedback from the participants. Be in the room where it happens!

EclipseCon

Open Source Is 25 Years Young

Carlo Piana

This year, the Open Source Initiative (OSI) celebrates 25 years of activity, mainly spent defining what open source is. We are certainly proud of what we have done in the past and believe that open source has delivered on many, if not all, of its promises.

But we are more interested in what lies ahead for the next 25 years. Paradigms shift with increasing speed: from mainframe, to client/server, to the Internet, to cloud, to AI, to what? We must make sure that openness and freedom remain as unhindered as possible. Simply using the same tools that made open source a resounding success will not be enough.

In this talk, Carlo Piana will share OSI’s views and plans to foster openness for the years to come.

Latinoware

The future of Artificial Intelligence: Sovereignty and Privacy with Open Source

Nick Vidal, Aline Deparis

Link to video recording (Portuguese)

The future of Artificial Intelligence is being defined right now, in an incredible battle between large companies and a community of entrepreneurs, developers, and researchers around the world. The world of AI is at an important crossroads. There are two paths forward: one where highly regulated proprietary code, models, and datasets prevail, or one where Open Source dominates. One path leads to control of AI by a few large corporations, where end users will have limited privacy and control, while the other will democratize AI, allowing anyone to study, adapt, contribute back, innovate, and build businesses on top of these foundations with full control and respect for privacy.

Celebrating 25 years of Open Source

Nick Vidal

This year marks the 25th Anniversary of Open Source, a huge milestone for the whole community to celebrate! In this session, we’ll travel back in time to understand our rich journey so far, and look toward the future to reimagine a new world where openness and collaboration prevail. Come along and celebrate this very special moment with us!

The open source software label was coined at a strategy session held on February 3rd, 1998 in Palo Alto, California. That same month, the Open Source Initiative (OSI) was founded as a general educational and advocacy organization to raise awareness of the superiority of an open development process and to promote its adoption. One of the first tasks undertaken by OSI was to draft the Open Source Definition (OSD). To this day, the OSD is considered a gold standard of open source licensing.

In this session, we’ll cover the rich and interconnected history of the Free Software and Open Source movements, and demonstrate how, against all odds, open source has come to “win” the world. But have we really won? Open source has always faced an extraordinary uphill battle, from misinformation and FUD (fear, uncertainty, and doubt) constantly spread by the most powerful corporations, to issues around sustainability and inclusion. We’ll navigate this rich history and dive into its future, exploring the many challenges and opportunities ahead, including open source’s key role in fostering collaboration and innovation in emerging areas such as Artificial Intelligence. We’ll share an interactive timeline during the presentation and throughout the year, inviting the audience and the community at large to share their open source stories and dreams with each other.

Linux Foundation Member Summit

Why Open Source AI Matters: The Community & Policy Perspective

Mary Hardy; Stefano Maffulli; Mike Linksvayer; Katharina Koerner

The number of publicly available AI models is growing exponentially, doubling every six months. With this explosion, communities and policymakers are asking questions about open source AI’s innovation benefits, safety risks, impact on sovereignty, and competitive economics against closed-source models. In this panel discussion, Mary and the panelists will talk about why a clear and consistent definition of open source AI matters for open source communities in the face of growing policy trends toward greater regulation of open communities.

Workshop: Define “Open AI”

Stefano Maffulli, Mer Joyce

As the legislators accelerate and the doomsayers chant, one thing is clear: It’s time to define what “open” means in the context of AI/ML before it’s defined for us.