Improving speech-to-text transcripts from Amazon Transcribe using custom vocabularies and Amazon Augmented AI

Businesses and organizations are increasingly using video and audio content for a variety of functions, such as advertising, customer service, media post-production, employee training, and education. As the volume of multimedia content generated by these activities proliferates, businesses are demanding high-quality transcripts of video and audio to organize files, enable text queries, and improve accessibility to audiences who are deaf or hard of hearing (466 million with disabling hearing loss worldwide) or language learners (1.5 billion English language learners worldwide).

Traditional speech-to-text transcription methods typically involve manual, time-consuming, and expensive human labor. Powered by machine learning (ML), Amazon Transcribe is a speech-to-text service that delivers high-quality, low-cost, and timely transcripts for business use cases and developer applications. When you transcribe domain-specific terminology in fields such as legal, financial, construction, higher education, or engineering, the custom vocabularies feature can improve transcription quality. To use this feature, you create a list of domain-specific terms and reference that vocabulary file when running transcription jobs.
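As a rough illustration of what such a list can look like, the following sketch writes terms into a tab-separated custom vocabulary table. It assumes the table layout described in the Amazon Transcribe documentation (Phrase, IPA, SoundsLike, and DisplayAs columns, with hyphens joining the words of a multi-word phrase). The helper name vocabulary_table is hypothetical, and you should check the current documentation for allowed characters before uploading a table.

```python
import csv
import io

# Assumed column layout for an Amazon Transcribe custom vocabulary table.
HEADER = ["Phrase", "IPA", "SoundsLike", "DisplayAs"]


def vocabulary_table(terms):
    """Hypothetical helper: build a tab-separated vocabulary table from terms.

    Multi-word terms are joined with hyphens in the Phrase column (an
    assumption based on the documented format), while DisplayAs keeps the
    original spacing so the transcript shows the term as written.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    writer.writerow(HEADER)
    for term in terms:
        phrase = "-".join(term.split())
        writer.writerow([phrase, "", "", term])  # IPA and SoundsLike left empty
    return buf.getvalue()
```

You would then upload the resulting file to S3 and reference it, for example through the VocabularyFileUri parameter of the Boto3 create_vocabulary call.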

This post shows you how to use Amazon Augmented AI (Amazon A2I) to help generate this list of domain-specific terms by sending low-confidence predictions from Amazon Transcribe to humans for review. We measure the word error rate (WER) of transcriptions and the number of correctly transcribed terms to demonstrate how to use custom vocabularies to improve transcription of domain-specific terms in Amazon Transcribe.

To complete this use case, use the notebook A2I-Video-Transcription-with-Amazon-Transcribe.ipynb on the Amazon A2I Sample Jupyter Notebook GitHub repo.

Example of a mis-transcribed annotation of the technical term “an EC2 instance”, which was transcribed as “Annecy two instance”.

Example of correctly transcribed annotation of the technical term “an EC2 instance” after using Amazon A2I to build an Amazon Transcribe custom vocabulary and re-transcribing the video.

This walkthrough focuses on transcribing video content. You can modify the code provided to use audio files (such as MP3 files) by doing the following:

- Upload audio files to your Amazon Simple Storage Service (Amazon S3) bucket and use them in place of the video files provided.

- Modify the button text and instructions in the worker task template provided in this walkthrough to tell workers to listen to and transcribe audio clips.
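A minimal sketch of the first change, assuming an S3 client created with boto3.client("s3"): the hypothetical upload_media helper uploads a local file and derives the MediaFormat value to pass to Amazon Transcribe from the file extension. The format set below is deliberately a small subset of what Amazon Transcribe accepts.

```python
import os

# Assumed subset of Amazon Transcribe MediaFormat values; extend as needed.
SUPPORTED_FORMATS = {"mp3", "mp4", "wav", "flac"}


def media_format(path):
    """Map a file extension (case-insensitive) to a MediaFormat value."""
    ext = os.path.splitext(path)[1].lstrip(".").lower()
    if ext not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported media format: {ext!r}")
    return ext


def upload_media(s3_client, path, bucket, prefix="media"):
    """Hypothetical helper: upload a file and return (S3 URI, media format)."""
    fmt = media_format(path)
    key = f"{prefix}/{os.path.basename(path)}"
    s3_client.upload_file(path, bucket, key)  # Filename, Bucket, Key
    return f"s3://{bucket}/{key}", fmt
```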

Solution overview

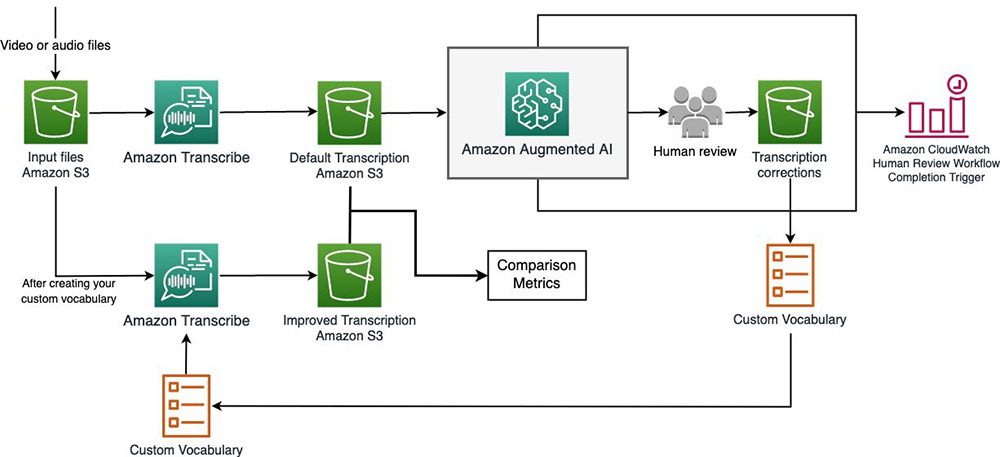

The following diagram presents the solution architecture.

We briefly outline the steps of the workflow as follows:

- Perform initial transcription. You transcribe a video about Amazon SageMaker, which contains multiple mentions of technical ML and AWS terms. When using Amazon Transcribe out of the box, you may find that some of these technical mentions are mis-transcribed. You generate a distribution of confidence scores to see the number of terms that Amazon Transcribe has difficulty transcribing.

- Create human review workflows with Amazon A2I. After you identify words with low-confidence scores, you can send them to a human to review and transcribe using Amazon A2I. You can make yourself a worker on your own private Amazon A2I work team and send the human review task to yourself so you can preview the worker UI and tools used to review video clips.

- Build custom vocabularies using A2I results. You can parse the human-transcribed results collected from Amazon A2I to extract domain-specific terms and use these terms to create a custom vocabulary table.

- Improve transcription using custom vocabulary. After you generate a custom vocabulary, you can call Amazon Transcribe again to get improved transcription results. You evaluate and compare the before and after performance using word error rate (WER), an industry-standard metric.
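Word error rate is the word-level edit distance between a reference transcript and the system output, divided by the number of words in the reference: WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions, and N is the reference length. The following sketch computes it with dynamic programming. It lowercases and splits on whitespace but doesn't strip punctuation, which is a simplifying assumption, and it isn't necessarily how the notebook computes WER.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edit distance between the first i reference words
    # and the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

For the example earlier in this post, comparing the reference “an EC2 instance” with “Annecy two instance” gives two substitutions over three reference words, a WER of about 0.67.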

Prerequisites

Before beginning, you need the following:

- An AWS account.

- An S3 bucket. Provide its name in BUCKET in the notebook. The bucket must be in the same Region as this Amazon SageMaker notebook instance.

- An AWS Identity and Access Management (IAM) execution role with required permissions. The notebook automatically uses the role you used to create your notebook instance (see the next item in this list). Add the following permissions to this IAM role:

  - Attach the managed policies AmazonAugmentedAIFullAccess and AmazonTranscribeFullAccess.

  - When you create your role, you specify Amazon S3 permissions. You can either allow that role to access all your resources in Amazon S3, or you can specify particular buckets. Make sure that your IAM role has access to the S3 bucket that you plan to use in this use case. This bucket must be in the same Region as your notebook instance.

- An active Amazon SageMaker notebook instance. For more information, see Create a Notebook Instance. Open your notebook instance and upload the notebook A2I-Video-Transcription-with-Amazon-Transcribe.ipynb.

- A private work team. A work team is a group of people that you select to review your documents. You can choose to create a work team from a workforce, which is made up of workers engaged through Amazon Mechanical Turk, vendor-managed workers, or your own private workers that you invite to work on your tasks. Whichever workforce type you choose, Amazon A2I takes care of sending tasks to workers. For this post, you create a work team using a private workforce and add yourself to the team to preview the Amazon A2I workflow. For instructions, see Create a Private Workforce. Record the ARN of this work team—you need it in the accompanying Jupyter notebook.

To understand this use case, the following are also recommended:

- Basic understanding of AWS services like Amazon Transcribe, its features such as custom vocabularies, and the core components and workflow Amazon A2I uses.

- Familiarity with the AWS SDK for Python (Boto3), which the notebook uses to interact with these services.

- Familiarity with Python and NumPy.

- Basic familiarity with Amazon S3.

Getting started

After you complete the prerequisites, you’re ready to deploy this solution entirely on an Amazon SageMaker Jupyter notebook instance. Follow along in the notebook for the complete code.

To start, follow the Setup code cells to set up AWS resources and dependencies and upload the provided sample MP4 video files to your S3 bucket. For this use case, we analyze videos from the official AWS playlist on introductory Amazon SageMaker videos, also available on YouTube. The notebook walks through transcribing and viewing Amazon A2I tasks for a video about Amazon SageMaker Jupyter Notebook instances. In Steps 3 and 4, we analyze results for a larger dataset of four videos. The following table outlines the videos that are used in the notebook, and how they are used.

| Video # | Video Title | File Name | Function |
|---|---|---|---|
| 1 | Fully-Managed Notebook Instances with Amazon SageMaker – a Deep Dive | Fully-Managed Notebook Instances with Amazon SageMaker – a Deep Dive.mp4 | Perform the initial transcription and view sample Amazon A2I jobs in Steps 1 and 2. Build a custom vocabulary in Step 3. |
| 2 | Built-in Machine Learning Algorithms with Amazon SageMaker – a Deep Dive | Built-in Machine Learning Algorithms with Amazon SageMaker – a Deep Dive.mp4 | Test transcription with the custom vocabulary in Step 4. |
| 3 | Bring Your Own Custom ML Models with Amazon SageMaker | Bring Your Own Custom ML Models with Amazon SageMaker.mp4 | Build a custom vocabulary in Step 3. |
| 4 | Train Your ML Models Accurately with Amazon SageMaker | Train Your ML Models Accurately with Amazon SageMaker.mp4 | Test transcription with the custom vocabulary in Step 4. |

In Step 4, we refer to videos 1 and 3 as the in-sample videos, meaning the videos used to build the custom vocabulary. Videos 2 and 4 are the out-of-sample videos: videos that our workflow hasn’t seen before, which we use to test how well our methodology generalizes to (identifies technical terms from) new videos.

Feel free to experiment with additional videos downloaded by the notebook, or your own content.

Step 1: Performing the initial transcription

Our first step is to look at the performance of Amazon Transcribe without custom vocabulary or other modifications and establish a baseline of accuracy metrics.

Use the transcribe function to start a transcription job. You use the vocab_name parameter later to specify a custom vocabulary; for now, it defaults to None. See the following code:
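The notebook defines its own transcribe function. The following is only a minimal sketch of what such a function might look like, assuming a client created with boto3.client("transcribe") and an input video already in S3; apart from vocab_name, the parameter names are illustrative. When vocab_name is set, it's passed to start_transcription_job through the VocabularyName field of Settings.

```python
def transcribe(client, job_name, media_uri, bucket, media_format="mp4",
               vocab_name=None, language_code="en-US"):
    """Start an Amazon Transcribe job; set vocab_name to apply a custom vocabulary."""
    params = {
        "TranscriptionJobName": job_name,
        "LanguageCode": language_code,
        "MediaFormat": media_format,
        "Media": {"MediaFileUri": media_uri},
        # With only a bucket given, the output is written as <job_name>.json.
        "OutputBucketName": bucket,
    }
    if vocab_name is not None:
        params["Settings"] = {"VocabularyName": vocab_name}
    return client.start_transcription_job(**params)
```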

Wait until the transcription job status is COMPLETED. A transcription job for a 10–15-minute video typically takes up to 5 minutes.
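One way to wait is to poll get_transcription_job until the job reaches a terminal status. The following is a minimal sketch assuming a Boto3 Transcribe client; wait_for_job is a hypothetical name, and the sleep argument exists only so the loop can be exercised without real delays.

```python
import time


def wait_for_job(client, job_name, poll_seconds=30, sleep=time.sleep):
    """Poll a transcription job until it is COMPLETED or FAILED; return the status."""
    while True:
        job = client.get_transcription_job(TranscriptionJobName=job_name)
        status = job["TranscriptionJob"]["TranscriptionJobStatus"]
        if status in ("COMPLETED", "FAILED"):
            return status
        sleep(poll_seconds)  # still QUEUED or IN_PROGRESS
```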

When the transcription job is complete, the results are stored in an output JSON file called YOUR_JOB_NAME.json in your specified BUCKET. Use the get_transcript_text_and_timestamps function to parse this output and return several useful data structures. After you call it, all_sentences_and_times contains, for each transcribed video, a list of objects holding sentences with their start time, end time, and confidence score. To save those to a text file for later use, enter the following code:
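For readers without the notebook at hand, here is a rough sketch of that kind of parsing. It relies on the Amazon Transcribe output layout, where results.items holds pronunciation items (with start_time, end_time, and per-word confidence stored as strings) and punctuation items. The functions sentences_from_transcript and save_sentences are hypothetical stand-ins, not the notebook's get_transcript_text_and_timestamps, and the sentence-splitting and minimum-confidence rules are assumptions.

```python
import json


def sentences_from_transcript(transcript_json):
    """Group Amazon Transcribe output items into sentences.

    Assumptions (not necessarily the notebook's logic): a sentence ends at a
    ".", "?" or "!" punctuation item, and a sentence's confidence is the
    lowest confidence of any word in it.
    """
    items = json.loads(transcript_json)["results"]["items"]
    sentences, words = [], []
    for item in items:
        alt = item["alternatives"][0]
        if item["type"] == "pronunciation":
            # Word items carry timestamps and confidence as strings.
            words.append((alt["content"], float(item["start_time"]),
                          float(item["end_time"]), float(alt["confidence"])))
        elif alt["content"] in {".", "?", "!"} and words:
            sentences.append(_make_sentence(words))
            words = []
    if words:  # trailing words with no closing punctuation
        sentences.append(_make_sentence(words))
    return sentences


def _make_sentence(words):
    return {
        "sentence": " ".join(w[0] for w in words),
        "start_time": words[0][1],
        "end_time": words[-1][2],
        "confidence": min(w[3] for w in words),
        "words": words,
    }


def save_sentences(sentences, path):
    """Write one tab-separated line per sentence: start, end, confidence, text."""
    with open(path, "w", encoding="utf-8") as f:
        for s in sentences:
            f.write(f"{s['start_time']:.2f}\t{s['end_time']:.2f}\t"
                    f"{s['confidence']:.3f}\t{s['sentence']}\n")
```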

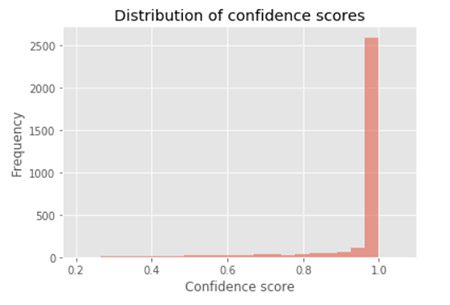

To look at the distribution of confidence scores, enter the following code:

The following graph illustrates the distribution of confidence scores.
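A minimal NumPy sketch of the binning behind a graph like this, assuming you've already collected the word-level confidence scores into a flat list. The name confidence_histogram is hypothetical; you could pass the counts to a plotting library or simply print them.

```python
import numpy as np


def confidence_histogram(scores, bins=10):
    """Count confidence scores in equal-width bins over [0, 1].

    NumPy includes the right edge in the last bin, so a score of 1.0
    lands in the final bin.
    """
    counts, edges = np.histogram(np.asarray(scores, dtype=float),
                                 bins=bins, range=(0.0, 1.0))
    return counts, edges
```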

Next, we filter out the high-confidence scores to take a closer look at the lower ones.

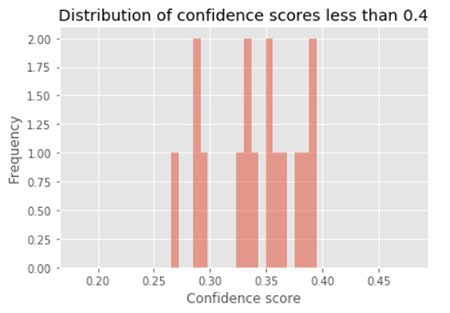

You can experiment with different thresholds to see how many words fall below each one. For this use case, we use a threshold of 0.4, below which 16 words fall. Any sequence of words containing a term below this threshold is sent for human review.
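The selection rule above can be sketched as follows, assuming each sentence is a dict with text, start and end times in seconds, and a list of (word, confidence) pairs. That structure and the segments_for_review name are hypothetical; each returned segment corresponds to one clip a reviewer would watch.

```python
def segments_for_review(sentences, threshold=0.4):
    """Pick sentences containing at least one word scored below `threshold`.

    Each sentence is a dict with "text", "start_time", "end_time" (seconds)
    and "words" as (word, confidence) pairs (hypothetical structure).
    """
    segments = []
    for s in sentences:
        low = [word for word, conf in s["words"] if conf < threshold]
        if low:
            segments.append({
                "start_time": s["start_time"],
                "end_time": s["end_time"],
                "text": s["text"],
                "low_confidence_words": low,  # shown for context only
            })
    return segments
```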

As you experiment with different thresholds and observe the number of tasks each one creates in the Amazon A2I workflow, you can see a tradeoff between the number of mis-transcriptions you want to catch and the amount of time and resources you’re willing to devote to corrections. In other words, a higher threshold captures a greater percentage of mis-transcriptions, but it also increases the number of false positives: low-confidence transcriptions that don’t actually contain any mis-transcribed technical terms. The good news is that you can use this workflow to quickly experiment with as many threshold values as you like before sending tasks to your workforce for human review. See the following code:

You get the following output:

The following graph shows the distribution of confidence scores less than 0.4.
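To make the tradeoff concrete, a small sweep can report, for each candidate threshold, how many words fall below it and how many review tasks that would create if each affected sentence becomes one task. This is a hypothetical sketch that takes one list of word confidences per sentence.

```python
def sweep_thresholds(sentences, thresholds):
    """Map each threshold to (low-confidence word count, review task count).

    sentences: list of lists of word confidence scores, one inner list per
    sentence (hypothetical structure). A sentence becomes one review task if
    any of its words scores below the threshold.
    """
    results = {}
    for t in thresholds:
        low_words = sum(1 for sent in sentences for c in sent if c < t)
        tasks = sum(1 for sent in sentences if any(c < t for c in sent))
        results[t] = (low_words, tasks)
    return results
```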

As you experiment with different thresholds, you can see how many words are classified as low confidence. As we see later, terms specific to highly technical domains are generally more difficult to transcribe automatically, so it’s important that we capture these terms and incorporate them into our custom vocabulary.

Step 2: Creating human review workflows with Amazon A2I

Our next step is to create a human review workflow (or flow definition) that sends low-confidence transcriptions to human reviewers and retrieves the corrected transcriptions they provide. The accompanying Jupyter notebook contains instructions for the following steps:

- Create a workforce of human workers to review predictions. For this use case, creating a private workforce enables you to send Amazon A2I human review tasks to yourself so you can preview the worker UI.

- Create a work task template that is displayed to workers for every task. The template is rendered with input data you provide, instructions to workers, and interactive tools to help workers complete your tasks.

- Create a human review workflow, also called a flow definition. You use the flow definition to configure details about your human workforce and the human tasks they are assigned.

- Create a human loop to start the human review workflow, sending data for human review as needed. In this example, you use a custom task type and start human loop tasks using the Amazon A2I Runtime API. Each time StartHumanLoop is called, a task is sent to human reviewers.
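A minimal sketch of one StartHumanLoop call through a client created with boto3.client("sagemaker-a2i-runtime"). Only filePath appears in the worker template described later in this post; the startTime, endTime, and originalText keys and the start_review_task helper are hypothetical and must match whatever your template reads from task.input. Human loop names must be unique, so the sketch appends a random suffix.

```python
import json
import uuid


def start_review_task(a2i_client, flow_definition_arn, video_s3_uri,
                      start_time, end_time, original_text):
    """Send one video segment to human review; return the human loop name."""
    # Lowercase letters, digits, and hyphens keep the name valid for A2I.
    human_loop_name = f"transcribe-review-{uuid.uuid4().hex[:12]}"
    input_content = {
        "filePath": video_s3_uri,         # read by the template via task.input
        "startTime": start_time,          # hypothetical key: segment start (s)
        "endTime": end_time,              # hypothetical key: segment end (s)
        "originalText": original_text,    # hypothetical key: machine transcript
    }
    a2i_client.start_human_loop(
        HumanLoopName=human_loop_name,
        FlowDefinitionArn=flow_definition_arn,
        HumanLoopInput={"InputContent": json.dumps(input_content)},
    )
    return human_loop_name
```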

In the notebook, you create a human review workflow using the AWS Python SDK (Boto3) function create_flow_definition. You can also create human review workflows on the Amazon SageMaker console.
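A minimal sketch of that create_flow_definition call, assuming a client from boto3.client("sagemaker"), the ARN of your private work team, the ARN of a human task UI, and an IAM role that Amazon A2I can assume. The helper name, task title, and description are illustrative.

```python
def create_review_flow(sagemaker_client, name, workteam_arn, task_ui_arn,
                       role_arn, output_s3_path):
    """Create an Amazon A2I flow definition and return its ARN."""
    response = sagemaker_client.create_flow_definition(
        FlowDefinitionName=name,
        RoleArn=role_arn,
        HumanLoopConfig={
            "WorkteamArn": workteam_arn,    # your private work team
            "HumanTaskUiArn": task_ui_arn,  # the worker task template
            "TaskTitle": "Transcribe a video clip",
            "TaskDescription": "Watch the clip and type what the speaker says.",
            "TaskCount": 1,                 # one reviewer per clip
        },
        OutputConfig={"S3OutputPath": output_s3_path},  # where results land
    )
    return response["FlowDefinitionArn"]
```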

Setting up the worker task UI

Amazon A2I uses Liquid, an open-source template language that you can use to insert data dynamically into HTML files.

In this use case, we want each task to enable a human reviewer to watch a section of the video where low-confidence words appear and transcribe the speech they hear. The HTML template consists of three main parts:

- A video player with a replay button that only allows the reviewer to play the specific subsection

- A form for the reviewer to type and submit what they hear

- Logic written in JavaScript to give the replay button its intended functionality

The following code is the template you use:

The {{ task.input.filePath | grant_read_access }} field allows you to grant access to and display a video to workers using a path to the video’s location in an S3 bucket. To prevent the reviewer from navigating to irrelevant sections of the video, the controls attribute is omitted from the video tag, and a single replay button determines which section can be replayed.
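A trimmed-down sketch of a template with those three parts, wrapped in Python so it can be registered with the Boto3 create_human_task_ui call. The startTime and endTime input keys, the element IDs, and the create_task_ui helper are hypothetical; crowd-form and crowd-text-area come from the SageMaker crowd HTML elements library loaded by the first script tag.

```python
# Hypothetical worker task template. startTime and endTime are assumed keys
# in the human loop input; filePath and grant_read_access come from the post.
TEMPLATE = """
<script src="https://assets.crowd.aws/crowd-html-elements.js"></script>

<!-- No playback bar: the reviewer can only replay the assigned segment. -->
<video id="clip" width="640" src="{{ task.input.filePath | grant_read_access }}"></video>
<p><button type="button" id="replay">Replay segment</button></p>

<crowd-form>
  <p>Type exactly what the speaker says in this segment.</p>
  <crowd-text-area name="transcription" rows="3"></crowd-text-area>
</crowd-form>

<script>
  const video = document.getElementById("clip");
  const segmentStart = {{ task.input.startTime }};
  const segmentEnd = {{ task.input.endTime }};

  // Jump to the start of the segment and play it.
  document.getElementById("replay").addEventListener("click", () => {
    video.currentTime = segmentStart;
    video.play();
  });

  // Pause as soon as playback passes the end of the segment.
  video.addEventListener("timeupdate", () => {
    if (video.currentTime >= segmentEnd) {
      video.pause();
    }
  });
</script>
"""


def create_task_ui(sagemaker_client, name):
    """Register the template as an Amazon A2I human task UI and return its ARN."""
    response = sagemaker_client.create_human_task_ui(
        HumanTaskUiName=name,
        UiTemplate={"Content": TEMPLATE},
    )
    return response["HumanTaskUiArn"]
```

The crowd-text-area value is submitted under its name attribute (transcription here), which is the field you would read from the Amazon A2I output when building the custom vocabulary.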

Under the video player, the

At the end of the HTML snippet, the section enclosed by the
