Building machine learning workflows with Amazon SageMaker Processing jobs and AWS Step Functions

Machine learning (ML) workflows orchestrate and automate sequences of ML tasks, including data collection, training, testing, and evaluating an ML model, and deploying the model for inference. AWS Step Functions automates and orchestrates Amazon SageMaker-related tasks in an end-to-end workflow. The AWS Step Functions Data Science Software Development Kit (SDK) is an open-source library that allows you to easily create workflows that preprocess data and then train and publish ML models using Amazon SageMaker and Step Functions. You can create ML workflows in Python that orchestrate AWS infrastructure at scale, without having to provision and integrate AWS services separately.

Amazon SageMaker is a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy ML models at scale.

At re:Invent 2019, we announced the launch of Amazon SageMaker Processing, a new capability of Amazon SageMaker that lets you easily run your preprocessing, postprocessing, and model evaluation workloads on fully managed infrastructure.

Today, we’re happy to announce the availability of the Step Functions service integration with Amazon SageMaker Processing. This integration allows data scientists to easily integrate Amazon SageMaker Processing into their ML workflows using Step Functions and the Step Functions Data Science SDK.

Benefits of the AWS Step Functions Data Science SDK

The AWS Step Functions Data Science SDK allows data scientists to easily construct ML workflows without dealing with DevOps tasks like provisioning hardware or deploying software. The Step Functions Data Science SDK has built-in integrations with Amazon SageMaker to orchestrate ML workflows, including training, hyperparameter tuning, or deploying a model. The SDK allows you to develop and test your ML workflows locally, and provides consistency when deploying workflows to testing or production environments on AWS.

It also includes the following benefits:

- Ease of use – You can build and orchestrate ML workflows using Python. The SDK also allows you to create reusable workflow templates that other team members can use. Step Functions allows you to easily introduce error handling, retry logic, parallel steps, and branching into the workflows. You can also build complex ML workflows using other AWS services, including native integrations with Amazon DynamoDB, Amazon SNS, Amazon SQS, Amazon EMR, AWS Lambda, AWS Glue, AWS Batch, and Amazon Elastic Container Service (Amazon ECS). For more information, see AWS Step Functions Data Science SDK.

- Agility – Step Functions allows you to build serverless workflows without needing to set up any underlying infrastructure. You can quickly build new workflows in a matter of minutes. In addition, Step Functions scales out effortlessly to match your use case.

- Cost – With Step Functions, you pay for each transition from one state to the next. Billing is metered by state transition, and you don’t pay for idle time. This keeps Step Functions cost-effective as you scale from a few runs to tens of millions. Furthermore, the native service integrations between Step Functions and other AWS services, including Amazon SageMaker, let you reduce the number of state transitions even further. We go into more detail about one of these native integrations later in this post.

The Amazon SageMaker ProcessingStep is now available as part of the AWS Step Functions Data Science SDK. This service integration lets you remove extra steps, including AWS Lambda steps, for creating, polling, and checking the status of Amazon SageMaker Processing jobs. You can create processing jobs by using the newly available ProcessingStep.

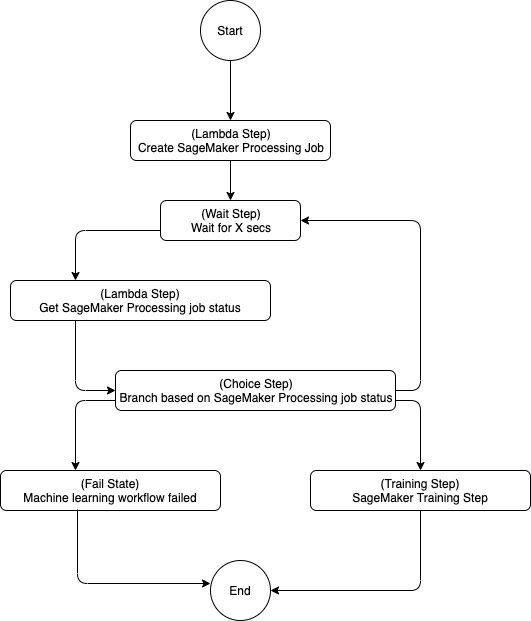

Prior to this launch, integrating Amazon SageMaker Processing into a Step Functions workflow required authoring AWS Lambda functions to invoke the Amazon SageMaker Processing APIs. Each function used a low-level AWS SDK to construct the request parameters, call the Amazon SageMaker Processing job APIs (create_processing_job(), describe_processing_job(), list_processing_jobs(), or stop_processing_job()), and read the returned response objects. In addition, the ML engineer had to embed logic that busy-polled the processing job’s status at regular intervals through additional workflow steps: a Task state to create the processing job, plus Wait, Choice, and Task states to check the job status. The following diagram illustrates the Step Functions workflow prior to this launch.

AWS Step Functions Workflow prior to the launch of “ProcessingStep”

This approach requires busy polling of the processing job’s status and adds complexity in the form of extra steps in the overall workflow. The polling mechanism also incurs additional cost, because every status check adds state transitions.

New Amazon SageMaker Processing step

With the new ProcessingStep, you can now get the processing job’s response synchronously without writing any additional steps in the workflow.

The new ProcessingStep creates a Task state to run the processing job. You can walk through an example Step Functions and Amazon SageMaker Jupyter notebook to see how it works.

In the notebook, we create an SKLearn Amazon SageMaker Processor object. See the following code:
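A minimal sketch of such a processor, assuming the SageMaker Python SDK v2 and an IAM execution role ARN that you supply. The helper name make_sklearn_processor is hypothetical, and the framework version, instance type, and runtime limit are illustrative assumptions rather than values taken from the notebook:

```python
def make_sklearn_processor(role_arn, instance_type="ml.m5.xlarge"):
    """Return an SKLearnProcessor that runs a scikit-learn container on SageMaker Processing.

    Assumptions (not from the original notebook): SageMaker Python SDK v2,
    scikit-learn framework version "0.20.0" (choose one supported in your
    Region), a single instance, and a 20-minute runtime cap.
    """
    # Imported here so this helper only requires the SDK when it is called.
    from sagemaker.sklearn.processing import SKLearnProcessor

    return SKLearnProcessor(
        framework_version="0.20.0",
        role=role_arn,
        instance_type=instance_type,
        instance_count=1,
        max_runtime_in_seconds=1200,
    )
```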

We then create a ProcessingStep using this processor:
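A hedged sketch of that step, assuming the Step Functions Data Science SDK and SageMaker Python SDK are installed. The helper name, the S3 URIs, the input/output names, and the --train-test-split-ratio flag that preprocessor.py is assumed to parse are all hypothetical placeholders. In a real workflow, job_name is typically an ExecutionInput placeholder, because processing job names must be unique for each execution:

```python
def make_processing_step(processor, job_name, input_s3_uri, code_s3_uri,
                         output_s3_uri, split_ratio=0.2):
    """Build a Step Functions Task state that runs a SageMaker Processing job.

    All S3 URIs, input/output names, and the script's argument name are
    hypothetical; the container paths sit under /opt/ml/processing/, where
    SageMaker Processing expects local input and output locations.
    """
    from sagemaker.processing import ProcessingInput, ProcessingOutput
    from stepfunctions.steps import ProcessingStep

    inputs = [
        # Raw dataset, copied into the container before the script starts.
        ProcessingInput(source=input_s3_uri,
                        destination="/opt/ml/processing/input",
                        input_name="input-1"),
        # The preprocessing script itself.
        ProcessingInput(source=code_s3_uri,
                        destination="/opt/ml/processing/code",
                        input_name="code"),
    ]
    outputs = [
        # Whatever the script writes here is uploaded to S3 when the job ends.
        ProcessingOutput(source="/opt/ml/processing/train",
                         destination=f"{output_s3_uri}/train",
                         output_name="train_data"),
        ProcessingOutput(source="/opt/ml/processing/test",
                         destination=f"{output_s3_uri}/test",
                         output_name="test_data"),
    ]
    return ProcessingStep(
        "SageMaker pre-processing step",
        processor=processor,
        job_name=job_name,
        inputs=inputs,
        outputs=outputs,
        # Train/test split ratio forwarded to the script (e.g. 0.2).
        container_arguments=["--train-test-split-ratio", str(split_ratio)],
        container_entrypoint=["python3", "/opt/ml/processing/code/preprocessor.py"],
    )
```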

This processing step uses the preprocessing script preprocessor.py with the argument defining the train and test dataset split ratio (for example, 0.2). For more information about input arguments, see ProcessingStep.

The new ProcessingStep launches a processing job and, by default, waits synchronously for it to complete. This lets you get the status and output of the processing job in a single step, instead of writing a new Lambda function and additional steps to poll for the job’s status. The wait_for_completion parameter of the ProcessingStep defaults to True, which indicates that the Task state should wait for the processing job to complete before proceeding to the next step. When the ProcessingStep finishes, Step Functions makes the response of DescribeProcessingJob available as the output of the step. Internally, Step Functions listens to Amazon SageMaker events through Amazon EventBridge to get notifications of the processing job’s status changes. This removes the heavy lifting of polling the job’s status that end users would otherwise have to implement.
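In the generated Amazon States Language definition, wait_for_completion selects between the two SageMaker integration patterns: with it, the Task state’s resource ARN ends in `.sync`, which tells Step Functions to wait for the job to finish. A minimal sketch of such a Task state built as a plain dictionary; the helper name is hypothetical, and the Parameters payload is abbreviated to a hypothetical job name (a real CreateProcessingJob request also needs fields such as RoleArn, AppSpecification, and ProcessingResources):

```python
import json


def processing_task_state(parameters, next_state, wait_for_completion=True):
    """Return an Amazon States Language Task state that calls CreateProcessingJob.

    With ".sync", Step Functions waits until the job reaches a terminal state;
    without it, the state completes as soon as the job has been created.
    """
    resource = "arn:aws:states:::sagemaker:createProcessingJob"
    if wait_for_completion:
        resource += ".sync"
    return {
        "Type": "Task",
        "Resource": resource,
        "Parameters": parameters,
        "Next": next_state,
    }


if __name__ == "__main__":
    # Abbreviated, hypothetical request payload for illustration only.
    state = processing_task_state({"ProcessingJobName": "example-preprocessing-job"}, "Train")
    print(json.dumps(state, indent=2))
```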

If the next state in your Step Functions workflow needs to act on values from the output of the ProcessingStep, you can use the existing Choice Rules of the Choice state. See the following code:
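A hedged sketch using the Step Functions Data Science SDK. The helper name and state names are hypothetical, and next_on_success stands in for whatever step follows (for example, a TrainingStep). Because the Choice state receives the DescribeProcessingJob response as its input, the JSONPath `$.ProcessingJobStatus` refers to the job status:

```python
def add_status_check(processing_step, next_on_success,
                     fail_state_id="Processing job failed"):
    """Attach a Choice state after processing_step that branches on the job status."""
    from stepfunctions.steps import Choice, ChoiceRule, Fail

    status_choice = Choice("Is processing job successful")
    # Continue to next_on_success only when the job finished as "Completed".
    status_choice.add_choice(
        rule=ChoiceRule.StringEquals(variable="$.ProcessingJobStatus", value="Completed"),
        next_step=next_on_success,
    )
    # Any other status falls through to a Fail state.
    status_choice.default_choice(next_step=Fail(fail_state_id))
    # Make the Choice state the transition target of the processing step.
    processing_step.next(status_choice)
    return status_choice
```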

The preceding code retrieves the processing job’s status, checks if it’s in the Completed status, and sets the next state after ProcessingStep finishes.

You can further add error-handling logic in the ProcessingStep by creating a Step Functions Catch block and adding that to the list of catchers for the state by using add_catch(). See the following code:
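A minimal sketch with the Step Functions Data Science SDK, assuming any task failure of the processing job should end the workflow. The helper name, the Fail state name, and the cause string are hypothetical:

```python
def add_failure_catch(processing_step, cause="SageMakerProcessingJobFailed"):
    """Route task failures of processing_step to a Fail state and return that state."""
    from stepfunctions.steps import Catch, Fail

    failed_state = Fail("ML workflow failed", cause=cause)
    # States.TaskFailed matches errors raised by the Task state itself, for
    # example when the synchronously awaited processing job fails.
    processing_step.add_catch(
        Catch(error_equals=["States.TaskFailed"], next_step=failed_state)
    )
    return failed_state
```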

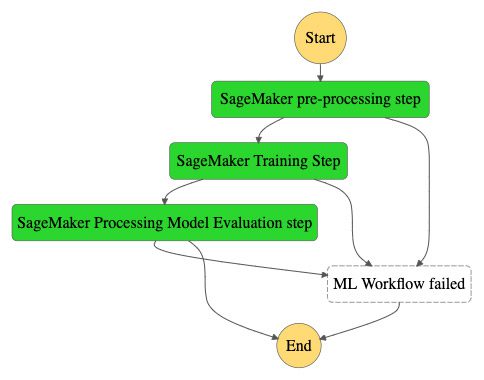

The following ML workflow shows the use of ProcessingStep to preprocess a dataset prior to running a TrainingStep.

AWS Step Functions Workflow using the new “ProcessingStep”
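A hedged sketch of wiring such a workflow with the Step Functions Data Science SDK, assuming processing_step and training_step were built earlier (for example, a ProcessingStep and a TrainingStep) and that workflow_role_arn is an IAM role Step Functions can assume. The helper name and default workflow name are hypothetical:

```python
def build_preprocess_then_train_workflow(processing_step, training_step,
                                         workflow_role_arn,
                                         name="preprocess-then-train"):
    """Chain preprocessing before training and create the state machine in AWS."""
    from stepfunctions.steps import Chain
    from stepfunctions.workflow import Workflow

    # Chain sets each step's Next transition in order.
    definition = Chain([processing_step, training_step])
    workflow = Workflow(name=name, definition=definition, role=workflow_role_arn)
    # Creates the state machine; this makes an AWS API call.
    workflow.create()
    return workflow
```

You would then start a run with workflow.execute(inputs={...}), passing values such as unique job names that the steps expect from the execution input.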

You can also create more complex ML workflows that launch multiple tasks in parallel. For example, prior to training, your workflow may run multiple independent tasks, such as anomaly detection or feature selection. You can implement these tasks as multiple processing steps in the branches of a Parallel state; when the number of tasks is known only at run time, the Map state provides dynamic parallelism.
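A minimal sketch of the Parallel-state approach with the Step Functions Data Science SDK. The helper name and state name are hypothetical, and each ProcessingStep passed in is assumed to have its own unique state ID and job name, such as separate anomaly-detection and feature-selection steps:

```python
def run_in_parallel(*processing_steps, state_id="Parallel preprocessing"):
    """Wrap independent ProcessingSteps in one Parallel state, one branch each."""
    from stepfunctions.steps import Parallel

    parallel = Parallel(state_id)
    for step in processing_steps:
        # Each branch runs as its own sub-workflow; the Parallel state finishes
        # when all branches finish and outputs an array of branch results.
        parallel.add_branch(step)
    return parallel
```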

Amazon SageMaker Processing jobs are also supported in EventBridge. The EventBridge integration allows you to monitor status changes of your processing jobs and automatically trigger actions. To do so, configure an EventBridge rule that matches Amazon SageMaker Processing events. When the pattern matches, the rule routes the event to its target. You can configure this on the Amazon CloudWatch console. Complete the following steps:

- On the CloudWatch console, under Events, choose Rules.

- Choose Create rule.

- For Service Name, choose SageMaker.

- For Event Type, choose SageMaker Processing Job State Change.

- In the Targets section, choose Add target to configure your event handler.
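The console steps above can also be scripted. A hedged sketch with boto3, where the helper names, rule name, and target ARN are hypothetical. The optional status filter assumes the event detail carries a ProcessingJobStatus field that mirrors the DescribeProcessingJob response. If the target is a Lambda function, it also needs a resource-based permission that allows EventBridge to invoke it:

```python
import json


def processing_job_event_pattern(statuses=None):
    """Event pattern for SageMaker Processing job state-change events."""
    pattern = {
        "source": ["aws.sagemaker"],
        "detail-type": ["SageMaker Processing Job State Change"],
    }
    if statuses:
        # Optional filter on the job status (assumed field name in the event detail).
        pattern["detail"] = {"ProcessingJobStatus": list(statuses)}
    return pattern


def create_processing_job_rule(rule_name, target_arn, statuses=None):
    """Create (or update) the rule and attach a single target to it."""
    import boto3

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(processing_job_event_pattern(statuses)),
        State="ENABLED",
    )
    events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "processing-job-handler", "Arn": target_arn}],
    )
```

For example, processing_job_event_pattern(["Failed", "Stopped"]) narrows the rule to jobs that did not complete.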

Summary

This post provided an overview of the new ProcessingStep, part of the Step Functions Data Science SDK, for creating Amazon SageMaker Processing jobs. It showed how this native integration lets you get rid of busy polling of the processing job status, avoid extra steps in your workflow, and add retry and error-handling logic to the new Processing step. The post also provided an example notebook that uses the new step and an overview of how to use EventBridge rules to handle processing job state-change events.

For more information and example notebooks related to the SDK, see Introducing the AWS Step Functions Data Science SDK for Amazon SageMaker.

About the authors

Dhawalkumar Patel is a Startup Senior Solutions Architect at AWS. He has worked with organizations ranging from large enterprises to startups on problems related to distributed computing and artificial intelligence. He is currently focused on machine learning and serverless technologies.

Shunjia Ding is a Software Development Engineer working with AWS Step Functions Services development at AWS.
