Adding custom data sources to Amazon Kendra

Amazon Kendra is a highly accurate and easy-to-use intelligent search service powered by machine learning (ML). Amazon Kendra provides native connectors for popular data sources like Amazon Simple Storage Service (Amazon S3), SharePoint, ServiceNow, OneDrive, Salesforce, and Confluence so you can easily add data from different content repositories and file systems into a centralized location. This enables you to use Kendra’s natural language search capabilities to quickly find the most relevant answers to your questions.

However, many organizations store relevant information in the form of unstructured data on company intranets or within file systems on corporate networks that are inaccessible to Amazon Kendra.

You can now use the custom data source feature in Amazon Kendra to upload content to your Amazon Kendra index from a wider range of data sources. When you select this connector type, the custom data source feature gives you complete control over how documents are selected and indexed, and provides visibility and metrics on which content associated with a data source has been added, modified, or deleted.

In this post, we describe how to use a simple web connector to scrape content from unauthenticated webpages, capture attributes, and ingest this content into an Amazon Kendra index using the custom data source feature. This enables you to ingest your content directly to the index using the BatchPutDocument API, and allows you to keep track of the ingestion through Amazon CloudWatch log streams and through the metrics from the data sync operation.

Setting up a web connector

To use the custom data source connector in Amazon Kendra, you need to create an application that scrapes the documents in your repository and builds a list of documents. You ingest those documents into your Amazon Kendra index by using the BatchPutDocument operation. To delete documents, you provide a list of document IDs to the BatchDeleteDocument operation. To modify a document (for example, because it was updated), ingest it again with the same document ID; the document with the matching ID is replaced in your index.

For this post, we scrape HTML content from AWS FAQs for 11 AI/ML services:

- Amazon CodeGuru

- Amazon Comprehend

- Amazon Forecast

- Amazon Kendra

- Amazon Lex

- Amazon Personalize

- Amazon Polly

- Amazon Rekognition

- Amazon SageMaker

- Amazon Transcribe

- Amazon Translate

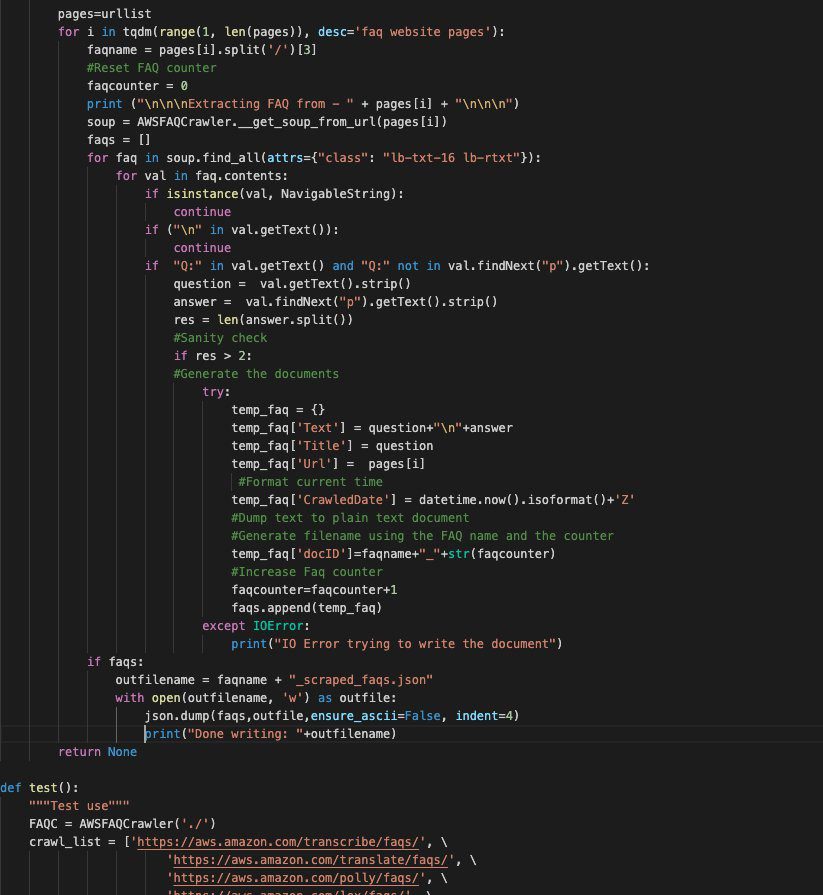

We use the BeautifulSoup and requests libraries to scrape the content from the AWS FAQ website. The script first gets the content of an AWS FAQ page through the get_soup_from_url function. Based on the presence of certain CSS classes, it locates question-and-answer pairs, and for each URL it creates a text file to be ingested into Amazon Kendra later.
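The scraping flow described above can be sketched roughly as follows. This is an illustrative outline, not the script used for this post: only the `get_soup_from_url` name comes from the text, while the CSS selectors, `extract_qa_pairs`, and `save_pairs` are hypothetical placeholders that you would adapt after inspecting the pages you scrape.

```python
from pathlib import Path

# Hypothetical CSS selectors: inspect the target page to find the real ones.
QUESTION_SELECTOR = ".faq-question"
ANSWER_SELECTOR = ".faq-answer"


def get_soup_from_url(url):
    """Download a page and parse it (needs the requests and beautifulsoup4 packages)."""
    # Imported here so the helpers below can be used without the third-party packages.
    import requests
    from bs4 import BeautifulSoup

    response = requests.get(url, timeout=30)
    response.raise_for_status()
    return BeautifulSoup(response.text, "html.parser")


def extract_qa_pairs(soup, question_selector=QUESTION_SELECTOR,
                     answer_selector=ANSWER_SELECTOR):
    """Pair each question element with the answer element at the same position."""
    questions = [q.get_text(" ", strip=True) for q in soup.select(question_selector)]
    answers = [a.get_text(" ", strip=True) for a in soup.select(answer_selector)]
    return list(zip(questions, answers))


def save_pairs(pairs, path):
    """Write all pairs to one text file, separated by blank lines."""
    text = "\n\n".join(f"{question}\n{answer}" for question, answer in pairs)
    Path(path).write_text(text, encoding="utf-8")
    return text
```

For each FAQ URL, you would call `get_soup_from_url`, pass the result to `extract_qa_pairs`, and write one file per URL with `save_pairs`.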

The solution in this post is for demonstration purposes only. We recommend running similar scripts only on your own websites, after consulting with the team that manages them. For any other website, be sure to follow its terms of service.

The following screenshot shows a sample of the script.

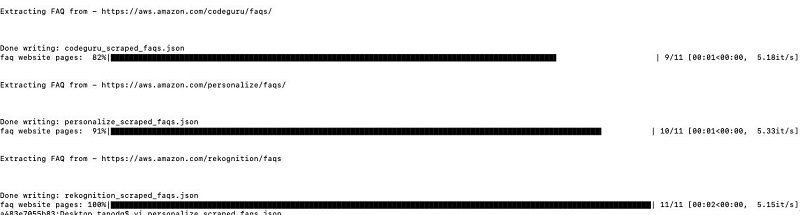

The following screenshot shows the results of a sample run.

The ScrapedFAQS.zip file contains the scraped documents.

Creating a custom data source

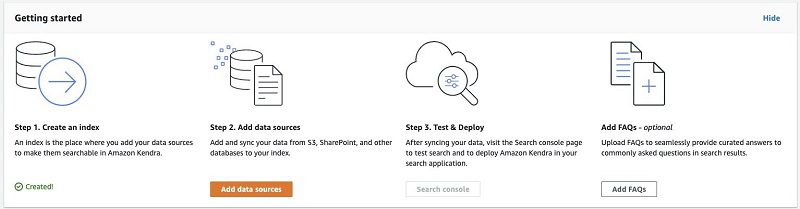

To ingest documents through the custom data source, you first need to create a data source. We assume that you already have an Amazon Kendra index in your account. If you don’t, you can create a new index.

Amazon Kendra has two provisioning editions: the Amazon Kendra Developer Edition, recommended for building proof of concepts (POCs), and the Amazon Kendra Enterprise Edition, which provides multi-AZ deployment, making it ideal for production. Amazon Kendra connectors work with both editions.

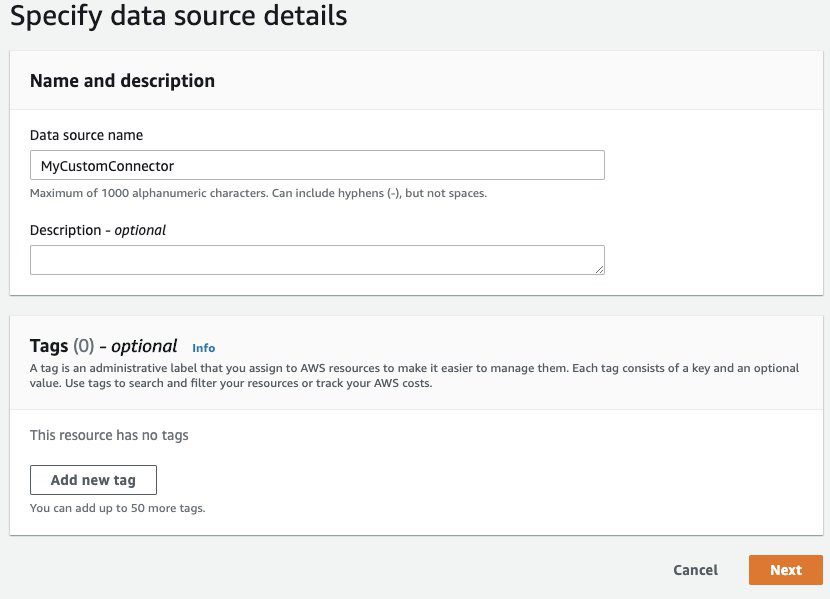

To create your custom data source, complete the following steps:

- On your index, choose Add data sources.

- For Custom data source connector, choose Add connector.

- For Data source name, enter a name (for example, MyCustomConnector).

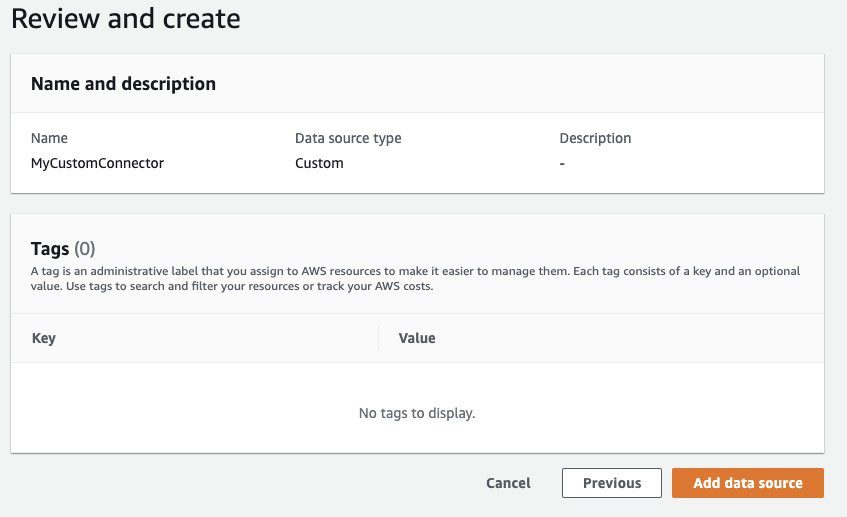

- Review the information in the Next steps section.

- Choose Add data source.
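If you prefer to script this step rather than use the console, a custom data source can also be created through the API. The following is a minimal sketch, assuming a Boto3 Kendra client is passed in as `kendra`; the helper name `create_custom_data_source` is hypothetical, and `index_id` stands for the ID of your existing index.

```python
def create_custom_data_source(kendra, index_id, name):
    """Create a data source of type CUSTOM and return its ID.

    `kendra` is expected to be a Boto3 Kendra client,
    for example boto3.client("kendra").
    """
    response = kendra.create_data_source(
        IndexId=index_id,
        Name=name,
        Type="CUSTOM",  # custom data sources take no connector configuration
    )
    return response["Id"]
```

The returned ID is the data source ID that the sync operations in the next section need.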

Syncing documents using the custom data source

Now that your connector is set up, you can ingest documents into Amazon Kendra using the BatchPutDocument API and get metrics to track the status of the ingestion. For that you need an ExecutionId, so before running your BatchPutDocument operation, you start a data source sync job. When the data sync is complete, you stop the data source sync job.

For this post, you use the latest version of the AWS SDK for Python (Boto3) and ingest 10 documents with the IDs 0–9.

Extract the .zip file containing the scraped content by using any standard file decompression utility. You should have 11 files on your local file system. In a real use case, these files are likely on a shared file server in your data center. When you create a custom data source, you have complete control over how the documents for the index are selected. Amazon Kendra only provides metric information that you can use to monitor the performance of your data source.

To sync your documents, enter the following code:
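A minimal sketch of such a sync with Boto3 might look like the following. It assumes the scraped files are plain-text `.txt` files in a local folder and that `kendra` is a Boto3 Kendra client. The function names, the `folder` argument, and the use of each file's position as its document ID are illustrative assumptions rather than the script used for this post. The two reserved attributes tie each document to the data source and the sync job so that the job metrics are updated.

```python
from pathlib import Path


def build_documents(folder, data_source_id, execution_id):
    """Turn every .txt file in `folder` into a BatchPutDocument entry."""
    documents = []
    for doc_id, path in enumerate(sorted(Path(folder).glob("*.txt"))):
        documents.append({
            # Illustrative ID scheme; real connectors need IDs that stay stable.
            "Id": str(doc_id),
            "Title": path.stem,
            "Blob": path.read_bytes(),
            "ContentType": "PLAIN_TEXT",
            "Attributes": [
                {"Key": "_data_source_id",
                 "Value": {"StringValue": data_source_id}},
                {"Key": "_data_source_sync_job_execution_id",
                 "Value": {"StringValue": execution_id}},
            ],
        })
    return documents


def sync_documents(kendra, index_id, data_source_id, folder, batch_size=10):
    """Start a sync job, ingest the files in batches, then stop the job."""
    job = kendra.start_data_source_sync_job(Id=data_source_id, IndexId=index_id)
    execution_id = job["ExecutionId"]
    failed = []
    try:
        documents = build_documents(folder, data_source_id, execution_id)
        # BatchPutDocument accepts at most 10 documents per call.
        for start in range(0, len(documents), batch_size):
            response = kendra.batch_put_document(
                IndexId=index_id,
                Documents=documents[start:start + batch_size],
            )
            failed.extend(response.get("FailedDocuments", []))
    finally:
        # Always stop the job, even if ingestion raised an error.
        kendra.stop_data_source_sync_job(Id=data_source_id, IndexId=index_id)
    return execution_id, failed
```

To run it against a real index, pass `boto3.client("kendra")` as `kendra` together with your own index ID and data source ID.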

If everything goes well, you see output similar to the following:

Allow some time for the sync job to finish, because document ingestion can continue as an asynchronous process after the data source sync job has been stopped. The status on the Amazon Kendra console should change from Syncing-indexing to Succeeded when all the documents have been ingested successfully. You can then confirm the count of successfully ingested documents and the metrics of the operation on the Amazon Kendra console.
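The same status and metrics can also be read programmatically. The sketch below polls the sync job history until the job leaves its in-progress states. It assumes `kendra` is a Boto3 Kendra client; the helper names and polling defaults are illustrative, and pagination of the history is ignored for brevity.

```python
import time

# Sync job states that mean the job has not finished yet.
IN_PROGRESS = {"SYNCING", "SYNCING_INDEXING", "STOPPING"}


def get_sync_job(kendra, index_id, data_source_id, execution_id):
    """Return the history entry for one sync job, or None if it is not listed."""
    response = kendra.list_data_source_sync_jobs(Id=data_source_id, IndexId=index_id)
    for job in response.get("History", []):
        if job.get("ExecutionId") == execution_id:
            return job
    return None


def wait_for_sync(kendra, index_id, data_source_id, execution_id,
                  poll_seconds=30, max_polls=20):
    """Poll until the job finishes; return its entry, or None on timeout."""
    for _ in range(max_polls):
        job = get_sync_job(kendra, index_id, data_source_id, execution_id)
        if job is not None and job["Status"] not in IN_PROGRESS:
            return job
        time.sleep(poll_seconds)
    return None
```

The returned entry carries a `Status` field and a `Metrics` dictionary with counts such as `DocumentsAdded`, `DocumentsModified`, `DocumentsDeleted`, and `DocumentsFailed`.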

Deleting documents from a custom data source

In this section, you explore how to remove documents from your index. You can use the same data source sync job that you used for ingesting the documents. This is useful if you have a changelog of the documents you’re syncing with your Amazon Kendra index, and during your sync job you want to delete documents from your index and also ingest new documents. You can do this by starting the sync job, performing the BatchDeleteDocument operation, performing the BatchPutDocument operation, and stopping the sync job.

For this post, we use a separate data source sync job to remove the documents with IDs 6, 7, and 8. See the following code:
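A minimal sketch of such a deletion, assuming `kendra` is a Boto3 Kendra client, could look like this. The helper name `delete_documents` is hypothetical. Passing `DataSourceSyncJobMetricTarget` associates the deletions with the sync job so that they show up in its metrics.

```python
def delete_documents(kendra, index_id, data_source_id, document_ids):
    """Delete documents inside their own sync job; return any failures."""
    job = kendra.start_data_source_sync_job(Id=data_source_id, IndexId=index_id)
    execution_id = job["ExecutionId"]
    try:
        response = kendra.batch_delete_document(
            IndexId=index_id,
            # Document IDs are strings in the Amazon Kendra API.
            DocumentIdList=[str(doc_id) for doc_id in document_ids],
            DataSourceSyncJobMetricTarget={
                "DataSourceId": data_source_id,
                "DataSourceSyncJobId": execution_id,
            },
        )
    finally:
        # Always stop the job, even if the delete call raised an error.
        kendra.stop_data_source_sync_job(Id=data_source_id, IndexId=index_id)
    return response.get("FailedDocuments", [])
```

For the documents in this post, the call would be `delete_documents(kendra, index_id, data_source_id, [6, 7, 8])`, with `index_id` and `data_source_id` set to your own values.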

When the process is complete, you see a message similar to the following:

On the Amazon Kendra console, you can see the operation details.

Running queries

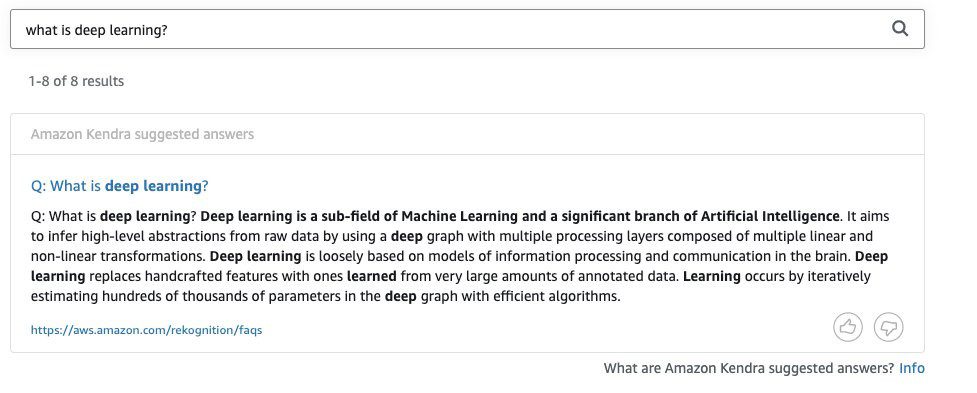

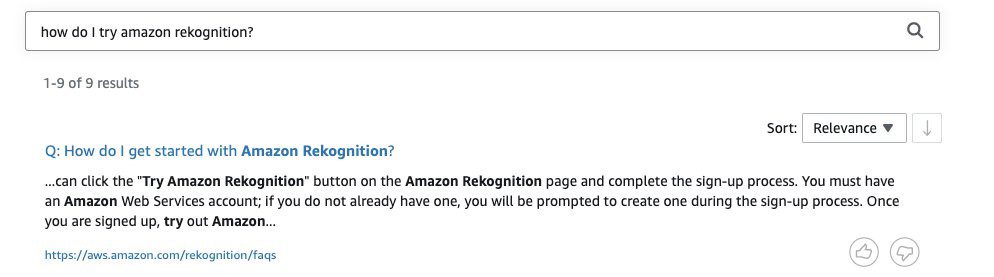

In this section, we show results from queries using the documents you ingested into your index.
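The screenshots in this section come from the console, but the same queries can be issued through the Query API. The following is a rough sketch, assuming `kendra` is a Boto3 Kendra client; the `search` helper and the shape of its return value are illustrative choices.

```python
def search(kendra, index_id, query_text, max_results=3):
    """Run a natural language query and return a short summary of the top results."""
    response = kendra.query(IndexId=index_id, QueryText=query_text)
    results = []
    for item in response.get("ResultItems", [])[:max_results]:
        results.append({
            "type": item.get("Type"),  # ANSWER, QUESTION_ANSWER, or DOCUMENT
            "title": item.get("DocumentTitle", {}).get("Text", ""),
            "excerpt": item.get("DocumentExcerpt", {}).get("Text", ""),
        })
    return results
```

For example, `search(kendra, index_id, "what is deep learning?")` returns up to three results, each with its type, document title, and excerpt.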

The following screenshot shows results for the query “what is deep learning?”

The following screenshot shows results for the query “how do I try amazon rekognition?”

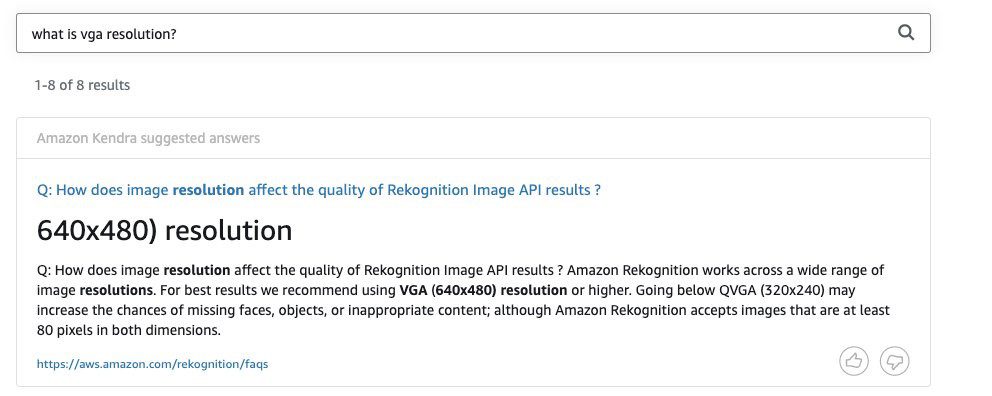

The following screenshot shows results for the query “what is vga resolution?”

Conclusion

In this post, we demonstrated how you can use the custom data source feature in Amazon Kendra to ingest documents from a custom data source into an Amazon Kendra index. We used a sample web connector to scrape content from AWS FAQs and stored it in a local file system. Then we outlined the steps you can follow to ingest those scraped documents into your Kendra index. We also detailed how to use CloudWatch metrics to check the status of an ingestion job, and ran a few natural language search queries to get relevant results from the ingested content.

We hope this post helps you take advantage of the intelligent search capabilities of Amazon Kendra to find accurate answers from your enterprise content. For more information about Amazon Kendra, watch AWS re:Invent 2019 – Keynote with Andy Jassy on YouTube.

About the Authors

Tapodipta Ghosh is a Senior Architect. He leads the Content And Knowledge Engineering Machine Learning team that focuses on building models related to AWS Technical Content. He also helps our customers with AI/ML strategy and implementation using our AI Language services like Kendra.

Juan Pablo Bustos is an AI Services Specialist Solutions Architect at Amazon Web Services, based in Dallas, TX. Outside of work, he loves spending time writing and playing music as well as trying random restaurants with his family.
