Perform batch fraud predictions with Amazon Fraud Detector without writing code or integrating an API

Amazon Fraud Detector is a fully managed service that makes it easy to identify potentially fraudulent online activities, such as the creation of fake accounts or online payment fraud. Unlike general-purpose machine learning (ML) packages, Amazon Fraud Detector is designed specifically to detect fraud. Amazon Fraud Detector combines your data, the latest in ML science, and more than 20 years of fraud detection experience from Amazon.com and AWS to build ML models tailor-made to detect fraud in your business.

After you train a fraud detection model that is customized to your business, you create rules to interpret the model’s outputs and create a detector to contain both the model and rules. You can then evaluate online activities for fraud in real time by calling your detector through the GetEventPrediction API and passing details about a single event in each request. But what if you don’t have the engineering support to integrate the API, or you want to quickly evaluate many events at once? Previously, you needed to create a custom solution using AWS Lambda and Amazon Simple Storage Service (Amazon S3). This required you to write and maintain code, and it could only evaluate a maximum of 4,000 events at once. Now, you can generate batch predictions in Amazon Fraud Detector to quickly and easily evaluate a large number of events for fraud.

Solution overview

To use the batch predictions feature, you must complete the following high-level steps:

- Create and publish a detector that contains your fraud prediction model and rules, or simply a ruleset.

- Create an input S3 bucket to upload your file to and, optionally, an output bucket to store your results.

- Create a CSV file that contains all the events you want to evaluate.

- Perform a batch prediction job through the Amazon Fraud Detector console.

- Review your results in the CSV file that is generated and stored to Amazon S3.

Create and publish a detector

You can create and publish a detector version using the Amazon Fraud Detector console or via the APIs. For console instructions, see Get started (console).

Create the input and output S3 buckets

Create an S3 bucket on the Amazon S3 console where you upload your CSV files. This is your input bucket. Optionally, you can create a second output bucket where Amazon Fraud Detector stores the results of your batch predictions as CSV files. If you don’t specify an output bucket, Amazon Fraud Detector stores both your input and output files in the same bucket.

Make sure you create your buckets in the same Region as your detector. For more information, see Creating a bucket.
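If you prefer to script the bucket setup instead of using the console, the following is a minimal sketch that assumes the boto3 S3 client. The bucket names and Region in the usage note are placeholders, not values from this post. The one non-obvious detail is that S3 rejects an explicit `LocationConstraint` of `us-east-1`, so the helper omits it for that Region.

```python
def bucket_request(bucket_name, region):
    """Build the keyword arguments for an S3 CreateBucket call in `region`."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the S3 default Region and must not be sent as a LocationConstraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_buckets(region, bucket_names):
    """Create each bucket in `region`. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so bucket_request() works without boto3 installed

    s3 = boto3.client("s3", region_name=region)
    for name in bucket_names:
        s3.create_bucket(**bucket_request(name, region))
```

For example, `create_buckets("us-west-2", ["my-afd-input", "my-afd-output"])` would create both buckets in the Region that hosts the detector, assuming those hypothetical names are available.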

Create a sample CSV file of event records

Prepare a CSV file that contains the events you want to evaluate. In this file, include a column for each variable in the event type associated with your detector. In addition, include columns for:

- EVENT_ID – An identifier for the event, such as a transaction number. The field values must satisfy the following regular expression pattern: ^[0-9a-z_-]+$.

- ENTITY_ID – An identifier for the entity performing the event, such as an account number. The field values must also satisfy the following regular expression pattern: ^[0-9a-z_-]+$.

- EVENT_TIMESTAMP – A timestamp, in ISO 8601 format, for when the event occurred.

- ENTITY_TYPE – The entity that performs the event, such as a customer or a merchant.

Column header names must match their corresponding Amazon Fraud Detector variable names exactly. The preceding four required column header names must be uppercase, and the column header names for the variables associated with your event type must be lowercase. You receive an error for any events in your file that have missing values.

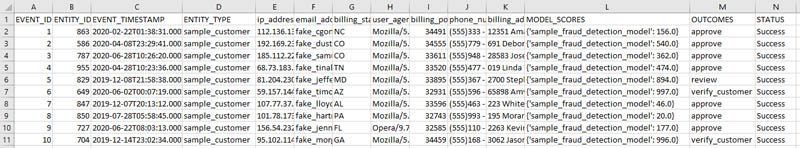

In your CSV file, each row corresponds to one event for which you want to generate a prediction. The CSV file can be up to 50 MB, which allows for about 50,000-100,000 events depending on your event size. The following screenshot shows an example of an input CSV file.

For more information about Amazon Fraud Detector variable data types and formatting, see Create a variable.
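Because events with missing values or malformed IDs produce errors, it can help to check the file before uploading it. The following sketch applies the rules described in this section (required uppercase columns, lowercase variable columns, the ID pattern, and no empty values) using only the Python standard library. The variable column `ip_address` and the sample values in the usage note are hypothetical; substitute the variables from your own event type.

```python
import csv
import io
import re

REQUIRED_COLUMNS = ["EVENT_ID", "ENTITY_ID", "EVENT_TIMESTAMP", "ENTITY_TYPE"]
ID_PATTERN = re.compile(r"^[0-9a-z_-]+$")  # pattern quoted in this post


def validate_rows(fieldnames, rows):
    """Return a list of human-readable problems found in the input rows."""
    problems = []
    for column in REQUIRED_COLUMNS:
        if column not in fieldnames:
            problems.append(f"missing required column {column}")
    for column in fieldnames:
        if column not in REQUIRED_COLUMNS and column != column.lower():
            problems.append(f"variable column {column} is not lowercase")
    for number, row in enumerate(rows, start=1):
        # Every column must have a value in every row.
        for column in fieldnames:
            if not (row.get(column) or "").strip():
                problems.append(f"row {number}: empty value for {column}")
        # EVENT_ID and ENTITY_ID must match the documented pattern.
        for column in ("EVENT_ID", "ENTITY_ID"):
            value = row.get(column) or ""
            if value and not ID_PATTERN.fullmatch(value):
                problems.append(f"row {number}: {column} {value!r} is invalid")
    return problems


def to_csv(fieldnames, rows):
    """Serialize the rows to CSV text with a header line."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```

A row such as `{"EVENT_ID": "txn-001", "ENTITY_ID": "acct_42", "EVENT_TIMESTAMP": "2021-03-01T12:00:00Z", "ENTITY_TYPE": "customer", "ip_address": "192.0.2.10"}` passes these checks, while an `EVENT_ID` of `TXN 001` is reported because it contains uppercase letters and a space.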

Perform a batch prediction

Upload your CSV file to your input bucket. Now it’s time to start a batch prediction job.

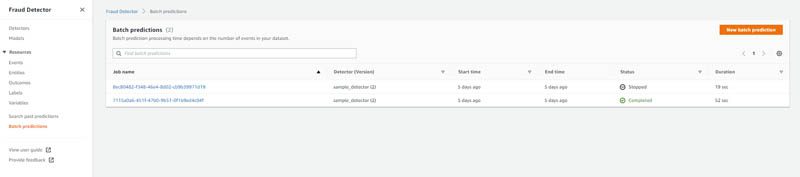

- On the Amazon Fraud Detector console, choose Batch predictions in the navigation pane.

This page contains a summary of past batch prediction jobs.

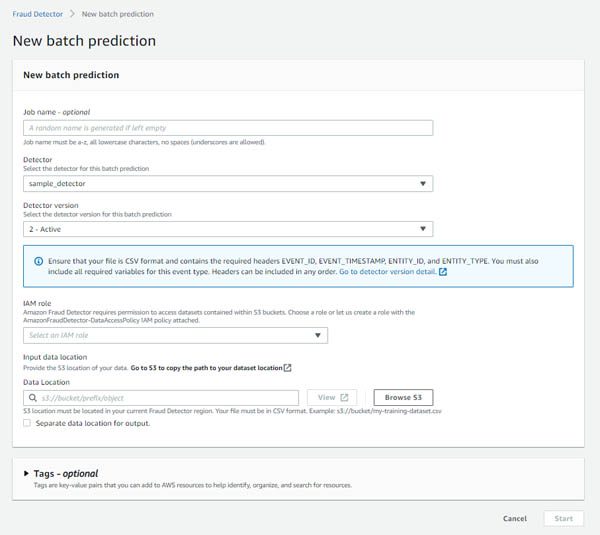

- Choose New batch prediction.

- For Job name, you can enter a name for your job or let Amazon Fraud Detector assign a random name.

- For Detector and Detector version, choose the detector and version you want to use for your batch prediction.

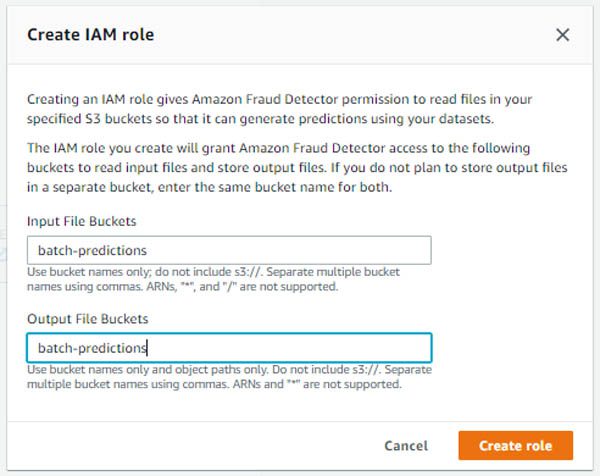

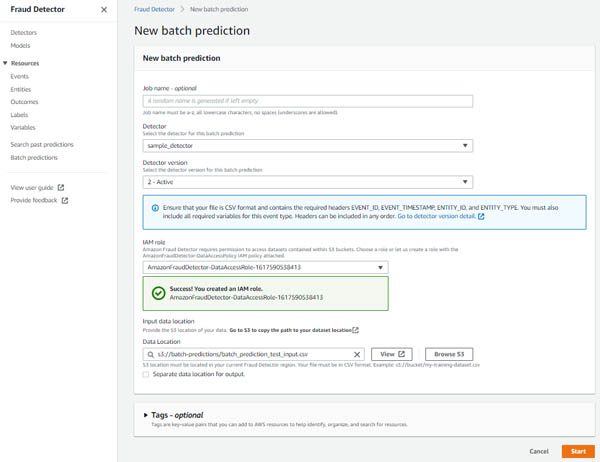

- For IAM role, if you already have an AWS Identity and Access Management (IAM) role, you can choose it from the drop-down menu. Alternatively, you can create one by choosing Create IAM role.

When creating a new IAM role, you can specify different buckets for the input and output files or enter the same bucket name for both.

If you use an existing IAM role, such as the one that you use for accessing datasets for model training, make sure the role has the s3:PutObject permission attached before you start a batch prediction job.

- After you choose your IAM role, for Data Location, enter the S3 URI for your input file.

- Choose Start.

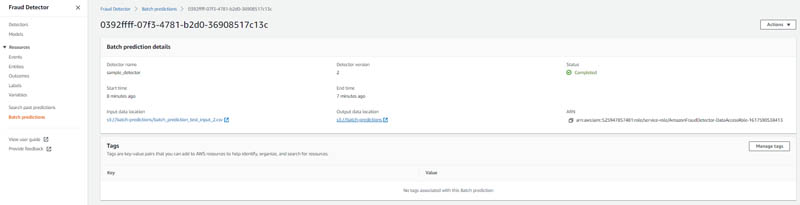

You’re returned to the Batch predictions page, where you can see the job you just created. Batch prediction job processing times vary based on how many events you’re evaluating. For example, a 20 MB file (about 20,000 events) takes about 12 minutes. You can view the status of the job at any time on the Amazon Fraud Detector console. Choosing the job name opens a job detail page with additional information like the input and output data locations.
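The console workflow above is the focus of this post, but the same job can also be started programmatically. The following is a rough sketch that assumes the boto3 `frauddetector` client and its `create_batch_prediction_job` and `get_batch_prediction_jobs` operations. Every name, S3 URI, and ARN passed in is a placeholder that you supply; none of them come from this post.

```python
def batch_job_request(job_id, input_uri, output_uri, event_type,
                      detector, detector_version, role_arn):
    """Build the parameters for a batch prediction job request."""
    for uri in (input_uri, output_uri):
        if not uri.startswith("s3://"):
            raise ValueError(f"expected an S3 URI, got {uri!r}")
    return {
        "jobId": job_id,
        "inputPath": input_uri,
        "outputPath": output_uri,
        "eventTypeName": event_type,
        "detectorName": detector,
        "detectorVersion": detector_version,
        "iamRoleArn": role_arn,  # role needs s3:PutObject on the output location
    }


def start_batch_job(request):
    """Start the job and return its current status.

    Requires boto3 and AWS credentials.
    """
    import boto3  # imported lazily so batch_job_request() works without boto3

    client = boto3.client("frauddetector")
    client.create_batch_prediction_job(**request)
    response = client.get_batch_prediction_jobs(jobId=request["jobId"])
    return response["batchPredictions"][0]["status"]
```

Keeping the request builder separate from the API call makes it possible to review or log the parameters before anything is submitted.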

Review your batch prediction results

After the job is complete, you can download your output file from the S3 bucket you designated. To find the file quickly, choose the link under Output data location on the job detail page.

The output file has all the columns you provided in your input file, plus three additional columns:

- STATUS – Shows Success if the event was successfully evaluated or an error code if the event couldn’t be evaluated

- OUTCOMES – Denotes which outcomes were returned by your ruleset

- MODEL_SCORES – Denotes the risk scores that were returned by any models called by your ruleset

The following screenshot shows an example of an output CSV file.
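After you download the output file, a short script can summarize it. The sketch below counts events by STATUS and collects the EVENT_ID of every row whose OUTCOMES cell mentions a given outcome. It assumes the OUTCOMES cell contains the outcome names as plain text and uses a simple substring match, so an outcome name that is a substring of another would also match; adjust the check to the exact format of your file. The outcome name in the usage note is hypothetical.

```python
import csv
import io
from collections import Counter


def summarize(output_csv_text, outcome_of_interest):
    """Return (status counts, event IDs whose OUTCOMES mention the outcome)."""
    reader = csv.DictReader(io.StringIO(output_csv_text))
    statuses = Counter()
    flagged = []
    for row in reader:
        statuses[row["STATUS"]] += 1
        # Substring match: an assumption about how outcomes are written in the cell.
        if outcome_of_interest in row["OUTCOMES"]:
            flagged.append(row["EVENT_ID"])
    return statuses, flagged


def summarize_file(path, outcome_of_interest):
    """Convenience wrapper that reads a downloaded output file from disk."""
    with open(path, newline="", encoding="utf-8") as handle:
        return summarize(handle.read(), outcome_of_interest)
```

For example, `summarize_file("output.csv", "high_risk")` returns how many events succeeded or failed and which events your rules marked with the hypothetical `high_risk` outcome, which you could then route to a manual review.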

Conclusion

Congrats! You have successfully performed a batch of fraud predictions. You can use the batch predictions feature to test changes to your fraud detection logic, such as a new model version or updated rules. You can also use batch predictions to perform asynchronous fraud evaluations, like a daily check of all accounts created in the past 24 hours.

Depending on your use case, you may want to use your prediction results in other AWS services. For example, you can analyze the prediction results in Amazon QuickSight or send results that are high risk to Amazon Augmented AI (Amazon A2I) for a human review of the prediction. You may also want to use Amazon CloudWatch to schedule recurring batch predictions.

Amazon Fraud Detector has a 2-month free trial that includes 30,000 predictions per month. After that, pricing starts at $0.005 per prediction for rules-only predictions and $0.03 for ML-based predictions. For more information, see Amazon Fraud Detector pricing. For more information about Amazon Fraud Detector, including links to additional blog posts, sample notebooks, user guide, and API documentation, see Amazon Fraud Detector.

If you have any questions or comments, let us know in the comments!

About the Author

Bilal Ali is a Sr. Product Manager working on Amazon Fraud Detector. He listens to customers’ problems and finds ways to help them better fight fraud and abuse. He spends his free time watching old Jeopardy episodes and searching for the best tacos in Austin, TX.
