Automate weed detection in farm crops using Amazon Rekognition Custom Labels

Amazon Rekognition Custom Labels makes automated weed detection in crops easier. Instead of manually locating weeds, you can automate the process with Amazon Rekognition Custom Labels, which allows you to build machine learning (ML) models that can be trained with only a handful of images and yet are capable of accurately predicting which areas of a crop have weeds and need treatment. This saves farmers time, effort, and weed treatment costs.

Every farm has weeds. Weeds compete with crops and, if not controlled, take up precious space, sunlight, water, and nutrients and reduce crop yield. Weeds grow much faster than crops and need immediate and effective control. Detecting weeds in crops is time-consuming and is currently done manually. Although weed spray machines exist that can be programmed to go to an exact location in a field and spray weed treatment in just those spots, the process of locating those weeds is not yet automated.

Weed location automation isn’t an easy process. This is where computer vision and AI come in. Amazon Rekognition is a fully managed computer vision service that allows developers to analyze images and videos for a variety of use cases, including face identification and verification, media intelligence, custom industrial automation, and workplace safety. Detecting custom objects and scenes can be hard. Training and improving the accuracy of a computer vision model requires a large amount of data and is a complex problem. Amazon Rekognition Custom Labels allows you to detect custom labeled objects and scenes with just a handful of training images.

In this post, we use Amazon Rekognition Custom Labels to build an ML model that detects weeds in crops. We’re presently helping researchers at a US university automate this process for local farmers.

Create and train a weed detection model

We solve this problem by feeding images of crops with and without weeds to Amazon Rekognition Custom Labels and building an ML model. After the model is built and deployed, we can perform inference by feeding the model images from field cameras. This way farmers can automate weed detection in their fields. Our experiments showed that highly accurate models can be built with as few as 32 images.

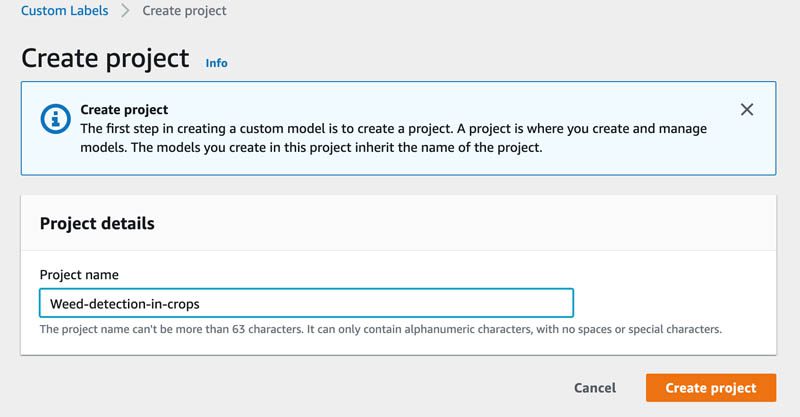

- On the Amazon Rekognition console, choose Use Custom Labels.

- Choose Projects.

- Choose Create project.

- For Project name, enter a name (for example, Weed-detection-in-crops).

- Choose Create project.
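The same project can also be created programmatically. The following is a minimal sketch, assuming the AWS SDK for Python (boto3) and configured AWS credentials; the helper name `create_weed_project` is ours for illustration and isn't part of the SDK.

```python
def create_weed_project(client, project_name="Weed-detection-in-crops"):
    """Create a Custom Labels project and return its ARN.

    `client` is expected to be a boto3 Rekognition client.
    """
    response = client.create_project(ProjectName=project_name)
    return response["ProjectArn"]


# Usage (requires the boto3 package and AWS credentials):
#   import boto3
#   client = boto3.client("rekognition")
#   project_arn = create_weed_project(client)
```

Passing the client in as an argument keeps the helper easy to exercise with a stub before running it against a real account.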

Next, we create a dataset.

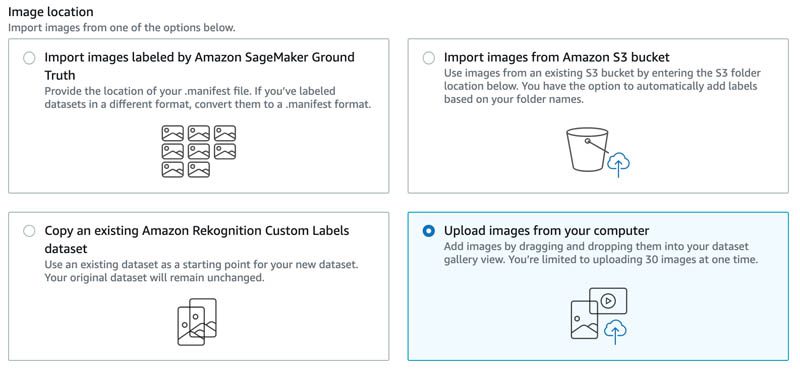

- On the Amazon Rekognition Custom Labels console, choose Datasets.

- Choose Create dataset.

- Enter a name for your dataset, such as crop-weed-ds.

- Select your training data location (for this post, we select Upload images from your computer).

- Choose Add images to upload your images.

For this post, we use 32 field images, of which half are images of crops without weeds and half are images of weed-infested crops.

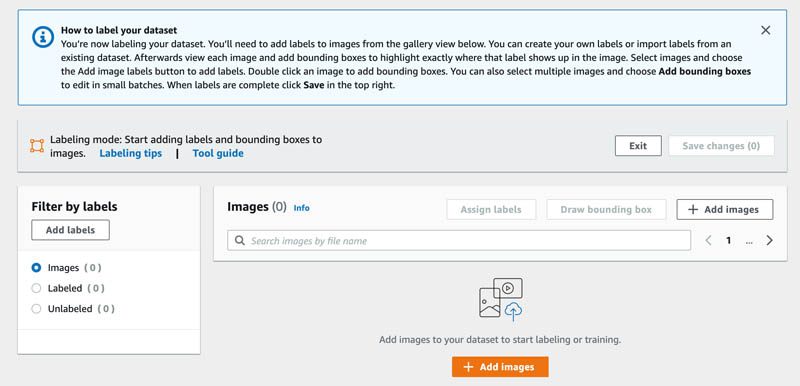

- After you upload your training images, choose Add labels to add labels to your training data.

For this post, we define two labels: good-crop and weed.

- Assign your uploaded images one of these two labels depending on that image type.

- Save these changes.

We now have labeled images for both the classes we defined.

- Create another dataset for testing called test-ds, which contains four labeled images for testing purposes.
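Before uploading, it can help to confirm that the two classes are evenly represented. The following sketch assumes a hypothetical local layout with one folder per label (for example, `images/good-crop` and `images/weed`); the folder names and helper functions are illustrative and not something the console requires.

```python
from collections import Counter
from pathlib import Path

# Image types we count; adjust to match your own files.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_label(root):
    """Count image files in each immediate subfolder of `root`.

    Each subfolder name is treated as a label.
    """
    counts = Counter()
    for label_dir in Path(root).iterdir():
        if label_dir.is_dir():
            counts[label_dir.name] = sum(
                1
                for f in label_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return dict(counts)


def is_balanced(counts):
    """Return True when every label has the same number of images."""
    return len(set(counts.values())) <= 1


# Usage:
#   counts = count_images_per_label("images")
#   print(counts, is_balanced(counts))
```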

We’re now ready to train a new model.

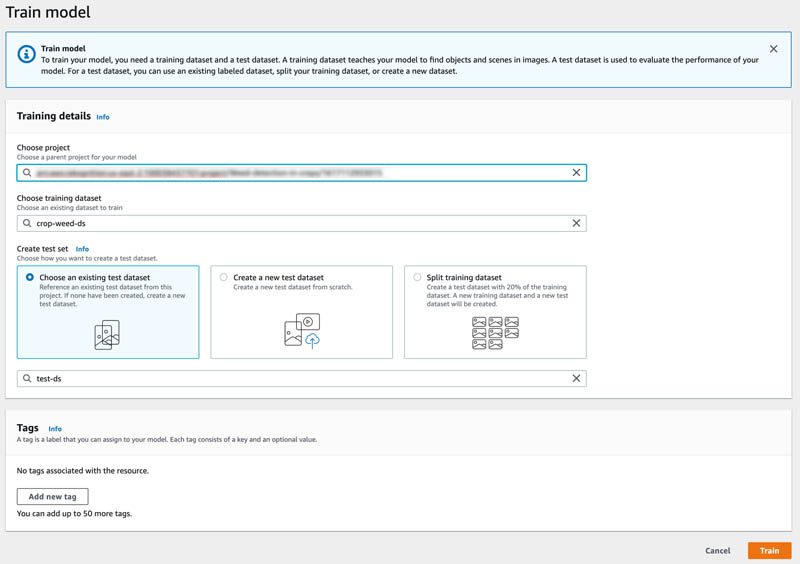

- Select the project you created and choose Train new model.

- Choose the training dataset and test dataset that you created earlier.

- Choose Train.
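Training can also be started from the SDK. This sketch assumes boto3, a project that already has its training and test datasets attached (so the `TrainingData` and `TestingData` arguments are omitted), and an S3 bucket you own for the training output. The helper name, version name, bucket, and prefix are placeholders.

```python
def train_weed_model(client, project_arn, version_name, output_bucket,
                     output_prefix="training-output"):
    """Start training a new model version and return its ARN.

    `client` is expected to be a boto3 Rekognition client. Training runs
    asynchronously; this call returns before training finishes.
    """
    response = client.create_project_version(
        ProjectArn=project_arn,
        VersionName=version_name,
        # Where Amazon Rekognition writes the training results.
        OutputConfig={"S3Bucket": output_bucket, "S3KeyPrefix": output_prefix},
    )
    return response["ProjectVersionArn"]


# Usage (placeholder values):
#   import boto3
#   client = boto3.client("rekognition")
#   model_arn = train_weed_model(client, project_arn, "v1", "my-output-bucket")
```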

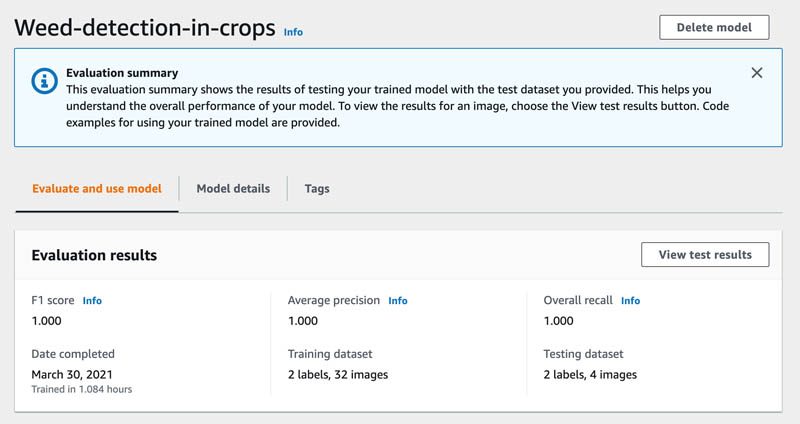

After the model is trained, we can see how it performed. Our model scored perfectly on our four-image test dataset, with an F1 score, precision, and recall of 1.0.
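For reference, the F1 score is the harmonic mean of precision and recall, so it reaches 1.0 only when both are 1.0. A small sketch of the calculation (the function name is ours):

```python
def f1_score(precision, recall):
    """Return the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both are 0
    return 2 * precision * recall / (precision + recall)


# With the values reported for our model:
print(f1_score(1.0, 1.0))  # prints 1.0
```

Because it is a harmonic mean, a drop in either precision or recall pulls F1 down more than a simple average would.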

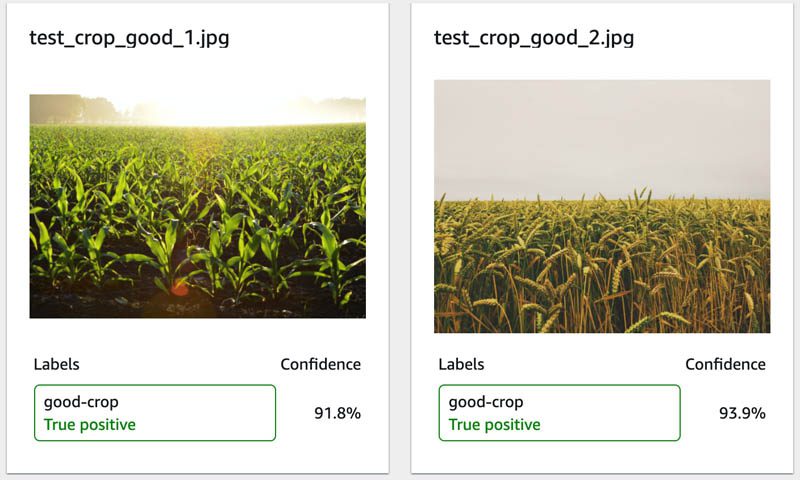

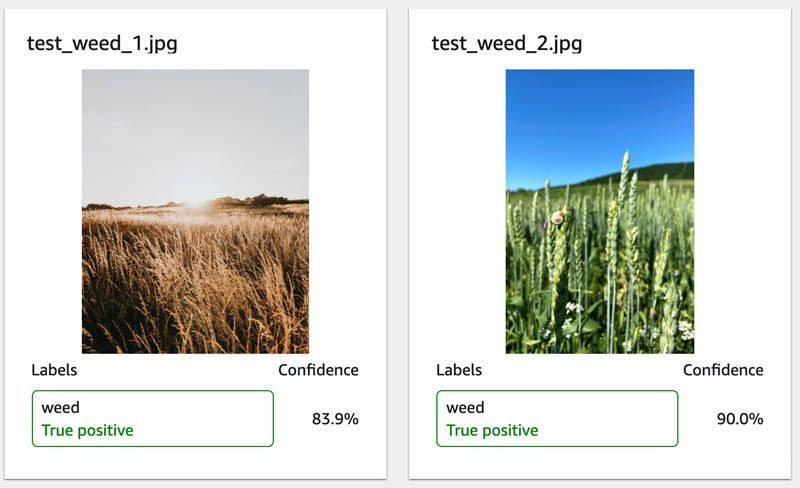

We can choose View test results to see how this model performed on our test data. The following screenshot shows that good crops were predicted accurately as good crops and weed-infested crops were detected as containing weeds.

Test the model via your browser

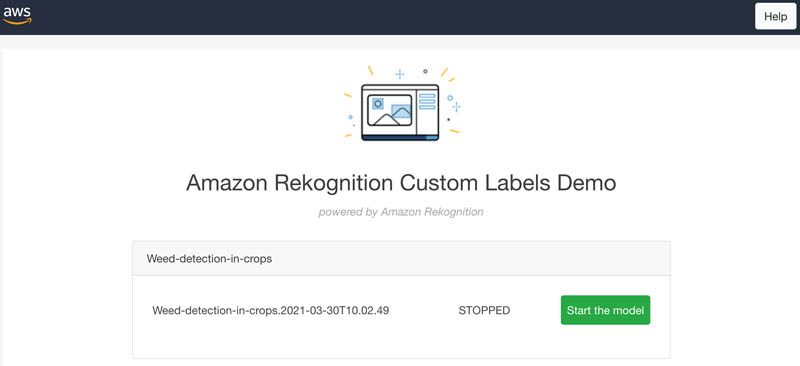

We offer an AWS CloudFormation template in the GitHub repo that allows you to test the model through a browser. Choose the appropriate template depending on your Region. The template launches the required resources for you to test the model.

The template asks for your email when you launch it. When the template is ready, it emails you the required credentials. The Outputs tab for the CloudFormation stack has a website URL for testing the model.

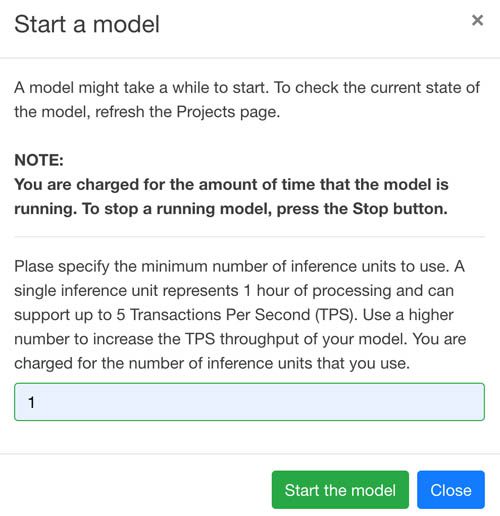

- On the browser front end, choose Start the model.

- Enter 1 for inference units.

- Choose Start the model.

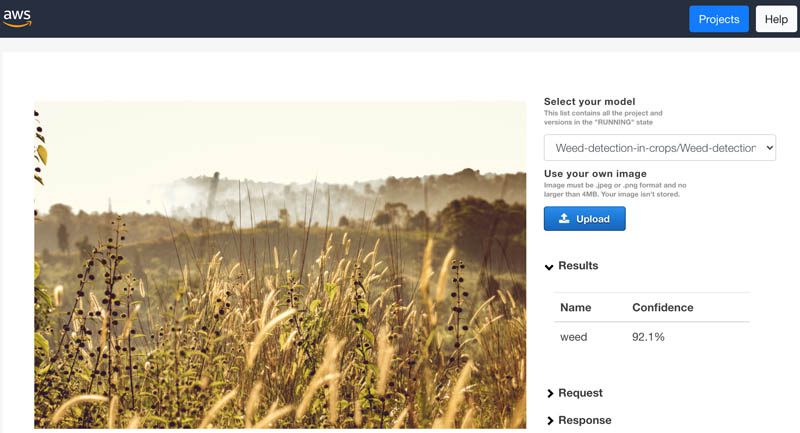

- When the model is running, you can upload any image to it and get classification results.

- Stop the model once your testing is completed.
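Starting and stopping the model can be scripted as well. The following is a sketch under the assumption that you use boto3 and already know the ARN of the trained model version; the helper names are illustrative.

```python
def start_model(client, model_arn, inference_units=1):
    """Ask Amazon Rekognition to start the model; returns the reported status."""
    response = client.start_project_version(
        ProjectVersionArn=model_arn,
        MinInferenceUnits=inference_units,
    )
    return response["Status"]


def stop_model(client, model_arn):
    """Ask Amazon Rekognition to stop the model; returns the reported status."""
    response = client.stop_project_version(ProjectVersionArn=model_arn)
    return response["Status"]


# Usage (placeholder ARN):
#   import boto3
#   client = boto3.client("rekognition")
#   print(start_model(client, model_arn))  # starting takes a while
#   ...run your tests once the model is running...
#   print(stop_model(client, model_arn))
```

Both calls return before the model has actually finished starting or stopping, so check the model status before sending images to it.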

Perform inference using the SDK

Inference from the model is also possible using the SDK. The following code runs on the same image as in the previous section:
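A minimal sketch of such a call, assuming boto3, a running model, and a local image file. The helper name, the ARN, the file path, and the 50 percent confidence threshold are placeholders chosen for illustration.

```python
def classify_image(client, model_arn, image_path, min_confidence=50.0):
    """Send a local image to the model and return (label, confidence) pairs.

    `client` is expected to be a boto3 Rekognition client, and the model
    identified by `model_arn` must already be running.
    """
    with open(image_path, "rb") as f:
        image_bytes = f.read()
    response = client.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    return [(label["Name"], label["Confidence"])
            for label in response["CustomLabels"]]


# Usage (placeholder values):
#   import boto3
#   client = boto3.client("rekognition")
#   for name, confidence in classify_image(client, model_arn, "field.jpg"):
#       print(name, confidence)
```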

The results from using the SDK are the same as those returned earlier in the browser.

Best practices

Consider the following best practices when using Amazon Rekognition Custom Labels:

- Use images that have high resolution

- Crop out any background noise in the image

- Have a good contrast between the object you’re trying to detect and other objects in the image

- Delete any resources that you have created once your project is completed

Conclusion

In this post, we showed how you can automate weed detection in crops by building custom ML models with Amazon Rekognition Custom Labels. Amazon Rekognition Custom Labels takes care of deep learning complexities behind the scenes, allowing you to build powerful image classification models with just a handful of training images. You can improve model accuracy by increasing the number of images in your training data and the resolution of those images. Farmers can deploy models such as these into their weed spray machines to reduce cost and manual effort. To learn more, including other use cases and video tutorials, visit the Amazon Rekognition Custom Labels webpage.

About the Author

Raju Penmatcha is a Senior AI/ML Specialist Solutions Architect at AWS. He works with education, government, and nonprofit customers on machine learning and artificial intelligence related projects, helping them build solutions using AWS. When not helping customers, he likes traveling to new places.
