ML model explainability with Amazon SageMaker Clarify and the SKLearn pre-built container

Amazon SageMaker Clarify is a new machine learning (ML) feature that enables ML developers and data scientists to detect possible bias in their data and ML models and explain model predictions. It’s part of Amazon SageMaker, an end-to-end platform to build, train, and deploy your ML models. Clarify was made available at AWS re:Invent 2020.

In this post, we focus on the explainability capabilities in Clarify. With the concept of Shapley values, Clarify helps identify the importance of various features in overall model predictions as well as for individual inferences. These feature importance graphs are available after the model has trained, and Clarify can also help identify any shifts in feature importance over time through Amazon SageMaker Model Monitor.

At AWS, we take our mission to put ML in the hands of every developer seriously. For this reason, we want to present a way of using Clarify with the scikit-learn pre-built container. This approach generalizes across all pre-built and custom-built containers. We also provide a solution for integrating this new feature into your ML pipeline using the AWS Step Functions Data Science Python SDK.

In this post, we provide a step-by-step guide for using these capabilities and provide code that helps you get started.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

Deploy your resources

Before we get started, we need certain roles and policies that let your SageMaker notebooks interact with AWS Step Functions. We create these with an AWS CloudFormation template. The template also creates a SageMaker notebook instance that automatically downloads this GitHub repository. Launch the stack with the following link:

Specify the Amazon Simple Storage Service (Amazon S3) bucket that you have in place for storing your data during stack creation.

After your stack is deployed, navigate to the SageMaker notebook instances on the SageMaker console. There you will find an up-and-running notebook that you can start using.

Use Clarify with a pre-built SKLearn container

One of the most-used Python libraries in the ML space is scikit-learn. For this reason, AWS offers a pre-built container in SageMaker for training and deploying ML models. With Clarify, you can now explain why your scikit-learn model predicts the way it does. For more information about getting started with Clarify using the built-in XGBoost model, see Fairness and Explainability with SageMaker Clarify.

Depending on whether you use a pre-built container or bring your own container, the way to integrate Clarify is slightly different. In the first part of this post, we explain the steps involved to work with Clarify and the pre-built SKLearn container.

Get started by setting global variables

Throughout our examples, we use the abalone dataset, originally from the UCI Machine Learning Repository. The example Jupyter notebook we provide downloads the dataset through code to your SageMaker notebook instance.

The dataset contains nine fields, starting with the Rings number, which indicates the age of the abalone (its age in years equals the number of rings plus 1.5). Usually, the rings are counted under a microscope to estimate the abalone’s age. We use our algorithm to predict the abalone age based on the other features within the dataset.

These features are physical measurements that can be taken with the correct tools, so we remove the need to examine the abalone under a microscope to determine its age.

Overall, we’re trying to solve a regression problem in which we try to predict the target variable Rings. While working with the notebook, you use this data and generate multiple datasets that are uploaded to Amazon S3. To store your data on Amazon S3, we define the bucket and data prefixes at the beginning:
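As a minimal sketch of this setup, the following snippet builds the S3 locations from a bucket name and a data prefix. The bucket name, prefix, and file names are hypothetical placeholders; substitute the bucket you specified during stack creation.

```python
# Hypothetical values; replace with the bucket you passed to the CloudFormation stack.
bucket = "my-clarify-demo-bucket"
data_prefix = "abalone/data"


def s3_uri(bucket_name: str, *parts: str) -> str:
    """Join a bucket name and key parts into an s3:// URI."""
    key = "/".join(part.strip("/") for part in parts if part)
    return f"s3://{bucket_name}/{key}"


# Locations of the three CSV files used later in the walkthrough
# (the file names are illustrative assumptions).
train_uri = s3_uri(bucket, data_prefix, "train.csv")
val_uri = s3_uri(bucket, data_prefix, "validation.csv")
baseline_uri = s3_uri(bucket, data_prefix, "baseline.csv")

print(train_uri)
```

Keeping the bucket and prefix in one place makes it easier to point the training job, the Clarify job, and the pipeline at the same locations.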

The S3 bucket is used to store your data and save the trained model artifacts. We also set a data prefix so that your training data is stored accordingly. Our example notebook walks you through the process of reading, cleaning, and storing the data. One important thing to note is that we store three CSV files to our S3 bucket, namely train_uri, val_uri, and baseline_uri:

The training and validation datasets each have a header when written to CSV. The target column Rings is their first column. The Kernel SHAP algorithm requires a baseline (also known as a background dataset). These baseline samples only include features and have no header by definition. For more information, see SHAP Baselines for Explainability.
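The difference between the files can be sketched with the Python standard library alone. The column names follow the UCI description of the dataset, and the two rows are made-up values for illustration; the example notebook works on the full data and uploads the results to Amazon S3.

```python
import csv
import io

# Assumed column order: target first, then the eight abalone features.
COLUMNS = ["Rings", "Sex", "Length", "Diameter", "Height",
           "Whole weight", "Shucked weight", "Viscera weight", "Shell weight"]

# Two made-up rows for illustration only.
rows = [
    [10, "M", 0.5, 0.4, 0.1, 0.6, 0.25, 0.12, 0.2],
    [8, "F", 0.4, 0.3, 0.1, 0.3, 0.12, 0.06, 0.1],
]


def to_csv(data_rows, header=None):
    """Serialize rows to CSV text, optionally preceded by a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    if header is not None:
        writer.writerow(header)
    writer.writerows(data_rows)
    return buffer.getvalue()


# Training and validation files: header included, Rings as the first column.
train_csv = to_csv(rows, header=COLUMNS)

# Baseline file: features only (target dropped) and no header.
baseline_csv = to_csv([row[1:] for row in rows])

print(train_csv)
print(baseline_csv)
```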

You also must set the location of the source code and entry point. For more information, see Using Scikit-learn with the SageMaker Python SDK. You can use the following source directory and entry point from our GitHub repo:

The SKLearn pre-built container runs the predictor.py script to train the model. This script also overrides the input, output, model, and predict functions in your hosted endpoint.

Develop the predictor.py file

We must modify the code in Using Scikit-learn with the SageMaker Python SDK to work with Clarify. Because we’re applying preprocessing in our entry script, it’s crucial to have the same column names in place for both training and inference. In the main function that runs the model training, we apply the RandomForestRegressor. See the following script for the preprocessing and training step:

In predict_fn, make sure you set the headers of your DataFrame. As we mentioned earlier, Clarify uses a dataset without headers, but the ML model object is trained on data with column names. For this reason, we set the column names during inference before we apply the ML model. See the following code:

The object config_data is passed to the SKLearn container through the .fit() method call as follows:

Within the SKLearn container, the object config_data is returned with the trained model from the model_fn function so that it can be used within predict_fn, as follows:
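The following is a minimal sketch of how model_fn and predict_fn could work together, not the exact code from the repository. It assumes that the training script saved the model as model.joblib and the configuration as config_data.json in the model directory, and that config_data holds the feature names under a feature_names key; all three names are hypothetical.

```python
import json
import os


def feature_columns(config_data):
    """Return the feature names the model was trained on (assumed config layout)."""
    return list(config_data["feature_names"])


def model_fn(model_dir):
    """Load the trained model together with the config saved next to it."""
    import joblib  # available in the SKLearn container; imported lazily here

    model = joblib.load(os.path.join(model_dir, "model.joblib"))
    with open(os.path.join(model_dir, "config_data.json")) as handle:
        config_data = json.load(handle)
    return model, config_data


def predict_fn(input_data, model_and_config):
    """Name the header-less columns sent by Clarify, then predict."""
    import pandas as pd  # available in the SKLearn container

    model, config_data = model_and_config
    frame = pd.DataFrame(input_data)
    # Raises a ValueError if the request has a different number of columns.
    frame.columns = feature_columns(config_data)
    return model.predict(frame)
```

Returning a tuple from model_fn keeps the column names next to the model, so predict_fn never has to guess the order of the features.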

Extend the solution to bring your own container

If you’re not using a built-in model from SageMaker or a built-in container, you can also bring your own Docker container. For more information, see Using Docker containers with SageMaker. The concepts we discussed are still valid. When bringing your own Docker image, you also need to define the following:

- Main function to train your model

- Input function

- Output function

- Model function

- Predict function

You can apply the same approach as we did with the pre-built SKLearn container.

Run Clarify as a SageMaker Processing job

To run Clarify with your custom-built scikit-learn model, see Fairness and Explainability with SageMaker Clarify. You use the SageMaker Python SDK to define three configurations: DataConfig, ModelConfig, and SHAPConfig. All three are compiled into one JSON file named analysis_config.json and saved in Amazon S3 by the SageMaker Python SDK. We make one slight change to the SHAPConfig in our example. Instead of using your local data available in your SageMaker notebook for the SHAP baseline, we point the configuration to the CSV file we uploaded to Amazon S3 earlier in the notebook. See the following code:

The num_samples value is set low to allow the notebook to run fast. Ideally, this should be set according to the number of features. Higher values result in better fidelity for feature attributions and increase job duration. For more information, see Feature Attributions that Use Shapley Values and Amazon AI Fairness and Explainability Whitepaper.
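The three configurations could be sketched as follows with the SageMaker Python SDK. The function name, instance type, aggregation method, and the NUM_SAMPLES value are illustrative assumptions rather than the values from our notebook; the SDK import happens inside the function so that the module loads even where the SDK is not installed.

```python
# Illustrative low value so that the job finishes quickly (assumption).
NUM_SAMPLES = 20


def build_clarify_configs(train_uri, baseline_uri, output_uri, model_name, headers):
    """Create the DataConfig, ModelConfig, and SHAPConfig objects for Clarify."""
    from sagemaker import clarify  # requires the SageMaker Python SDK

    data_config = clarify.DataConfig(
        s3_data_input_path=train_uri,
        s3_output_path=output_uri,
        label="Rings",
        headers=headers,
        dataset_type="text/csv",
    )
    model_config = clarify.ModelConfig(
        model_name=model_name,
        instance_type="ml.m5.xlarge",
        instance_count=1,
        accept_type="text/csv",
        content_type="text/csv",
    )
    shap_config = clarify.SHAPConfig(
        baseline=baseline_uri,  # S3 URI of the header-less baseline CSV
        num_samples=NUM_SAMPLES,
        agg_method="mean_abs",
    )
    return data_config, model_config, shap_config
```

The returned objects can then be passed to the run_explainability method of a SageMakerClarifyProcessor as data_config, model_config, and explainability_config.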

Clarify runs as a SageMaker Processing job. The job expects two inputs: your training data CSV and the analysis_config.json file, which contains the configuration parameters of Clarify.

We generate this JSON object later using an AWS Lambda function in our automated ML pipeline.

Visualize your SHAP results

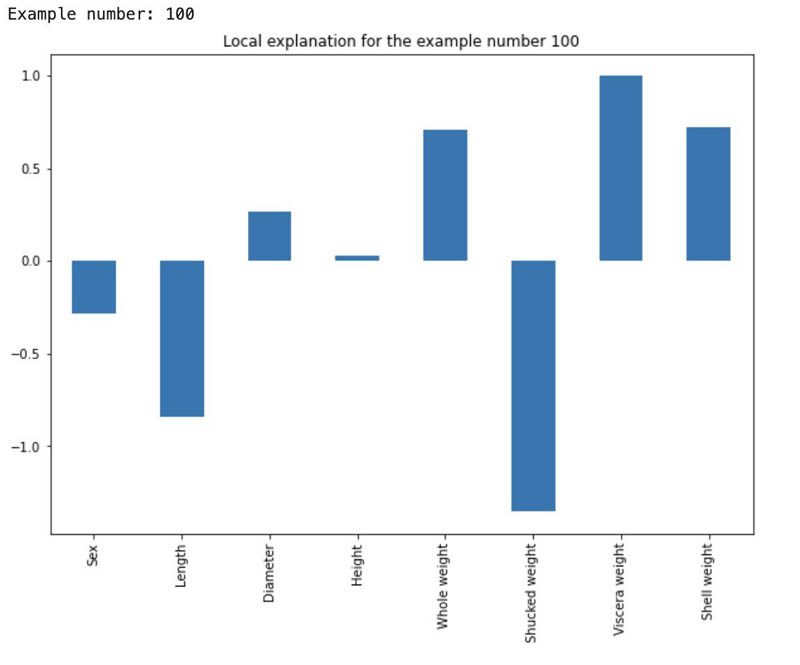

Finally, we want to show a visualization of the processing job running Clarify. In Amazon SageMaker Studio, the results are available on the Experiment tab. From here, choose Trial components on the drop-down menu. For more information, see Viewing the Explainability Report. The code presented here can be found in our example notebook:

We select 100 examples and read the out.csv file from Amazon S3 that was generated by Clarify. Plotting a bar chart with these examples results in the following figure. The higher the bar, the more impact the feature has on the target variable. The bars with positive values are associated with higher predictions in the target variable, and the bars with negative values are associated with lower predictions in the target variable. In this plot, the feature Shucked weight has the biggest impact on the target and it lowers the predictions.
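One way to summarize the local SHAP values before plotting is to average them per feature. The following sketch uses only the standard library and assumes that out.csv has a header row and one numeric column per feature; the sample text contains made-up numbers, and the exact column names in your file may differ.

```python
import csv
import io


def mean_shap_per_feature(csv_text, max_rows=100):
    """Average the SHAP values of each feature over the first max_rows rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    totals = {}
    count = 0
    for row in reader:
        if count >= max_rows:
            break
        for feature, value in row.items():
            totals[feature] = totals.get(feature, 0.0) + float(value)
        count += 1
    if count == 0:
        return {}
    return {feature: total / count for feature, total in totals.items()}


# Made-up SHAP values for two features and two examples.
sample = "Shucked weight,Shell weight\n-1.0,0.5\n-3.0,1.5\n"
means = mean_shap_per_feature(sample)

# Sort by absolute impact, largest first, as you would for a bar chart.
ranked = sorted(means.items(), key=lambda item: abs(item[1]), reverse=True)
print(ranked)
```

A plotting library can then draw the ranked values as bars, with negative bars for features that lower the prediction.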

Automate Clarify in a Step Functions pipeline

The previous section demonstrated how to use the Clarify processor. This section shows how to orchestrate building, training, explaining, and deploying an ML model using Step Functions and the corresponding Data Science Python SDK.

Step Functions can help orchestrate and automate sequences of ML tasks, including data collection, training, testing, evaluation of an ML model, and deployment of the model for inference. For this post, we use the AWS Step Functions Data Science Python SDK to help you develop these workflows. One feature of this SDK is the possibility to integrate SageMaker processing containers in Step Functions. We add Clarify as a processing job in our pipeline.

As a prerequisite, a pre-existing training workflow is assumed.

Integration of the Clarify processing job into the pipeline requires the following high-level steps:

- Provide two inputs:

  - The Clarify configuration file analysis_config.json, which is created by a Lambda function.

  - The input data to explain predictions for.

- Specify the output location (the Amazon S3 destination where the explainability report is saved).

- Create the Clarify processor Amazon Elastic Container Registry (Amazon ECR) container image.

Write the Clarify config file with Lambda

In the first part of this post, we generated a JSON object named analysis_config.json. Because we did this with the SageMaker Python SDK, it can’t be directly incorporated into the Step Functions pipeline, so we need to find another way to construct and save this object to Amazon S3. One building block in Step Functions is Lambda, which is also supported by the AWS Step Functions Python SDK. The following code defines the Lambda step in your Step Functions pipeline using the Python SDK:
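A Lambda step of this kind could be sketched as follows. The state name, the function name, and the payload keys are hypothetical; the stepfunctions import is placed inside the function so that the snippet loads without the SDK installed.

```python
def lambda_step_parameters(function_name, payload):
    """Build the parameters that tell Step Functions which Lambda function to invoke."""
    return {"FunctionName": function_name, "Payload": payload}


def make_config_step(function_name, payload):
    """Create the Lambda step that writes analysis_config.json."""
    from stepfunctions.steps import LambdaStep  # AWS Step Functions Data Science SDK

    return LambdaStep(
        state_id="Write Clarify config",
        parameters=lambda_step_parameters(function_name, payload),
    )


# Hypothetical payload; the keys must match what your Lambda function reads.
example_payload = {
    "bucket": "my-clarify-demo-bucket",
    "config_prefix": "clarify/config",
    "label": "Rings",
    "headers": ["Rings", "Sex", "Length"],
    "baseline_uri": "s3://my-clarify-demo-bucket/abalone/data/baseline.csv",
    "model_name": "abalone-model",
}

print(lambda_step_parameters("write-clarify-config", example_payload)["FunctionName"])
```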

This step also passes a payload that you can access directly within your Lambda function through the event variable. The function generates a JSON object analysis_config.json, including the target column, data headers, and location of your baseline dataset. For more information about this configuration, see Configure the Analysis. The function saves the file in your defined S3 bucket at the location specified with CLARIFY_CONFIG_PREFIX.

The following code highlights the major part of the Lambda function. It uses the parameters from the preceding payload to generate the JSON object:
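As a hedged sketch of such a function, the handler below builds the configuration from the event and writes it to Amazon S3. The event keys (bucket, config_prefix, headers, label, baseline_uri, model_name, num_samples), the instance type, and the default sample count are assumptions for illustration; boto3 is imported inside the handler because it is only needed when the function actually runs in Lambda.

```python
import json


def build_analysis_config(event):
    """Assemble the Clarify analysis configuration from the step payload."""
    return {
        "dataset_type": "text/csv",
        "headers": event["headers"],
        "label": event["label"],
        "methods": {
            "shap": {
                "baseline": event["baseline_uri"],
                "num_samples": event.get("num_samples", 20),
                "agg_method": "mean_abs",
            }
        },
        "predictor": {
            "model_name": event["model_name"],
            "instance_type": "ml.m5.xlarge",
            "initial_instance_count": 1,
            "accept_type": "text/csv",
            "content_type": "text/csv",
        },
    }


def lambda_handler(event, context):
    """Write analysis_config.json to S3 and return its location."""
    import boto3  # provided by the Lambda Python runtime

    config = build_analysis_config(event)
    key = f"{event['config_prefix']}/analysis_config.json"
    boto3.client("s3").put_object(
        Bucket=event["bucket"],
        Key=key,
        Body=json.dumps(config).encode("utf-8"),
    )
    return {"config_uri": f"s3://{event['bucket']}/{key}"}


example_event = {
    "headers": ["Rings", "Sex", "Length"],
    "label": "Rings",
    "baseline_uri": "s3://my-clarify-demo-bucket/abalone/data/baseline.csv",
    "model_name": "abalone-model",
}
print(json.dumps(build_analysis_config(example_event), indent=2))
```

Separating the pure build_analysis_config function from the upload makes the configuration easy to test locally before deploying the Lambda function.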

Specify the input to the processing job

The Clarify processing job expects two inputs:

- The dataset to calculate the explainability

- The configuration file analysis_config.json

Each of these is provided as a ProcessingInput object and then passed as a list. Keep in mind that the local_path for each ProcessingInput needs to be provided as /opt/ml/processing/input/data for data input and /opt/ml/processing/input/config for the configuration file. See the following code:
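A sketch of the two inputs could look like the following. In the SageMaker Python SDK, the container-side path is passed as the destination argument of ProcessingInput; the function name and the input names are illustrative assumptions.

```python
# Container paths expected by the Clarify processing container.
DATA_LOCAL_PATH = "/opt/ml/processing/input/data"
CONFIG_LOCAL_PATH = "/opt/ml/processing/input/config"


def build_clarify_inputs(data_uri, config_uri):
    """Return the list of inputs for the Clarify processing job."""
    from sagemaker.processing import ProcessingInput  # requires the SageMaker Python SDK

    return [
        ProcessingInput(
            source=data_uri,  # S3 URI of the dataset to explain
            destination=DATA_LOCAL_PATH,
            input_name="dataset",
        ),
        ProcessingInput(
            source=config_uri,  # S3 URI of analysis_config.json
            destination=CONFIG_LOCAL_PATH,
            input_name="analysis_config",
        ),
    ]
```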

Specify the output of the processing job

The Clarify processing container saves the output to the local path /opt/ml/processing/output. To save the output outside of the container, you need to specify an Amazon S3 path as the destination within the ProcessingOutput. See the following code:
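The output definition could be sketched as follows, with the container path as the source and your S3 location as the destination. The function name and the output name are assumptions for illustration.

```python
# Path inside the container where Clarify writes its results.
OUTPUT_LOCAL_PATH = "/opt/ml/processing/output"


def build_clarify_output(output_uri):
    """Return the output definition that copies the Clarify results to S3."""
    from sagemaker.processing import ProcessingOutput  # requires the SageMaker Python SDK

    return ProcessingOutput(
        source=OUTPUT_LOCAL_PATH,
        destination=output_uri,  # for example, an s3:// prefix for the report
        output_name="analysis_result",
    )
```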

Create a SageMaker processor job

The AWS Step Functions Python SDK can’t use the SageMakerClarifyProcessor class from the SageMaker Python SDK directly. Instead, we define a SageMaker processor job that runs the Clarify image from Amazon ECR:
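A generic processor that runs the Clarify image could be sketched as follows. The function name, instance type, and instance count are illustrative assumptions; the image URI is looked up for your Region with the SageMaker Python SDK.

```python
def build_clarify_processor(role_arn, region, instance_type="ml.m5.xlarge"):
    """Create a generic SageMaker processor that runs the Clarify container image."""
    # Both imports require the SageMaker Python SDK.
    from sagemaker import image_uris
    from sagemaker.processing import Processor

    # Look up the Clarify image for the given Region.
    image_uri = image_uris.retrieve("clarify", region)

    return Processor(
        role=role_arn,
        image_uri=image_uri,
        instance_count=1,
        instance_type=instance_type,
    )
```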

Add the processor to the pipeline

Now that the inputs, outputs, and processing container are defined, we can input them into the ProcessingStep of the AWS Step Functions Python SDK:
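Wiring these pieces into a processing step could look like the following sketch. The function name and state name are hypothetical, and the processor, inputs, and outputs are expected to be the objects described in the previous sections.

```python
def build_clarify_step(processor, job_name, inputs, outputs):
    """Wrap the Clarify processor in a Step Functions processing step."""
    from stepfunctions.steps import ProcessingStep  # AWS Step Functions Data Science SDK

    return ProcessingStep(
        state_id="Clarify Processing",
        processor=processor,
        job_name=job_name,  # must be unique for every run
        inputs=inputs,      # list of ProcessingInput objects
        outputs=outputs,    # list of ProcessingOutput objects
    )
```

Passing the job name in from outside the function makes it straightforward to supply a fresh name for each workflow run.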

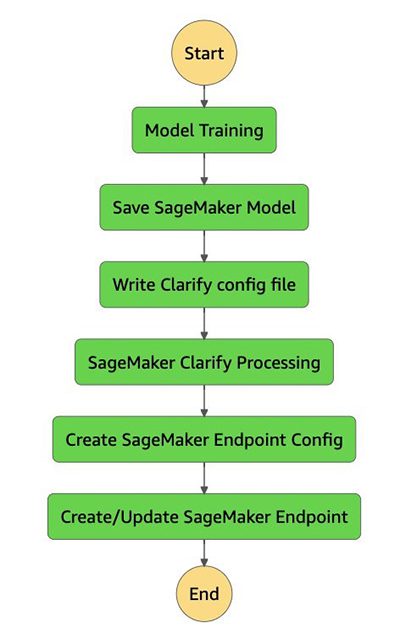

Create the workflow chain

In the example notebook from the GitHub repo, we also define other steps like model training, saving it to SageMaker, and creating an endpoint configuration with an endpoint. The following code demonstrates how you can chain these steps together programmatically:
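Chaining could be sketched as follows. The helper names and the step names in the usage note are hypothetical; the actual steps and their order are defined in the example notebook.

```python
def order_steps(steps_by_name, order):
    """Return the step objects in the requested order; unknown names raise a KeyError."""
    return [steps_by_name[name] for name in order]


def build_chain(steps_by_name, order):
    """Link the steps so that each one runs after the previous one succeeds."""
    from stepfunctions.steps import Chain  # AWS Step Functions Data Science SDK

    return Chain(order_steps(steps_by_name, order))
```

For example, you might call build_chain with a dictionary of your step objects and an order such as training, model creation, configuration Lambda, Clarify processing, endpoint configuration, and endpoint creation.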

You can enrich your Step Functions pipeline by adding choice states that check whether your train-validation metric satisfies certain business objectives. For an example, see the following GitHub repo.

Create and run the workflow

Finally, you create and run your workflow. Make sure that all your SageMaker resources like the training job and model have unique names. A good way of doing this is by appending the current date and time:
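A small helper for such names could look like the following sketch. The helper name, the prefixes, and the timestamp format are our own choices; the format uses only digits and hyphens so that the result stays within the characters SageMaker accepts for resource names.

```python
from datetime import datetime, timezone


def unique_name(prefix, now=None):
    """Append a UTC timestamp to the prefix to make a resource name unique."""
    now = now or datetime.now(timezone.utc)
    return f"{prefix}-{now.strftime('%Y-%m-%d-%H-%M-%S')}"


# Hypothetical prefixes for the resources created by one workflow run.
training_job_name = unique_name("abalone-training")
clarify_job_name = unique_name("abalone-clarify")

print(training_job_name)
print(clarify_job_name)
```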

You can check whether your pipeline ran successfully by navigating to the Step Functions console. Choose the pipeline you created and choose the most recent run. After about 45 minutes, depending on the size of your data, you should see something similar to the following diagram.

Cleanup

To avoid incurring future charges, delete the resources:

- Amazon SageMaker Endpoint

- AWS CloudFormation stack

Conclusion

In this post, we presented a way to use Clarify with your scikit-learn ML model. The approach is similar when bringing your own container. We also provided an example of how you can add Clarify to your Step Functions pipeline through a processing container. We further introduced a Lambda function that writes the analysis_config.json file, which is then used by the Clarify processing job.

Clarify is used by many customers already. One of them is Deutsche Fußball Liga (DFL). With the Bundesliga Match Fact xGoals, the DFL can assess the probability of a player scoring a goal when shooting from any position on the field. For more information about how Clarify helps explain Match Facts, see Explaining Bundesliga Match Facts xGoals using Amazon SageMaker Clarify.

About the Authors

Sabina Przioda is a Data Scientist with AWS Professional Services and is passionate about digging into the data. She helps her customers generate insights out of data and transform them into actionable results. Besides having a deep interest in Data Science, she loves traveling around the world.


Michael Wallner is a Global Data Scientist with AWS Professional Services and is passionate about enabling customers on their AI/ML journey in the cloud to become AWSome. Besides having a deep interest in Amazon Connect, he likes sports and enjoys cooking.

