Hyundai reduces ML model training time for autonomous driving models using Amazon SageMaker

Hyundai Motor Company, headquartered in Seoul, South Korea, is one of the largest car manufacturers in the world. The company has been investing heavily in people and resources in the race to develop self-driving cars, also known as autonomous vehicles.

One of the techniques often used in autonomous driving is semantic segmentation, the task of labeling every pixel of an image with an object class such as road, person, car, building, vegetation, or sky. In a typical development cycle, the team at Hyundai Motor Company periodically tests the model's accuracy and gathers additional images for specific situations where predictive performance is insufficient. This can be a challenge, however, because there is often not enough time to prepare all the new data while still leaving enough time to train the model and meet scheduled deadlines. Together with the Amazon ML Solutions Lab, Hyundai Motor Company solved this problem by significantly accelerating training with the scalable AWS Cloud and Amazon SageMaker, including the new SageMaker library for data parallelism.

Solution overview

SageMaker is a fully managed ML platform that solves customer challenges by reducing the "heavy lifting" of managing distributed compute infrastructure and of monitoring and debugging training jobs. For this use case, we use the SageMaker data parallelism library and Amazon SageMaker Debugger to solve Hyundai Motor Company's technical challenge and meet their business goals cost-effectively.

SageMaker provides distributed training libraries for both data parallelism and model parallelism. In this case, the model fits into the memory of a single GPU, but the training dataset is large, so one epoch takes too long on a single GPU. This is a typical case in which data parallel distributed training can reduce the overall duration of the training job. SageMaker data parallelism accomplishes this by splitting the training data across multiple GPU instances and training a copy of the same model on each GPU with its assigned portion of the data. The SageMaker data parallelism library is designed to exploit the high-speed AWS network infrastructure, providing near-linear scalability as more GPUs are added.
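To make the idea concrete, here is a minimal pure-Python sketch of data parallelism in general, not the SageMaker library's implementation. The dataset is split into one shard per worker (a strided split similar in spirit to PyTorch's `DistributedSampler`), each worker computes a gradient on its own shard, and an averaging step stands in for the AllReduce collective that keeps the model copies in sync. The toy model `y = w * x` and all function names are hypothetical.

```python
from typing import List, Sequence


def shard(indices: Sequence[int], rank: int, world_size: int) -> List[int]:
    """Return the indices assigned to one worker (strided split)."""
    return list(indices[rank::world_size])


def local_gradient(w: float, xs: List[float], ys: List[float]) -> float:
    """Gradient of the mean squared error for the toy model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def allreduce_mean(values: List[float]) -> float:
    """Stand-in for AllReduce: every worker ends up with the average."""
    return sum(values) / len(values)


def data_parallel_gradient(w: float, xs: List[float], ys: List[float], world_size: int) -> float:
    """Compute per-worker gradients on separate shards, then average them."""
    per_worker = []
    for rank in range(world_size):
        idx = shard(range(len(xs)), rank, world_size)
        per_worker.append(local_gradient(w, [xs[i] for i in idx], [ys[i] for i in idx]))
    return allreduce_mean(per_worker)


if __name__ == "__main__":
    xs = [float(i) for i in range(1, 9)]
    ys = [3.0 * x for x in xs]
    full = local_gradient(1.0, xs, ys)
    parallel = data_parallel_gradient(1.0, xs, ys, world_size=4)
    print(full, parallel)  # -102.0 -102.0
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so every model copy applies the same update while each worker only touches a quarter of the data.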

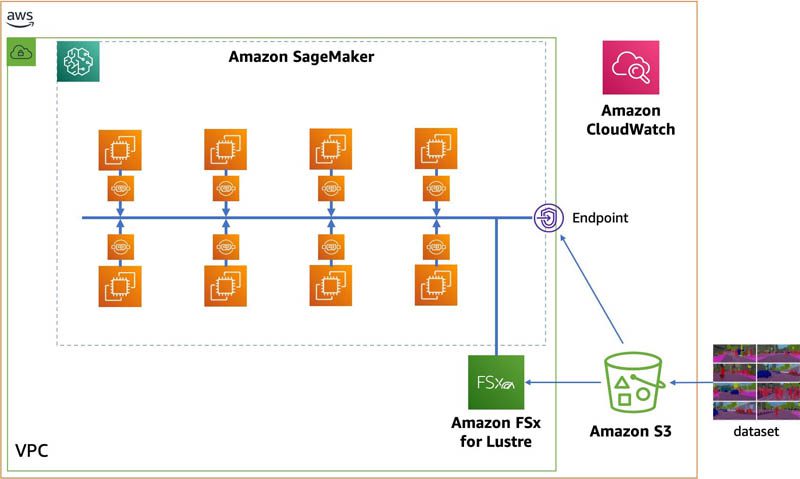

The training architecture uses SageMaker and optionally uses Amazon FSx for Lustre for data storage, with Amazon Simple Storage Service (Amazon S3) as permanent data storage. We converted the PyTorch DataParallel-based training code to the SageMaker data parallelism library with only a few lines of code changes, and achieved up to 93% scaling efficiency with 8 GPU instances, or 64 GPUs in total. The following diagram depicts the AWS architecture deployed for distributed training:
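Scaling efficiency compares measured multi-GPU throughput with perfect linear scaling of single-GPU throughput. The following sketch shows the arithmetic. The throughput numbers are hypothetical, chosen only to reproduce a 93% efficiency on 64 GPUs; they are not measurements from this project.

```python
def scaling_efficiency(single_gpu_throughput: float, multi_gpu_throughput: float, num_gpus: int) -> float:
    """Fraction of the ideal linear speedup achieved (1.0 means perfectly linear)."""
    ideal = single_gpu_throughput * num_gpus
    return multi_gpu_throughput / ideal


# Hypothetical: 10 images/s on one GPU, 595.2 images/s on 64 GPUs
eff = scaling_efficiency(10.0, 595.2, 64)
print(f"{eff:.0%}")  # 93%
```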

Unlike single-GPU training, multi-GPU or distributed training can reveal underlying performance issues that don't appear on a single GPU. So it's important to monitor resource utilization along with training metrics, both to make full use of expensive GPU resources and to achieve the desired model performance.

SageMaker Debugger and its profiling capabilities allow deep learning scientists and engineers to monitor, trace, and analyze system-related or model-related performance issues while a training job is running. Enabling debugging output doesn't require any code changes in the training script. Amazon SageMaker Studio provides real-time monitoring and visualization, and you can access the collected debugging and profiling data through API calls for custom visualization or analysis. You can turn the profiler on or off, and even change the profiling configuration while a training job is in progress, to minimize the overhead introduced by Debugger's framework-level profiling.
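As a sketch of the on/off workflow, assuming the SageMaker Python SDK v2 and an estimator whose training job is already running, the estimator's `disable_profiling` and `update_profiler` methods adjust profiling in place. The helper name `adjust_profiling` and the default interval are illustrative. Debugger's framework profiling has since been deprecated for recent framework versions, so check the documentation for your SDK and framework version.

```python
def adjust_profiling(estimator, enable: bool, interval_ms: int = 500) -> None:
    """Turn Debugger profiling off, or on again with a new system-monitor interval.

    `estimator` is assumed to be a SageMaker SDK v2 Estimator whose
    training job is currently in progress.
    """
    if enable:
        # Re-enable system monitoring at the requested sampling interval
        estimator.update_profiler(system_monitor_interval_millis=interval_ms)
    else:
        # Stop collecting system and framework metrics for this job
        estimator.disable_profiling()
```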

Distributed training using the SageMaker data parallelism library

To use the SageMaker data parallelism library, only a small code change is needed: initialize the library and wrap the model with the SageMaker DistributedDataParallel class. The following code example shows how this is done in a PyTorch training script. The API is similar to PyTorch's DistributedDataParallel.
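The following is a sketch of those changes, based on the `smdistributed.dataparallel` PyTorch API as documented for library releases around the time of this post (newer releases instead register an `smddp` backend for `torch.distributed`). The function `setup_data_parallel` and its arguments are hypothetical. Imports happen inside the function so the file can be loaded on a machine without the library, which ships in SageMaker's PyTorch training containers.

```python
def setup_data_parallel(model, dataset, batch_size: int):
    """Initialize smdistributed.dataparallel, shard the data, and wrap the model."""
    # Imported lazily: the library is provided by SageMaker's PyTorch containers
    import torch
    import smdistributed.dataparallel.torch.distributed as dist
    from smdistributed.dataparallel.torch.parallel.distributed import (
        DistributedDataParallel as DDP,
    )
    from torch.utils.data import DataLoader
    from torch.utils.data.distributed import DistributedSampler

    dist.init_process_group()            # SageMaker launches one process per GPU
    local_rank = dist.get_local_rank()   # GPU index on this instance
    torch.cuda.set_device(local_rank)

    # Each process reads a distinct shard of the dataset
    sampler = DistributedSampler(dataset, num_replicas=dist.get_world_size(), rank=dist.get_rank())
    loader = DataLoader(dataset, batch_size=batch_size, sampler=sampler)

    # Gradients are averaged across all GPUs during backward()
    model = DDP(model.cuda(local_rank))
    return model, loader, sampler
```

In the training loop, call `sampler.set_epoch(epoch)` at the start of each epoch so shuffling differs between epochs, and typically save checkpoints only from rank 0.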

If you're an experienced user of PyTorch or TensorFlow distributed training, you might ask "How do I set up a cluster for distributed training, and how do I start training processes on each instance in the cluster?" In SageMaker, all you need to do is specify the number of instances and the instance type, and tell SageMaker which distributed training strategy to use. SageMaker takes care of the heavy lifting, and this configuration applies to both PyTorch and TensorFlow. See the following example code:
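A minimal launcher sketch, assuming the SageMaker Python SDK v2: the `distribution` argument enables the data parallelism library, and SageMaker provisions the cluster and starts one training process per GPU. The entry point name, framework version, and helper name are assumptions; the instance count and type match the 8 instances (64 GPUs) mentioned earlier.

```python
def make_data_parallel_estimator(role_arn: str):
    """Build a PyTorch estimator that runs the SageMaker data parallelism library."""
    from sagemaker.pytorch import PyTorch  # SageMaker Python SDK v2

    return PyTorch(
        entry_point="train.py",          # hypothetical training script
        role=role_arn,
        framework_version="1.8.1",        # assumed; use a version the library supports
        py_version="py36",
        instance_count=8,                 # 8 instances x 8 GPUs = 64 GPUs
        instance_type="ml.p3.16xlarge",
        distribution={"smdistributed": {"dataparallel": {"enabled": True}}},
    )
```

Calling `.fit(...)` on the returned estimator starts the job. The same `distribution` dictionary is accepted by the SDK's TensorFlow estimator.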

Now that we have updated the training script, we need to decide how to access the dataset in the S3 bucket. The most common method is to let SageMaker copy the dataset from your S3 bucket to the attached storage or the internal NVMe SSD storage when the training job starts. This storage doesn't persist across training jobs. This method is called SageMaker File mode.

In our case, the dataset is about 300 GB in total and consists of a large number of files, so copying it to the training instances takes about 1 hour before model training can begin. The training script only starts after this copy is complete. This loading time is negligible for large-scale training runs that take days, weeks, or even longer, but during the development and testing phases it matters.
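A quick calculation shows why the copy time matters during development but not for long runs. This sketch uses the roughly 60-minute copy from the text and the 6-minute epoch time reported in the conclusion; the epoch counts for the two runs are hypothetical.

```python
def startup_overhead_fraction(copy_minutes: float, minutes_per_epoch: float, epochs: int) -> float:
    """Share of a job's wall-clock time spent copying data before training starts."""
    training_minutes = minutes_per_epoch * epochs
    return copy_minutes / (copy_minutes + training_minutes)


short_dev_run = startup_overhead_fraction(60, 6, 10)   # 0.5: half the job is waiting
long_run = startup_overhead_fraction(60, 6, 1000)      # about 0.0099: under 1%
```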

To avoid being slowed down by this, we can take one of the following actions:

- Reduce the size of the dataset

- Use SageMaker Pipe mode, which streams data rather than copying it

- Use FSx for Lustre rather than copying data from Amazon S3

We chose FSx for Lustre for repeated experiments. We created an FSx for Lustre file system from the data in an S3 bucket and attached it to the training instances for each training job. Because the FSx for Lustre file system persists across SageMaker training jobs (unlike the storage attached to training instances), we can run multiple experiments without any initialization delay.
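A sketch of pointing a training channel at FSx for Lustre, assuming the SageMaker Python SDK v2 `FileSystemInput` class. The file system ID, mount name, data path, and helper name are placeholders, not values from this project.

```python
def fsx_lustre_input(file_system_id: str, mount_name: str, data_path: str):
    """Describe an FSx for Lustre file system as a SageMaker training input channel."""
    from sagemaker.inputs import FileSystemInput  # SageMaker Python SDK v2

    return FileSystemInput(
        file_system_id=file_system_id,      # e.g. "fs-0123456789abcdef0" (placeholder)
        file_system_type="FSxLustre",
        directory_path=f"/{mount_name}/{data_path.strip('/')}",
        file_system_access_mode="ro",       # training only reads the dataset
    )
```

The channel is passed as `estimator.fit({"train": fsx_lustre_input(...)})`. Because the file system lives in a VPC, the estimator also needs `subnets` and `security_group_ids` that can reach it.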

After we completed the code conversion and confirmed that the training code ran without issues, we switched to File mode so that we could use the internal NVMe SSD storage for optimal I/O performance. This is also easily done by changing the SageMaker estimator configuration. Again, no change to the training script is needed. See the following code:
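A sketch of the File mode channel, assuming the SageMaker Python SDK v2 `TrainingInput` class. The S3 URI and helper name are hypothetical.

```python
def s3_file_mode_input(s3_uri: str):
    """Channel that copies the S3 dataset to the instances' local storage before training."""
    from sagemaker.inputs import TrainingInput  # SageMaker Python SDK v2

    return TrainingInput(s3_data=s3_uri, input_mode="File")
```

For example, `estimator.fit({"train": s3_file_mode_input("s3://my-bucket/train/")})`. If the channel keeps the same name as the FSx channel, SageMaker exposes the data at the same local path (`SM_CHANNEL_TRAIN`), which is why the training script itself doesn't change.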

Training performance analysis and tuning

No training code changes are required to use Debugger. You either configure Debugger when you define the estimator, or enable or disable it through Studio or the Debugger API while a training job is running. To get the full picture of training job performance, we enabled Debugger system profiling of CPU utilization, GPU utilization, GPU memory utilization, and I/O wait at 500-millisecond intervals through the SageMaker estimator. This is done by defining a ProfilerConfig and passing it to the estimator:
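A sketch of such a configuration, assuming the SageMaker Python SDK v2 `ProfilerConfig` and `FrameworkProfile` classes. The 500 ms interval comes from the text; the profiled step window and helper name are assumptions.

```python
def make_profiler_config():
    """System metrics every 500 ms plus framework-level profiling for a short step window."""
    from sagemaker.debugger import FrameworkProfile, ProfilerConfig  # SDK v2

    return ProfilerConfig(
        system_monitor_interval_millis=500,  # CPU, GPU, GPU memory, and I/O wait sampling
        framework_profile_params=FrameworkProfile(start_step=5, num_steps=10),  # assumed window
    )
```

The result is passed to the estimator constructor, for example `PyTorch(..., profiler_config=make_profiler_config())`. Limiting framework profiling to a few steps keeps its overhead small while system metrics cover the whole job.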

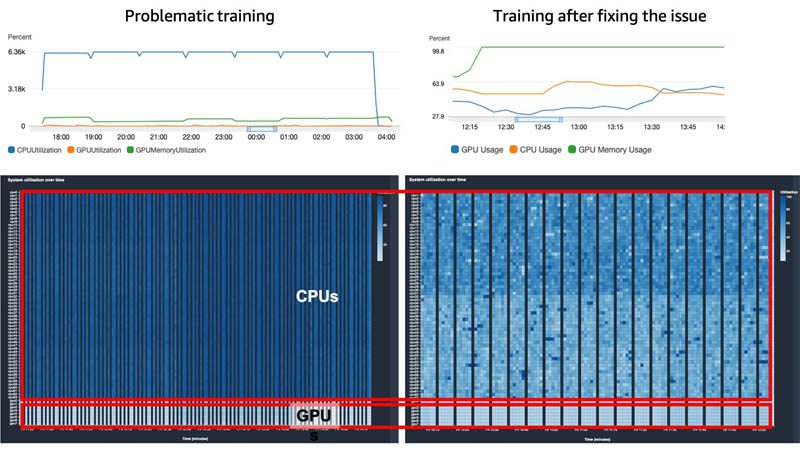

We monitored system resource utilization with Debugger's visualizations in Studio and found an abnormal pattern: each training step took longer in multi-GPU training than in single-GPU training, and the CPUs stayed at 100% utilization throughout multi-GPU training while the GPUs were underutilized. We identified this pattern quickly, while training was still in progress, from the CPU and GPU utilization heatmap in Studio. We then performed root cause analysis with the help of Debugger's framework profiling feature, which provides Python-level and deep learning framework-level profiling output.

The Amazon ML Solutions Lab and Hyundai Motor Company dove deep into the Debugger data and the training code and found the root cause in the custom data loader. The same problem caused no performance overhead in single-GPU training. With the CPU starvation issue resolved, system resource utilization returned to normal and training performance improved: multi-GPU training became twice as fast with the same GPU resources.
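The post doesn't detail the data loader bug, so the following is only a hypothetical illustration of one common way a loader that works fine on one GPU can starve the CPUs under data parallelism. Each GPU gets its own training process, so any per-process worker count is multiplied by the number of GPUs on the instance. The vCPU and GPU counts match an ml.p3.16xlarge (64 vCPUs, 8 GPUs); the function names and the one-vCPU reserve per process are assumptions.

```python
def total_loader_processes(gpus_per_instance: int, workers_per_process: int) -> int:
    """Data loader worker processes on one instance (one training process per GPU)."""
    return gpus_per_instance * workers_per_process


def worker_budget(vcpus: int, gpus_per_instance: int, reserved_per_process: int = 1) -> int:
    """Workers each training process can use without oversubscribing the vCPUs."""
    return max(0, vcpus // gpus_per_instance - reserved_per_process)


VCPUS, GPUS = 64, 8  # ml.p3.16xlarge

# A loader that sizes itself to all vCPUs is fine in a single process...
naive = total_loader_processes(GPUS, VCPUS)   # 512 workers competing for 64 vCPUs
oversubscription = naive / VCPUS              # 8.0
# ...but under data parallelism each process should take only its share
budget = worker_budget(VCPUS, GPUS)           # 7
```

In PyTorch, such a budget would be passed as `num_workers` to `torch.utils.data.DataLoader` in each training process.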

The following figure shows the CPU and GPU utilization graphs and heatmaps. The left side is from the problematic training run, and the right side is from the run with the fix applied.

Conclusion

In this post, we described the challenges of this complex use case and how we used the SageMaker data parallelism library to speed up training. We also shared real-world techniques for identifying bottlenecks and optimizing training performance. As a result, we achieved 10 times faster training with just five times as many instances.

“We use computer vision models to do scene segmentation, which is important for scene understanding,” said Jinwook Choi, Senior Research Engineer at Hyundai Motor Company. “It used to take 57 minutes to train the model for one epoch, which slowed us down. Using Amazon SageMaker’s data parallelism library and with the help of Amazon ML Solutions Lab, we were able to train in 6 minutes with optimized training code on five ml.p3.16xlarge instances. With the 10 times reduction in training time, we can spend more time preparing data during the development cycle.”
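The headline numbers can be checked with simple arithmetic. Going from 57 to 6 minutes per epoch is a 9.5x speedup, rounded to 10 times in the text, while the instance count grew 5x. The gain beyond linear scaling is consistent with the roughly 2x improvement from the data loader fix described earlier. The single-instance baseline is inferred from "five times as many instances" and is an assumption.

```python
def speedup(baseline_minutes: float, new_minutes: float) -> float:
    """How many times faster the new configuration completes one epoch."""
    return baseline_minutes / new_minutes


epoch_speedup = speedup(57, 6)                       # 9.5
instance_ratio = 5 / 1                               # five instances vs. an assumed single-instance baseline
gain_per_instance = epoch_speedup / instance_ratio   # 1.9, i.e. better than linear scaling alone
```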

About Amazon ML Solutions Lab

Amazon ML Solutions Lab pairs your team with ML experts to help you identify and implement your organization’s highest-value ML opportunities. If you’d like help with accelerating your use of ML in your products and processes, please contact the Amazon ML Solutions Lab.

About the Authors

Muhyun Kim is a data scientist at Amazon Machine Learning Solutions Lab. He solves customers' business problems by applying machine learning and deep learning, and also helps them build their skills.

Jiyang Kang is a deep learning architect at Amazon Machine Learning Solutions Lab. With experience designing global enterprise workloads on AWS, he is responsible for designing and implementing ML solutions for customers’ new business problems.

Youngjoon Choi is a Solutions Architect for AWS, helping customers design and build their use cases using AWS services. Drawing on previous experience as a data scientist, Youngjoon covers a wide range of AI/ML use cases for enterprise customers, including manufacturing, healthcare, and financial services.

Aditya Bindal is a Senior Product Manager for AWS Deep Learning. He works on products that make it easier for customers to train deep learning models on AWS. In his spare time, he enjoys spending time with his daughter, playing tennis, reading historical fiction, and traveling.

Nathalie Rauschmayr is an Applied Scientist at AWS, where she helps customers develop deep learning applications.

Jongmo Kim is a deep learning research engineer at Hyundai Motor Company, working on developing autonomous driving/parking products.