Personalizing wellness recommendations at Calm with Amazon Personalize

This is a guest post by Shae Selix (Staff Data Scientist at Calm) and Luis Lopez Soria (Sr. AI/ML Specialist SA at AWS).

Today, content is proliferating. It’s being produced in many different forms by a host of content providers, both large and small. Whether it’s on-demand video, music, podcasts, or other forms of rich media, consumers are overwhelmed by choice on a regular basis. Amid this abundance, Calm is on a mission to make the world happier and healthier, and it pursues this goal by delivering its top-rated app for meditation and sleep to users around the world.

Impressively, Calm was named the “App of the Year” in 2017, after reaching almost 10 million signups with a team of fewer than 20 people. As Calm has scaled, so has its mission to diversify its content library beyond meditation and Sleep Stories.

Well-known celebrities, such as LeBron James, Harry Styles, and Ariana Grande, have contributed to a 10-times expansion of Calm’s content library. With this growth in content, customers have shared that it has become more challenging to find the content that is right for them. To address this, and to support customers’ expectations for personalized content, the team at Calm turned to Amazon Personalize.

Based on over 20 years of personalization experience at Amazon.com, Amazon Personalize enables you to improve customer engagement by powering personalized product and content recommendations and targeted marketing promotions. Amazon Personalize uses machine learning (ML) to create higher-quality recommendations for your websites and applications. You can get started without any prior ML experience and use simple APIs to easily build sophisticated personalization capabilities in just a few clicks.

Like other content streaming providers such as Amazon Prime Video, Calm embarked on a journey to provide in-app recommendations to help users discover the content best suited to their needs and interests. As a startup with a small ML team, Calm found that Amazon Personalize provided a powerful platform to launch their first set of recommendations. Deploying recommendations and collecting user feedback was much faster with Amazon Personalize than building a deep learning system from scratch.

The recommendations generated from Amazon Personalize led to a 3.4% increase in daily mindfulness practice among Calm’s members, a significant lift considering this custom metric encompasses various factors across engagement, retention, and customer personas. Each day, 100,000 people download Calm to start their meditation journeys. These results enable Calm to achieve their goal of helping people develop a mindfulness habit.

Calm’s content is unique and tailored for their audience

Unlike other content platforms, where content creators can submit content freely, Calm sets a high bar for the content featured on their platform. Calm’s mindfulness and creative teams record and carefully curate content to reflect what end users are looking for.

For example, some users engage with Calm only for Sleep Stories and have no interest in Soundscapes. Context matters too: Meditations are typically done while sitting in a quiet place, focused on the task at hand, whereas Music is often listened to while driving or studying.

Other users come to Calm to learn how to meditate, a new skill that must be learned in an orderly way. This means starting with short, guided meditations with explanations and reminders to focus on the present moment. More experienced meditators often prefer silence, apart from the ring of a bell to indicate how much time has passed.

Calm’s beginner content, like “7 Days of Calm” by Tamara or “How to Meditate” by Jeff Warren, is very popular, but entry-level sessions aren’t necessarily relevant recommendations for an experienced meditator. Conversely, a beginner may not be ready for the longer, less guided content.

In short, what makes Calm unique is this: many digital applications optimize their experiences to get users to engage for longer periods of time, but Calm’s goal is to help users form healthy habits, which may lead to less time spent in the app.

Calm’s mission, along with the idiosyncrasies in how content is consumed compared to other large applications, leads to a careful and considered approach to recommendations, not simply emulating what others have done.

Launching personalized recommendations at Calm

Calm’s first foray into recommended content was with Sleep Stories. Although meditations might need to be learned in a specific order, Sleep Stories are often interchangeable and users develop clear preferences (for example, for deeper-voiced narrators or stories about nature). Calm began its personalization journey by offering the most popular content while removing previously listened-to stories.
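The rule described above needs no trained model. It ranks stories by overall popularity and skips anything the listener has already heard. Here is a minimal Python sketch of it. It assumes listens arrive as hypothetical `(user_id, story_id)` pairs; the function name and data shape are illustrative, not Calm’s code.

```python
from collections import Counter
from typing import Iterable


def popular_unheard_stories(
    listens: Iterable[tuple[str, str]],  # hypothetical (user_id, story_id) pairs
    user_id: str,
    k: int = 5,
) -> list[str]:
    """Return the k most-listened Sleep Stories this user has not heard yet."""
    listens = list(listens)  # iterated twice below
    # Global popularity: total listens per story across all users.
    popularity = Counter(story for _, story in listens)
    # Stories this particular user has already listened to.
    heard = {story for uid, story in listens if uid == user_id}
    # most_common() sorts by descending count; drop stories already heard.
    return [story for story, _ in popularity.most_common() if story not in heard][:k]
```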

This simple approach allowed the small team to place recommendations in front of users without the need to train and deploy an ML model. However, users stopped engaging with this feature over time. Although the recommended stories were often the most clicked, they were rarely completed, meaning users wanted to try the recommendations but often switched to other stories that were more relevant to their needs. This indicated that a more sophisticated approach could fill a real user need.

Unlike static, rule-based systems, ML-powered personalization can adapt recommendations to each individual user at scale, and it works best with large volumes of data. With over 100 million app downloads, Calm operates at a scale where deep learning-based approaches become practical.

However, training and tuning the model, provisioning the necessary infrastructure, and deploying it was a daunting challenge for a small team. This is why Calm chose Amazon Personalize. It enabled the team to quickly get started with a highly scalable, ML-based recommender engine without a large upfront investment.

When Calm looked at what it would take to build the equivalent of Amazon Personalize in-house, they found they would need the following:

- A big data processing service to pull all historical data and split it into training and testing sets

- GPU-enabled servers to train deep learning models, plus all the effort needed to maintain that hardware

- Deep learning code to define and maintain recommender algorithms and models

- Experimentation frameworks and infrastructure to evaluate different model architectures and tune hyperparameters

- A large-scale scoring pipeline to score recommendations for millions of users a day

- Additional infrastructure to deploy and host real-time endpoints for data ingestion and inference

Additionally, these components needed to be integrated with Calm’s backend systems to display and test recommendations in mobile clients.

At large companies like Amazon, each of these components is handled by a different team. To avoid the complexity of doing it in-house and take advantage of features used by companies at this scale, Calm decided to try Amazon Personalize, a fully managed AI recommendations service.

Defining Calm’s Amazon Personalize model

The Amazon Personalize user-personalization recipe was a good fit for Calm’s use case. As a sequence model, the algorithm can learn the step-by-step process Calm’s users follow to learn meditation. We also know that new content, like a new Sleep Story from Harry Styles, is very popular with users when first added, and Amazon Personalize can capture quick shifts in user preferences using the real-time event tracker.
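Real-time updates flow through an event tracker. You create one with `create_event_tracker` on the `personalize` client, then stream interactions with `put_events` on the `personalize-events` client. The following is a minimal sketch of the streaming call. It assumes the client is passed in, so it can be exercised without AWS access, and it uses a hypothetical `listen` event type and hypothetical IDs.

```python
import datetime as dt
from typing import Any, Optional


def send_listen_event(
    events_client: Any,  # expected: boto3.client("personalize-events")
    tracking_id: str,    # returned by create_event_tracker
    user_id: str,
    session_id: str,
    item_id: str,
    event_type: str = "listen",  # hypothetical event type name
    sent_at: Optional[dt.datetime] = None,
) -> dict:
    """Stream one interaction to an Amazon Personalize event tracker."""
    event = {
        "eventType": event_type,
        "itemId": item_id,
        "sentAt": sent_at or dt.datetime.now(dt.timezone.utc),
    }
    request = {
        "trackingId": tracking_id,
        "userId": user_id,
        "sessionId": session_id,
        "eventList": [event],
    }
    events_client.put_events(**request)
    return request
```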

Given the fit of the architecture, the complexity of building such a model in house, and the fact that Calm’s data already lived in AWS, Calm moved forward with Amazon Personalize.

Before planning the technical architecture of our Amazon Personalize deployment, we defined our problem in terms of our business objective and set a clear path on how we could achieve it.

The main business objective was to increase user engagement. Calm’s north star metric is the average number of distinct days on which users complete content. Calm uses this metric rather than the total number of completed listens because a user who does 10 minutes of meditation a day is showing a positive signal towards a wellness-focused lifestyle. Calm isn’t trying to get users to engage for hours a day; that would run counter to its mental health mission.
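The sketch below shows how a distinct-days metric differs from a raw listen count. For each user, it counts the calendar days with at least one completion, then averages across users. The input shape and the use of naive (time-zone-free) timestamps are assumptions. The post doesn’t define the metric’s window or its time zone handling.

```python
from collections import defaultdict
from datetime import datetime
from typing import Iterable


def avg_distinct_completion_days(
    completions: Iterable[tuple[str, datetime]],  # hypothetical (user_id, completed_at)
) -> float:
    """Average number of distinct calendar days on which each user completed content."""
    days_by_user: dict[str, set] = defaultdict(set)
    for user_id, completed_at in completions:
        # Several completions on the same day collapse into one day.
        days_by_user[user_id].add(completed_at.date())
    if not days_by_user:
        return 0.0
    return sum(len(days) for days in days_by_user.values()) / len(days_by_user)
```

Three completions on the same evening count as one day. A user who meditates briefly every day therefore scores higher than one who listens for hours once.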

In addition to increasing this key engagement metric, a secondary business objective was to help users discover new content. Many of Calm’s users listen to the same Sleep Story or Soundscape they love over and over. To keep the Amazon Personalize model from simply recommending what users had already listened to, we limited the number of repeat listens in the training set.
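The post doesn’t say exactly how the cap was applied. Here is one plausible sketch. It assumes interactions are dicts keyed by Amazon Personalize field names and uses a hypothetical cap of three listens per user-item pair. It keeps each pair’s most recent listens, a choice made here only because recency matters to a sequence model.

```python
from collections import defaultdict
from typing import Iterable


def cap_repeat_listens(interactions: Iterable[dict], max_repeats: int = 3) -> list[dict]:
    """Keep at most max_repeats interactions per (USER_ID, ITEM_ID) pair.

    The most recent listens of each pair are kept; output is in timestamp order.
    max_repeats=3 is a hypothetical default, not a value from the post.
    """
    seen: dict[tuple[str, str], int] = defaultdict(int)
    kept = []
    # Walk newest-first so the latest listens of each pair fill the quota.
    for row in sorted(interactions, key=lambda r: r["TIMESTAMP"], reverse=True):
        key = (row["USER_ID"], row["ITEM_ID"])
        if seen[key] < max_repeats:
            kept.append(row)
        seen[key] += 1
    return sorted(kept, key=lambda r: r["TIMESTAMP"])
```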

Amazon Personalize has three main operational layers:

- Data layer – Customers ingest three types of data (interactions, items, and user metadata) in batch from Amazon Simple Storage Service (Amazon S3) or incrementally using PUT APIs.

- Training layer – Customers pick algorithms, known as recipes (such as user-personalization, personalized-ranking, and related items), that address specific use cases.

- Inference layer – Customers use their custom private models (solution versions) to generate recommendations. Amazon Personalize can serve recommendations in real time through managed endpoints known as campaigns, or in bulk through batch inference jobs that read from and write to Amazon S3.

Let’s explore Calm’s Amazon Personalize data layer setup:

- Interactions – A typical customer sequence for audio engagement could be: meditating with the Daily Calm, drifting off to Harry Styles’s Sleep Story, and grooving to Moses Sumney’s Transfigurations. Each of these listens represents a data point in the interactions dataset. We also provide Amazon Personalize with other event types, such as fully completed and favorited interactions, which are stronger signals than incomplete listens or item views.

- Items – Examples of content metadata fields are the narrator (Tamara has quite a fanbase, but other listeners swear by Jeff Warren), the length of the audio track, and when the track was added. These features help the model pick up signals from customer behavior (interactions), and they are also available for filtering at inference time.

- Users – Calm uses the user’s account tenure, favorite time of day to use the app, and the country where the user engages with the app as metadata. These features are important at training time to enhance the personalization experience for each user. Amazon Personalize limits user metadata to five columns, which is why this dataset is restricted to factors that could influence decision-making across the whole user base.
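Each dataset needs an Avro-format schema, passed as a JSON string to `create_schema`. Below is a minimal sketch of the three schemas. `USER_ID`, `ITEM_ID`, `TIMESTAMP`, `EVENT_TYPE`, and `CREATION_TIMESTAMP` are Amazon Personalize reserved field names. Every other field name is a hypothetical stand-in for the metadata described above.

```python
import json

NAMESPACE = "com.amazonaws.personalize.schema"
MAX_USER_METADATA_FIELDS = 5  # the five-column limit cited in the post


def avro_schema(name: str, fields: list[dict]) -> str:
    """Return an Amazon Personalize schema as an Avro JSON string."""
    return json.dumps({
        "type": "record",
        "name": name,
        "namespace": NAMESPACE,
        "fields": fields,
        "version": "1.0",
    })


interactions_schema = avro_schema("Interactions", [
    {"name": "USER_ID", "type": "string"},
    {"name": "ITEM_ID", "type": "string"},
    {"name": "TIMESTAMP", "type": "long"},
    {"name": "EVENT_TYPE", "type": "string"},  # e.g. listen, complete, favorite
])

items_schema = avro_schema("Items", [
    {"name": "ITEM_ID", "type": "string"},
    {"name": "NARRATOR", "type": "string", "categorical": True},  # hypothetical
    {"name": "DURATION_SECONDS", "type": "int"},                   # hypothetical
    {"name": "CREATION_TIMESTAMP", "type": "long"},  # when the track was added
])

# Hypothetical user metadata fields mirroring tenure, time of day, and country.
USER_METADATA = [
    {"name": "TENURE_BUCKET", "type": "string", "categorical": True},
    {"name": "PREFERRED_TIME_OF_DAY", "type": "string", "categorical": True},
    {"name": "COUNTRY", "type": "string", "categorical": True},
]
if len(USER_METADATA) > MAX_USER_METADATA_FIELDS:
    raise ValueError("too many user metadata fields")
users_schema = avro_schema("Users", [{"name": "USER_ID", "type": "string"}, *USER_METADATA])
```

Each string would then be passed as the `schema` argument of `personalize.create_schema(name=..., schema=...)`.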

Given that Calm’s use case is focused on delivering personalized recommendations to users, we chose the HRNN-Metadata recipe. Calm’s content library isn’t as large as those of other content platforms. However, its audio content is tagged with detailed metadata, like voice depth, topic (such as anxiety, self-care, or sleep), whether it’s good for kids, and more. With every new model training cycle, we also trained a popularity-count solution version to serve as a baseline for the Amazon Personalize offline metrics.
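The sketch below trains the model and its popularity baseline side by side. The two recipe ARNs are the public Amazon Personalize recipes matching the names in the post. The naming scheme, the 30-second solution poll, and the injected client are assumptions. The sketch also waits for each solution to become `ACTIVE` before starting a solution version. That wait is a conservative choice here, not something the post describes.

```python
import time
from typing import Any, Callable, Dict

# Public recipe ARNs for the two recipes named in the post.
RECIPES = {
    "hrnn-metadata": "arn:aws:personalize:::recipe/aws-hrnn-metadata",
    "popularity-baseline": "arn:aws:personalize:::recipe/aws-popularity-count",
}


def train_model_and_baseline(
    personalize: Any,  # expected: boto3.client("personalize")
    dataset_group_arn: str,
    run_id: str,
    sleep: Callable[[float], None] = time.sleep,
    poll_seconds: float = 30,
) -> Dict[str, str]:
    """Create one solution per recipe, start training, and return version ARNs by label."""
    version_arns = {}
    for label, recipe_arn in RECIPES.items():
        solution_arn = personalize.create_solution(
            name=f"{label}-{run_id}",  # hypothetical naming scheme
            datasetGroupArn=dataset_group_arn,
            recipeArn=recipe_arn,
        )["solutionArn"]
        # Wait until the solution itself is ACTIVE before training a version of it.
        while True:
            status = personalize.describe_solution(solutionArn=solution_arn)["solution"]["status"]
            if status == "ACTIVE":
                break
            if status == "CREATE FAILED":
                raise RuntimeError(f"solution {solution_arn} failed to create")
            sleep(poll_seconds)
        version = personalize.create_solution_version(solutionArn=solution_arn)
        version_arns[label] = version["solutionVersionArn"]
    return version_arns
```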

Calm’s architecture implementation

Prior to our Amazon Personalize deployment, our data engineering team at Calm had already invested heavily in analytics solutions on the AWS Cloud. We used Amazon Redshift as a central data warehouse and Amazon S3 as a scalable data lake, merging all application data with events and payments. An Airflow instance running on Kubernetes schedules and orchestrates all ETL and ML pipelines. In terms of training data volume and runtime, the Amazon Personalize pipeline was more complex than our previous ML pipelines.

Training

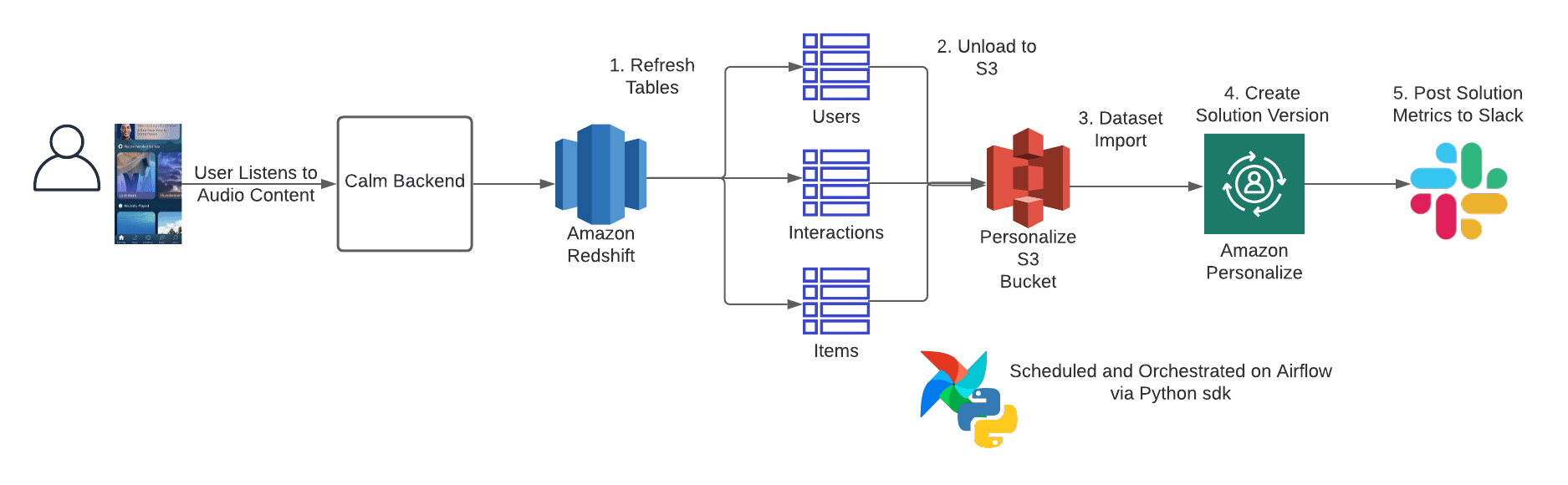

The following diagram illustrates the training workflow.

To train a model in Amazon Personalize, the service needs the following resources:

- A dataset group, which is the highest level of abstraction in the service, allowing you to encapsulate experiments

- Datasets (interactions, users, items), where you define each schema and import your data into the service

- Solutions configured with the recipes and hyperparameters to be used to create your solution versions (models)

We used the AWS SDK for Python (Boto3) to configure and monitor the service. All the required training data was already updated regularly in Amazon Redshift: interactions (user audio listens), users (metadata on our users), and items (metadata on content). To fit the data into a form (schema) that Amazon Personalize could consume, a series of SQL transforms unload the three datasets to Amazon S3. We used Jinja2 to parameterize our queries so we could easily adjust how far back to look for training interactions, which types of users to include, and which metadata fields to use.
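Below is a minimal sketch of one parameterized unload. The `analytics.audio_listens` table, its columns, the S3 prefix, and the IAM role are all hypothetical. The sketch uses Jinja2’s `Template.render` with Redshift `UNLOAD ... FORMAT AS CSV HEADER`, and it toggles the lookback window and a user-type filter. Values rendered into SQL this way should come from trusted pipeline configuration, never from user input.

```python
from jinja2 import Template

# Hypothetical table and column names; the post does not show Calm's queries.
# The inner SELECT avoids single quotes so it can sit inside UNLOAD's quoted
# string without escaping.
UNLOAD_INTERACTIONS = Template("""
UNLOAD ('
    SELECT user_id,
           content_id AS item_id,
           event_type,
           EXTRACT(EPOCH FROM listened_at)::BIGINT AS "timestamp"
    FROM analytics.audio_listens
    WHERE listened_at >= DATEADD(day, -{{ lookback_days }}, GETDATE())
    {%- if not include_trial_users %}
      AND is_trial_user = FALSE
    {%- endif %}
')
TO '{{ s3_prefix }}/interactions/part_'
IAM_ROLE '{{ iam_role_arn }}'
FORMAT AS CSV
HEADER;
""")


def render_interactions_unload(lookback_days: int, include_trial_users: bool,
                               s3_prefix: str, iam_role_arn: str) -> str:
    """Render the UNLOAD statement for one training run."""
    if lookback_days <= 0:
        raise ValueError("lookback_days must be positive")
    return UNLOAD_INTERACTIONS.render(
        lookback_days=lookback_days,
        include_trial_users=include_trial_users,
        s3_prefix=s3_prefix.rstrip("/"),
        iam_role_arn=iam_role_arn,
    )
```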

After the datasets and schemas are exported to Amazon S3, we create a new dataset group, datasets, and import jobs for the model. Creating fresh resources on every run makes each pipeline call idempotent, so it doesn’t require external configuration to proceed. After the solution version begins to train, the Airflow task calls DescribeSolutionVersion every 15 minutes to check the training status. When training is complete, Airflow posts the solution metrics to Slack so we can monitor them, and saves all the training details and ARNs to Amazon S3.
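The status check can be sketched as a polling loop. This sketch assumes an injected `boto3.client("personalize")` and an injectable `sleep` function so the loop can be tested. Posting to Slack and writing ARNs to Amazon S3 are left out because the post doesn’t describe those integrations.

```python
import time
from typing import Any, Callable

POLL_INTERVAL_SECONDS = 15 * 60  # the post polls every 15 minutes
FAILED_STATUSES = {"CREATE FAILED", "CREATE STOPPED"}


def wait_for_solution_version(
    personalize: Any,  # expected: boto3.client("personalize")
    solution_version_arn: str,
    sleep: Callable[[float], None] = time.sleep,
    interval: float = POLL_INTERVAL_SECONDS,
) -> dict:
    """Poll DescribeSolutionVersion until training ends, then return offline metrics."""
    while True:
        status = personalize.describe_solution_version(
            solutionVersionArn=solution_version_arn
        )["solutionVersion"]["status"]
        if status == "ACTIVE":
            break
        if status in FAILED_STATUSES:
            raise RuntimeError(f"{solution_version_arn} ended with status {status}")
        sleep(interval)  # still CREATE PENDING or CREATE IN_PROGRESS
    # Offline metrics computed by Amazon Personalize for the trained version.
    return personalize.get_solution_metrics(solutionVersionArn=solution_version_arn)["metrics"]
```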

Generating Calm recommendations: Batch inference

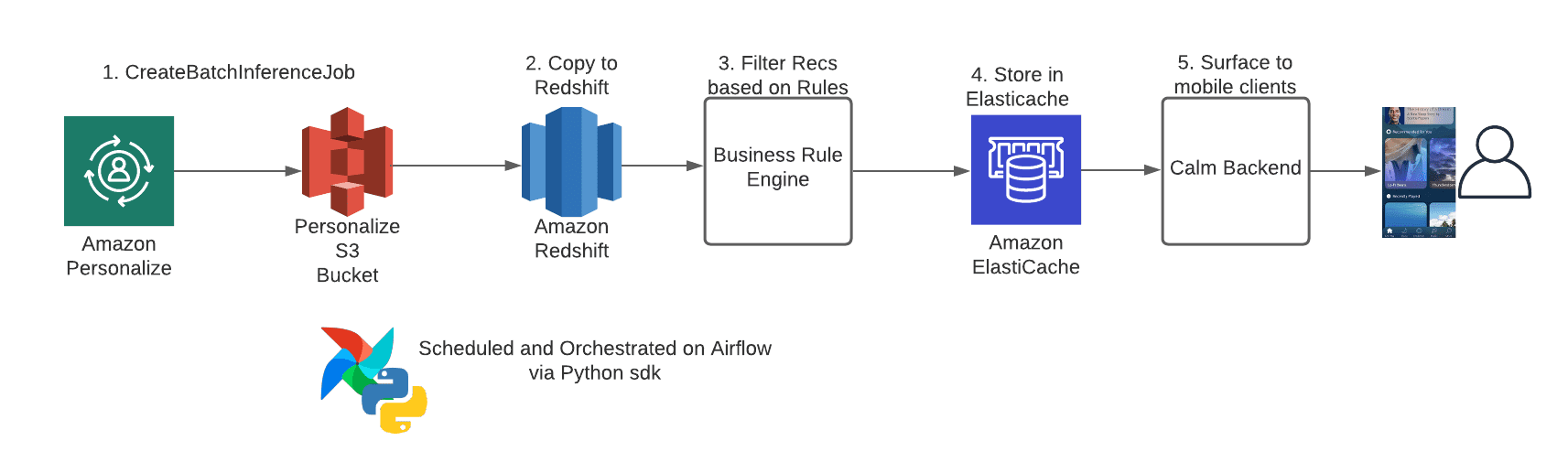

The following diagram illustrates the batch inference workflow.

We generate batch recommendations for each of our users every 2 days, requesting the maximum of 500 recommended items per user. Recent activity, subscription status, and active language determine who receives these recommendations. Amazon Personalize outputs batch recommendations as JSON lines, one row per user. Originally, we had a Python module convert the JSON lines to tabular format and load them into Amazon Redshift. We eventually realized the Amazon Redshift COPY command could ingest the JSON lines directly using a JSONPaths file. This saved us a step and moved some of the compute from our Kubernetes cluster to Amazon Redshift.
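The following sketch shows the flattening step that the COPY command later replaced. It assumes each output line looks like `{"input": {"userId": ...}, "output": {"recommendedItems": [...], "scores": [...]}}`. That matches the batch output format for user-personalization recipes as we understand it, so check it against your own job output. The `JSONPATHS` dictionary shows what a JSONPaths file for the COPY-based alternative might contain.

```python
import json
from typing import Iterable, Iterator


def flatten_batch_output(lines: Iterable[str]) -> Iterator[tuple[str, int, str, float]]:
    """Yield (user_id, rank, item_id, score) rows from batch inference JSON lines."""
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        if record.get("error"):
            continue  # skip users the batch job could not score
        user_id = record["input"]["userId"]
        output = record["output"]
        pairs = zip(output["recommendedItems"], output["scores"])
        for rank, (item_id, score) in enumerate(pairs, start=1):
            yield user_id, rank, item_id, score


# JSONPaths file for the COPY-based alternative. Redshift maps each path, in
# order, to a target column; an array value is loaded as a single JSON string.
JSONPATHS = {"jsonpaths": ["$.input.userId", "$.output.recommendedItems", "$.output.scores"]}
```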

One advantage of using batch recommendations instead of the real-time campaigns is that we could encode complex business logic into the recommendations before displaying them in front of users, beyond what is possible with Amazon Personalize filters. Our business rule engine performs the following logic:

- Aggregates individual audio recommendations into collections (like “7 Days of Calm”).

- Filters all recommendations to specific types, such as just Meditations, or only new content.

- Shuffles the recommendations so they stay fresh and diverse, even if a user’s engagement hasn’t changed. Before we added this shuffle, some users were receiving, for example, nothing but rain sounds at the top of their recommendations.
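The three rules can be sketched as a single pass over a user’s ranked list. The item-to-type and item-to-collection lookups, the top-N cutoff, and the seeded shuffle are hypothetical. They stand in for Calm’s rule engine, which the post does not show.

```python
import random
from typing import Mapping, Optional, Sequence


def apply_business_rules(
    recommended_items: Sequence[str],    # Personalize order, best first
    item_type: Mapping[str, str],        # hypothetical: item_id -> "meditation", "sleep", ...
    item_collection: Mapping[str, str],  # hypothetical: item_id -> parent collection id
    allowed_types: Optional[set] = None,
    top_n: int = 20,
    seed: Optional[int] = None,
) -> list[str]:
    """Filter by type, roll items up to collections, then shuffle the top slice."""
    results, seen = [], set()
    for item_id in recommended_items:
        if allowed_types is not None and item_type.get(item_id) not in allowed_types:
            continue
        # Replace a single session with its collection (e.g. "7 Days of Calm").
        entry = item_collection.get(item_id, item_id)
        if entry not in seen:
            seen.add(entry)
            results.append(entry)
        if len(results) == top_n:
            break
    random.Random(seed).shuffle(results)  # reorder for freshness and variety
    return results
```

The shuffle could be seeded with something like a hash of the user ID and the batch run date. The order would then stay stable between app opens but change from one batch run to the next.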

After the batch recommendations are processed with these business rules, they’re dropped into Amazon ElastiCache. This allows Calm’s backend to access the recommendations with sub-millisecond latency when the user opens the mobile client.
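Below is a sketch of the cache write, assuming ElastiCache for Redis and a redis-py client pointed at the cluster endpoint. The post doesn’t name the engine, the key format, or an expiry. The `recs:` key prefix and the three-day TTL are hypothetical; the TTL just outlives the 2-day regeneration cycle.

```python
import json
from typing import Any, Iterable, Mapping

# Hypothetical TTL: longer than the 2-day batch cycle, so keys don't expire between runs.
TTL_SECONDS = 3 * 24 * 60 * 60


def cache_recommendations(
    redis_client: Any,  # expected: a redis-py client for the ElastiCache endpoint
    recs_by_user: Mapping[str, Iterable[str]],
    key_prefix: str = "recs:",  # hypothetical key format
) -> int:
    """Write each user's final recommendation list to Redis as a JSON string."""
    pipe = redis_client.pipeline()  # batch SET commands into fewer round trips
    for user_id, items in recs_by_user.items():
        pipe.set(f"{key_prefix}{user_id}", json.dumps(list(items)), ex=TTL_SECONDS)
    pipe.execute()
    return len(recs_by_user)
```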

User testing and results

One major hurdle with recommendation models is that they are very challenging to evaluate during offline model development. Amazon Personalize provides offline metrics such as precision, normalized discounted cumulative gain, mean reciprocal rank, and coverage. These traditional out-of-sample metrics can only measure how well the model’s predictions match a holdout set. They can’t measure how a user would react to new and relevant recommendations surfaced within a personalized user experience. This is why online user testing is the gold standard for evaluating recommender systems. For more information on online testing, see Using A/B testing to measure the efficacy of recommendations generated by Amazon Personalize.

The first round of user tests we performed was in an email campaign. Displaying recommendations in email takes less effort than integrating them into a real-time application. Email templates are quicker to build than application UIs, and they don’t have the same scalability issues as pulling recommendations from a web service.

Every weekend, we sent a personalized “Weekly Winddown” email to our users, advertising our new content. For this randomized A/B test, the control group received hand-picked “what’s new” content in their email, whereas the treatment group received recommended “what’s new” content from Amazon Personalize. The treatment group was 1% more likely to complete content in the app within 24 hours of opening the email. This result was statistically significant, indicating that Amazon Personalize recommendations had a positive influence on our users’ behavior. The email test also helped us prove out the recommendation pipeline, and the new automation replaced the previously manual task of choosing new content for our emails.
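The post doesn’t name the significance test it used. One common choice for comparing completion rates between a control group and a treatment group is a two-proportion z-test. Here is a minimal standard-library sketch of it. Any counts you pass in are your own; none come from Calm.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both groups share one completion rate.

    Group a is the control and group b is the treatment; positive z favors b.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```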

After building out our in-app architecture, we ran a round of “dogfood” tests, in which Calm employees had early access to the “Recommended for You” carousel on the home screen of the mobile clients. We sent a survey to the early testers, asking detailed questions about how they responded to the in-app experience. This feedback proved invaluable for identifying silent bugs in our pipeline. For example, our kids-focused content is quite popular with younger users. However, when it appeared in adults’ recommended carousels, it seemed nonsensical, which can erode user trust.

For our full in-app randomized A/B/C test, we enrolled new users into one of three groups:

- Control group – They saw no change in the user experience

- First treatment group – They saw a “Recommended for You” carousel filled with popularity count recommendations

- Second treatment group – They had their carousel filled with recommendations from Amazon Personalize

This three-pronged test allowed us to disentangle whether any improvement in engagement came from the model specifically, or whether a simpler approach could have achieved similar results. Ultimately, the Amazon Personalize group saw a statistically significant lift of 3.4% over both other groups in our distinct days engagement metric, and this test was one of our most successful in 2020.
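The post doesn’t describe how users were assigned to groups. One common approach is to hash the user ID, so each new user lands in the same group on every app open. The sketch below assumes three equally sized arms and uses a hypothetical experiment name.

```python
import hashlib

GROUPS = ("control", "popularity", "personalize")


def assign_group(user_id: str, experiment: str = "recs-carousel-abc") -> str:
    """Deterministically map a user to one of three equally sized arms.

    Hashing the (hypothetical) experiment name together with the user ID keeps a
    user's arm stable across sessions and independent of other experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return GROUPS[int(digest, 16) % len(GROUPS)]
```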

Conclusion

For organizations aiming to deliver personalized customer journeys with ML recommender engines, the heavy lifting required to build robust, production-level systems can seem daunting. Amazon Personalize provides easy-to-use, high-performance algorithms plus all the infrastructure required to serve your customers at large scale, at a fraction of the cost of building it from scratch.

As discussed in this post, Calm has used Amazon Personalize not only to simplify previously manual tasks, but also to accelerate the development and deployment of high-performance models that provide wellness recommendations to millions of its customers.

If this post helps you or inspires you to use Amazon Personalize to improve your business metrics, please share your thoughts in the comments.

Additional resources

For more information about Amazon Personalize, see the following:

- Using A/B testing to measure the efficacy of recommendations generated by Amazon Personalize

- Scale session-aware real-time product recommendations on Shopify with Amazon Personalize and Amazon EventBridge

- Increasing the relevance of your Amazon Personalize recommendations by leveraging contextual information

- Enhancing recommendation filters by filtering on item metadata with Amazon Personalize

About the Authors

Shae Selix is a Staff Data Scientist at Calm, building ML services to promote meditation and sleep habits. He is a daily user of the Calm app and, outside work, is a student and teacher of yoga. As a Northern California native, he loves hiking, biking, and running through the coastlines and mountains in the Bay Area.

Luis Lopez Soria is an AI/ML specialist solutions architect working with the Amazon Machine Learning team. He works with AWS customers to help them adopt machine learning on a large scale. He enjoys playing sports, traveling around the world, and exploring new foods and cultures.
