Gamify Amazon SageMaker Ground Truth labeling workflows via a bar chart race

Labeling is an indispensable stage of data preprocessing in supervised learning. Amazon SageMaker Ground Truth is a fully managed data labeling service that makes it easy to build highly accurate training datasets for machine learning. Ground Truth helps improve the quality of labels through annotation consolidation and audit workflows. Ground Truth is easy to use, can reduce your labeling costs by up to 70% using automatic labeling, and provides options to work with labelers inside and outside of your organization.

This post explains how you can use Ground Truth partial labeling data loaded in Amazon Simple Storage Service (Amazon S3) to gamify labeling workflows. The core of the gamification approach is a bar chart race that shows the progress of the labeling workflow and highlights how the number of completed labels per worker evolves over time. The bar chart race can be sent periodically (such as daily or weekly). We present options to create and send your bar chart race manually or automatically.

This gamification approach to Ground Truth labeling workflows can allow you to:

- Speed up labeling

- Reduce delays in labeling by continuous monitoring

- Increase user engagement and user satisfaction

We have successfully adopted this solution for a healthcare and life sciences customer. The labeling job owner kept the internal labeling team engaged by sending a bar chart race daily, and the labeling job was completed 20% faster than planned.

Option 1: Manual chart creation

The first option for gamifying your Ground Truth labeling workflow with a bar chart race is to use an Amazon SageMaker notebook instance to fetch the partial labeling data, parse it, and create the bar chart race manually. You then save the chart to Amazon S3 and send it to the workers. The following diagram shows this workflow.

To create your bar chart race manually, complete the following steps:

- Create a Ground Truth labeling job and indicate an S3 bucket where the labeling data is continuously loaded.

- Create a SageMaker notebook instance.

- Attach the appropriate AWS Identity and Access Management (IAM) role to allow read access to the S3 bucket containing the outputs of the Ground Truth labeling job.

- Create a notebook using a conda_python3-based kernel, then install the required dependencies. You can run the following commands from the terminal after activating the appropriate environment:

- Import the required packages via the following code:

- Set up the SageMaker notebook instance to access the S3 bucket containing the Ground Truth labeling data (for this post, we use the bucket example-sagemaker-gt; S3 bucket names can contain only lowercase letters, numbers, periods, and hyphens):
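A minimal sketch of reading the partial results with boto3 follows. It assumes Ground Truth stores individual worker submissions as JSON files under annotations/worker-response/ inside the labeling job's output path; check the layout of your own bucket before relying on it. The function name, bucket, and prefix are hypothetical, and the S3 client is passed in as an argument so the function can be tried without AWS credentials.

```python
import json


def load_worker_responses(s3_client, bucket, prefix):
    """Read every worker-response JSON document under prefix in bucket.

    s3_client is expected to be a boto3 S3 client, for example boto3.client("s3").
    A paginator is used because list_objects_v2 returns at most 1,000 keys per call.
    """
    documents = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is missing from a page when no keys match the prefix.
        for obj in page.get("Contents", []):
            if not obj["Key"].endswith(".json"):
                continue  # skip manifests and other non-JSON objects
            response = s3_client.get_object(Bucket=bucket, Key=obj["Key"])
            documents.append(json.loads(response["Body"].read()))
    return documents


# Example usage in the notebook (hypothetical bucket and job names):
# import boto3
# docs = load_worker_responses(
#     boto3.client("s3"),
#     bucket="example-sagemaker-gt",
#     prefix="my-labeling-job/annotations/worker-response/",
# )
```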

- Analyze the partial Ground Truth labeling data:
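As a sketch of this analysis, the code below flattens the downloaded documents into one record per submitted answer and counts labels per worker. It assumes each worker-response document holds an answers list whose entries carry workerId and submissionTime fields; verify these field names against your own output. The function names and the sample documents are made up for illustration.

```python
from collections import Counter


def extract_submissions(documents):
    """Return one (worker_id, submission_time) tuple per answer in the documents."""
    records = []
    for doc in documents:
        # A document can hold several answers when multiple workers label the same object.
        for answer in doc.get("answers", []):
            records.append((answer["workerId"], answer["submissionTime"]))
    return records


def labels_per_worker(records):
    """Total number of submitted labels per worker, most productive first."""
    return Counter(worker for worker, _ in records).most_common()


# Made-up documents for illustration only.
docs = [
    {"answers": [{"workerId": "alice", "submissionTime": "2021-03-01T09:15:30.250Z"}]},
    {"answers": [
        {"workerId": "bob", "submissionTime": "2021-03-01T10:02:11.000Z"},
        {"workerId": "alice", "submissionTime": "2021-03-02T08:45:00.000Z"},
    ]},
]
print(labels_per_worker(extract_submissions(docs)))  # [('alice', 2), ('bob', 1)]
```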

- Convert the partial Ground Truth labeling data into a DataFrame and structure the date and hours fields:
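The date and hour fields can be derived from each submission timestamp. The following plain-Python sketch assumes ISO 8601 timestamps (with a trailing Z for UTC, as in the sample above) and normalizes them to UTC; the function name is hypothetical. Passing its output to pandas.DataFrame(rows) yields a DataFrame with worker_id, date, and hour columns.

```python
from datetime import datetime, timezone


def to_rows(records):
    """Turn (worker_id, submission_time) pairs into rows with separate date and hour fields."""
    rows = []
    for worker_id, submitted in records:
        # Before Python 3.11, fromisoformat() rejects a trailing "Z", so map it to +00:00.
        ts = datetime.fromisoformat(submitted.replace("Z", "+00:00"))
        if ts.tzinfo is None:
            ts = ts.replace(tzinfo=timezone.utc)  # assume UTC when no offset is given
        ts = ts.astimezone(timezone.utc)
        rows.append({
            "worker_id": worker_id,
            "date": ts.date().isoformat(),  # for example "2021-03-01"
            "hour": ts.hour,                # 0-23, in UTC
        })
    return rows
```

Keeping dates as ISO strings means they sort chronologically as plain strings, which simplifies the cumulative step.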

- Extract the number of labels completed by each worker and calculate the cumulative sum:
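A bar chart race needs, for every period, each worker's running total. The sketch below computes it per day from rows with worker_id and date fields; the function name is hypothetical, and days without any labeling are simply skipped. With pandas, df.groupby(["date", "worker_id"]).size().unstack(fill_value=0).cumsum() gives the same running totals as a wide DataFrame, with zeros for workers who have not started yet.

```python
from collections import Counter, defaultdict


def cumulative_by_day(rows):
    """Return {date: {worker_id: labels completed up to and including that date}}.

    A worker who has started labeling stays in every later day, so bars never
    drop out of the race.
    """
    daily = defaultdict(Counter)
    for row in rows:
        daily[row["date"]][row["worker_id"]] += 1

    running = Counter()
    table = {}
    for date in sorted(daily):  # ISO dates sort chronologically as strings
        running.update(daily[date])  # add this day's counts to the running totals
        table[date] = dict(running)  # snapshot, so later updates don't leak back
    return table
```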

- Create the bar chart race video:
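One way to produce the video is a matplotlib animation saved through ffmpeg; this is a stand-in and not necessarily the library the authors used. The sketch draws one frame per date of a {date: {worker_id: cumulative count}} table and shows only the top n_bars workers. It assumes matplotlib is installed and the ffmpeg binary is on the PATH; the function names and defaults are hypothetical.

```python
def top_bars(standings, n_bars=10):
    """Return the n_bars leading (worker_id, count) pairs, highest count first.

    Ties are broken alphabetically so bars keep a stable order between frames.
    """
    return sorted(standings.items(), key=lambda item: (-item[1], item[0]))[:n_bars]


def render_bar_chart_race(table, filename="bar_chart_race.mp4", n_bars=10, frame_ms=800):
    """Save one frame per date in table ({date: {worker_id: cumulative count}}) as a video."""
    if not table:
        raise ValueError("no labeling data to plot yet")
    # Imported here so top_bars() works even where matplotlib is not installed.
    import matplotlib
    matplotlib.use("Agg")  # off-screen rendering, no display needed
    import matplotlib.pyplot as plt
    from matplotlib.animation import FuncAnimation

    dates = sorted(table)
    # A fixed x-axis makes the bars visibly grow from one frame to the next.
    x_max = max(max(day.values()) for day in table.values())
    fig, ax = plt.subplots(figsize=(8, 4.5))

    def draw(frame):
        ax.clear()
        bars = top_bars(table[dates[frame]], n_bars)[::-1]  # barh plots bottom-up, so reverse
        ax.barh([worker for worker, _ in bars], [count for _, count in bars])
        ax.set_xlim(0, x_max * 1.05)
        ax.set_xlabel("Completed labels (cumulative)")
        ax.set_title(f"Labeling progress on {dates[frame]}")

    animation = FuncAnimation(fig, draw, frames=len(dates), interval=frame_ms, repeat=False)
    animation.save(filename, writer="ffmpeg")  # needs the ffmpeg binary on the PATH
    plt.close(fig)
    return filename
```

Because the frame rate of the saved file follows frame_ms, raising it slows the race down, which can help when a job spans only a few days.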

- Download the bar chart race output and save it into an S3 bucket.

- Email this file to your workers.
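The last two steps could look like the following sketch, which uploads the video with boto3 and returns a presigned URL to paste into the email, so workers can download the video without access to the bucket. The function name, bucket, and key are hypothetical. A presigned URL signed with Signature Version 4 is valid for at most seven days, and it stops working earlier if the credentials that signed it (for example, the notebook role's temporary credentials) expire first.

```python
def publish_video(s3_client, local_path, bucket, key, expires_in=7 * 24 * 3600):
    """Upload the video to S3 and return a presigned download URL for the email."""
    s3_client.upload_file(local_path, bucket, key)
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,  # seconds; Signature Version 4 allows at most 7 days
    )


# Example usage (hypothetical names):
# import boto3
# url = publish_video(boto3.client("s3"), "bar_chart_race.mp4",
#                     "example-sagemaker-gt", "bar-chart-race/2021-03-02.mp4")
```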

Option 2: Automatic chart creation

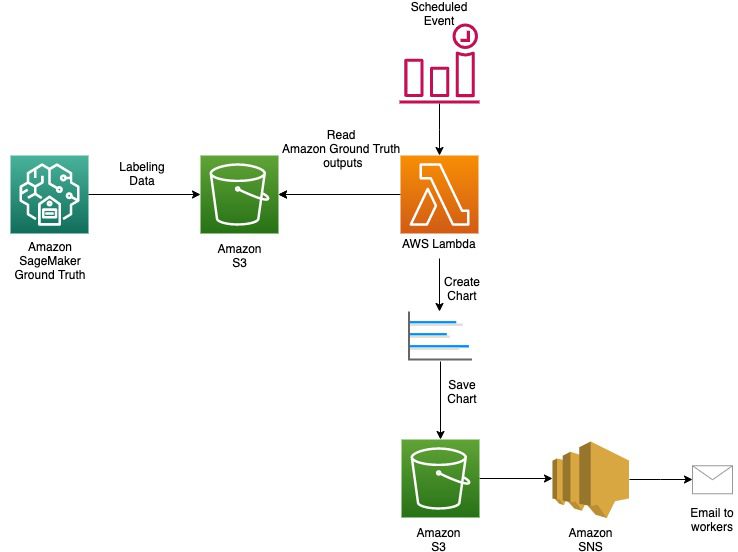

Option 2 requires no manual intervention: the bar chart races are sent automatically to the workers at a fixed interval (such as every day or every week). We provide a completely serverless solution, in which the computing is done by AWS Lambda. The advantage of this approach is that you don’t need to deploy any computing infrastructure (such as the SageMaker notebook instance in Option 1). The steps involved are as follows:

- A Lambda function is triggered at fixed time intervals and generates the bar chart race by replicating the steps from Option 1. External dependencies, such as ffmpeg, are installed as Lambda layers.

- The bar chart races are saved to Amazon S3.

- Each update to the video in Amazon S3 triggers an event notification that publishes a message to an Amazon Simple Notification Service (Amazon SNS) topic.

- Amazon SNS sends an email to subscribers.
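The wiring behind these steps can be sketched with boto3. The post does not name the scheduler, so this sketch assumes an Amazon EventBridge schedule rule as the fixed-interval trigger; all names, ARNs, and the .mp4 suffix filter are hypothetical. It omits the SNS topic access policy, which must allow s3.amazonaws.com to publish to the topic. Note that email subscribers receive the text of the S3 event notification, not the video file itself.

```python
def wire_schedule_and_notifications(events, lambda_client, s3, sns, *, function_arn,
                                    bucket, video_prefix, topic_arn, emails,
                                    schedule="rate(1 day)",
                                    rule_name="bar-chart-race-schedule"):
    """Hypothetical one-off setup for Option 2, using boto3 clients passed in by the caller."""
    # 1. Invoke the Lambda function at a fixed interval.
    rule_arn = events.put_rule(Name=rule_name, ScheduleExpression=schedule)["RuleArn"]
    events.put_targets(Rule=rule_name, Targets=[{"Id": "bar-chart-race", "Arn": function_arn}])
    # Allow EventBridge to invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    # 2. Publish to SNS whenever a new video lands under video_prefix.
    #    Caution: this call replaces the bucket's whole notification configuration.
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration={
            "TopicConfigurations": [{
                "TopicArn": topic_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {"Key": {"FilterRules": [
                    {"Name": "prefix", "Value": video_prefix},
                    {"Name": "suffix", "Value": ".mp4"},
                ]}},
            }]
        },
    )
    # 3. Each address must confirm the subscription email before it receives messages.
    for address in emails:
        sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=address)
    return rule_arn
```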

The following diagram illustrates this architecture.

The following is the code for the Lambda function:
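A minimal sketch of such a function, under stated assumptions: it reads the worker-response JSON files from S3, builds cumulative per-worker totals per day, renders the video to /tmp, and uploads it, which in turn fires the S3 to SNS notification. The environment variable names, default bucket, prefix, and key, and the matplotlib-based renderer are hypothetical; matplotlib and ffmpeg would have to come from Lambda layers. The s3 and render keyword arguments exist only so the handler can be tested without AWS; Lambda itself calls lambda_handler(event, context).

```python
import json
import os
from collections import Counter, defaultdict
from datetime import datetime, timezone

# Hypothetical configuration, read from Lambda environment variables.
BUCKET = os.environ.get("GT_BUCKET", "example-sagemaker-gt")
PREFIX = os.environ.get("GT_PREFIX", "my-labeling-job/annotations/worker-response/")
OUTPUT_KEY = os.environ.get("OUTPUT_KEY", "bar-chart-race/bar_chart_race.mp4")
LOCAL_PATH = "/tmp/bar_chart_race.mp4"  # /tmp is the writable scratch space in Lambda


def _utc_date(stamp):
    ts = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
    ts = ts if ts.tzinfo else ts.replace(tzinfo=timezone.utc)  # assume UTC if no offset
    return ts.astimezone(timezone.utc).date().isoformat()


def cumulative_standings(documents):
    """Return {date: {worker_id: cumulative labels}} built from worker-response documents."""
    daily = defaultdict(Counter)
    for doc in documents:
        for answer in doc.get("answers", []):
            daily[_utc_date(answer["submissionTime"])][answer["workerId"]] += 1
    standings, running = {}, Counter()
    for date in sorted(daily):
        running.update(daily[date])
        standings[date] = dict(running)
    return standings


def render_video(standings, path, n_bars=10):
    """Save one frame per date as an MP4; needs matplotlib and ffmpeg (for example, from layers)."""
    import matplotlib
    matplotlib.use("Agg")
    import matplotlib.pyplot as plt
    from matplotlib.animation import FuncAnimation

    dates = sorted(standings)
    fig, ax = plt.subplots(figsize=(8, 4.5))

    def draw(i):
        ax.clear()
        top = sorted(standings[dates[i]].items(), key=lambda kv: (-kv[1], kv[0]))[:n_bars]
        ax.barh([w for w, _ in reversed(top)], [c for _, c in reversed(top)])
        ax.set_title(f"Completed labels per worker, {dates[i]}")

    FuncAnimation(fig, draw, frames=len(dates), interval=800).save(path, writer="ffmpeg")
    plt.close(fig)


def lambda_handler(event, context, s3=None, render=render_video):
    if s3 is None:
        import boto3  # included in the Lambda Python runtime
        s3 = boto3.client("s3")
    documents = []
    for page in s3.get_paginator("list_objects_v2").paginate(Bucket=BUCKET, Prefix=PREFIX):
        for obj in page.get("Contents", []):
            if obj["Key"].endswith(".json"):
                body = s3.get_object(Bucket=BUCKET, Key=obj["Key"])["Body"].read()
                documents.append(json.loads(body))
    standings = cumulative_standings(documents)
    render(standings, LOCAL_PATH)
    # Uploading the new video triggers the S3 event notification to SNS.
    s3.upload_file(LOCAL_PATH, BUCKET, OUTPUT_KEY)
    return {"statusCode": 200, "body": json.dumps({"days": len(standings), "key": OUTPUT_KEY})}
```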

Clean up

When you finish this exercise, remove your resources with the following steps:

- Delete your notebook instance.

- Stop your Ground Truth job.

- Optionally, delete the SageMaker execution role.

- Optionally, empty and delete the S3 bucket.
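If you prefer to script the cleanup, the following boto3 sketch covers the same steps. It assumes the notebook instance is running and the labeling job is still in progress; resource names are hypothetical. The IAM role is left in place because its policies must be detached before it can be deleted. Emptying the bucket deletes every object in it (for versioned buckets, object versions would also need to be removed), so double-check the bucket name first.

```python
def clean_up(sagemaker, s3=None, *, notebook_name, labeling_job_name, bucket=None):
    """Delete the notebook instance, stop the labeling job, and optionally empty and delete the bucket."""
    # A notebook instance must be stopped before it can be deleted.
    sagemaker.stop_notebook_instance(NotebookInstanceName=notebook_name)
    sagemaker.get_waiter("notebook_instance_stopped").wait(NotebookInstanceName=notebook_name)
    sagemaker.delete_notebook_instance(NotebookInstanceName=notebook_name)

    sagemaker.stop_labeling_job(LabelingJobName=labeling_job_name)

    if s3 is not None and bucket is not None:
        # delete_objects accepts at most 1,000 keys, the same as one list_objects_v2 page.
        for page in s3.get_paginator("list_objects_v2").paginate(Bucket=bucket):
            keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
            if keys:
                s3.delete_objects(Bucket=bucket, Delete={"Objects": keys})
        s3.delete_bucket(Bucket=bucket)


# Example usage (hypothetical names):
# import boto3
# clean_up(boto3.client("sagemaker"), boto3.client("s3"), notebook_name="gt-race-notebook",
#          labeling_job_name="my-labeling-job", bucket="example-sagemaker-gt")
```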

Conclusions

This post demonstrated how to use Ground Truth partial labeling data loaded in Amazon S3 to gamify labeling workflows by periodically creating a bar chart race. Sharing a bar chart race with workers can spark a fruitful competition among them, speed up labeling, and increase user engagement and satisfaction; in the customer engagement described earlier, the labeling job was completed 20% faster than planned.

Get started today! You can learn more about Ground Truth and kick off your own labeling and gamification processes by visiting the SageMaker console.

About the Authors

Daniele Angelosante is a Senior Engagement Manager with AWS Professional Services. He is passionate about AI/ML projects and products. In his free time, he likes coffee, sport, soccer, and baking.

Andrea Di Simone is a Data Scientist in the Professional Services team based in Munich, Germany. He helps customers to develop their AI/ML products and workflows, leveraging AWS tools. He enjoys reading, classical music and hiking.

Othmane Hamzaoui is a Data Scientist working in the AWS Professional Services team. He is passionate about solving customer challenges using Machine Learning, with a focus on bridging the gap between research and business to achieve impactful outcomes. In his spare time, he enjoys running and discovering new coffee shops in the beautiful city of Paris.