Scan Amazon S3 buckets for content moderation using S3 Batch and Amazon Rekognition

Dealing with content at large scale is often challenging, costly, and a heavy-lift operation. The volume of user-generated and third-party content has been increasing substantially in industries like social media, ecommerce, online advertising, and media sharing. Customers may want to review this content to ensure that it complies with corporate governance and regulations, but they need a solution that can handle this scale and be automated.

In this blog post, I present a solution to scan existing videos in your Amazon Simple Storage Service (Amazon S3) buckets using S3 batch operations and Amazon Rekognition.

S3 batch operations is a managed solution for performing storage actions at large scale. S3 batch operations can perform actions on lists of Amazon S3 objects that you specify. Amazon S3 tracks progress, sends notifications, and stores a detailed completion report of all actions, providing a fully managed, auditable, and serverless experience.

Amazon Rekognition enables you to automatically analyze images and videos in your applications. You provide an image or video to the Amazon Rekognition API, and the service can identify objects, people, text, scenes, and activities, in addition to detecting inappropriate content.

I show an example to demonstrate how to use S3 batch operations with Amazon Rekognition content moderation. Many companies rely entirely on human moderators to review third-party or user-generated content, while others primarily react to user complaints to take down offensive or inappropriate images, ads, or videos. However, human moderators alone cannot scale to meet these needs at sufficient quality or speed. That leads to a poor user experience, high costs to achieve scale, or even a loss of brand reputation. By using Amazon Rekognition, human moderators can review a much smaller set of content, typically 1% to 5% of the total volume, which has already been flagged by machine learning. This enables them to focus on more valuable activities and still achieve comprehensive moderation coverage at a fraction of their existing cost.

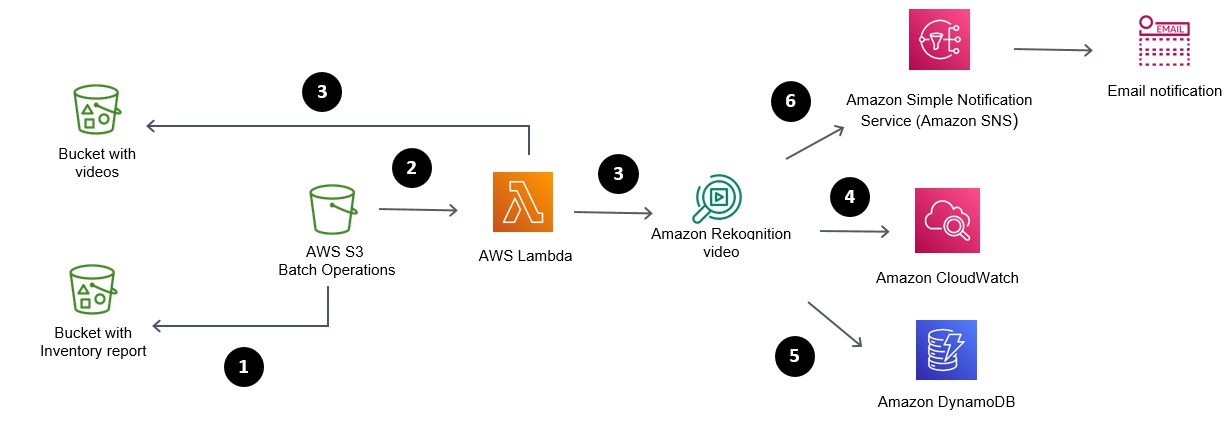

Solution Architecture

This solution uses S3 batch operations to invoke an AWS Lambda function that scans videos in your S3 buckets using Amazon Rekognition. The function stores the findings in an Amazon DynamoDB table and logs details of its activity in Amazon CloudWatch. In addition, it sends email alerts through Amazon Simple Notification Service (Amazon SNS) when Amazon Rekognition detects inappropriate content with a high confidence level.

The following diagram outlines the workflow:

- S3 batch operations reads the manifest file

- S3 batch operations invokes a Lambda function

- The Lambda function invokes Amazon Rekognition for content moderation on videos

- Activity is logged on Amazon CloudWatch

- Labels are stored in a DynamoDB table

- Amazon SNS sends an email notification

Let’s look at each step in more detail:

- S3 batch operations reads the manifest file

S3 batch operations can perform operations on large lists of Amazon S3 objects that you specify in a manifest file. This allows the solution to scale, because a single job can perform a specified operation on billions of objects containing exabytes of data. Amazon S3 tracks progress, sends notifications, and stores a detailed completion report of all actions. I use two buckets in the solution: one containing the videos, and another containing the S3 inventory report (the manifest file) and the S3 batch operations logs. This keeps the videos isolated from the processing information. The S3 batch job performs the operation on the objects listed in the manifest file; instead of an S3 inventory report, you can also use a CSV file that lists the videos.
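As a minimal sketch of the two manifest options, the following Python code builds an S3 Inventory configuration that delivers daily CSV reports from the data bucket to the work bucket and, alternatively, writes a CSV manifest with one `bucket,key` row per video. The bucket names, the configuration ID, the `inventory` prefix, and the list of video extensions are assumptions for illustration. Keys in the CSV are URL-encoded, because S3 batch operations expects URL-encoded object keys in CSV manifests. boto3 is imported lazily, so the pure helpers run without AWS credentials.

```python
import csv
import io
from urllib.parse import quote

# Assumed extensions that identify video objects; adjust for your data.
VIDEO_EXTENSIONS = (".mp4", ".mov")


def inventory_configuration(work_bucket, config_id="video-inventory", prefix="inventory"):
    """Build a daily S3 Inventory configuration that writes CSV reports to the work bucket."""
    return {
        "Id": config_id,
        "IsEnabled": True,
        "IncludedObjectVersions": "Current",
        "Schedule": {"Frequency": "Daily"},
        "Destination": {
            "S3BucketDestination": {
                "Bucket": f"arn:aws:s3:::{work_bucket}",  # the destination is given as an ARN
                "Format": "CSV",
                "Prefix": prefix,
            }
        },
    }


def build_manifest_csv(bucket, keys, extensions=VIDEO_EXTENSIONS):
    """Return CSV text with one "bucket,key" row per video key (keys URL-encoded)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for key in keys:
        # Skip objects that are not videos so no Lambda invocation is wasted on them.
        if key.lower().endswith(extensions):
            writer.writerow([bucket, quote(key)])
    return buffer.getvalue()


def list_keys(bucket):
    """Yield every object key in a bucket (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above run without boto3

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            yield obj["Key"]
```

To apply the inventory configuration, pass it to `boto3.client("s3").put_bucket_inventory_configuration(Bucket=data_bucket, Id=config["Id"], InventoryConfiguration=config)`. The work bucket also needs a bucket policy that allows Amazon S3 to write the inventory reports. For the CSV route, upload the output of `build_manifest_csv(data_bucket, list_keys(data_bucket))` to the work bucket.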

- S3 batch operations invokes a Lambda function

The Lambda function contains the Python code that uses Amazon Rekognition, stores results in DynamoDB, and sends email notifications. AWS Lambda is a compute service that lets you run code without provisioning or managing servers. Lambda runs your code on a high-availability compute infrastructure and performs all of the administration of the compute resources. It scales automatically and is cost-efficient.
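A minimal sketch of a handler for the S3 batch operations invocation contract follows. It assumes invocation schema version 1.0, in which each task in the event carries a `taskId`, a URL-encoded `s3Key`, and an `s3BucketArn`, and the function returns a result code for every task. `process_video` is a hypothetical placeholder for the Rekognition, DynamoDB, and SNS steps described in this post.

```python
from urllib.parse import unquote


def process_video(bucket, key):
    """Hypothetical placeholder for the moderation work (Rekognition, DynamoDB, SNS)."""
    return f"Scanned s3://{bucket}/{key}"


def lambda_handler(event, context):
    """Handle one S3 batch operations invocation (schema version 1.0)."""
    results = []
    for task in event["tasks"]:
        # Keys arrive URL-encoded; the bucket arrives as an ARN (arn:aws:s3:::name).
        key = unquote(task["s3Key"])
        bucket = task["s3BucketArn"].split(":")[-1]
        try:
            result_code, result_string = "Succeeded", process_video(bucket, key)
        except Exception as exc:  # record the failure in the job report instead of crashing
            result_code, result_string = "PermanentFailure", str(exc)
        results.append(
            {"taskId": task["taskId"], "resultCode": result_code, "resultString": result_string}
        )
    return {
        "invocationSchemaVersion": event["invocationSchemaVersion"],
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }
```

Returning `PermanentFailure` records the error in the completion report without a retry; returning `TemporaryFailure` instead asks S3 batch operations to retry the task, which may suit throttling errors better.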

- The Lambda function invokes Amazon Rekognition for content moderation on videos

This is the core machine learning activity in the solution. The S3 batch job provides the S3 key from each object in the manifest file to the Lambda function. The Lambda function calls the Amazon Rekognition APIs. The StartContentModeration operation starts asynchronous detection of inappropriate, unwanted, or offensive content in a stored video. The GetContentModeration operation gets the unsafe content analysis results.
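The following sketch shows one way to call the two operations with boto3: start the job, poll GetContentModeration until the job leaves the `IN_PROGRESS` state, and then follow `NextToken` to collect every page of labels. Polling is an assumption made for simplicity; StartContentModeration also accepts an SNS `NotificationChannel`, which avoids keeping the function running while the job completes. The `min_confidence` and `poll_seconds` defaults are illustrative, `client` is expected to be `boto3.client("rekognition")`, and the `sleep` parameter exists only so the function can be tested without waiting.

```python
import time


def moderate_video(client, bucket, key, min_confidence=50, poll_seconds=5, sleep=time.sleep):
    """Start a content moderation job and return (job_id, moderation labels)."""
    job_id = client.start_content_moderation(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )["JobId"]

    # Wait for the asynchronous job to finish.
    while True:
        response = client.get_content_moderation(JobId=job_id, SortBy="TIMESTAMP")
        if response["JobStatus"] != "IN_PROGRESS":
            break
        sleep(poll_seconds)
    if response["JobStatus"] != "SUCCEEDED":
        raise RuntimeError(f"Moderation job {job_id} ended with {response['JobStatus']}")

    # Collect every page of results by following NextToken.
    labels = list(response["ModerationLabels"])
    while response.get("NextToken"):
        response = client.get_content_moderation(
            JobId=job_id, SortBy="TIMESTAMP", NextToken=response["NextToken"]
        )
        labels.extend(response["ModerationLabels"])
    return job_id, labels
```

Each returned label has a `Timestamp` in milliseconds and a `ModerationLabel` with `Name`, `ParentName`, and `Confidence`, which the remaining steps store and evaluate.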

- Activity is logged on Amazon CloudWatch

AWS Lambda logs all requests handled by the function and stores logs generated by the code in Amazon CloudWatch Logs. Runtime details, identified labels, and workflow data are all recorded in CloudWatch Logs.
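In the Lambda Python runtime, output written through the `logging` module (or `print`) is delivered to the function's CloudWatch Logs log group. As a sketch, logging each label as a single JSON line keeps the entries easy to filter or parse later, for example with CloudWatch Logs Insights. The field names in the hypothetical `log_label` helper are assumptions, not the post's log format.

```python
import json
import logging

# In Lambda, records from the root logger are sent to CloudWatch Logs.
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def log_label(video, job_id, label):
    """Log one moderation label as a single JSON line and return that line."""
    moderation = label["ModerationLabel"]
    line = json.dumps(
        {
            "video": video,
            "jobId": job_id,
            "timestampMs": label["Timestamp"],
            "name": moderation["Name"],
            "parent": moderation["ParentName"],
            "confidence": round(moderation["Confidence"], 2),
        }
    )
    logger.info(line)
    return line
```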

- Labels are stored in a DynamoDB table

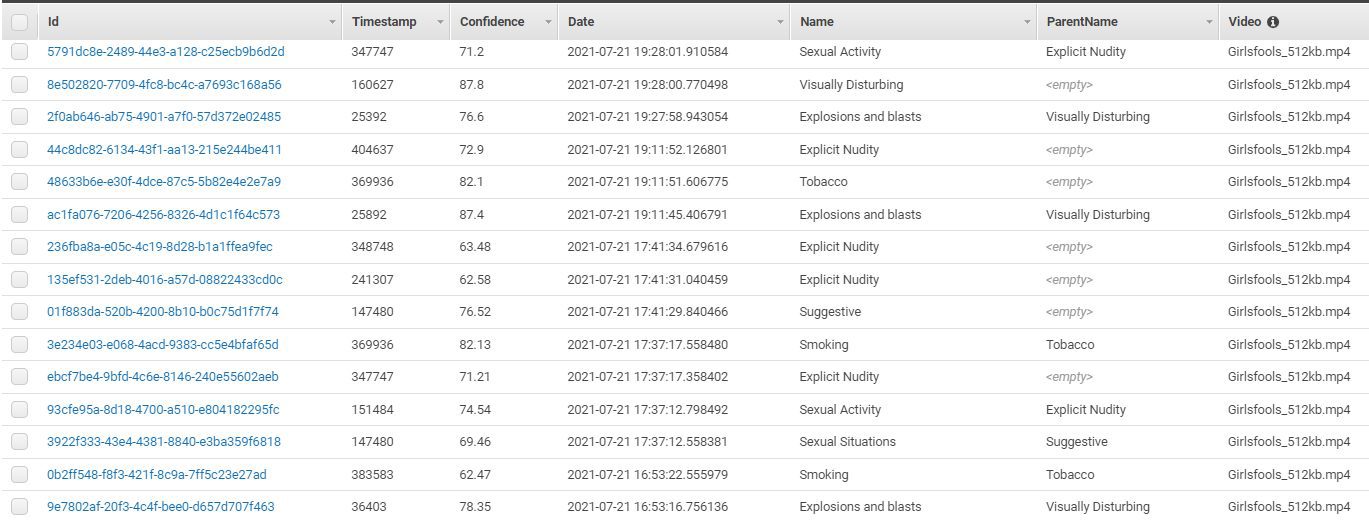

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale. Details about the labels found in the videos are durably stored in a DynamoDB table: the job ID, timestamps of label occurrences, confidence levels, the hierarchical taxonomy of labels, and video names. This adds value, because you now have a database of content moderation information for your videos, which you can use to classify the videos according to the moderation labels found in them.
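The following sketch assumes a hypothetical table layout with partition key `JobId` and sort key `TimestampLabel` (a zero-padded timestamp joined with the label name, so a job's items sort by time); the post does not specify its schema. It converts each confidence value to `Decimal`, because the boto3 DynamoDB resource does not accept Python `float` values. The create and write helpers import boto3 lazily and need AWS credentials.

```python
from decimal import Decimal

# Hypothetical key schema: partition key "JobId", sort key "TimestampLabel".
KEY_SCHEMA = [
    {"AttributeName": "JobId", "KeyType": "HASH"},
    {"AttributeName": "TimestampLabel", "KeyType": "RANGE"},
]
ATTRIBUTE_DEFINITIONS = [
    {"AttributeName": "JobId", "AttributeType": "S"},
    {"AttributeName": "TimestampLabel", "AttributeType": "S"},
]


def labels_to_items(job_id, video, labels):
    """Convert GetContentModeration labels into DynamoDB items."""
    items = []
    for label in labels:
        moderation = label["ModerationLabel"]
        items.append(
            {
                "JobId": job_id,
                # Zero-padded milliseconds keep the sort key in time order.
                "TimestampLabel": f"{label['Timestamp']:012d}#{moderation['Name']}",
                "Video": video,
                "TimestampMs": label["Timestamp"],
                "Label": moderation["Name"],
                "ParentLabel": moderation["ParentName"],  # "" for top-level labels
                # The boto3 DynamoDB resource rejects floats, so store a Decimal.
                "Confidence": Decimal(str(moderation["Confidence"])),
            }
        )
    return items


def create_table(table_name):
    """Create an on-demand table with the hypothetical key schema."""
    import boto3

    boto3.client("dynamodb").create_table(
        TableName=table_name,
        KeySchema=KEY_SCHEMA,
        AttributeDefinitions=ATTRIBUTE_DEFINITIONS,
        BillingMode="PAY_PER_REQUEST",
    )


def save_items(table_name, items):
    """Write items in batches, dropping duplicate keys within a batch."""
    import boto3

    table = boto3.resource("dynamodb").Table(table_name)
    with table.batch_writer(overwrite_by_pkeys=["JobId", "TimestampLabel"]) as batch:
        for item in items:
            batch.put_item(Item=item)
```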

- Amazon SNS sends an email notification

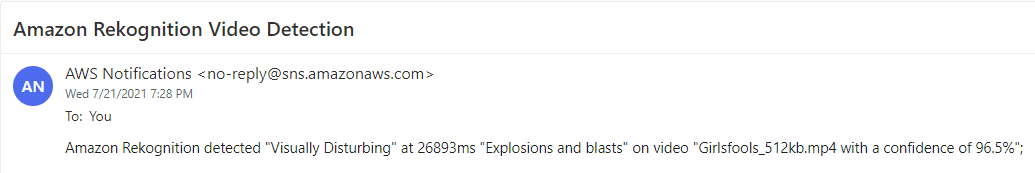

The code checks the confidence level reported by Amazon Rekognition and sends email alerts when there is a high level of certainty (95% or more).
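The alert logic can be sketched as a filter on the 95% threshold followed by an SNS publish call; the SNS `Publish` API accepts the topic ARN through its `TargetArn` parameter. The message format, the subject line, and the helper names are assumptions for illustration.

```python
ALERT_THRESHOLD = 95.0  # confidence (percent) at or above which an email is sent


def build_alert(video, labels, threshold=ALERT_THRESHOLD):
    """Return the alert text for high-confidence labels, or None if there are none."""
    hits = [
        label for label in labels
        if label["ModerationLabel"]["Confidence"] >= threshold
    ]
    if not hits:
        return None
    lines = [f"Moderation labels found in {video}:"]
    for label in hits:
        moderation = label["ModerationLabel"]
        # Rekognition timestamps are in milliseconds; show seconds.
        lines.append(
            f"- {moderation['Name']} at {label['Timestamp'] / 1000:.1f}s "
            f"({moderation['Confidence']:.1f}% confidence)"
        )
    return "\n".join(lines)


def send_alert(topic_arn, message):
    """Publish the alert to the SNS topic (requires boto3 and AWS credentials)."""
    import boto3

    boto3.client("sns").publish(
        TargetArn=topic_arn, Subject="Content moderation alert", Message=message
    )
```

Calling `send_alert` only when `build_alert` returns a message keeps low-confidence findings in DynamoDB and CloudWatch without generating email.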

Label categories of inappropriate or offensive content

Amazon Rekognition uses a two-level hierarchical taxonomy to label categories of inappropriate or offensive content. For example, the top-level category Tobacco has the second-level categories Tobacco Products and Smoking. The following table lists all categories.

| Top-Level Category | Second-Level Category |
|---|---|
| Explicit Nudity | Nudity |
| | Graphic Male Nudity |
| | Graphic Female Nudity |
| | Sexual Activity |
| | Illustrated Explicit Nudity |
| | Adult Toys |
| Suggestive | Female Swimwear Or Underwear |
| | Male Swimwear Or Underwear |
| | Partial Nudity |
| | Bare chested Male |
| | Revealing Clothes |
| | Sexual Situations |
| Violence | Graphic Violence Or Gore |
| | Physical Violence |
| | Weapon Violence |
| | Weapons |
| | Self Injury |
| Visually Disturbing | Emaciated Bodies |
| | Corpses |
| | Hanging |
| | Air Crash |
| | Explosions And Blasts |
| Rude Gestures | Middle Finger |
| Drugs | Drug Products |
| | Drug Use |
| | Pills |
| | Drug Paraphernalia |
| Tobacco | Tobacco Products |
| | Smoking |
| Alcohol | Drinking |
| | Alcoholic Beverages |
| Gambling | Gambling |
| Hate Symbols | Nazi Party |
| | White Supremacy |
| | Extremist |
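To use this taxonomy in code, for example to classify a video by the top-level categories it triggers, you can group the labels returned by GetContentModeration. In that response, second-level labels carry their top-level category in `ParentName`, and top-level labels have an empty `ParentName`. The following minimal sketch assumes that response shape; `group_by_top_level` is a hypothetical helper.

```python
def group_by_top_level(labels):
    """Map each detected top-level category to its detected second-level labels."""
    groups = {}
    for label in labels:
        moderation = label["ModerationLabel"]
        if moderation["ParentName"]:
            # Second-level label: file it under its top-level category.
            groups.setdefault(moderation["ParentName"], set()).add(moderation["Name"])
        else:
            # Top-level label: make sure the category appears even without children.
            groups.setdefault(moderation["Name"], set())
    # Sort categories and labels so the result is deterministic.
    return {top: sorted(children) for top, children in sorted(groups.items())}
```

Because the same label can appear at many timestamps, the sets collapse repeats, so the result summarizes which categories a video contains rather than how often they occur.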

Prerequisites

To implement this solution, you will need:

- an AWS account

- two S3 buckets

- a Lambda function

- a DynamoDB table

- an SNS topic

Implementation

There are four main steps to implement the solution:

- Create the S3 buckets

- Create a DynamoDB table

- Create a Lambda function using the following Python code

- Create and run the S3 batch job

Here are more details on each step:

- Create two S3 buckets: one to store the videos (data bucket) and another to store the inventory reports and logs (work bucket). Use separate buckets to avoid scanning objects that are not videos and, consequently, wasting Lambda invocations. Configure S3 inventory on the data bucket so that it delivers its reports to the work bucket. The first inventory report can take up to 48 hours to be delivered.

- Create a DynamoDB table using the following Python code.

- Create a Lambda function that runs the following Python code:

For videos up to 10 minutes long, increase the Lambda function timeout to 1 minute and allocate 128 MB of memory.

In the following code, set the TargetArn variable to the Amazon Resource Name (ARN) of the SNS topic of your choice. To receive the email alerts, subscribe your email address to that topic and confirm the subscription.
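If you prefer to apply the timeout and memory settings programmatically, a boto3 sketch follows. It also shows an alternative to editing the ARN in the code: passing it as an environment variable. `SNS_TOPIC_ARN` is a hypothetical name, and the function code would need to read it, for example with `os.environ["SNS_TOPIC_ARN"]`. Note that the `Environment` argument replaces all existing environment variables on the function.

```python
def lambda_configuration(function_name, topic_arn, timeout_seconds=60, memory_mb=128):
    """Build keyword arguments for lambda.update_function_configuration.

    The defaults follow the guidance above for videos up to 10 minutes long.
    SNS_TOPIC_ARN is a hypothetical environment variable name.
    """
    if not 1 <= timeout_seconds <= 900:
        raise ValueError("Lambda timeouts must be between 1 and 900 seconds")
    return {
        "FunctionName": function_name,
        "Timeout": timeout_seconds,
        "MemorySize": memory_mb,
        "Environment": {"Variables": {"SNS_TOPIC_ARN": topic_arn}},
    }


def apply_configuration(kwargs):
    """Send the update to AWS Lambda (requires boto3 and AWS credentials)."""
    import boto3

    boto3.client("lambda").update_function_configuration(**kwargs)
```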

- Create and launch the S3 batch job by using the following command:

Make sure to replace the appropriate fields in the preceding command: your account ID, the Lambda function ARN, the ARN of the IAM role that runs this batch job, the ARN of the manifest file, and the bucket for the completion report. The manifest file is generated as part of the S3 inventory.
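As an alternative to the AWS CLI, the same job can be described with boto3's `s3control.create_job`. The sketch below builds the request for a Lambda invoke operation, using either the `manifest.json` file written by S3 inventory or a plain CSV manifest. The report prefix `batch-reports`, the priority, and all ARNs are placeholders. `ConfirmationRequired=False` starts the job without a manual confirmation step, and the role must be able to read the manifest, write the report, and invoke the function.

```python
import uuid


def create_job_request(account_id, function_arn, role_arn, manifest_arn,
                       manifest_etag, report_bucket, csv_manifest=False):
    """Build keyword arguments for s3control.create_job with a LambdaInvoke operation."""
    if csv_manifest:
        # A plain CSV manifest with one "bucket,key" row per video.
        spec = {"Format": "S3BatchOperations_CSV_20180820", "Fields": ["Bucket", "Key"]}
    else:
        # The manifest.json file that S3 inventory writes for each report.
        spec = {"Format": "S3InventoryReport_CSV_20161130"}
    return {
        "AccountId": account_id,
        "ConfirmationRequired": False,  # run without a manual confirmation step
        "Operation": {"LambdaInvoke": {"FunctionArn": function_arn}},
        "Manifest": {
            "Spec": spec,
            # head_object returns the ETag in double quotes; this sketch strips them.
            "Location": {"ObjectArn": manifest_arn, "ETag": manifest_etag.strip('"')},
        },
        "Report": {
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "Prefix": "batch-reports",
            "ReportScope": "AllTasks",
        },
        "Priority": 10,
        "RoleArn": role_arn,
        "ClientRequestToken": str(uuid.uuid4()),  # idempotency token for this request
    }


def submit_job(request):
    """Create the job and return its ID (requires boto3 and AWS credentials)."""
    import boto3

    return boto3.client("s3control").create_job(**request)["JobId"]
```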

Results

After running the solution, you will find new entries in Amazon CloudWatch Logs and in the DynamoDB table, and you will receive email notifications for labels detected with a confidence level of at least 95%.

You will get content moderation outputs for each of the videos listed in the S3 manifest file. The solution can take only seconds to scan each video, regardless of its size, and you can scan a large number of videos in a single S3 batch operations job. This enables both scaling and automation.

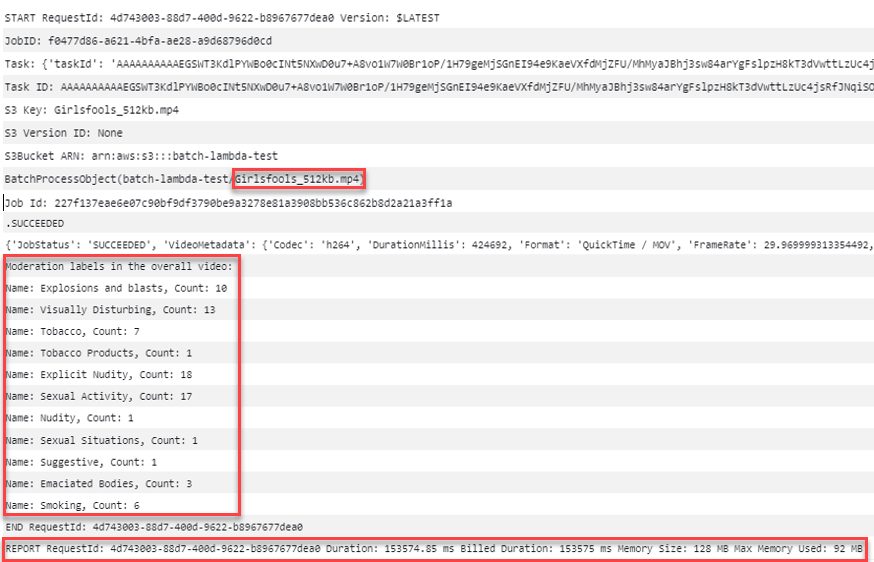

The following is an extract of a CloudWatch Logs entry for a sample video. For the video named Girlsfools_512kb.mp4, Amazon Rekognition detected labels such as tobacco and nudity. The log also includes Lambda runtime details such as billed duration and memory used.

The following is an extract of the DynamoDB table generated for this video. In this table we can see the timestamp details, the confidence level, and the hierarchical taxonomy findings.

The following is an extract of an email alert generated. In this example, it reported visually disturbing labels found in the video with their corresponding timestamps. It also reported a 96.5% confidence level on the findings.

Cleaning up

If you followed along and would like to remove resources used in this solution to avoid incurring any unwanted future charges, delete the following:

- the two S3 buckets created

- the S3 inventory configuration

- the S3 batch operations job

- the Lambda function

- the DynamoDB table

- the SNS topic

Conclusion

Amazon S3 batch operations can manage billions of objects at a time, saving you time and resources by simplifying storage management at massive scale. Amazon Rekognition is a service that lets you add powerful visual analysis to your applications. You can use Amazon S3 batch operations together with Amazon Rekognition to scan existing objects in your buckets.

In this blog post, I describe a solution that integrates Amazon S3 batch operations and Amazon Rekognition. The example can be used to address content moderation across different domains, such as social media, broadcasting, law enforcement, and public entities. In this example, we scan existing videos stored in an S3 bucket to detect explicit or suggestive adult content, violence, weapons, visually disturbing content, drugs, alcohol, tobacco, hate symbols, gambling, and rude gestures.

The process is logged in Amazon CloudWatch, the moderation results are durably stored in a DynamoDB table, and alert emails are sent when needed.

To learn more about Amazon S3 batch operations, you can look through the product documentation. You can also discover more uses and implementations using Amazon S3 batch operations on the AWS Storage Blog.

To learn more about Amazon Rekognition for image and video analysis, you can look through the product documentation. You can also discover more uses and implementations using Amazon Rekognition on the AWS Machine Learning Blog.

If you need any further assistance, contact your AWS account team or a trusted AWS Partner.

About the Author

Virgil Ennes is a Specialty Sr. Solutions Architect at AWS. Virgil enjoys helping customers take advantage of the agility, cost savings, innovation, and global reach that AWS provides. He is mostly focused on Storage, AI, Blockchain, Analytics, IoT, and Cloud Economics. In his spare time, Virgil enjoys spending time with his family and friends, and also watching his soccer team (GALO).
