Build a traceable, custom, multi-format document parsing pipeline with Amazon Textract

Organizational forms serve as a primary business tool across industries—from financial services, to healthcare, and more. Consider, for example, tax filing forms in the tax management industry, where new forms come out each year with largely the same information. AWS customers across sectors need to process and store information in forms as part of their daily business practice. These forms often serve as a primary means for information to flow into an organization where technological means of data capture are impractical.

In addition to using forms to capture information, over the years of offering Amazon Textract, we have observed that AWS customers frequently version their organizational forms based on structural changes made, fields added or changed, or other considerations such as a change of year or version of the form.

When the structure or content of a form changes, frequently this can cause challenges for traditional OCR systems or impact downstream tools used to capture information, even when you need to capture the same information year over year and aggregate the data for use regardless of the format of the document.

To solve this problem, in this post we demonstrate how you can build and deploy an event-driven, serverless, multi-format document parsing pipeline with Amazon Textract.

Solution overview

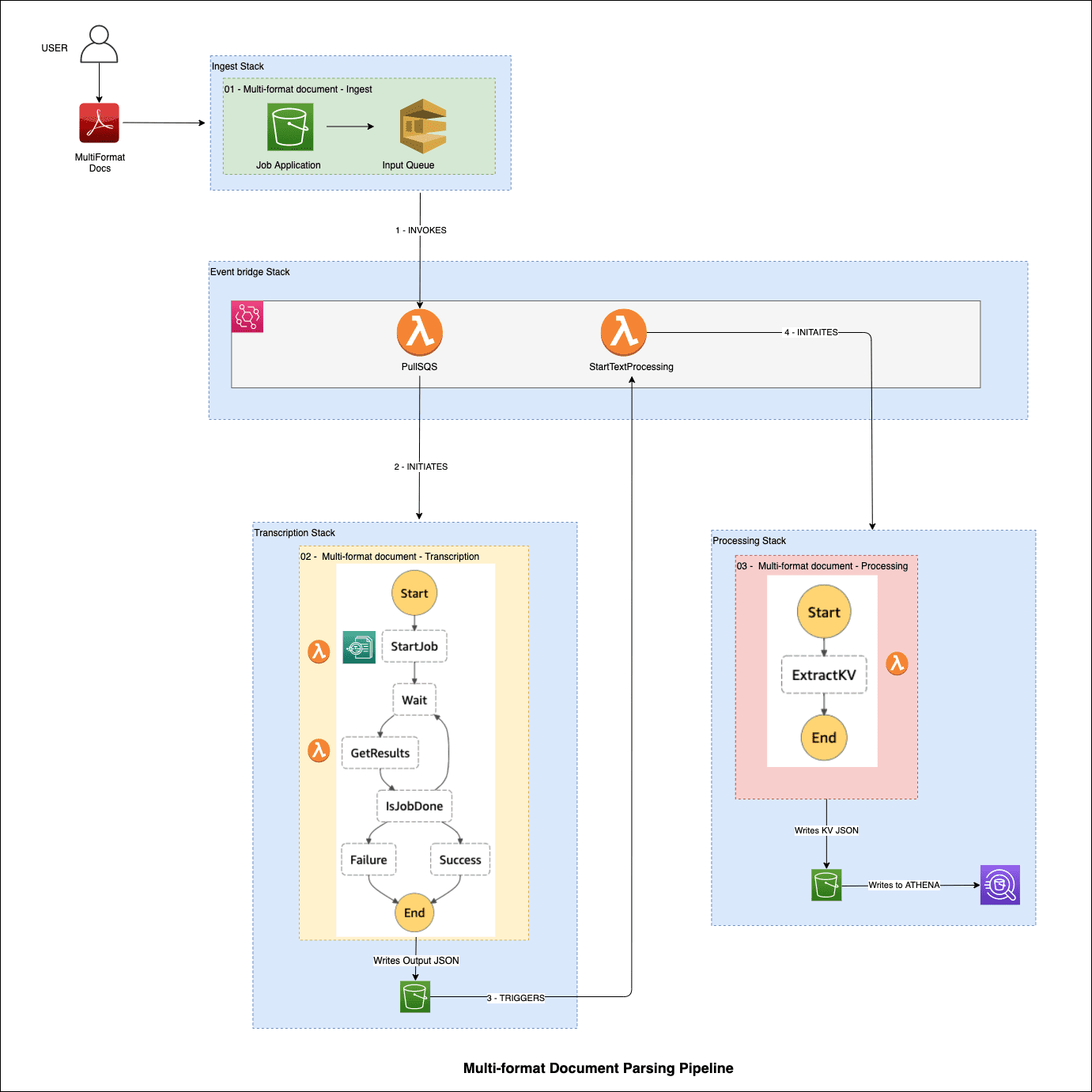

The following diagram illustrates our solution architecture:

First, the solution offers pipeline ingest using Amazon Simple Storage Service (Amazon S3), Amazon S3 Event Notifications, and an Amazon Simple Queue Service (Amazon SQS) queue so that processing begins when a form lands in the target Amazon S3 partition. An event on Amazon EventBridge is created and sent to an AWS Lambda target that triggers an Amazon Textract job.

You can use serverless AWS services such as Lambda and AWS Step Functions to create asynchronous service integrations between AWS AI services and AWS Analytics and Database services for warehousing, analytics, and AI and machine learning (ML). In this post, we demonstrate how to use Step Functions to asynchronously control and maintain the state of requests to Amazon Textract asynchronous APIs. This is achieved by using a state machine for managing calls and responses. We use Lambda within the state machine to merge the paginated API response data from Amazon Textract into a single JSON object containing semi-structured text data extracted using OCR.

Then we filter across different forms using a standardized approach to aggregate this OCR data into a common structured format using Amazon Athena and a SQL Amazon Textract JSON SerDe.

You can trace the steps taken through this pipeline using serverless Step Functions to track the processing state and retain the output of each state. This is something that customers in some industries prefer to do when working with data where you must retain the results of all predictions from services such as Amazon Textract for promoting explainability of your pipeline results in the long term.

Finally, you can query the extracted data in Athena tables.

In the following sections, we walk you through setting up the pipeline using AWS CloudFormation, testing the pipeline, and adding new form versions. This pipeline provides a maintainable solution because every component (ingest, text extraction, text processing) is independent and isolated.

Define default input parameters for CloudFormation stacks

To define the input parameters for the CloudFormation stacks, open default.properties under the params folder and enter the following code:

Deploy the solution

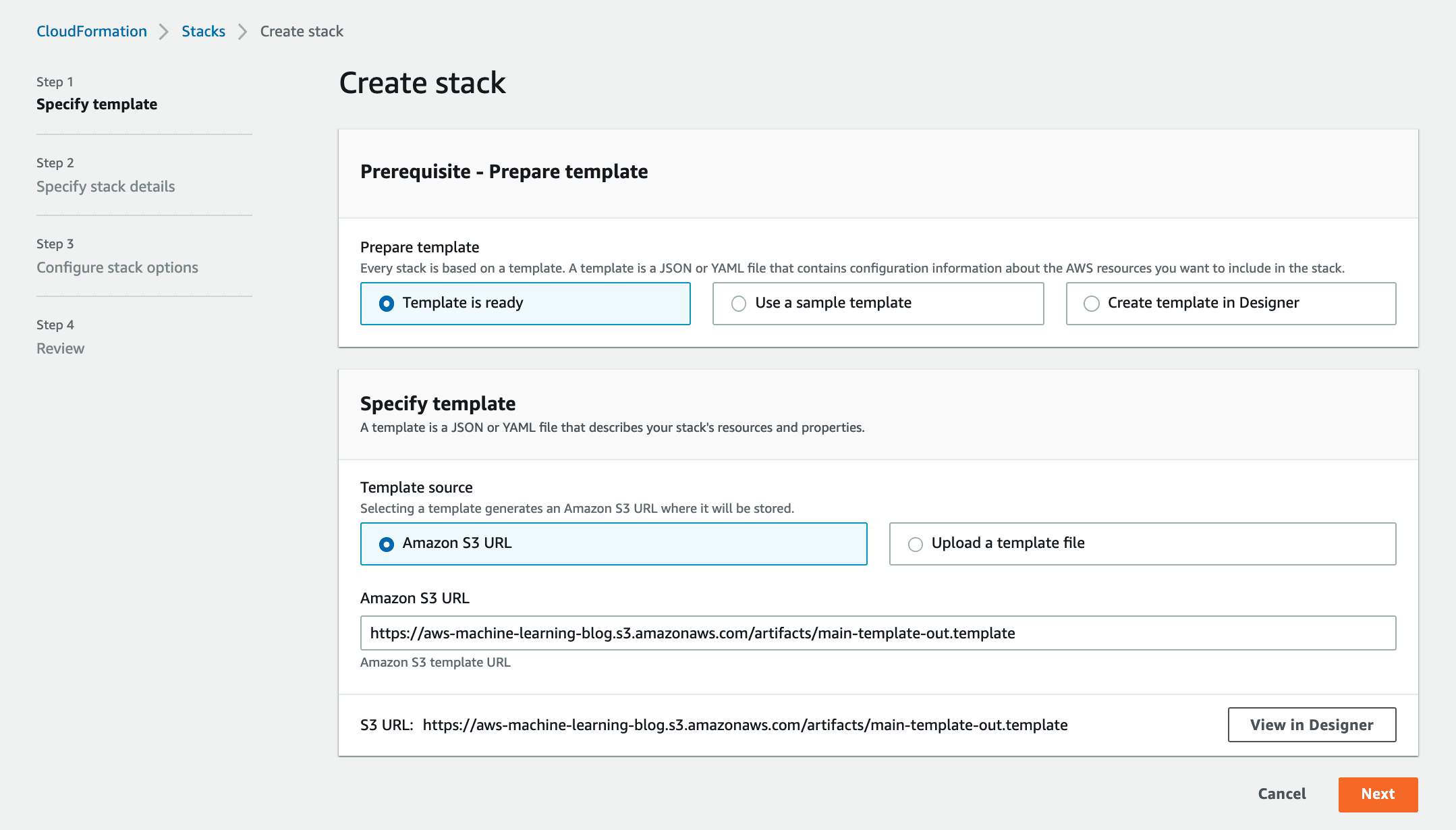

To deploy your pipeline, complete the following steps:

- Choose Launch Stack:

- Choose Next.

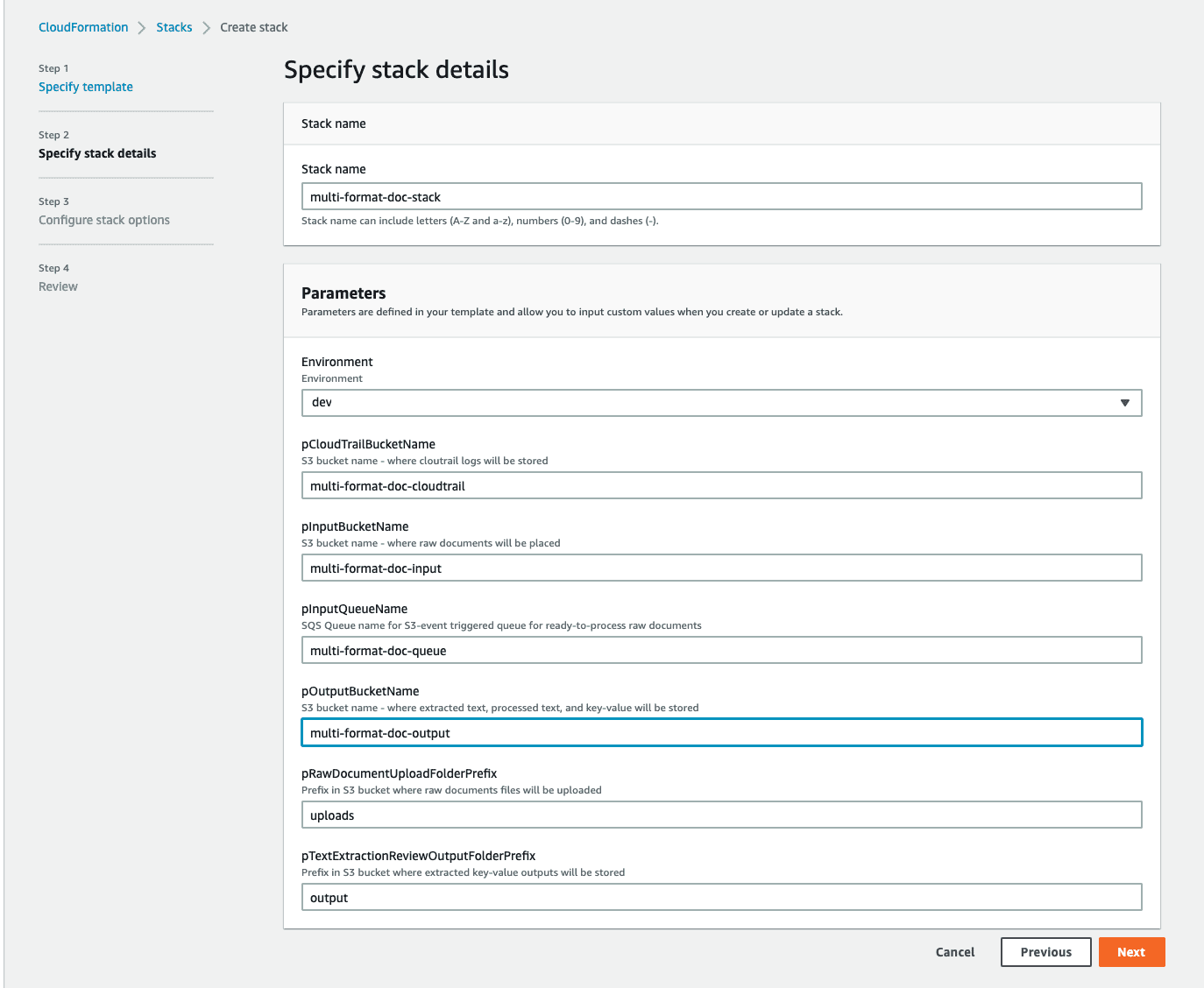

- Specify the stack details as shown in the following screenshot and choose Next.

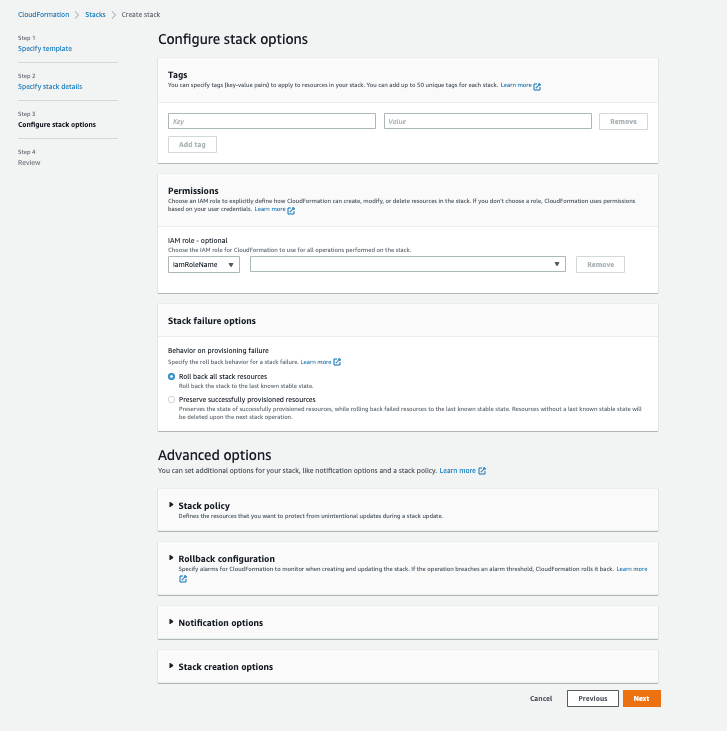

- In the Configure stack options section, add optional tags, permissions, and other advanced settings.

- Choose Next.

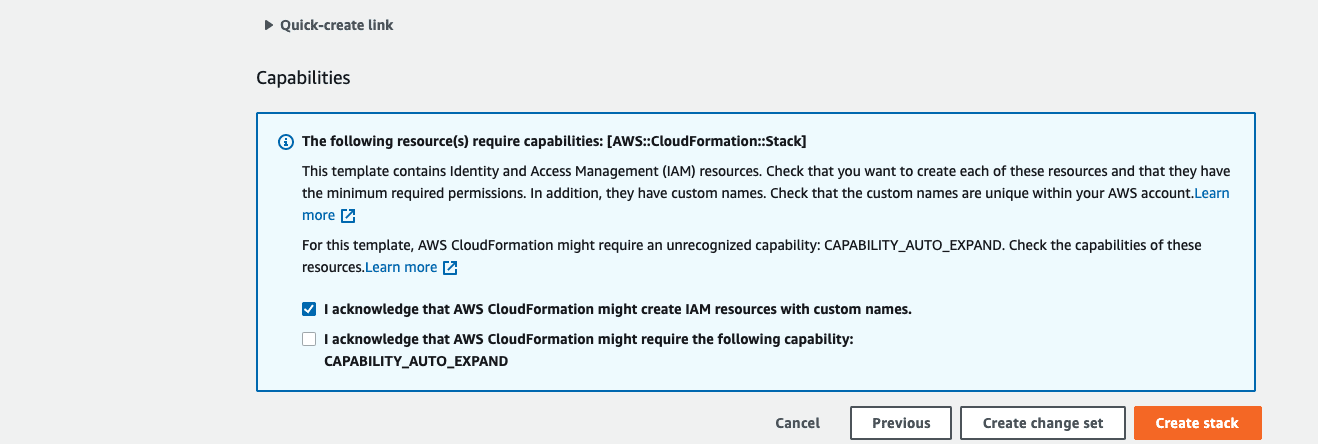

- Review the stack details and select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create stack.

This initiates stack deployment in your AWS account.

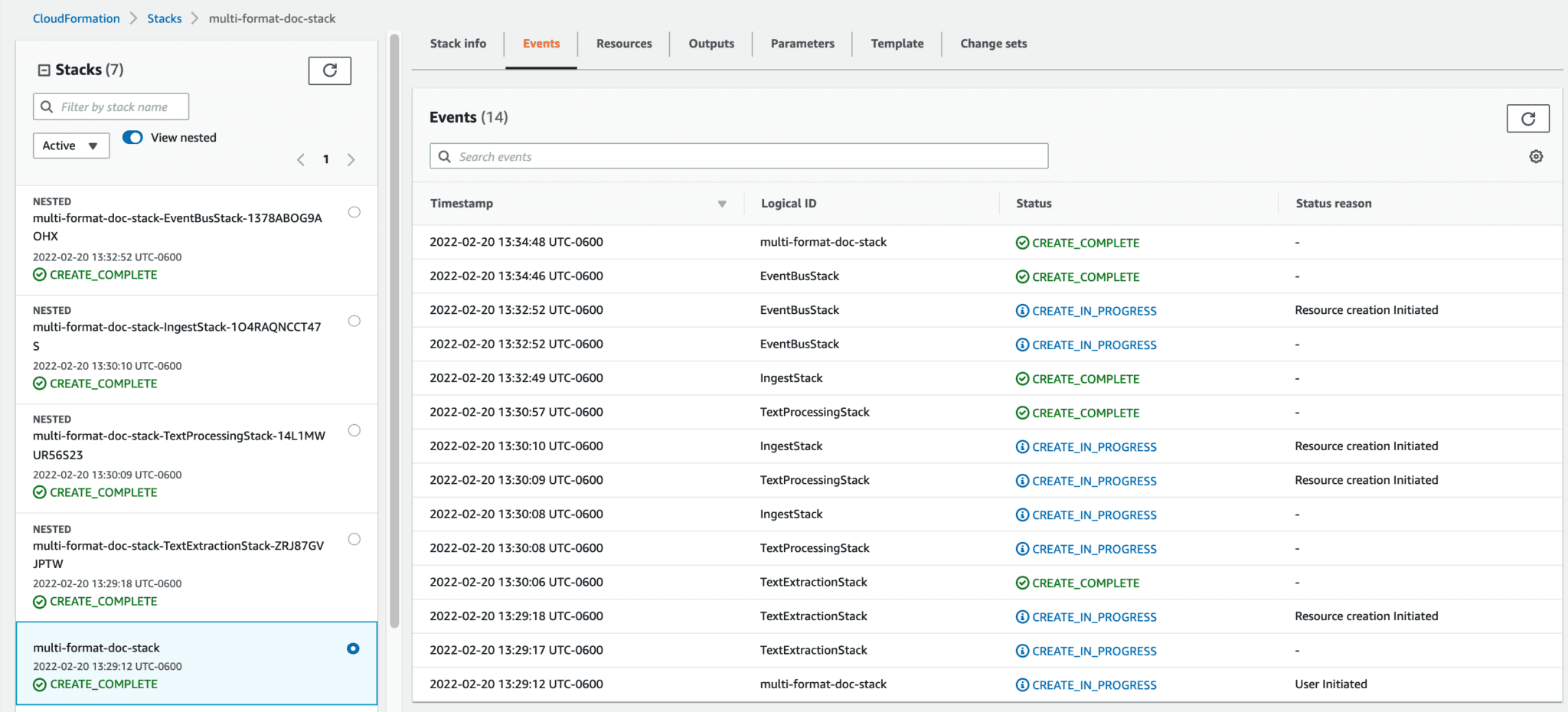

After the stack is deployed successfully, then you can start testing the pipeline as described in the next section.

Test the pipeline

After a successful deployment, complete the following steps to test your pipeline:

- Download the sample files onto your computer.

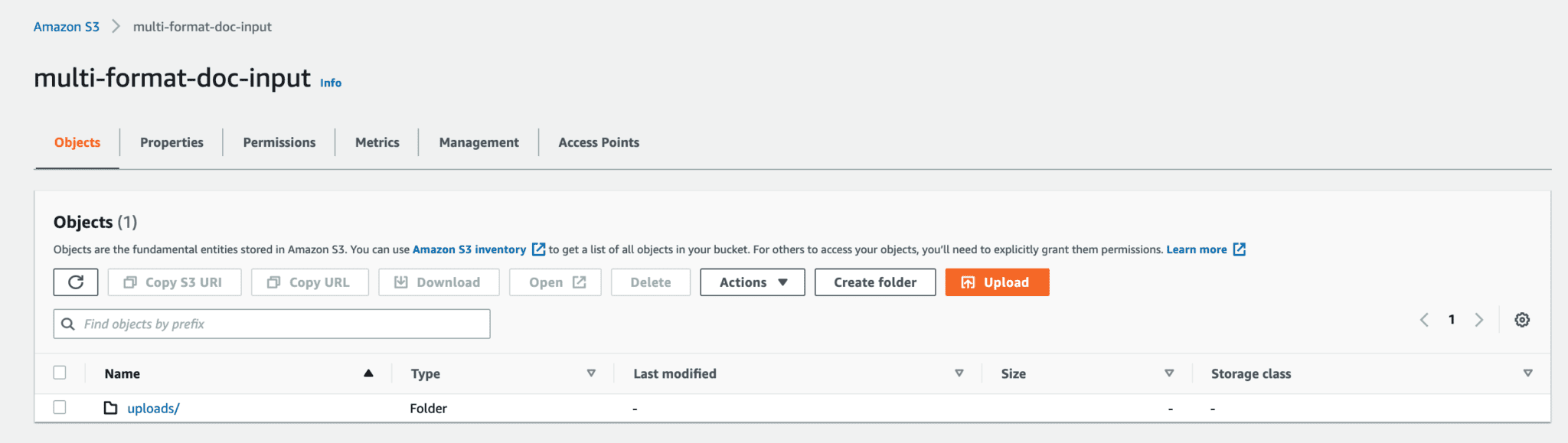

- Create an

/uploadsfolder (partition) under the newly created input S3 bucket.

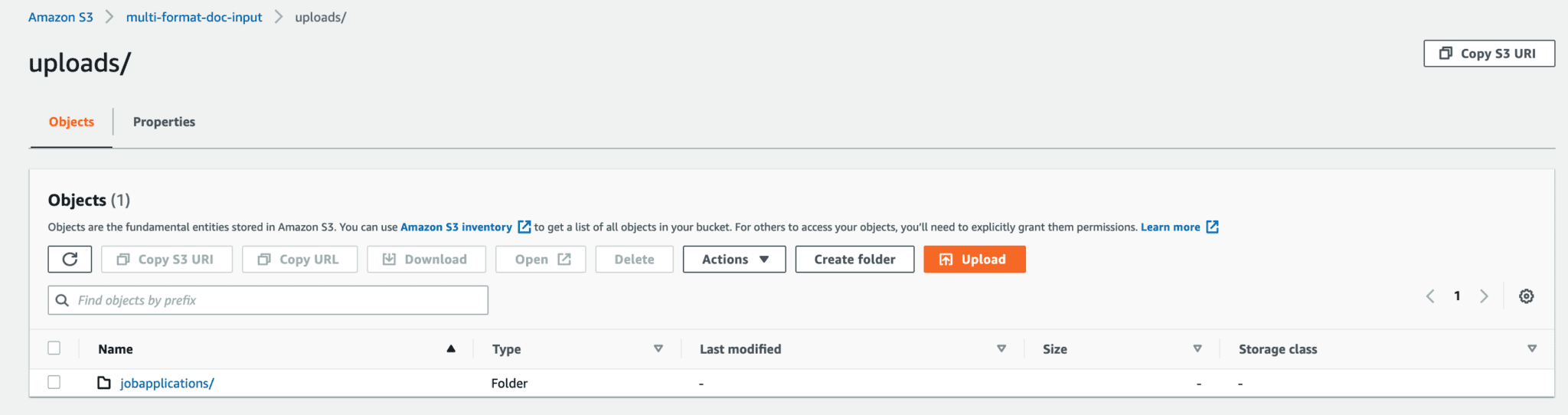

- Create the separate folders (partitions) like

jobapplicationsunder/uploads.

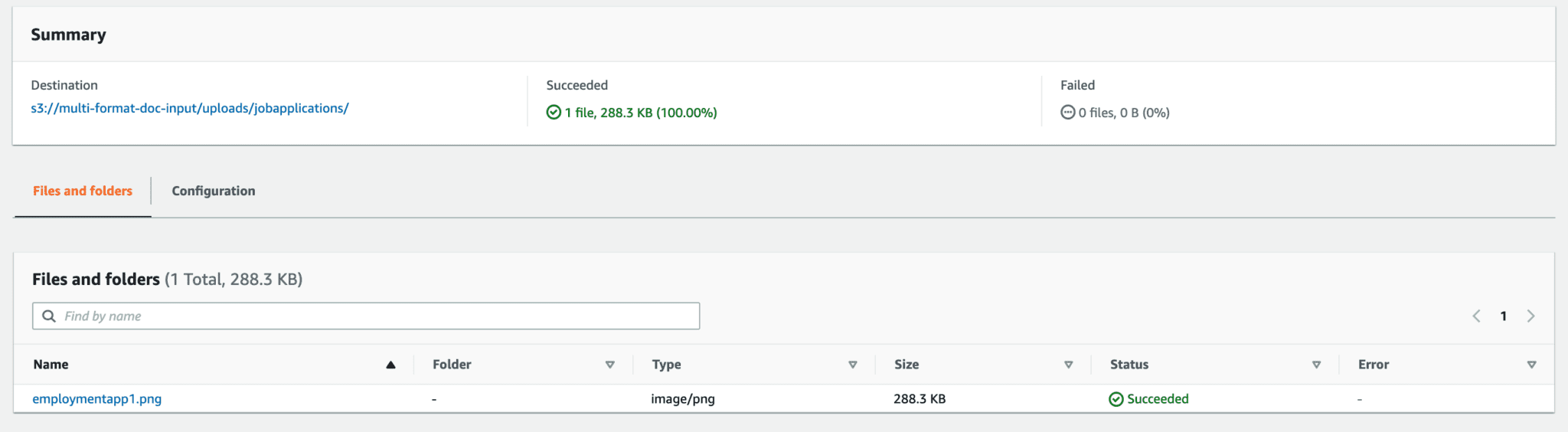

- Upload the first version of the job application from the sample docs folder to the

/uploads/jobapplicationspartition.

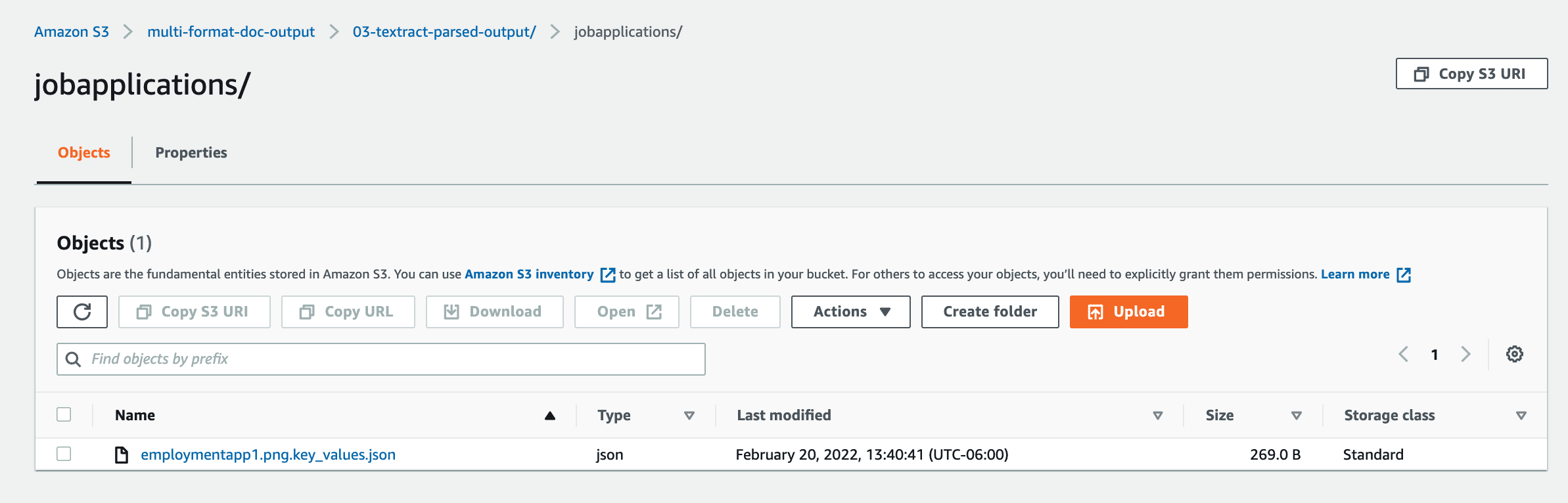

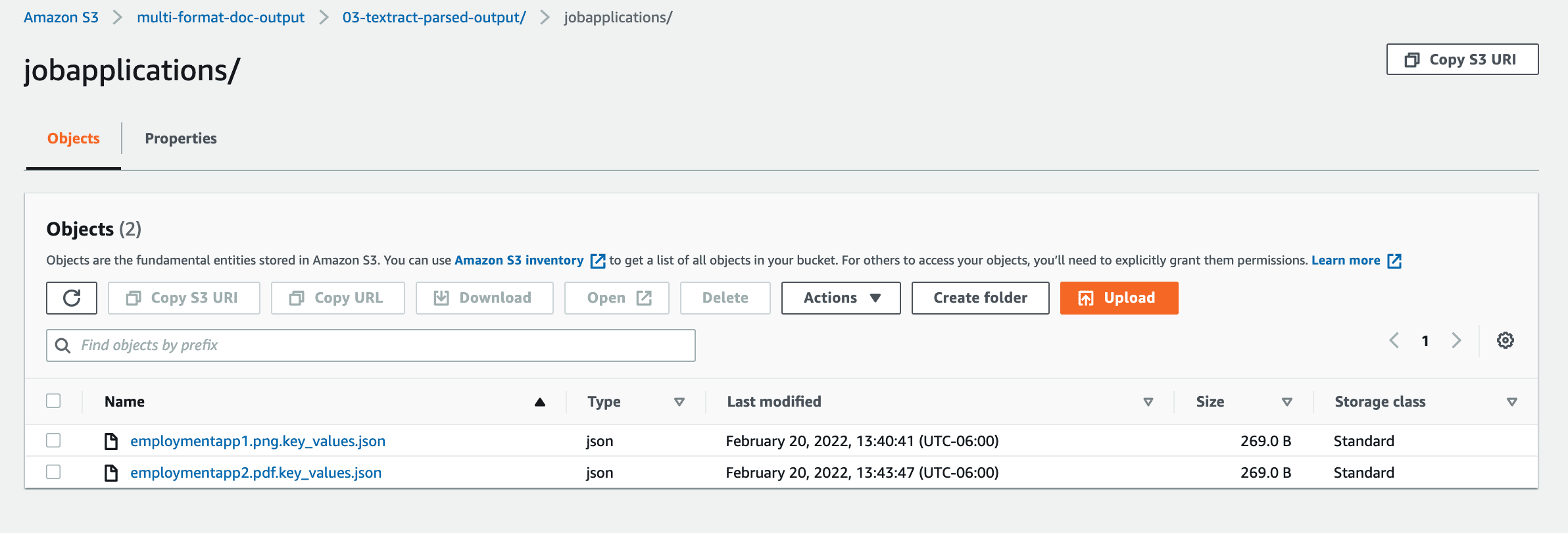

When the pipeline is complete, you can find the extracted key-value for this version of the document in /OuputS3/03-textract-parsed-output/jobapplications on the Amazon S3 console.

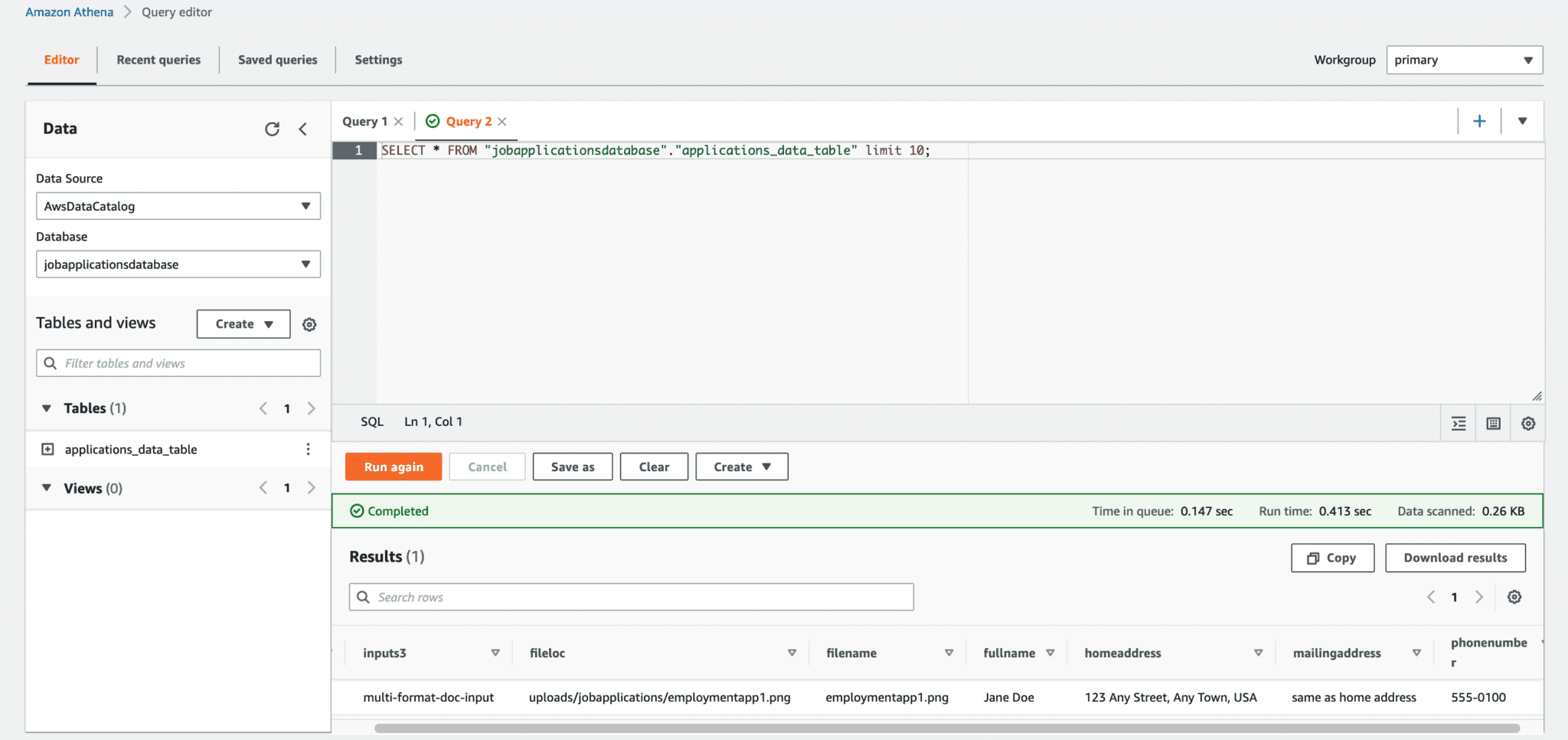

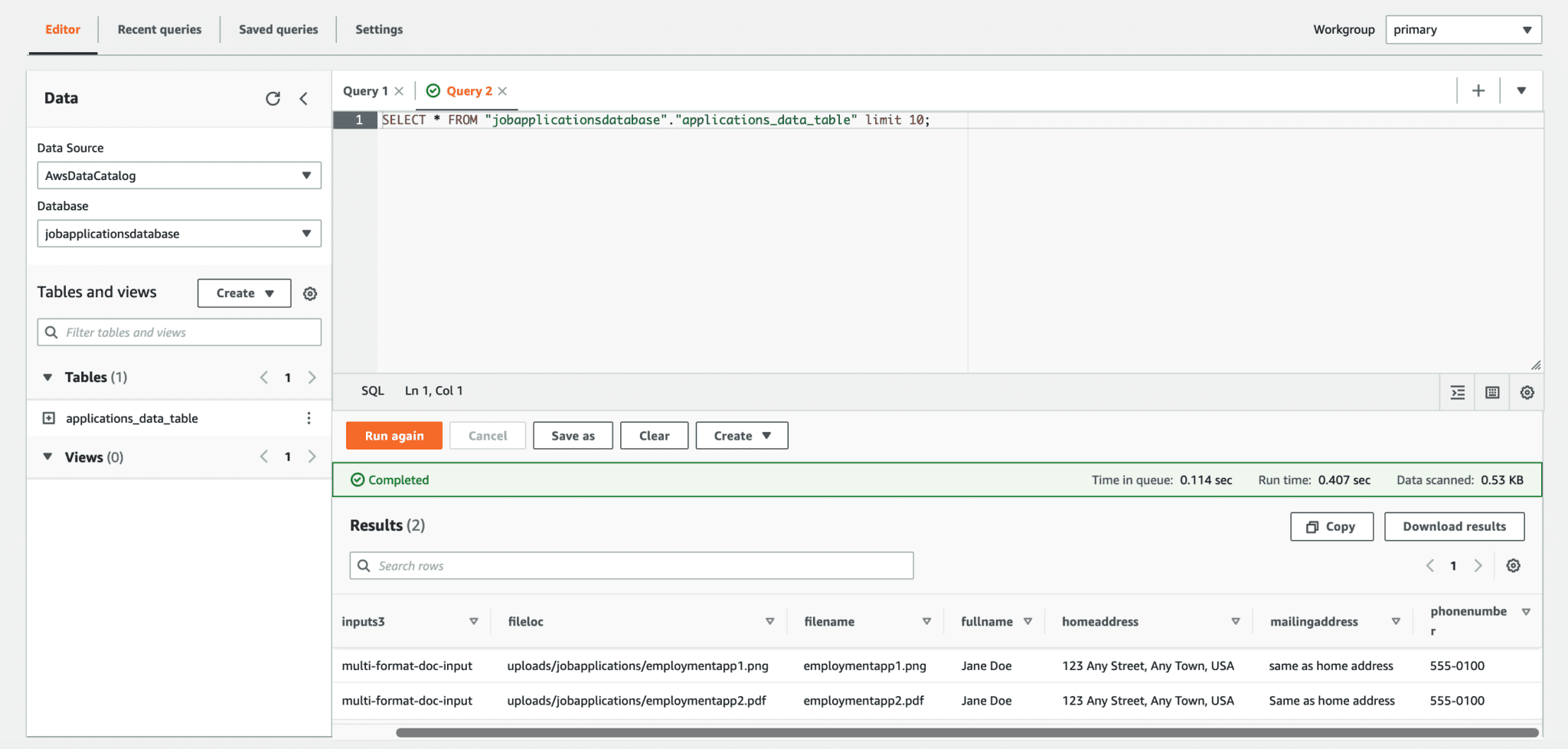

You can also find it in the Athena table (applications_data_table) on the Database menu (jobapplicationsdatabase).

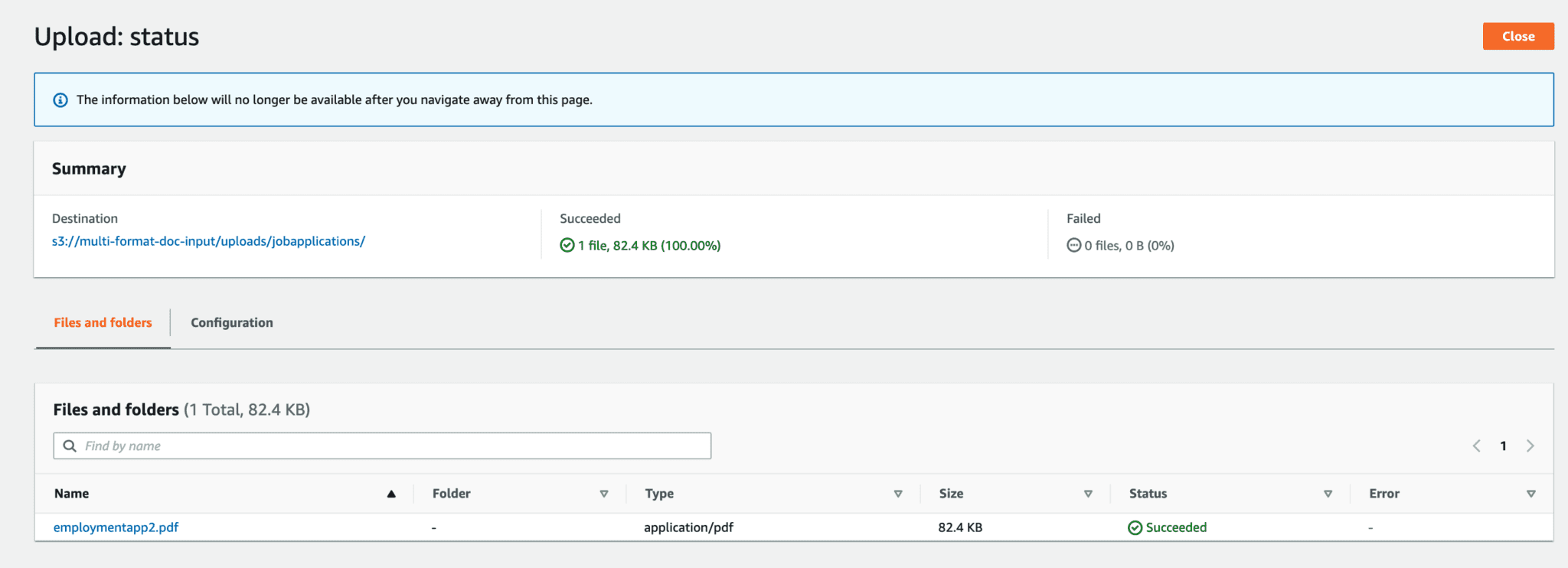

- Upload the second version of the job application from the sample docs folder to the

/uploads/jobapplicationspartition.

When the pipeline is complete, you can find the extracted key-value for this version in /OuputS3/03-textract-parsed-output/jobapplications on the Amazon S3 console.

You can also find it in the Athena table (applications_data_table) on the Database menu (jobapplicationsdatabase).

You’re done! You’ve successfully deployed your pipeline.

Add new form versions

Updating the solution for a new form version is straightforward—each form version only needs to be updated by testing the queries in the processing stack.

After you make the updates, you can redeploy the updated pipeline using AWS CloudFormation APIs and process new documents, arriving at the same standard data points for your schema with minimal disruption and development effort needed to make changes to your pipeline. This flexibility, which is achieved by decoupling the parsing and extraction behavior and using the JSON SerDe functionality in Athena, makes this pipeline a maintainable solution for any number of form versions that your organization needs to process to gather information.

As you run the ingest solution, data from incoming forms is automatically populated to Athena with information about the files and inputs associated to them. When the data in your forms moves from unstructured to structured data, it’s ready to use for downstream applications such as analytics, ML modeling, and more.

Clean up

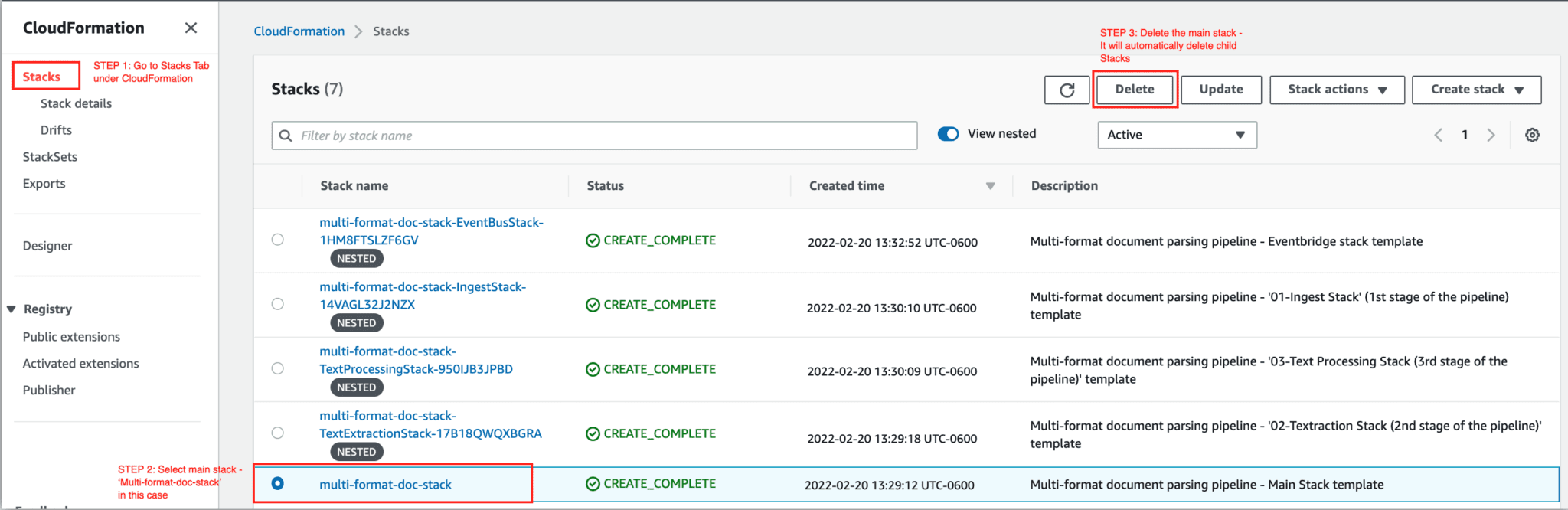

To avoid incurring ongoing charges, delete the resources you created as part of this solution when you’re done.

- On the Amazon S3 console, manually delete the buckets you created as part of the CloudFormation stack.

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Select the main stack and choose Delete.

This automatically deletes the nested stacks.

Conclusion

In this post, we demonstrated how customers seeking to trace and customize the document processing can build and deploy an event-driven, serverless, multi-format document parsing pipeline with Amazon Textract. This pipeline provides a maintainable solution because every component (ingest, text extraction, text processing) are independent and isolated, allowing organizations to operationalize their solutions to address diverse processing needs.

Try the solution today and leave your feedback in the comments section.

About the Authors

Emily Soward is a Data Scientist with AWS Professional Services. She holds a Master of Science with Distinction in Artificial Intelligence from the University of Edinburgh in Scotland, United Kingdom with emphasis on Natural Language Processing (NLP). Emily has served in applied scientific and engineering roles focused on AI-enabled product research and development, operational excellence, and governance for AI workloads running at organizations in the public and private sector. She contributes to customer guidance as an AWS Senior Speaker and recently, as an author for AWS Well-Architected in the Machine Learning Lens.

Emily Soward is a Data Scientist with AWS Professional Services. She holds a Master of Science with Distinction in Artificial Intelligence from the University of Edinburgh in Scotland, United Kingdom with emphasis on Natural Language Processing (NLP). Emily has served in applied scientific and engineering roles focused on AI-enabled product research and development, operational excellence, and governance for AI workloads running at organizations in the public and private sector. She contributes to customer guidance as an AWS Senior Speaker and recently, as an author for AWS Well-Architected in the Machine Learning Lens.

Sandeep Singh is a Data Scientist with AWS Professional Services. He holds a Master of Science in Information Systems with concentration in AI and Data Science from San Diego State University (SDSU), California. He is a full stack Data Scientist with a strong computer science background and Trusted adviser with specialization in AI Systems and Control design. He is passionate about helping customers to get their high impact projects in the right direction, advising and guiding them in their Cloud journey, and building state-of-the-art AI/ML enabled solutions.

Sandeep Singh is a Data Scientist with AWS Professional Services. He holds a Master of Science in Information Systems with concentration in AI and Data Science from San Diego State University (SDSU), California. He is a full stack Data Scientist with a strong computer science background and Trusted adviser with specialization in AI Systems and Control design. He is passionate about helping customers to get their high impact projects in the right direction, advising and guiding them in their Cloud journey, and building state-of-the-art AI/ML enabled solutions.

Tags: Archive

Leave a Reply