Accelerate data preparation with data quality and insights in Amazon SageMaker Data Wrangler

Amazon SageMaker Data Wrangler is a new capability of Amazon SageMaker that helps data scientists and data engineers quickly and easily prepare data for machine learning (ML) applications using a visual interface. It contains over 300 built-in data transformations so you can quickly normalize, transform, and combine features without having to write any code.

Today, we’re excited to announce the new Data Quality and Insights Report feature within Data Wrangler. This report automatically verifies data quality and detects abnormalities in your data. Data scientists and data engineers can use this tool to efficiently and quickly apply domain knowledge to process datasets for ML model training.

The report includes the following sections:

- Summary statistics – This section provides insights into the number of rows, features, % missing, % valid, duplicate rows, and a breakdown of the type of feature (e.g. numeric vs. text).

- Data Quality Warnings – This section provides insights into warnings that the insights report has detected including items such as presence of small minority class, high target cardinality, rare target label, imbalanced class distribution, skewed target, heavy tailed target, outliers in target, regression frequent label, and invalid values.

- Target Column Insights – This section provides statistics on the target column including % valid, % missing, % outliers, univariate statistics such as min/median/max, and also presents examples of observations with outlier or invalid target values.

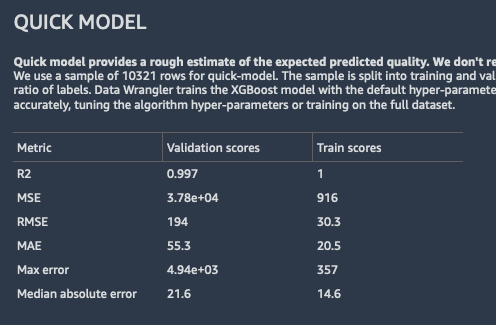

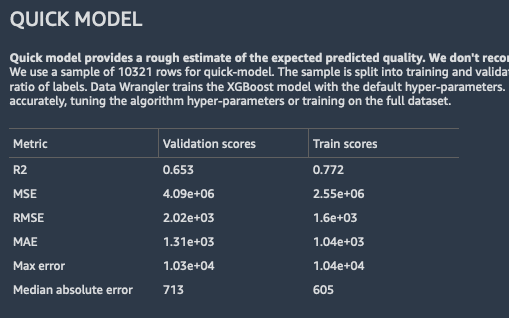

- Quick Model – The data insights report automatically trains a model on a small sample of your data set to provide a directional check on feature engineering progress and provides associated model statistics in the report.

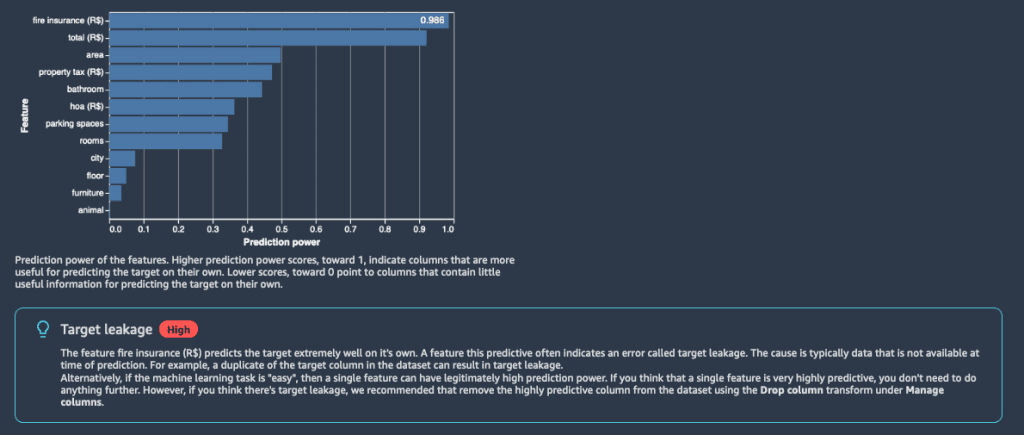

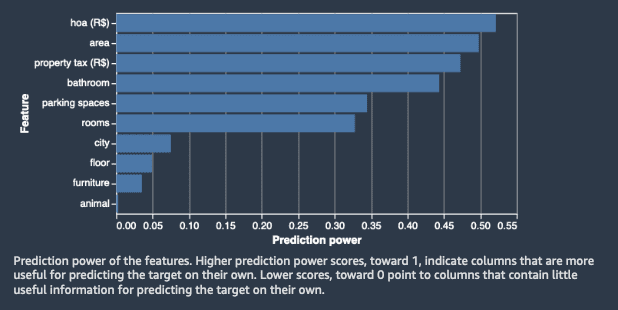

- Feature Importance – This section provides a ranking of features by feature importance which are automatically calculated when preparing the data insights and data quality report.

- Anomalous and duplicate rows – The data quality and insights report detects anomalous samples using the Isolation forest algorithm and also surfaces duplicate rows that may be present in the data set.

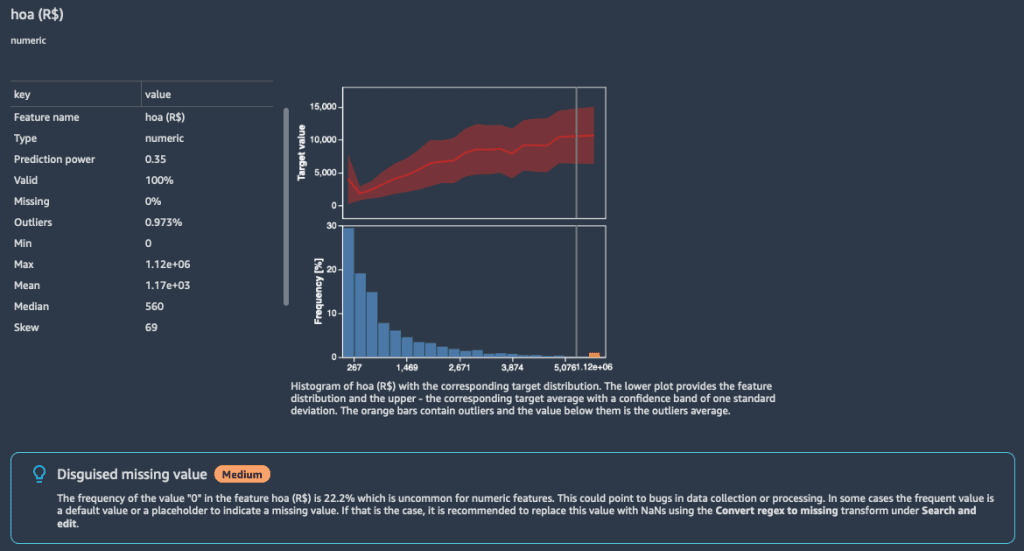

- Feature details – This section provides summary statistics for each feature in the data set as well as the corresponding distribution of the target variable.

In this post, we use the insights and recommendations of the Data Quality and Insights Report to process data by applying the suggested transformation steps without writing any code.

Get insights with the Data Quality and Insights Report

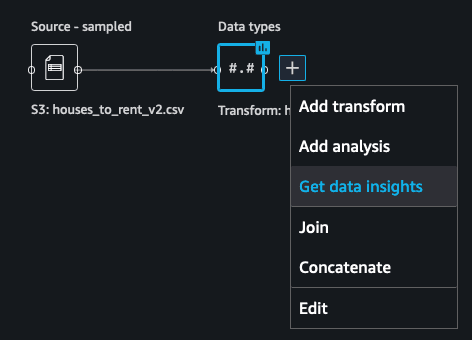

Our goal is to predict the rent cost of houses using the Brazilian houses to rent dataset. This dataset has features describing the living unit size, number of rooms, floor number, and more. To import the data set, first upload it to an Amazon Simple Storage Service (S3) bucket, and follow the steps here. After we load the dataset into Data Wrangler, we start by producing the report.

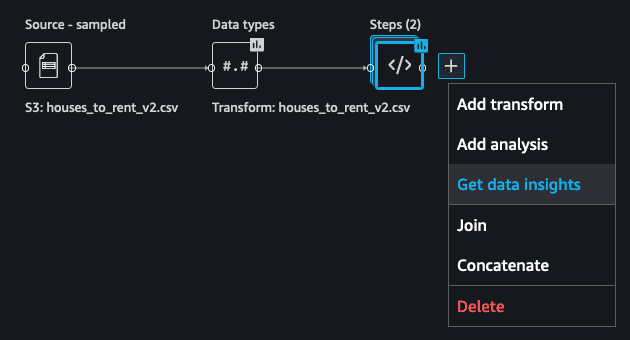

- Choose the plus sign and choose Get data insights.

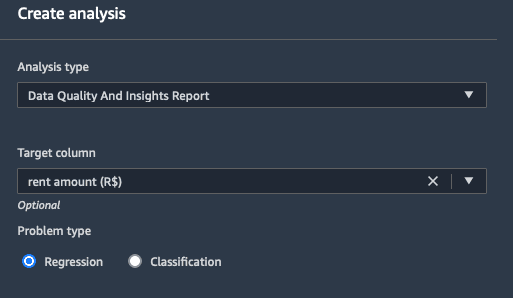

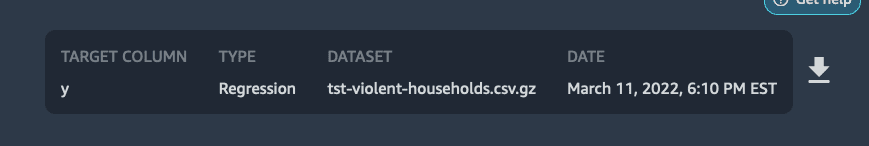

- To predict rental cost, we first choose Create analysis within the Data Wrangler data flow UI.

- For Target column, choose the column

rent amount (R$). - For Problem type, select Regression.

- Choose Start to generate the report.

If you don’t have a target column, you can still produce the report without it.

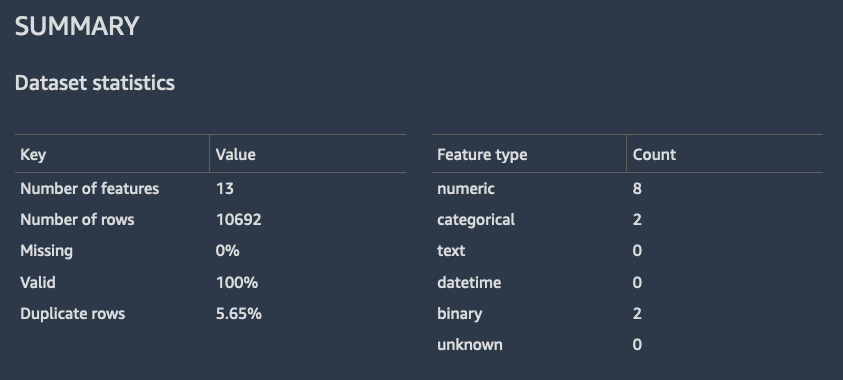

The Summary section from the generated report provides an overview of the data and displays general statistics such as the number of features, feature types, number of rows in the data, and missing and valid values.

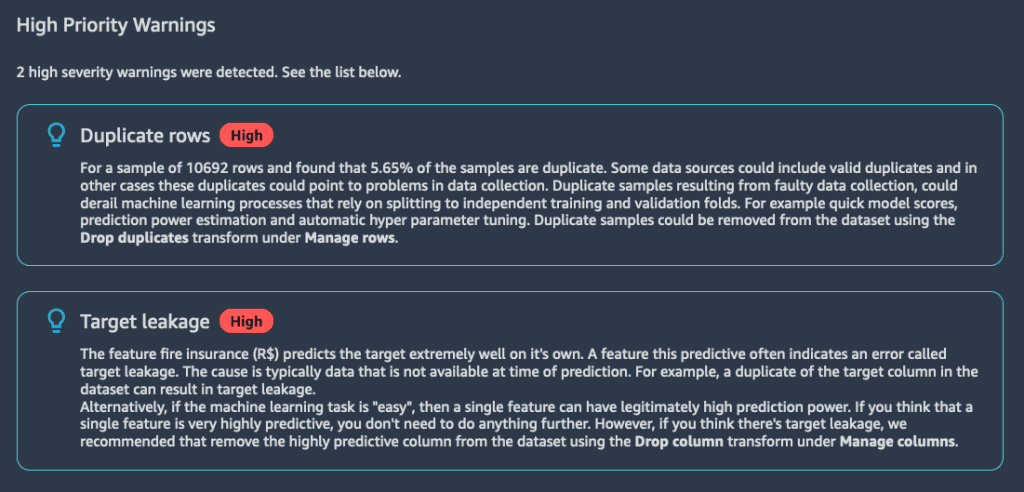

The High Priority Warnings section lists warnings for you to see. These are abnormalities in the data that could affect the quality of our ML model and should be addressed.

For your convenience, high-priority warnings are displayed at the top of the report, while other relevant warnings appear later. In our case, we found two major issues:

- The data contains many (5.65%) duplicate samples

- A feature is suspected of target leakage

You can find further information about these issues in the text that accompanies the warnings as well as suggestions on how to resolve them using Data Wrangler.

Investigate the Data Quality and Insights Report warnings

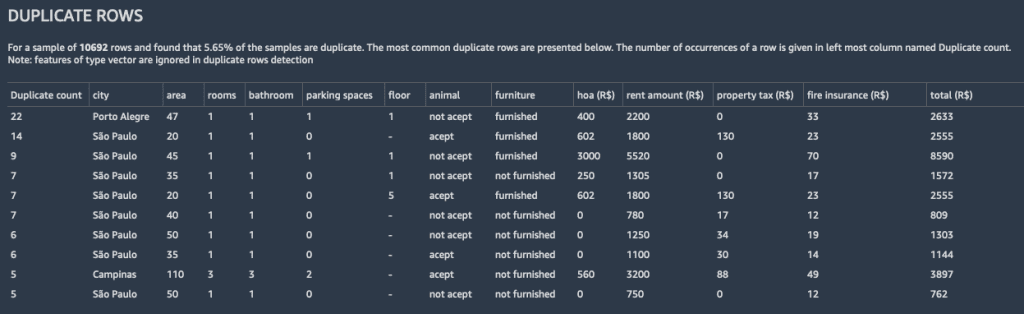

To investigate the first warning, we go to the Duplicate Rows section, where the report lists the most common duplicates. The first row in the following table shows that the data contains 22 samples of the same house in Porto Alegre.

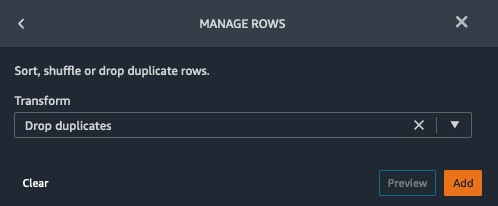

From our domain knowledge, we know that there aren’t 22 identical apartments, so we conclude that this is an artifact caused by faulty data collection. Therefore, we follow the recommendation and remove the duplicate samples using the suggested transform.

We also note the duplicate rows in the dataset because they can affect the ability to detect target leakage. The report warns us of the following:

“Duplicate samples resulting from faulty data collection, could derail machine learning processes that rely on splitting to independent training and validation folds. For example quick model scores, prediction power estimation….”

So we recheck for target leakage after resolving the duplicate rows issue.

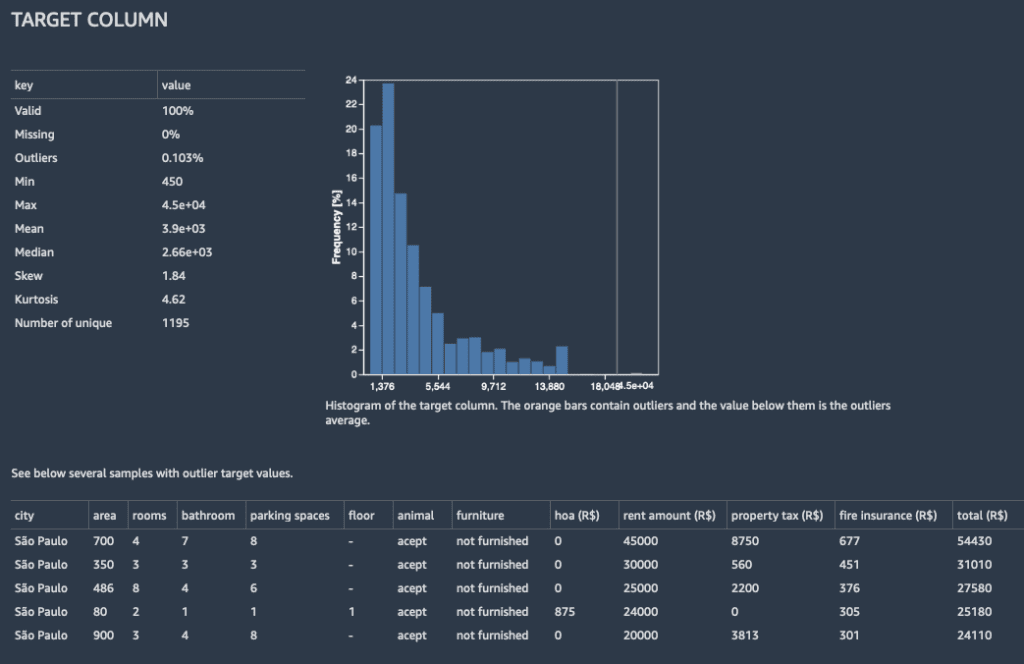

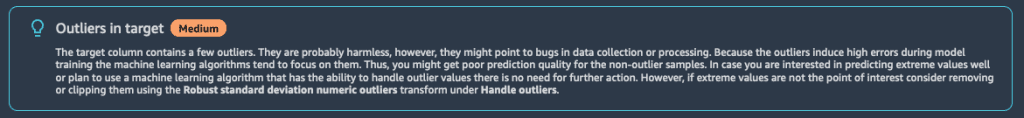

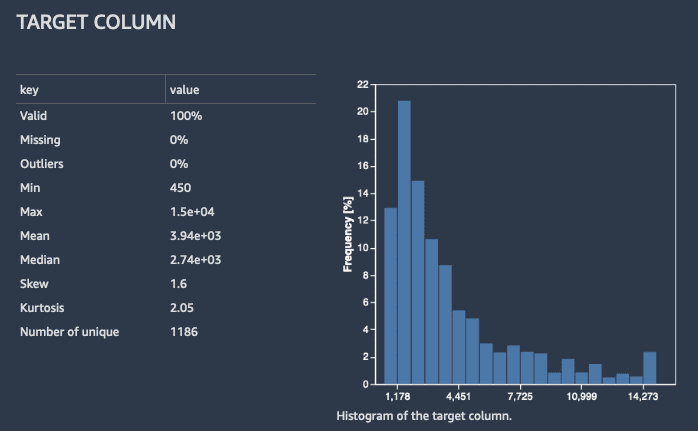

Next, we observe a medium-priority warning regarding outlier values in the Target Column section (to avoid clutter, medium-priority warnings aren’t highlighted at the top of the report).

Data Wrangler detected that 0.1% of the target column values are outliers. The outlier values are gathered into a separate bin in the histogram, and the most distant outliers are listed in a table.

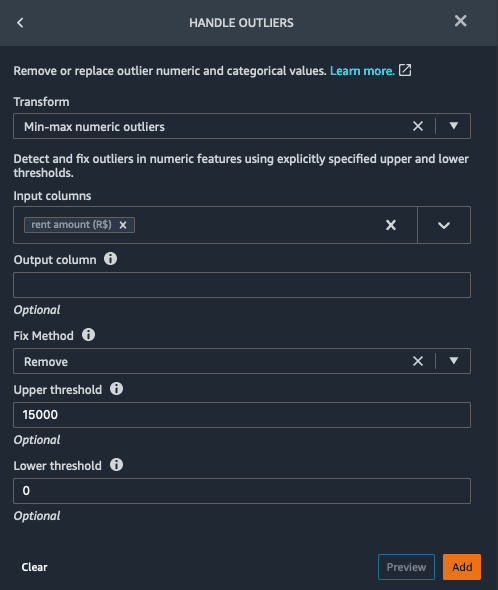

While for most houses, rent is below 15,000, a few houses have much higher rent. In our case, we’re not interested in predicting these extreme values, so we follow the recommendation to remove these outliers using the Handle outliers transform. We can get there by choosing the plus sign for the data show, then choosing Add transform. Then we add the Handle outliers transform and provide the necessary configuration.

We follow the recommendations to drop duplicate rows using the Manage rows transform and drop outliers in the target column using the Handle outliers transform.

Address additional warnings on transformed data

After we address the initial issues found in the data, we run the Data Quality and Insights Report on the transformed data.

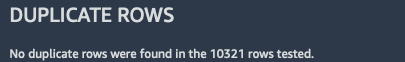

We can verify that the problems of duplicate rows and outliers in the target column were resolved.

However, the feature fire insurance (R$) is still suspected for target leakage. Furthermore, we see that the feature Total (R$) has very high prediction power. From our domain expertise, we know that is tightly coupled with rent cost, and isn’t available when making the prediction.

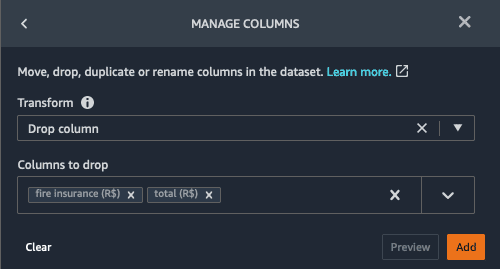

Similarly, Total (R$) is the sum of a few columns, which includes our target rent column. Therefore, Total (R$) isn’t available in prediction time. We follow the recommendation to drop these columns using the Manage columns transform.

To provide an estimate of the expected prediction quality of a model trained on the data, Data Wrangler trains an XGBoost model on a training fold and calculates prediction quality scores on a validation fold. You can find the results in the Quick Model section of the report. The resulting R2 score is almost perfect—0.997. This is another indication of target leakage—we know that rent prices can’t be predicted that well.

In the Feature Details section, the report raises a Disguised missing value warning for the hoa (R$) column (HOA stands for Home Owners Association):

“The frequency of the value 0 in the feature hoa (R$) is 22.2% which is uncommon for numeric features. This could point to bugs in data collection or processing. In some cases the frequent value is a default value or a placeholder to indicate a missing value….”

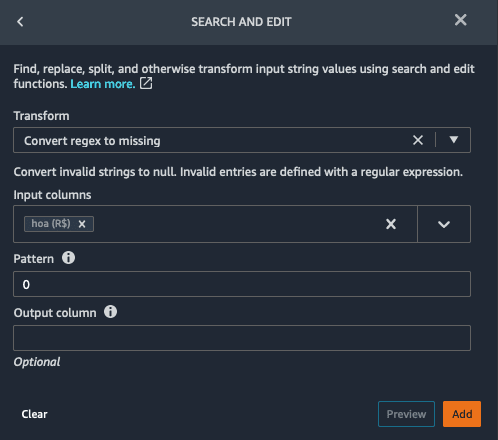

We know that HOA stands for home owner association tax, and that zeros represents missing values. It’s better to indicate that we don’t know if these homes belong to an association. Therefore, we follow the recommendation to replace the zeros with NaNs using the Convert regex to missing transform under Search and edit.

We follow the recommendation to drop the fire insurance (R$) and Total (R$) columns using the Manage columns transform and replacing zeros with NaNs for hoa (R$).

Create the final report

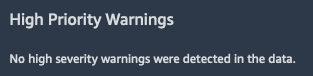

To verify that all issues are resolved, we generate the report again. First, we note that there are no high-priority warnings.

Looking again at Quick model, we see that due to removing the columns with target leakage, the validation R2 score dropped from the “too good to be true” value of 0.997 to 0.653, which is more reasonable.

Additionally, fixing hoa (R$) by replacing zeros with NaNs improved the prediction power of that column from 0.35 to 0.52.

Finally, because ML algorithms usually require numeric inputs, we encode the categorical and binary columns (see the Summary section) as ordinal integers using the Ordinal encode transform under Encode Categorical. In general, categorical variables can be encoded as one-hot or ordinal variables.

Now the data is ready for model training. We can export the processed data to an Amazon Simple Storage Service (Amazon S3) bucket or create a processing job.

Additionally, you can download the reports you generate by choosing the download icon.

Conclusion

In this post, we saw how the Data Quality and Insights Report can help you employ domain expertise and business logic to process data for ML training. Data scientists of all skillsets can find value in this report. Experienced users can also find value in these reports to speed up their work because it automatically produces various statistics and performs many checks that would otherwise be done manually.

We encourage you to replicate the example in this post in your Data Wrangler data flow to see how you can apply this to your domain and datasets. For more information on using Data Wrangler, refer to Get Started with Data Wrangler.

About the Authors

Stephanie Yuan is an SDE in the SageMaker Data Wrangler team based out of Seattle, WA. Outside of work, she enjoys going out to nature that PNW has to offer.

Stephanie Yuan is an SDE in the SageMaker Data Wrangler team based out of Seattle, WA. Outside of work, she enjoys going out to nature that PNW has to offer.

Joseph Cho is an engineer who’s built a number of web applications from bottom up using modern programming techniques. He’s driven key features into production and has a history of practical problem solving. In his spare time Joseph enjoys playing chess and going to concerts.

Joseph Cho is an engineer who’s built a number of web applications from bottom up using modern programming techniques. He’s driven key features into production and has a history of practical problem solving. In his spare time Joseph enjoys playing chess and going to concerts.

Peter Chung is a Solutions Architect for AWS, and is passionate about helping customers uncover insights from their data. He has been building solutions to help organizations make data-driven decisions in both the public and private sectors. He holds all AWS certifications as well as two GCP certifications.

Peter Chung is a Solutions Architect for AWS, and is passionate about helping customers uncover insights from their data. He has been building solutions to help organizations make data-driven decisions in both the public and private sectors. He holds all AWS certifications as well as two GCP certifications.

Yotam Elor is a Senior Applied Scientist at Amazon SageMaker. His research interests are in machine learning, particularly for tabular data.

Yotam Elor is a Senior Applied Scientist at Amazon SageMaker. His research interests are in machine learning, particularly for tabular data.

Tags: Archive

Leave a Reply