Host Hugging Face transformer models using Amazon SageMaker Serverless Inference

The last few years have seen rapid growth in the field of natural language processing (NLP) using transformer deep learning architectures. With its Transformers open-source library and machine learning (ML) platform, Hugging Face makes transfer learning and the latest transformer models accessible to the global AI community. This can reduce the time data scientists and ML engineers in companies around the world need to take advantage of every new scientific advancement. Amazon SageMaker and Hugging Face have been collaborating to simplify and accelerate adoption of transformer models with Hugging Face Deep Learning Containers (DLCs), integration with SageMaker Training Compiler, and SageMaker distributed libraries.

SageMaker provides different options for ML practitioners to deploy trained transformer models for generating inferences:

- Real-time inference endpoints, which are suitable for workloads with low latency requirements, on the order of milliseconds.

- Batch transform, which is ideal for offline predictions on large batches of data.

- Asynchronous inference, which is ideal for workloads requiring large payload size (up to 1 GB) and long inference processing times (up to 15 minutes). Asynchronous inference enables you to save on costs by auto scaling the instance count to zero when there are no requests to process.

- Serverless Inference, which is a new purpose-built inference option that makes it easy for you to deploy and scale ML models.

In this post, we explore how to use SageMaker Serverless Inference to deploy Hugging Face transformer models and discuss the inference performance and cost-effectiveness in different scenarios.

Overview of solution

AWS recently announced the general availability of SageMaker Serverless Inference, a purpose-built serverless inference option that makes it easy for you to deploy and scale ML models. With Serverless Inference, you don’t need to provision capacity or forecast usage patterns upfront. As a result, it saves you time and eliminates the need to choose instance types and manage scaling policies. Serverless Inference endpoints automatically start, scale, and shut down capacity, and you only pay for the duration of running the inference code and the amount of data processed, not for idle time. Serverless Inference is ideal for applications with less predictable or intermittent traffic patterns. Visit Serverless Inference to learn more.

Since its preview launch at AWS re:Invent 2021, we have made the following improvements:

- General Availability across all commercial Regions where SageMaker is generally available (except AWS China Regions).

- Increased maximum concurrent invocations per endpoint limit to 200 (from 50 during preview), allowing you to onboard high traffic workloads.

- Added support for the SageMaker Python SDK, abstracting away the complexity of the ML model deployment process.

- Added model registry support. You can register your models in the model registry and deploy directly to serverless endpoints. This allows you to integrate your SageMaker Serverless Inference endpoints with your MLOps workflows.

Let’s walk through how to deploy Hugging Face models on SageMaker Serverless Inference.

Deploy a Hugging Face model using SageMaker Serverless Inference

The Hugging Face framework is supported by SageMaker, and you can directly use the SageMaker Python SDK to deploy the model into the Serverless Inference endpoint by simply adding a few lines in the configuration. We use the SageMaker Python SDK in our example scripts. If you have models from different frameworks that need more customization, you can use the AWS SDK for Python (Boto3) to deploy models on a Serverless Inference endpoint. For more details, refer to Deploying ML models using SageMaker Serverless Inference (Preview).
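For the Boto3 route mentioned above, a minimal sketch might look like the following. The `ServerlessConfig` entry inside `ProductionVariants` is the part specific to Serverless Inference. The model, endpoint configuration, and endpoint names are hypothetical placeholders, and the sketch assumes a SageMaker model with that name has already been created (for example, with `create_model`).

```python
def serverless_endpoint_config(model_name, config_name,
                               memory_size_in_mb=4096, max_concurrency=10):
    """Build the CreateEndpointConfig request for a serverless variant."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                # ServerlessConfig takes the place of the instance type and
                # instance count used by instance-backed endpoints.
                "ServerlessConfig": {
                    "MemorySizeInMB": memory_size_in_mb,
                    "MaxConcurrency": max_concurrency,
                },
            }
        ],
    }


def create_serverless_endpoint(model_name, config_name, endpoint_name):
    """Create the endpoint config and the endpoint (requires AWS credentials)."""
    import boto3  # imported lazily so the helper above works without AWS access

    client = boto3.client("sagemaker")
    client.create_endpoint_config(
        **serverless_endpoint_config(model_name, config_name)
    )
    return client.create_endpoint(
        EndpointName=endpoint_name, EndpointConfigName=config_name
    )
```

Keeping the request in a plain function makes it easy to inspect or unit test the configuration before anything is created in your account.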

First, you need to create a HuggingFaceModel object. You can select the model you want to deploy on the Hugging Face Hub; for example, distilbert-base-uncased-finetuned-sst-2-english. This model is a checkpoint of DistilBERT-base-uncased, fine-tuned on SST-2. See the following code:
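A minimal sketch of this step with the SageMaker Python SDK could look like the following. The `HF_MODEL_ID` and `HF_TASK` environment variables tell the Hugging Face inference container which Hub model to load and for which task. The version strings are assumptions chosen for illustration; pick a combination that matches an available Hugging Face DLC.

```python
MODEL_ID = "distilbert-base-uncased-finetuned-sst-2-english"


def hub_environment(model_id=MODEL_ID, task="text-classification"):
    """Environment variables that point the container at a Hub model."""
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}


def build_huggingface_model(role=None):
    """Create the HuggingFaceModel (requires the sagemaker SDK and an IAM role)."""
    # Imported lazily so hub_environment() can be used without the SDK installed.
    import sagemaker
    from sagemaker.huggingface import HuggingFaceModel

    return HuggingFaceModel(
        env=hub_environment(),                        # model and task from the Hub
        role=role or sagemaker.get_execution_role(),  # IAM role with SageMaker permissions
        transformers_version="4.17",  # assumed version, adjust to an available DLC
        pytorch_version="1.10",       # assumed version
        py_version="py38",            # assumed version
    )
```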

After you add the HuggingFaceModel class, you need to define the ServerlessInferenceConfig, which contains the configuration of the Serverless Inference endpoint, namely the memory size and the maximum number of concurrent invocations for the endpoint. After that, you can deploy the Serverless Inference endpoint with the deploy method:
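The configuration and deployment step can be sketched as follows. The 4096 MB memory size and a maximum concurrency of 10 are example values, not recommendations, and `check_serverless_settings` is a hypothetical helper that only encodes the limits stated in this post (1024 to 6144 MB of memory, up to 200 concurrent invocations per endpoint).

```python
def check_serverless_settings(memory_size_in_mb, max_concurrency):
    """Reject settings outside the limits described in this post."""
    if not 1024 <= memory_size_in_mb <= 6144:
        raise ValueError("memory_size_in_mb must be between 1024 and 6144")
    if not 1 <= max_concurrency <= 200:
        raise ValueError("max_concurrency must be between 1 and 200")


def deploy_serverless(model, memory_size_in_mb=4096, max_concurrency=10):
    """Deploy a SageMaker model object to a Serverless Inference endpoint."""
    # Imported lazily so the check above can run without the SDK installed.
    from sagemaker.serverless import ServerlessInferenceConfig

    check_serverless_settings(memory_size_in_mb, max_concurrency)
    serverless_config = ServerlessInferenceConfig(
        memory_size_in_mb=memory_size_in_mb,
        max_concurrency=max_concurrency,
    )
    # No instance type or instance count is passed for a serverless endpoint.
    return model.deploy(serverless_inference_config=serverless_config)
```

With a `HuggingFaceModel` in hand, `predictor = deploy_serverless(huggingface_model)` returns a predictor, and a call such as `predictor.predict({"inputs": "I love this new feature."})` sends a request to the endpoint. Remember to call `predictor.delete_endpoint()` when you are done experimenting.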

This post covers the sample code snippet for deploying the Hugging Face model on a Serverless Inference endpoint. For a step-by-step Python code sample with detailed instructions, refer to the following notebook.

Inference performance

SageMaker Serverless Inference greatly simplifies hosting transformer and other deep learning models. However, its runtime can vary depending on the configuration. You can choose a Serverless Inference endpoint memory size from 1024 MB (1 GB) up to 6144 MB (6 GB). In general, memory size should be at least as large as the model size. However, it’s a good practice to also look at memory utilization when deciding the endpoint memory size, rather than relying on the model size alone.
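As a rough illustration of the sizing rule above, the following sketch picks the smallest memory setting that is at least as large as the model. It assumes memory can be configured in 1 GB steps between the stated minimum and maximum, and the `headroom` multiplier is a hypothetical knob for leaving room for the framework and request buffers. Treat the result as a lower bound and confirm it against observed memory utilization.

```python
# Assumed configurable sizes: 1 GB steps from 1024 MB to 6144 MB.
MEMORY_TIERS_MB = (1024, 2048, 3072, 4096, 5120, 6144)


def smallest_memory_tier(model_size_mb, headroom=1.0):
    """Return the smallest tier that fits model_size_mb * headroom."""
    needed = model_size_mb * headroom
    for tier in MEMORY_TIERS_MB:
        if tier >= needed:
            return tier
    raise ValueError(
        f"{needed:.0f} MB exceeds the largest serverless memory size "
        f"({MEMORY_TIERS_MB[-1]} MB)"
    )
```

For example, a 2.09 GB model (about 2140 MB) maps to 3072 MB with no headroom and to 5120 MB with `headroom=2.0`.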

Serverless Inference integrates with AWS Lambda to offer high availability, built-in fault tolerance, and automatic scaling. Although Serverless Inference auto-assigns compute resources proportional to the model size, a larger memory size implies the container would have access to more vCPUs and have more compute power. However, as of this writing, Serverless Inference only supports CPUs and does not support GPUs.

On the other hand, with Serverless Inference, you can expect cold starts when the endpoint has no traffic for a while and suddenly receives inference requests, because it would take some time to spin up the compute resources. Cold starts may also happen during scaling, such as if new concurrent requests exceed the previous concurrent requests. The duration for a cold start varies from under 100 milliseconds to a few seconds, depending on the model size, how long it takes to download the model, as well as the startup time of the container. For more information about monitoring, refer to Monitor Amazon SageMaker with Amazon CloudWatch.
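To observe latency (including the effect of cold starts) on your own endpoint, one option is to query the `ModelLatency` and `OverheadLatency` metrics that SageMaker publishes to CloudWatch. The following is a minimal sketch. The endpoint name is a placeholder, and the `AllTraffic` variant name assumes the default used by the SageMaker Python SDK. Both metrics are reported in microseconds, so the sketch converts them to milliseconds.

```python
from datetime import datetime, timedelta, timezone


def latency_query(endpoint_name, metric_name, hours=1, variant_name="AllTraffic"):
    """Build a GetMetricStatistics request for the p99 of a latency metric."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/SageMaker",
        "MetricName": metric_name,  # "ModelLatency" or "OverheadLatency"
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # 5-minute buckets
        # Percentiles go in ExtendedStatistics; the API does not accept
        # Statistics and ExtendedStatistics in the same call.
        "ExtendedStatistics": ["p99"],
    }


def fetch_p99_ms(endpoint_name, metric_name):
    """Return p99 latency per period in milliseconds (requires AWS credentials)."""
    import boto3  # imported lazily so latency_query() works without AWS access

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        **latency_query(endpoint_name, metric_name)
    )
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [p["ExtendedStatistics"]["p99"] / 1000.0 for p in points]
```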

In the following table, we summarize the results of experiments performed to collect latency information of Serverless Inference endpoints over various Hugging Face models and different endpoint memory sizes. The input was a payload with a sequence length of 128. Model latency tended to increase with model size, but the exact figures are specific to each model and task. The overall model latency could be improved in various ways, including reducing the model size, writing a better inference script, loading the model more efficiently, and applying quantization.

| Model-Id | Task | Model Size | Memory Size | Model Latency Average | Model Latency p99 | Overhead Latency Average | Overhead Latency p99 |
|---|---|---|---|---|---|---|---|
| distilbert-base-uncased-finetuned-sst-2-english | Text classification | 255 MB | 4096 MB | 220 ms | 243 ms | 17 ms | 43 ms |
| xlm-roberta-large-finetuned-conll03-english | Token classification | 2.09 GB | 5120 MB | 1494 ms | 1608 ms | 18 ms | 46 ms |
| deepset/roberta-base-squad2 | Question answering | 473 MB | 5120 MB | 451 ms | 468 ms | 18 ms | 31 ms |
| google/pegasus-xsum | Summarization | 2.12 GB | 6144 MB | 22501 ms | 32516 ms | 27 ms | 97 ms |
| sshleifer/distilbart-cnn-12-6 | Summarization | 1.14 GB | 6144 MB | 12683 ms | 18669 ms | 25 ms | 53 ms |

Regarding price performance, we can use the DistilBERT model as an example and compare the Serverless Inference option to an ml.t2.medium instance (2 vCPUs, 4 GB memory, $42 per month) on a real-time endpoint. With the real-time endpoint, DistilBERT has a p99 latency of 240 milliseconds and a total request latency of 251 milliseconds. By comparison, the preceding table shows that Serverless Inference has a model latency p99 of 243 milliseconds and an overhead latency p99 of 43 milliseconds. With Serverless Inference, you only pay for the compute capacity used to process inference requests, billed by the millisecond, and the amount of data processed. Therefore, we can estimate the cost of running a DistilBERT model (distilbert-base-uncased-finetuned-sst-2-english, with price estimates for the us-east-1 Region) as follows:

- Total request time – 243ms + 43ms = 286ms

- Compute cost (4096 MB) – $0.000080 per second

- 1 request cost – 1 * 0.286 * $0.000080 = $0.00002288

- 1 thousand requests cost – 1,000 * 0.286 * $0.000080 = $0.02288

- 1 million requests cost – 1,000,000 * 0.286 * $0.000080 = $22.88
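The arithmetic above can be reproduced, and extended to a break-even estimate against the $42 per month ml.t2.medium instance, with a short sketch like the following. It uses only the figures quoted in this post, bills every request at the p99 request time (a conservative assumption), and ignores the data processing charge, so treat the output as an approximation.

```python
PRICE_PER_SECOND_4096_MB = 0.000080   # USD per second, from the estimate above
REQUEST_SECONDS = (243 + 43) / 1000   # model latency p99 + overhead latency p99
REALTIME_MONTHLY_USD = 42.0           # ml.t2.medium figure quoted in this post


def serverless_cost(requests,
                    seconds_per_request=REQUEST_SECONDS,
                    price_per_second=PRICE_PER_SECOND_4096_MB):
    """Estimated compute cost in USD for a number of requests."""
    return requests * seconds_per_request * price_per_second


def break_even_requests(monthly_instance_cost=REALTIME_MONTHLY_USD):
    """Monthly request count at which serverless matches the instance cost."""
    return monthly_instance_cost / serverless_cost(1)


if __name__ == "__main__":
    for n in (1, 1_000, 1_000_000):
        print(f"{n:>9,} requests: ${serverless_cost(n):.8f}")
    print(f"Break-even: about {break_even_requests():,.0f} requests per month")
```

Under these assumptions, the break-even point is roughly 1.8 million requests per month: below that volume, the serverless estimate is lower than the fixed monthly instance cost.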

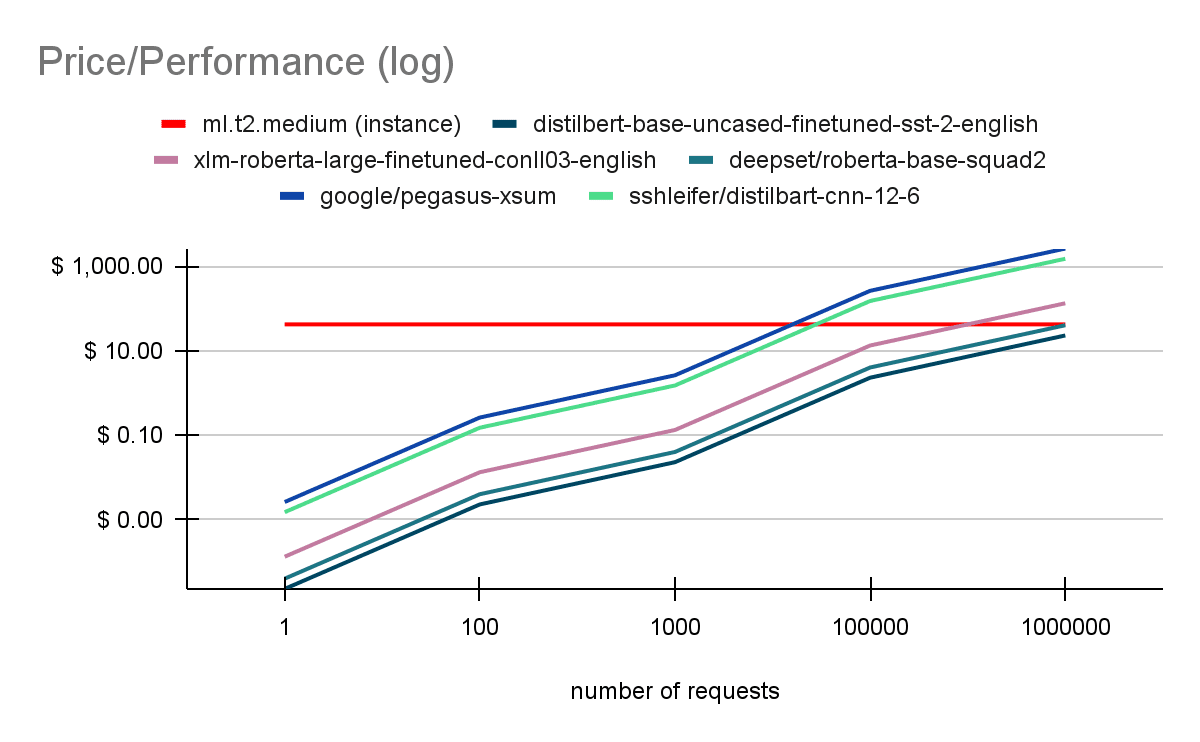

The following figure is the cost comparison for different Hugging Face models on SageMaker Serverless Inference vs. real-time inference (using a ml.t2.medium instance).

Figure 1: Cost comparison for different Hugging Face models on SageMaker Serverless Inference vs. real-time inference

As you can see, Serverless Inference is a cost-effective option when the traffic is intermittent or low. If you have a strict inference latency requirement, consider using real-time inference with higher compute power.

Conclusion

Hugging Face and AWS announced a partnership earlier in 2022 that makes it even easier to train Hugging Face models on SageMaker. This functionality is available through the development of Hugging Face AWS DLCs. These containers include the Hugging Face Transformers, Tokenizers, and Datasets libraries, which allow us to use these resources for training and inference jobs. For a list of the available DLC images, see Available Deep Learning Containers Images. They are maintained and regularly updated with security patches. We can find many examples of how to train Hugging Face models with these DLCs in the following GitHub repo.

In this post, we introduced how you can use the newly announced SageMaker Serverless Inference to deploy Hugging Face models. We provided a detailed code snippet using the SageMaker Python SDK to deploy Hugging Face models with SageMaker Serverless Inference. We then dived deep into inference latency over various Hugging Face models as well as price performance. You are welcome to try this new service and we’re excited to receive more feedback!

About the Authors

James Yi is a Senior AI/ML Partner Solutions Architect in the Emerging Technologies team at Amazon Web Services. He is passionate about working with enterprise customers and partners to design, deploy and scale AI/ML applications to derive their business values. Outside of work, he enjoys playing soccer, traveling and spending time with his family.

Rishabh Ray Chaudhury is a Senior Product Manager with Amazon SageMaker, focusing on Machine Learning inference. He is passionate about innovating and building new experiences for Machine Learning customers on AWS to help scale their workloads. In his spare time, he enjoys traveling and cooking. You can find him on LinkedIn.

Philipp Schmid is a Machine Learning Engineer and Tech Lead at Hugging Face, where he leads the collaboration with the Amazon SageMaker team. He is passionate about democratizing, optimizing, and productionizing cutting-edge NLP models and improving the ease of use for Deep Learning.

Yanyan Zhang is a Data Scientist in the Energy Delivery team with AWS Professional Services. She is passionate about helping customers solve real problems with AI/ML knowledge. Outside of work, she loves traveling, working out and exploring new things.
