Open Source AI: Establishing a common ground

The current draft v. 0.0.3 of the Open Source AI Definition borrows wording from the GNU Manifesto’s golden rule, which states:

If I like a program, I must be able to share it with others who like it.

The GNU Manifesto

The GNU Manifesto refers to “program” (not “AI system”), without the need to define it. When it was published in 1985, the definition of a program was pretty clear. Today’s scene around artificial intelligence is not as clear and there are multiple definitions for AI systems floating around.

The process of finding a shared definition of Open Source AI is only in its infancy. I’m fully aware that for many of us here this is trivial and this phase is almost boring.

But the four workshops revealed that a significant number of people in the rooms neither knew the 4 Freedoms nor had any idea that OSI has a formal Open Source Definition. And this happened at two Open Source-focused events, too!

Which definition of AI system to adopt

I don’t think the Open Source community should write its own definition of an AI system, as there are too many dangers in doing that. Most importantly, adopting a vocabulary foreign to the AI world increases the risk of not being understood or accepted. Using a widely adopted definition is a lot more effective and will be more palatable.

The OECD definition of AI system

The Organisation for Economic Co-operation and Development (OECD) published a definition in 2019 and updated it in November 2023. The OECD’s definition has been adopted by the United Nations and NIST, and the AI Act may use it too.

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment

Recommendation of the Council on Artificial Intelligence Adopted on: 22/05/2019; Amended on: 08/11/2023

I discovered a 2022 OECD document with a definition slightly amended from the 2019 one. The 2022 OECD Framework for the Classification of AI systems removes the words “or decisions” from the previous definition, saying in note 5:

Experts Working Group decided [“or decisions”] should be excluded here to clarify that an AI system does not make an actual decision, which is the remit of human creators and outside the scope of the AI system

The updated definition used by the Experts WG is:

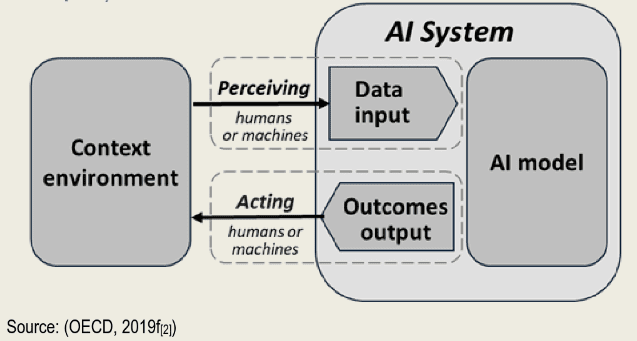

An AI system is a machine-based system that is capable of influencing the environment by producing recommendations, predictions or other outcomes for a given set of objectives. It uses machine and/or human-based inputs/data to:

- perceive environments;

- abstract these perceptions into models; and

- use the models to formulate options for outcomes.

AI systems are designed to operate with varying levels of autonomy (OECD, 2019f[2]).

Surprisingly, the version amended in November 2023 by the OECD still uses the words “or decisions”.

The definition of AI system for the US National Institute of Standards and Technology (NIST)

The NIST AI Risk Management Framework uses a slightly modified version of the OECD definition that includes the word “outputs”:

The AI RMF refers to an AI system as an engineered or machine-based system that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy (Adapted from: OECD Recommendation on AI:2019; ISO/IEC 22989:2022)

The definition of AI system in Europe

To complete the picture, I also looked at the EU. In a document from 2019, in the early days of the legislative process, the expert group on AI suggested the following (see https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence):

Artificial intelligence (AI) systems are software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model, and they can also adapt their behaviour by analysing how the environment is affected by their previous actions.

As a scientific discipline, AI includes several approaches and techniques, such as machine learning (of which deep learning and reinforcement learning are specific examples), machine reasoning (which includes planning, scheduling, knowledge representation and reasoning, search, and optimization), and robotics (which includes control, perception, sensors and actuators, as well as the integration of all other techniques into cyber-physical systems).

High-Level expert group on AI: Ethics guidelines for trustworthy AI

It’s worth noting that this definition is not used in the AI Act. The text of the EU Council suggests this one be used:

‘artificial intelligence system’ (AI system) means a system that

- receives machine and/or human-based data and inputs,

- infers how to achieve a given set of human-defined objectives using learning, reasoning or modelling implemented with the techniques and approaches listed in Annex I, and

- generates outputs in the form of content (generative AI systems), predictions, recommendations or decisions, which influence the environments it interacts with;

which seems to be quite similar to the OECD text.

Why we need to adopt a definition of AI system

There is agreement that the Open Source AI Definition needs to cover all AI implementations and not be specific to machine learning, deep learning, computer vision or other branches. That requires using a generic term. For software, the word “program” covers everything, from assembly to interpreted and compiled languages. “AI system” is the equivalent in the context of artificial intelligence.

“Program” is to software as “AI system” is to artificial intelligence.

In the document What is Free Software, the GNU project describes four fundamental freedoms that the “program” must carry to its users. Draft v. 0.0.3 similarly describes four freedoms that the AI system needs to deliver to its users.

In draft v. 0.0.3 there was debate on the wording of freedom #3, the freedom to modify. For software, that’s the freedom to modify the program to better serve the user’s needs, fix bugs, etc. Draft v. 0.0.3 says:

Modify the system to change its recommendations, predictions or decisions to adapt to your needs.

The intention behind specifying the object of the change is to establish the principle that anyone should have the right to modify the behavior of the AI system as a whole. The words “recommendations, predictions or decisions” come from the definition of AI system: what does the “system” do and what would I want to modify?

That’s why it’s important to say what it is we expect to have the right to modify. Tying that to an agreed-upon definition of what an AI system does is a way to make sure that all readers are on the same page.

We can change the wording of that bullet point, but I think the verb “modify” should refer to the whole system, not individual components.

We’re trying to adopt a definition of an AI system that is widely understood and accepted, even though it’s not strictly correct scientifically. The Open Source AI Definition should align with other policy documents because many communities (legal, policy makers and even academia) will have to align too.

The newest definition of AI system from the OECD is the best candidate, without the words “or decisions.”

Next steps

I met with the Digital Public Goods Alliance in Addis Ababa on November 14. I expected to encounter a different assortment of competences than the ones I’ve met so far, and that was true. How far we are from consensus on basic principles is something I’m contemplating before releasing draft v. 0.0.4 and moving on to the next phase of public conversations. For 2024 we’re planning a regular cadence of meetings (online and in-person) and a release roadmap leading to a v. 1.0 before the end of the year. More to come.

The post Open Source AI: Establishing a common ground appeared first on Voices of Open Source.
