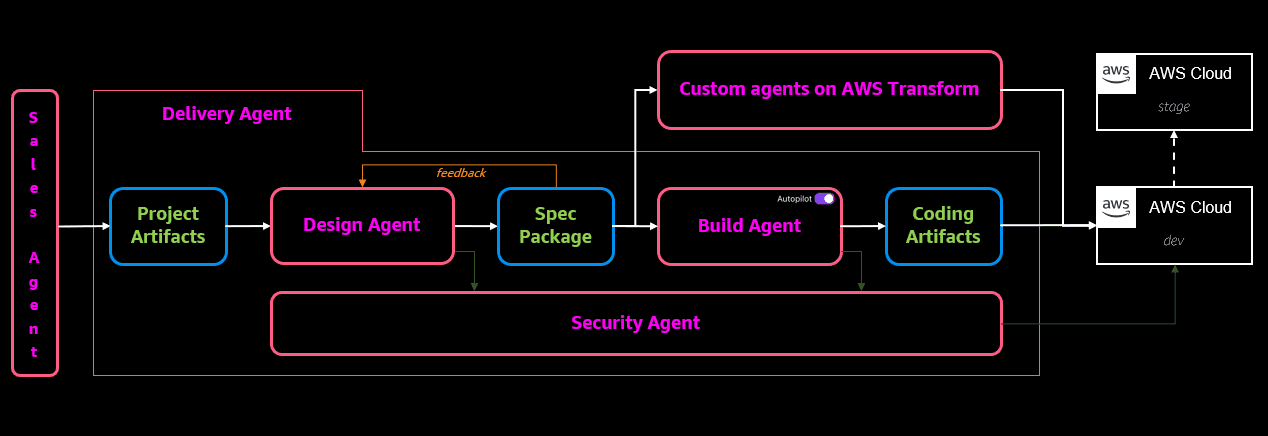

Accelerate enterprise solutions with agentic AI-powered consulting: Introducing AWS Professional Service Agents

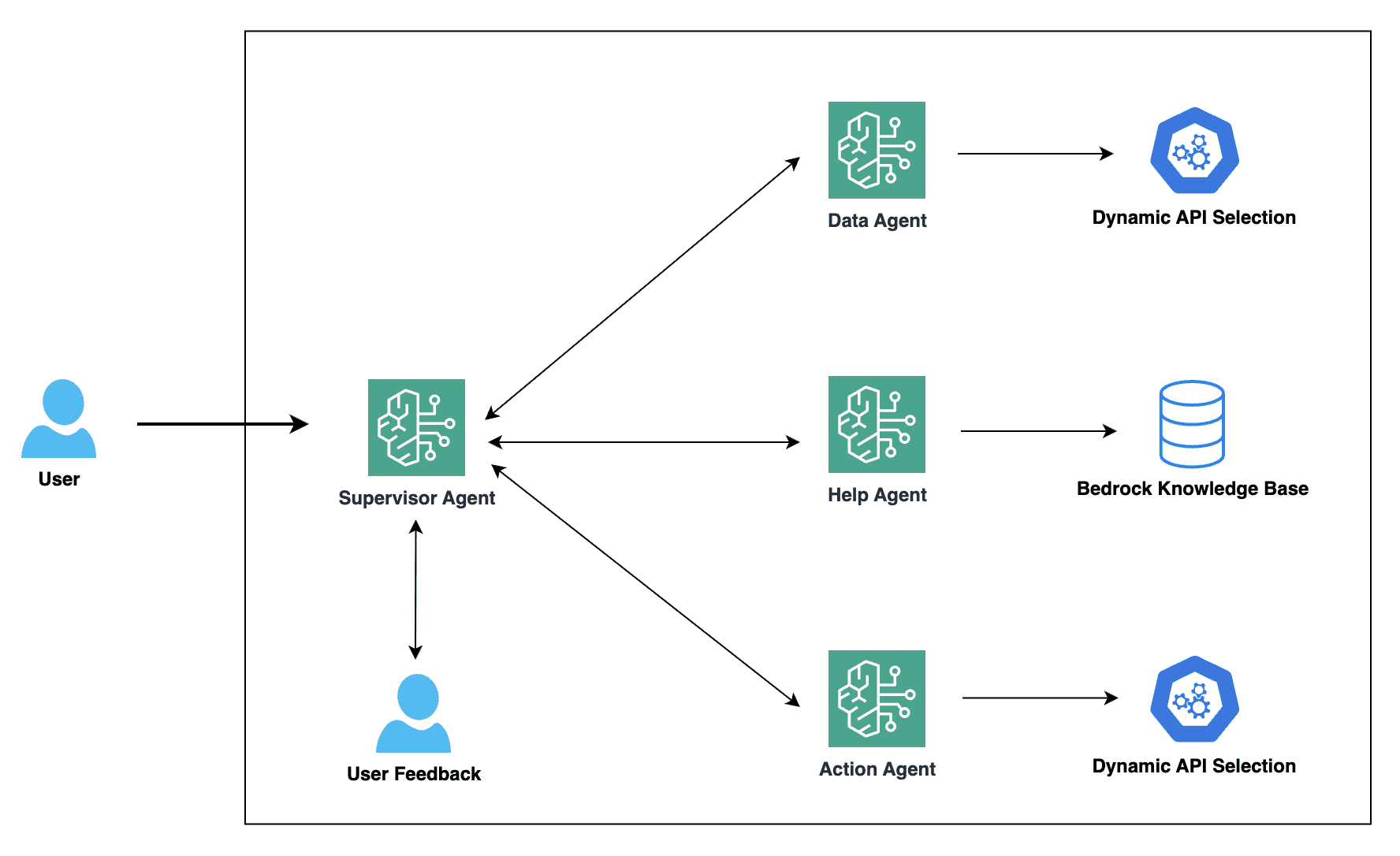

AWS Professional Services set out to help organizations accelerate their cloud adoption with expert guidance and proven methodologies. Today, we’re at a pivotal moment in consulting. Just as cloud computing transformed how enterprises build technology, agentic AI is transforming how consulting services deliver value. We believe in a future

Shared by AWS Machine Learning, November 17, 2025