Here’s how Google’s AI products and features can help students, educators, parents and guardians have their best back-to-school season yet. Source: blog.google/technology/ai/

Share your thoughts about draft v0.0.9: @mkai raised concerns about how OSI will address AI-generated content from both open- and closed-source models, given current legal rulings that such content cannot be copyrighted. He also suggested clarifying the difference between licenses for AI model parameters and licenses for the model itself.

Shared by voicesofopensource September 2, 2024

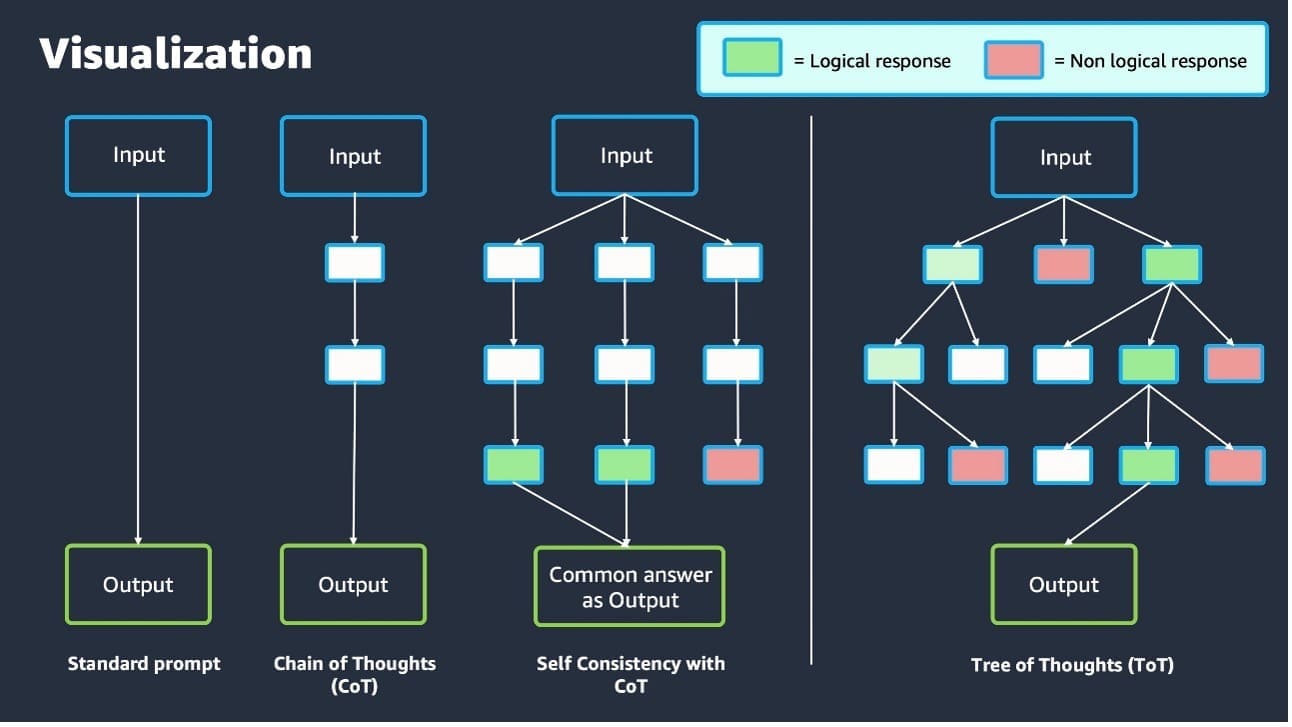

Despite the ability of generative artificial intelligence (AI) to mimic human behavior, it often requires detailed instructions to generate high-quality and relevant content. Prompt engineering is the process of crafting these inputs, called prompts, that guide foundation models (FMs) and large language models (LLMs) to produce desired outputs. Prompt …

Shared by AWS Machine Learning August 31, 2024

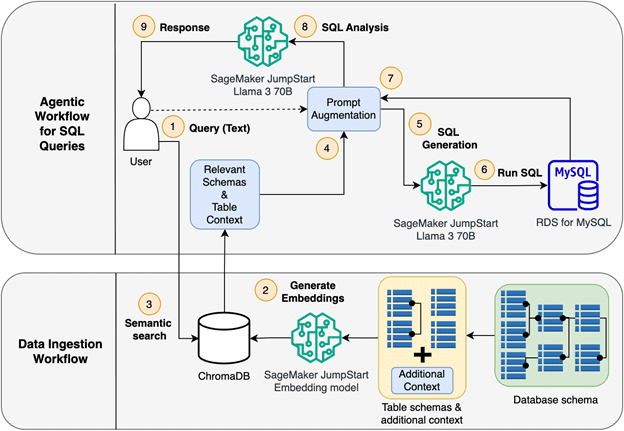

With the rapid growth of generative artificial intelligence (AI), many AWS customers are looking to take advantage of publicly available foundation models (FMs) and technologies. This includes Meta Llama 3, Meta’s publicly available large language model (LLM). The partnership between Meta and Amazon signifies collective generative AI innovation, and …

Shared by AWS Machine Learning August 31, 2024

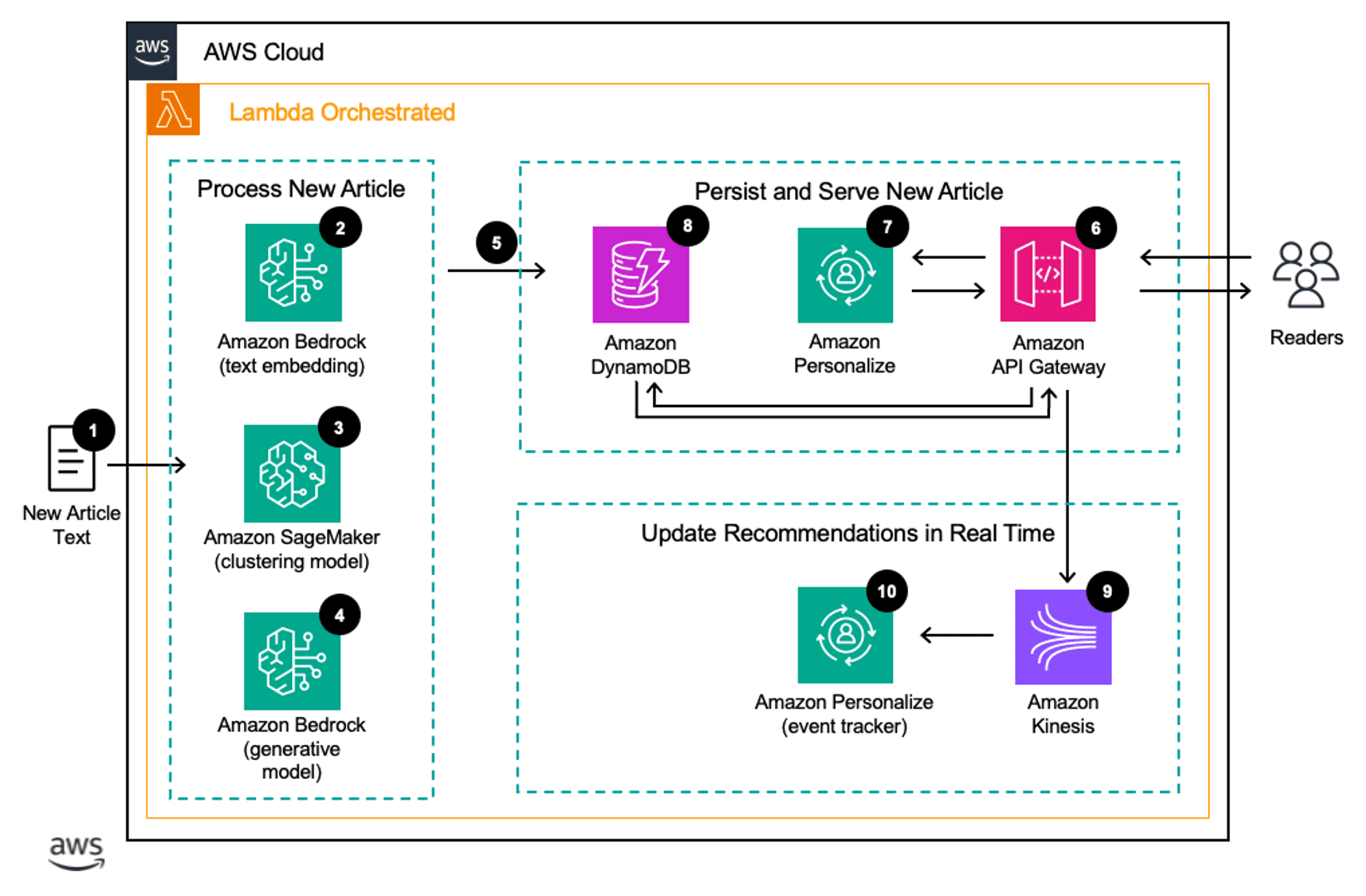

News publishers want to provide a personalized and informative experience to their readers, but the short shelf life of news articles can make this quite difficult. In news publishing, articles typically reach peak readership on the day they are published. Additionally, news publishers frequently publish new articles and want …

Shared by AWS Machine Learning August 30, 2024

The AWS DeepRacer League is the world’s first autonomous racing league, open to everyone and powered by machine learning (ML). AWS DeepRacer brings builders together from around the world, creating a community where you learn ML hands-on through friendly autonomous racing competitions. As we celebrate the achievements of over …

Shared by AWS Machine Learning August 30, 2024

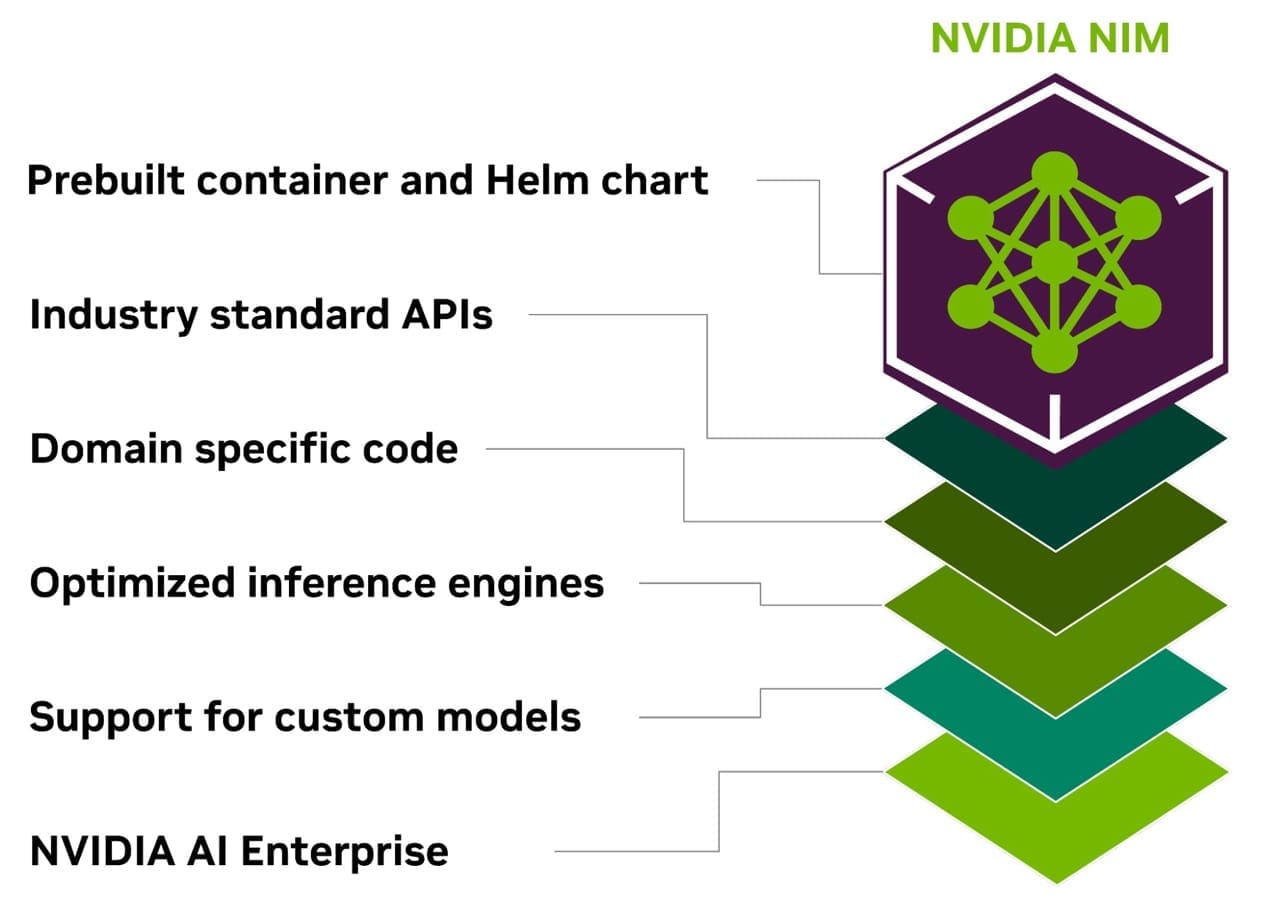

This post is co-written with Eliuth Triana, Abhishek Sawarkar, Jiahong Liu, Kshitiz Gupta, JR Morgan and Deepika Padmanabhan from NVIDIA. At the 2024 NVIDIA GTC conference, we announced support for NVIDIA NIM Inference Microservices in Amazon SageMaker Inference. This integration allows you to deploy industry-leading large language models (LLMs) on …

Shared by AWS Machine Learning August 30, 2024

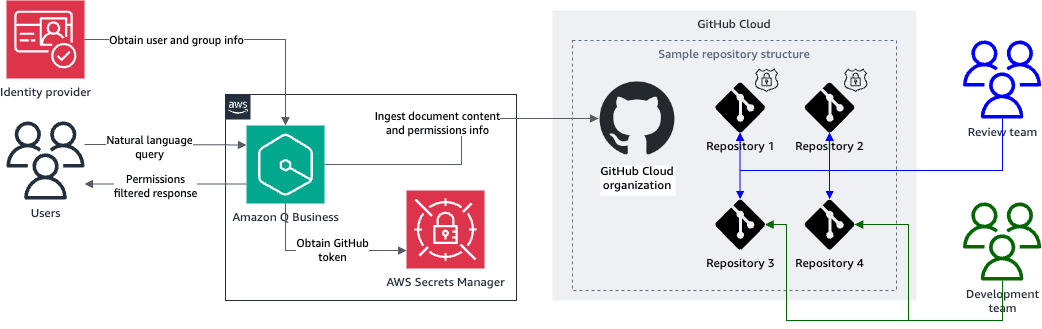

Retrieval Augmented Generation (RAG) is a state-of-the-art approach to building question answering systems that combines the strengths of retrieval and generative language models. RAG models retrieve relevant information from a large corpus of text and then use a generative language model to synthesize an answer based on the retrieved …

Shared by AWS Machine Learning August 29, 2024
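
The retrieve-then-generate flow summarized in the RAG excerpt can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the approach from the AWS post: it assumes a toy term-frequency cosine retriever in place of a real embedding model, and it stops at assembling the grounded prompt instead of calling a language model. The functions `retrieve` and `build_prompt` and the three-passage corpus are illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Retrieval step (hypothetical toy retriever): rank passages by
    # similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Input to the generation step: the retrieved passages go into the
    # prompt so a language model can ground its answer in them.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


corpus = [
    "AWS DeepRacer is an autonomous racing league powered by machine learning.",
    "Meta Llama 3 is a publicly available large language model.",
    "NVIDIA NIM microservices can be deployed with Amazon SageMaker Inference.",
]

query = "Which large language model is publicly available?"
top = retrieve(query, corpus, k=1)
print(build_prompt(query, top))
```

In a production system, the retriever would more likely query a vector index built from embeddings, and the assembled prompt would be sent to an LLM. Both are outside the scope of this sketch.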

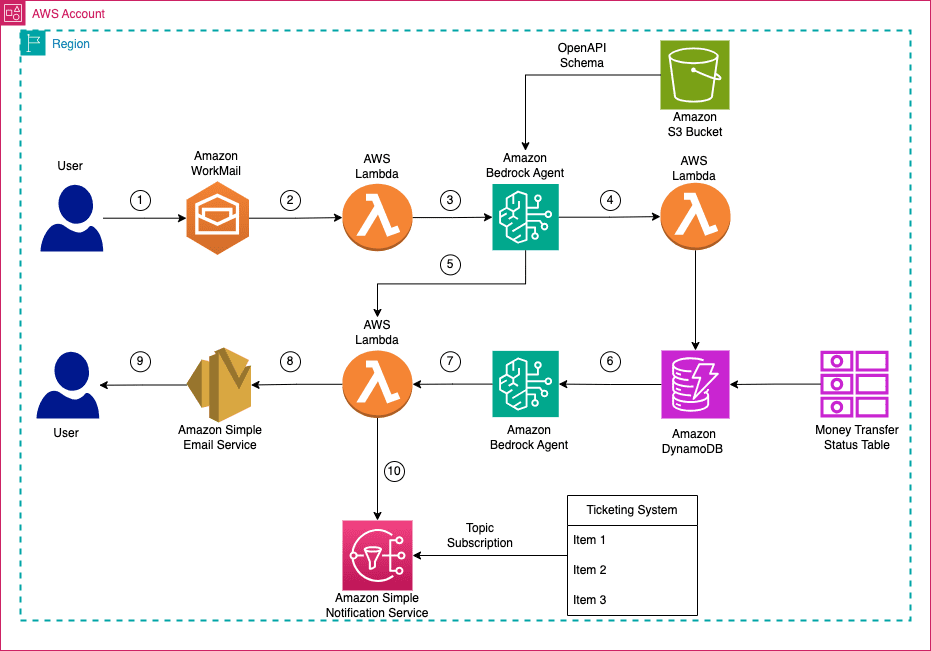

Organizations spend significant resources, effort, and money running their customer care operations to answer customer questions and provide solutions. Your customers may ask questions through various channels, such as email, chat, or phone, and deploying a workforce to answer those queries can be resource-intensive, time-consuming, …

Shared by AWS Machine Learning August 29, 2024

Incorporating generative artificial intelligence (AI) into your development lifecycle can offer several benefits. For example, using an AI-based coding companion such as Amazon Q Developer can boost development productivity by up to 30 percent. Additionally, reducing the developer context switching that stems from frequent interactions with many different development …

Shared by AWS Machine Learning August 29, 2024