The latest episode of the Google AI: Release Notes podcast focuses on how the Gemini team built one of the world's leading AI coding models. Host Logan Kilpatrick chats w… Original source: blog.google/technology/ai/.

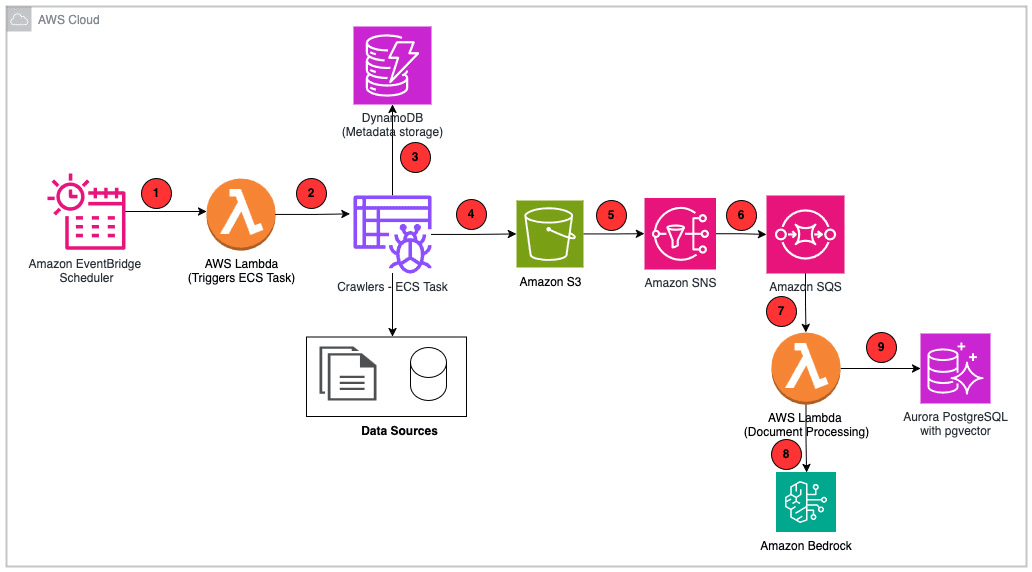

This post is cowritten by Nikhil Mathugar, Marc Breslow and Sudhanshu Sinha from Totogi. It describes how Totogi automates change request processing. Totogi is an AI company focused on helping telecom (telco) companies innovate, accelerate growth and adopt AI at scale. BSS Magic, Totogi's flagship product,

Shared by AWS Machine Learning January 27, 2026

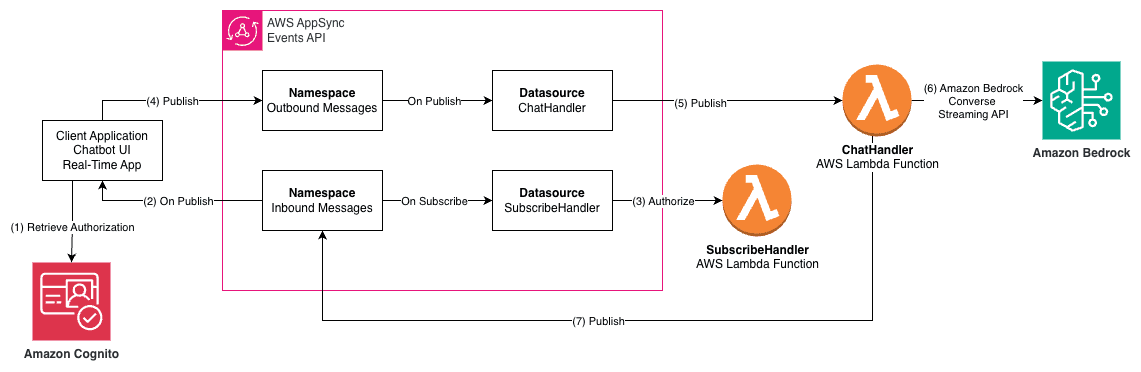

AWS AppSync Events can help you create more secure, scalable WebSocket APIs. In addition to broadcasting real-time events to millions of WebSocket subscribers, it supports a crucial user experience requirement of your AI Gateway: low-latency propagation of events from your chosen generative AI models to individual users. In this

Shared by AWS Machine Learning January 27, 2026

Today, our animated short film, "Dear Upstairs Neighbors," previews at the Sundance Film Festival. Original source: blog.google/technology/ai/.

Open Source software plays a central role in global innovation, research, and economic growth. That statement is familiar to anyone working in technology, but the scale of its impact is still startling. A 2024 Harvard-backed study estimates that the demand-side value of the Open Source ecosystem is approximately $8.8

Shared by voicesofopensource January 26, 2026

The Amazon.com Catalog is the foundation of every customer's shopping experience: the definitive source of product information with attributes that power search, recommendations, and discovery. When a seller lists a new product, the catalog system must extract structured attributes (dimensions, materials, compatibility, and technical specifications) while generating content such as titles that

Shared by AWS Machine Learning January 24, 2026

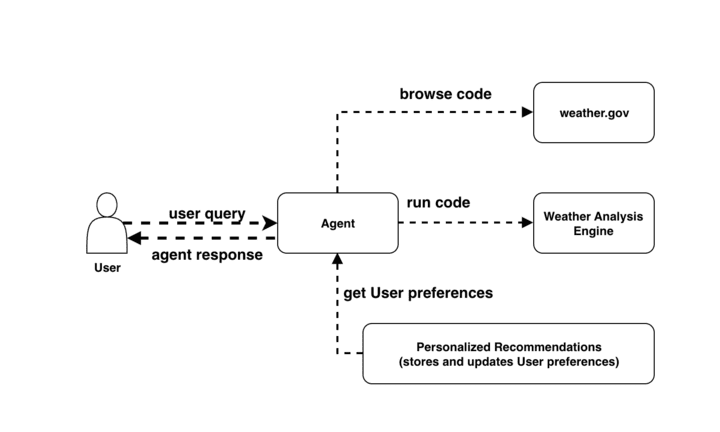

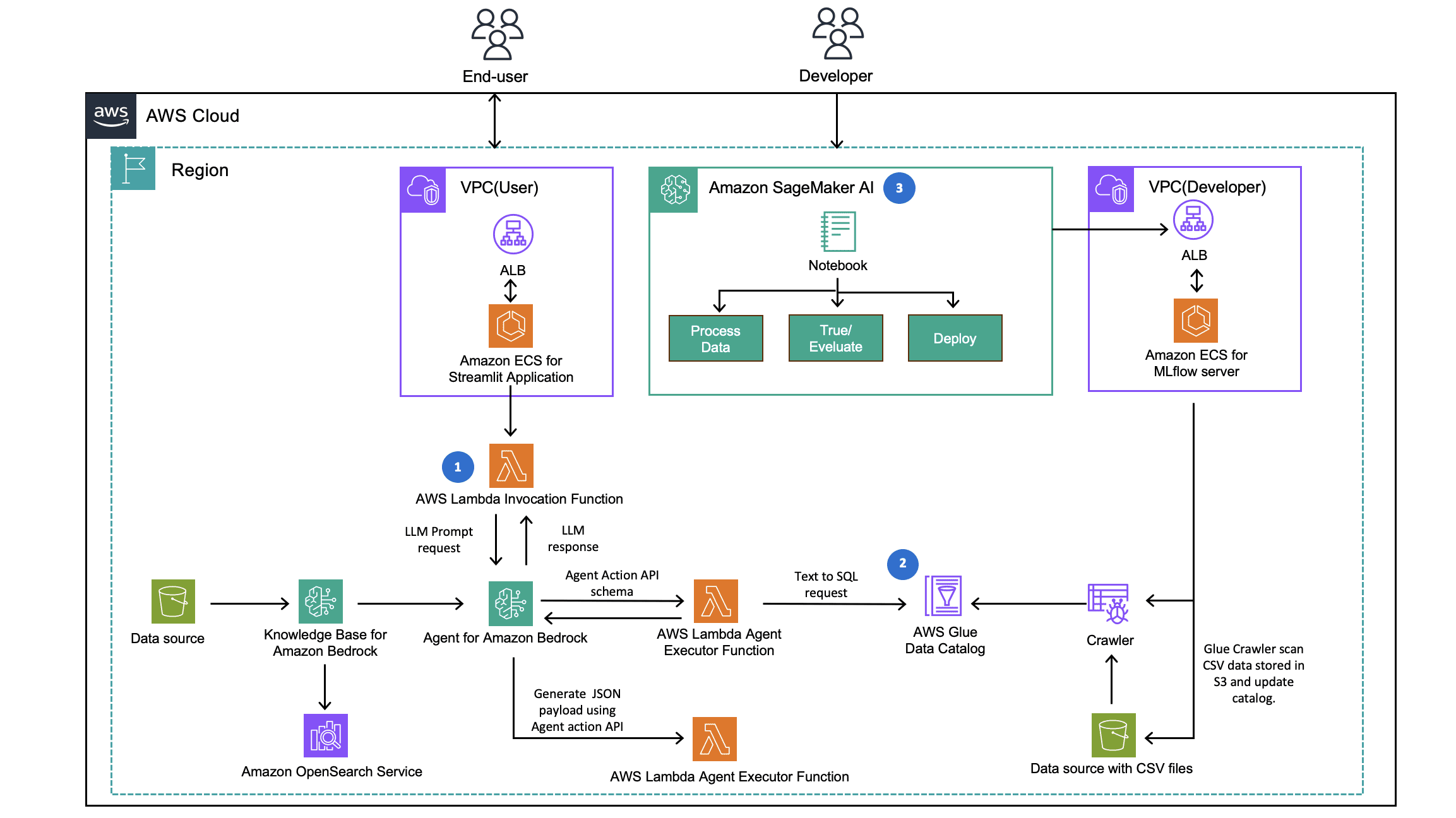

Agentic AI has become essential for deploying production-ready AI applications, yet many developers struggle with the complexity of manually configuring agent infrastructure across multiple environments. Infrastructure as code (IaC) facilitates the consistent, secure, and scalable infrastructure that autonomous AI systems require. It minimizes manual configuration errors through automated resource management and

Shared by AWS Machine Learning January 24, 2026

CLICKFORCE is one of the leaders in digital advertising services in Taiwan, specializing in data-driven advertising and conversion (D4A – Data for Advertising & Action). With a mission to deliver industry-leading, trend-aligned, and innovative marketing solutions, CLICKFORCE helps brands, agencies, and media partners make smarter advertising decisions. However, as the advertising

Shared by AWS Machine Learning January 23, 2026

PDI Technologies is a global leader in the convenience retail and petroleum wholesale industries. They help businesses around the globe increase efficiency and profitability by securely connecting their data and operations. With 40 years of experience, PDI Technologies assists customers in all aspects of their business, from understanding consumer

Shared by AWS Machine Learning January 23, 2026

Personal Intelligence lets you tap into your context from Gmail and Photos to get tailored responses in Search, just for you. Original source: blog.google/technology/ai/.