OSI announced a public forum to widen the conversations that will lead to version 1.0 of the Open Source AI Definition. The forum is part of our commitment to inclusiveness and transparency, matching the public town hall meetings that started two weeks ago. The public forum’s goal is to…

Shared by voicesofopensource January 26, 2024

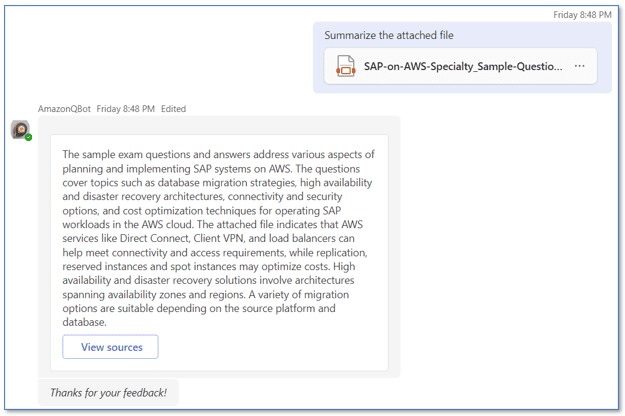

Amazon Q is a new generative AI-powered application that helps users get work done. Amazon Q can become your tailored business expert and let you discover content, brainstorm ideas, or create summaries using your company’s data safely and securely. You can use Amazon Q to have conversations, solve problems, …

Shared by AWS Machine Learning January 25, 2024

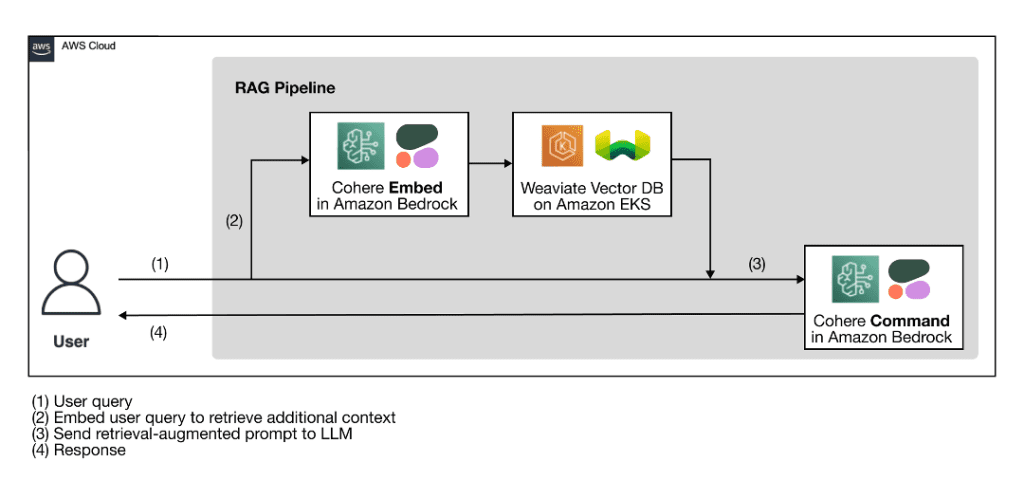

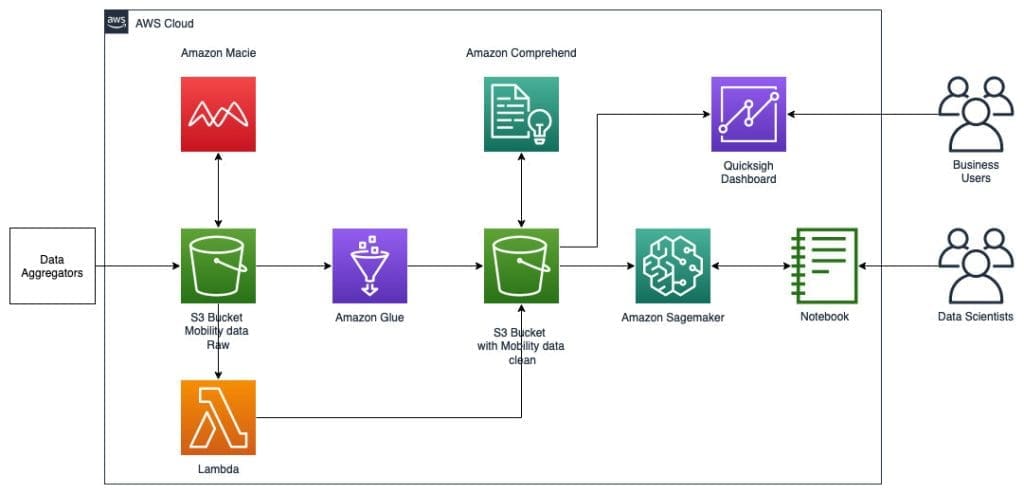

Generative AI solutions have the potential to transform businesses by boosting productivity and improving customer experiences, and using large language models (LLMs) with these solutions has become increasingly popular. Building proofs of concept is relatively straightforward because cutting-edge foundation models are available from specialized providers through a simple API…

Shared by AWS Machine Learning January 24, 2024

Posted by Ameya Velingker, Research Scientist, Google Research, and Balaji Venkatachalam, Software Engineer, Google

Graphs, in which objects and their relations are represented as nodes (or vertices) and edges (or links) between pairs of nodes, are ubiquitous in computing and machine learning (ML). For example, social networks, road networks, …

Shared by Google AI Technology January 23, 2024

Three experimental generative AI features are coming to Chrome on Macs and Windows PCs to make browsing easier and more personalized. View the original source at blog.google/technology/ai/.

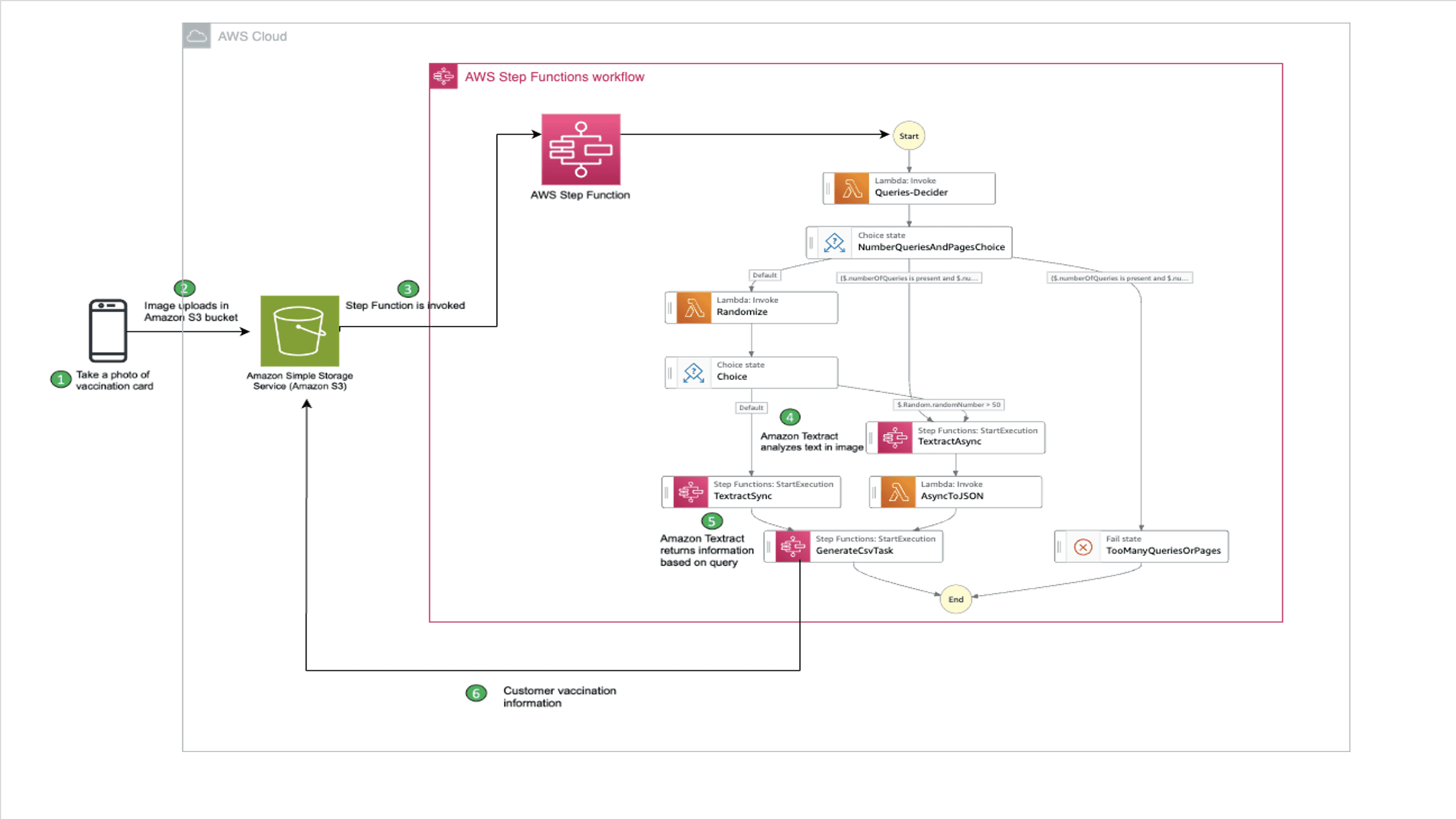

Amazon Textract is a machine learning (ML) service that enables automatic extraction of text, handwriting, and data from scanned documents, surpassing traditional optical character recognition (OCR). It can identify, understand, and extract data from tables and forms with remarkable accuracy. Presently, several companies rely on manual extraction methods or…

Shared by AWS Machine Learning January 22, 2024

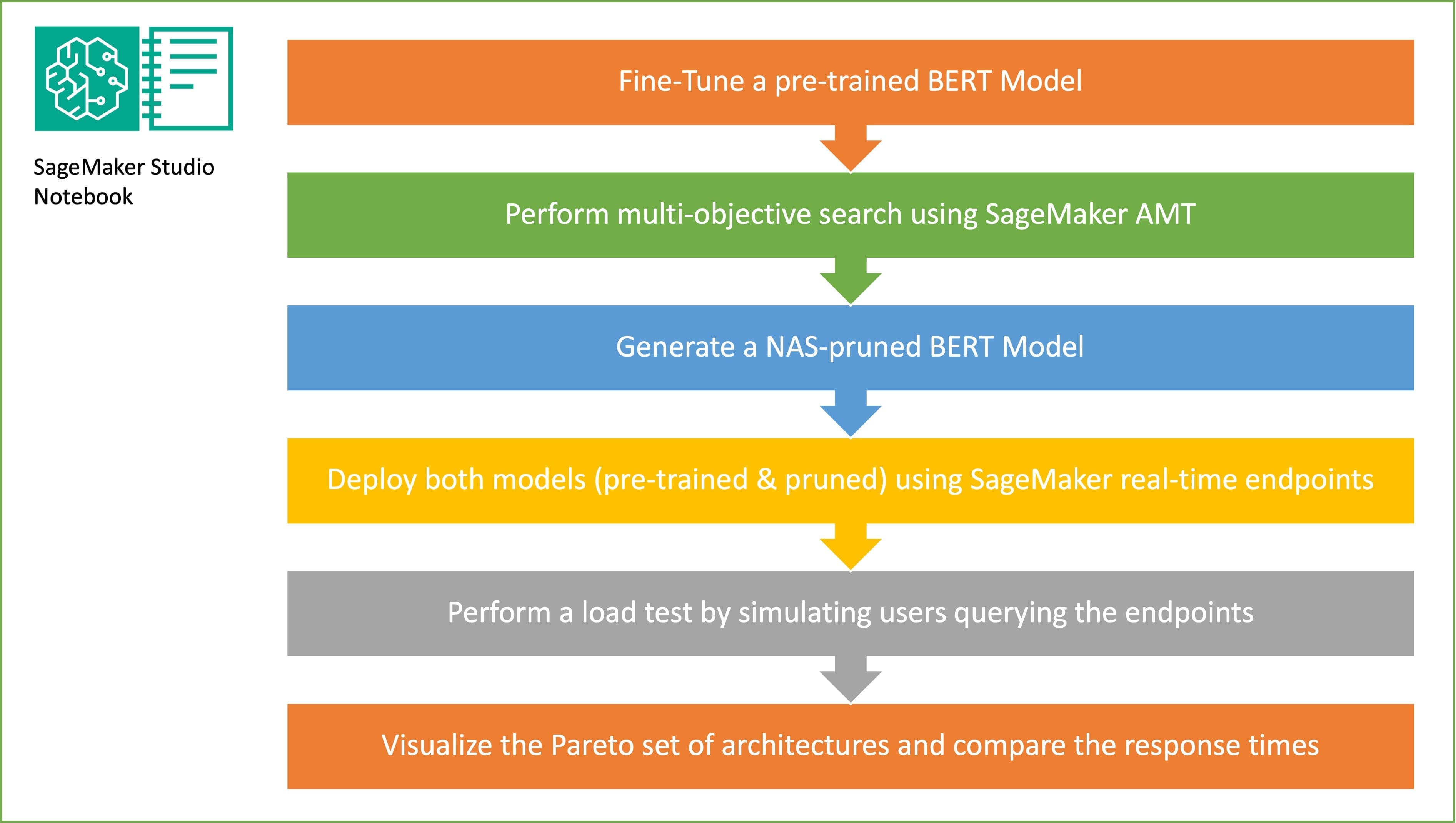

In this post, we demonstrate how to use neural architecture search (NAS) based structural pruning to compress a fine-tuned BERT model to improve model performance and reduce inference times. Pre-trained language models (PLMs) are undergoing rapid commercial and enterprise adoption in the areas of productivity tools, customer service, search…

Shared by AWS Machine Learning January 19, 2024

Geospatial data is data about specific locations on the earth’s surface. It can represent a geographical area as a whole or it can represent an event associated with a geographical area. Analysis of geospatial data is sought after in a few industries. It involves understanding where the data exists…

Shared by AWS Machine Learning January 18, 2024

Today, we’re excited to announce the availability of Llama 2 inference and fine-tuning support on AWS Trainium and AWS Inferentia instances in Amazon SageMaker JumpStart. Using AWS Trainium and Inferentia based instances, through SageMaker, can help users lower fine-tuning costs by up to 50%, and lower deployment costs by…

Shared by AWS Machine Learning January 18, 2024

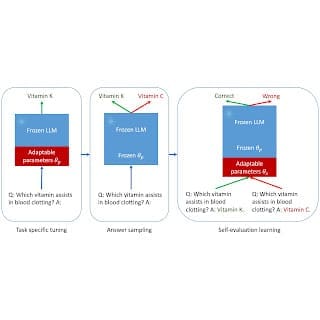

Posted by Jiefeng Chen, Student Researcher, and Jinsung Yoon, Research Scientist, Cloud AI Team

In the fast-evolving landscape of artificial intelligence, large language models (LLMs) have revolutionized the way we interact with machines, pushing the boundaries of natural language understanding and generation to unprecedented heights. Yet, the leap into…

Shared by Google AI Technology January 18, 2024