Amazon Bedrock AgentCore is an agentic platform for building, deploying, and operating effective agents securely at scale. Amazon Bedrock AgentCore Runtime is a fully managed service of Bedrock AgentCore, which provides low latency serverless environments to deploy agents and tools. It provides session isolation, supports multiple agent frameworks including

Shared by AWS Machine Learning November 4, 2025

In October, the OSI hosted the State of the Source Track at All Things Open, designed to connect developers with the big policy conversations shaping our ecosystem. Katie Steen-James and Nick Vidal participated in a fireside chat (Policy: AI / Data Governance) to discuss the latest AI and data

Shared by voicesofopensource November 4, 2025

Here are Google’s latest AI updates from October 2025. View Original Source (blog.google/technology/ai/)

In October, the OSI hosted the State of the Source Track at All Things Open, designed to connect developers with the big policy conversations shaping our ecosystem. Katie Steen-James, Jeremy Stanley, Barry Peddycord III, and Bob Callaway led the panel Policy: Cybersecurity, with updates on SBOMs, the Cyber Resilience

Shared by voicesofopensource November 4, 2025

In high-volume healthcare contact centers, every patient conversation carries both clinical and operational significance, making accurate real-time transcription necessary for automated workflows. Accurate, instant transcription enables intelligent automation without sacrificing clarity or care, so that teams can automate electronic medical record (EMR) record matching, streamline workflows, and eliminate manual

Shared by AWS Machine Learning November 3, 2025

In October, the OSI hosted the State of the Source Track at All Things Open, designed to connect developers with the big policy conversations shaping our ecosystem. Ruth Suehle, Patrick Masson, Amir Montazery, and Duane O’Brien organized the panel Beyond the Bottom Line: Sustaining the Open Source Ecosystem, exploring

Shared by voicesofopensource November 3, 2025

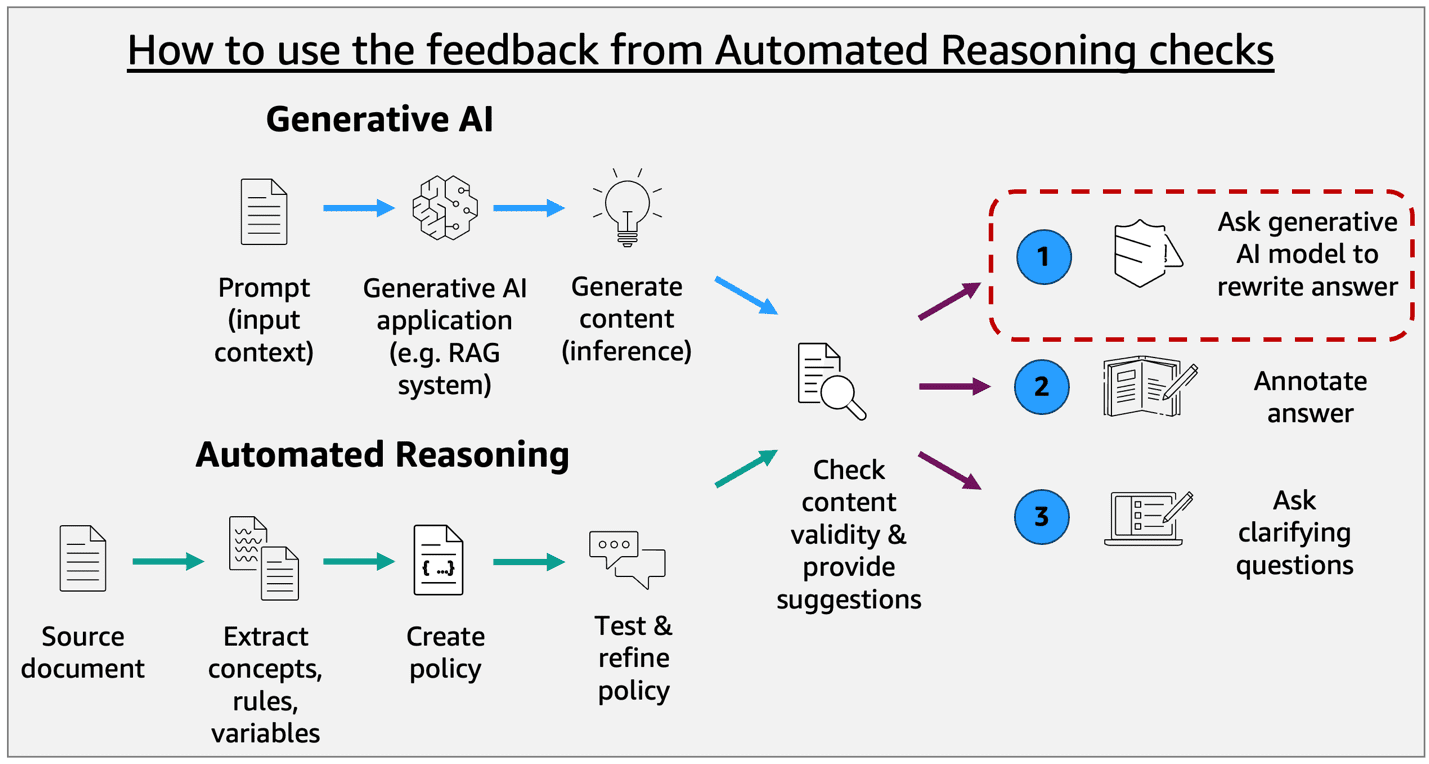

Enterprises in regulated industries often need mathematical certainty that every AI response complies with established policies and domain knowledge. Regulated industries can’t use traditional quality assurance methods that test only a statistical sample of AI outputs and make probabilistic assertions about compliance. When we launched Automated Reasoning checks in

Shared by AWS Machine Learning November 1, 2025

AI agents need to browse the web on your behalf. When your agent visits a website to gather information, complete a form, or verify data, it encounters the same defenses designed to stop unwanted bots: CAPTCHAs, rate limits, and outright blocks. Today, we are excited to share that AWS

Shared by AWS Machine Learning October 31, 2025

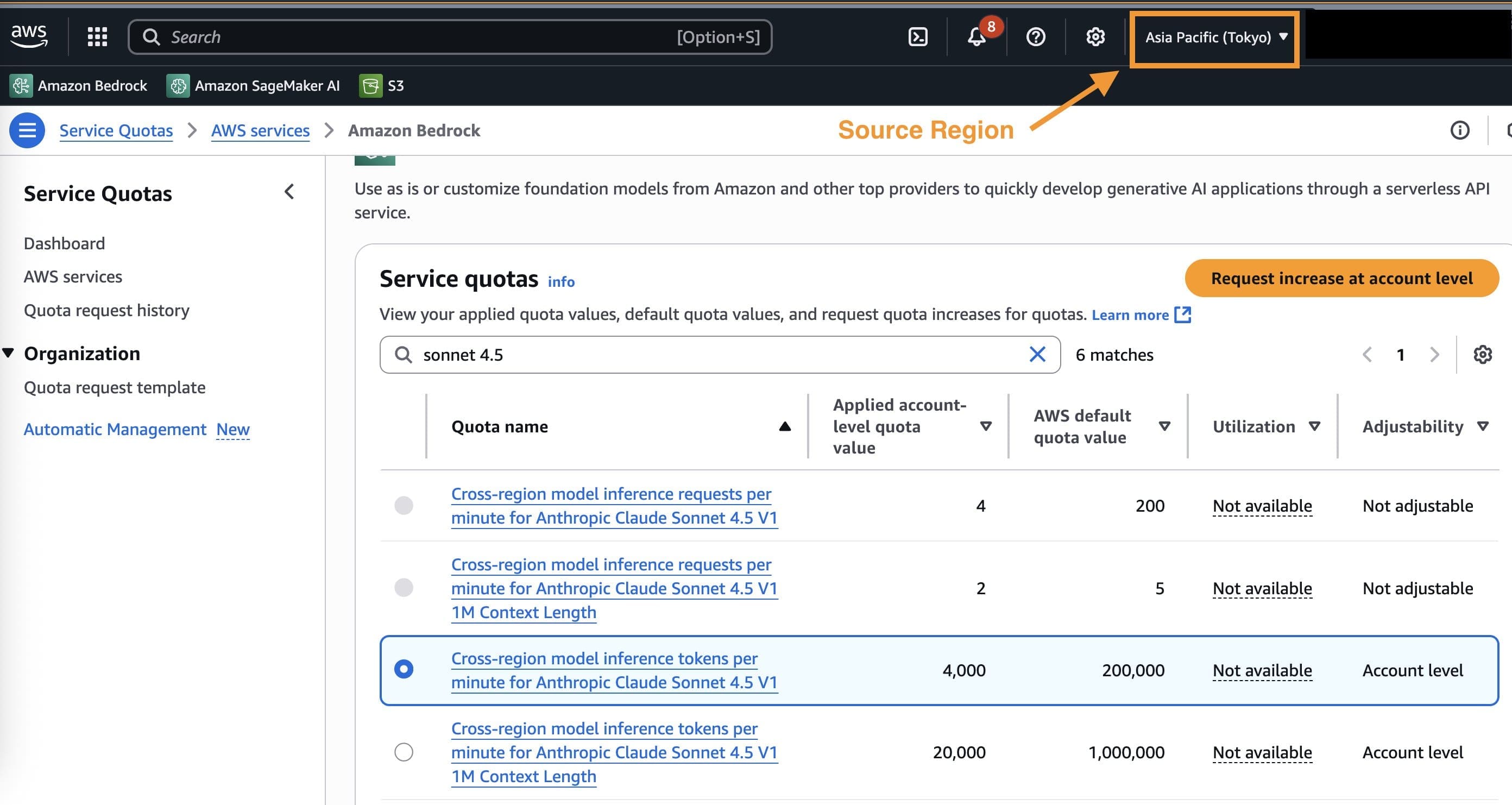

こんにちは, G’day. The recent launch of Anthropic’s Claude Sonnet 4.5 and Claude Haiku 4.5, now available on Amazon Bedrock, marks a significant leap forward in generative AI models. These state-of-the-art models excel at complex agentic tasks, coding, and enterprise workloads, offering enhanced capabilities to developers. Along with the new

Shared by AWS Machine Learning October 31, 2025

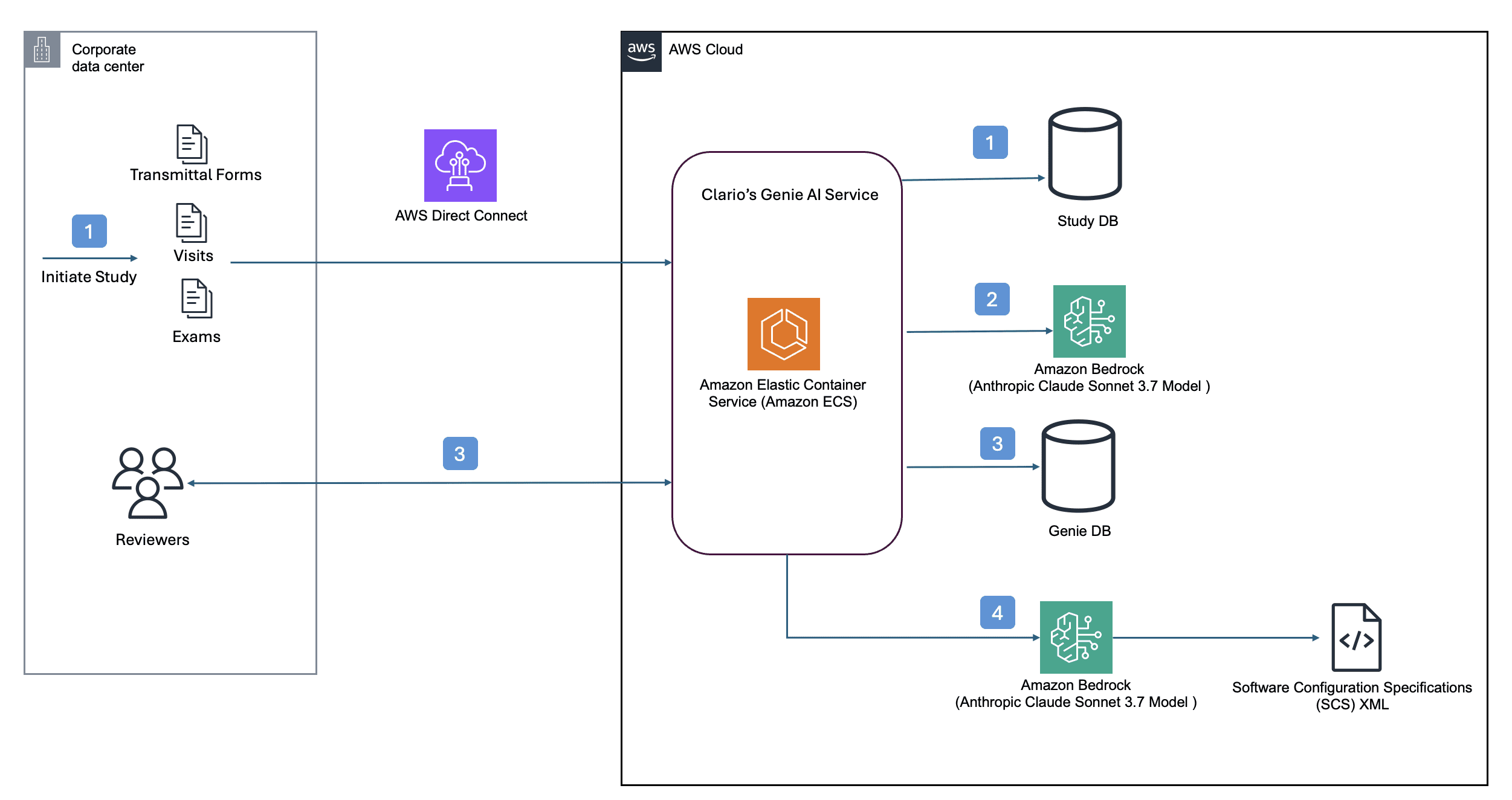

This post was co-written with Kim Nguyen and Shyam Banuprakash from Clario. Clario is a leading provider of endpoint data solutions for systematic collection, management, and analysis of specific, predefined outcomes (endpoints) to evaluate a treatment’s safety and effectiveness in the clinical trials industry, generating high-quality clinical evidence for

Shared by AWS Machine Learning October 31, 2025
