As businesses and developers increasingly seek to optimize their language models for specific tasks, the decision between model customization and Retrieval Augmented Generation (RAG) becomes critical. In this post, we seek to address this growing need by offering clear, actionable guidelines and best practices on when to use each

Shared by AWS Machine Learning April 11, 2025

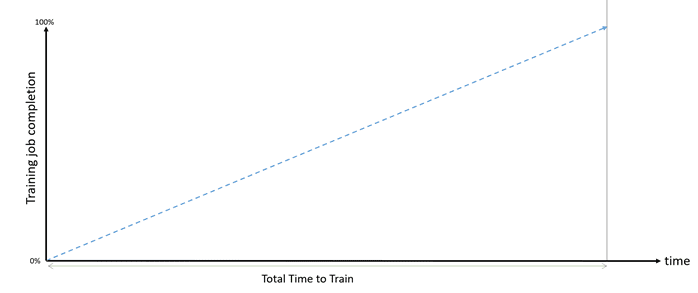

Training a frontier model is highly compute-intensive, requiring a distributed system of hundreds, or thousands, of accelerated instances running for several weeks or months to complete a single job. For example, pre-training the Llama 3 70B model with 15 trillion training tokens took 6.5 million H100 GPU hours. On

Shared by AWS Machine Learning April 11, 2025

We’re teaming up with Range Media Partners to announce AI on Screen, a new short film program. Original source: blog.google/technology/ai/

Each year, the State of Open Source Report offers a valuable pulse check on the global Open Source ecosystem, and the 2025 edition is no exception. Produced by Perforce OpenLogic, in partnership with the Eclipse Foundation and the Open Source Initiative, this report uncovers the latest trends, tensions, and transformations

Shared by voicesofopensource April 10, 2025

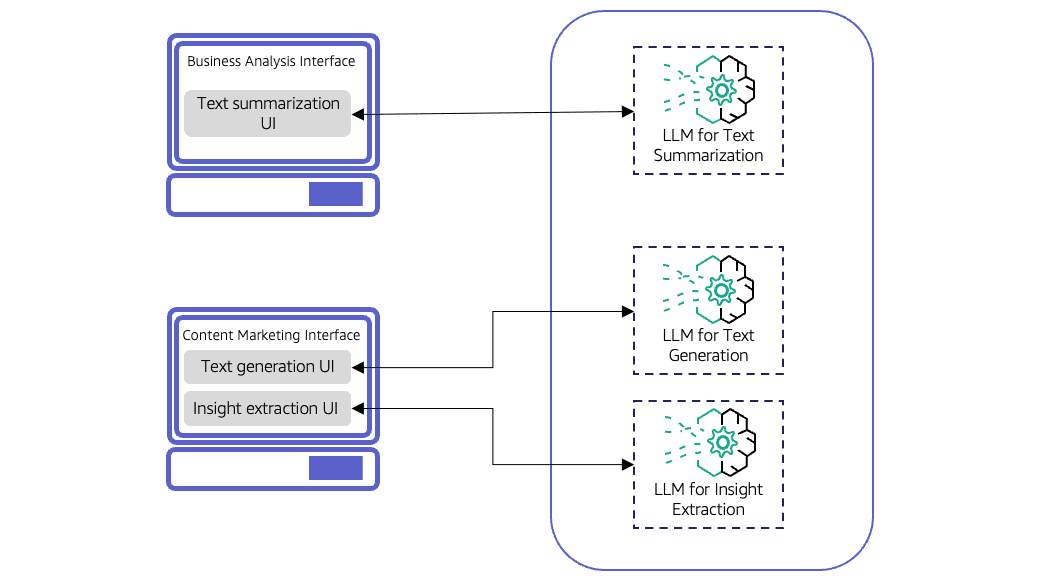

Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Although an individual LLM can be highly capable, it might not optimally address a wide range of use cases or meet diverse performance requirements. The multi-LLM approach enables organizations to effectively choose the right model

Shared by AWS Machine Learning April 10, 2025

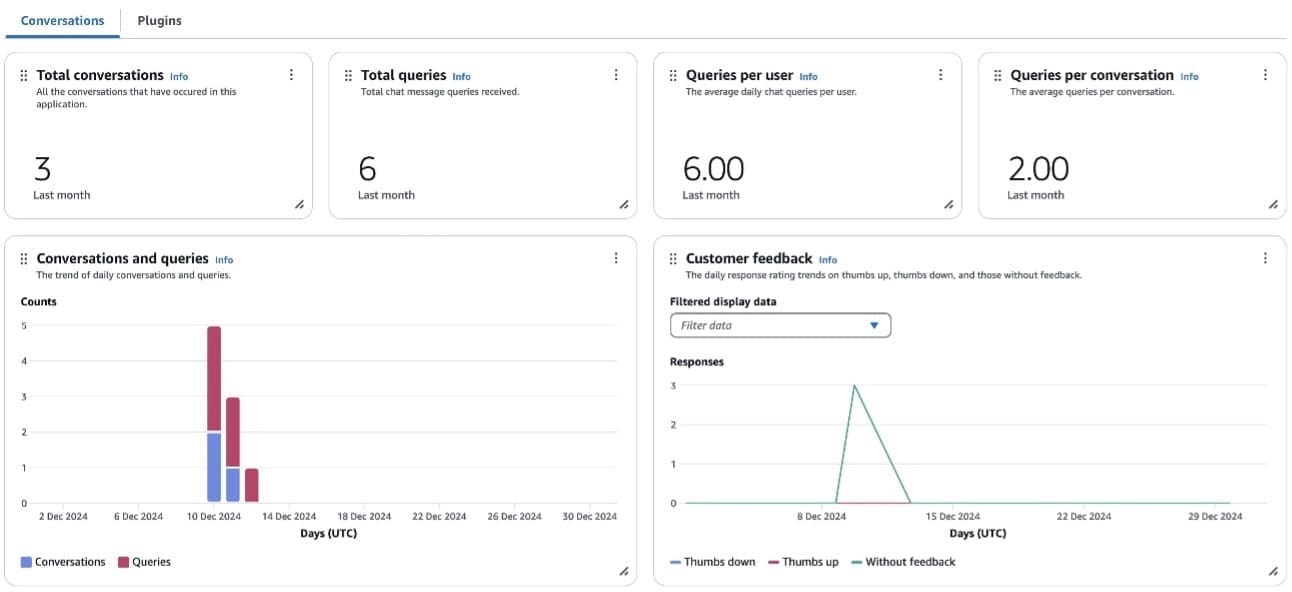

Employee productivity is a critical factor in maintaining a competitive advantage. Amazon Q Business offers a unique opportunity to enhance workforce efficiency by providing AI-powered assistance that can significantly reduce the time spent searching for information, generating content, and completing routine tasks. Amazon Q Business is a fully managed,

Shared by AWS Machine Learning April 10, 2025

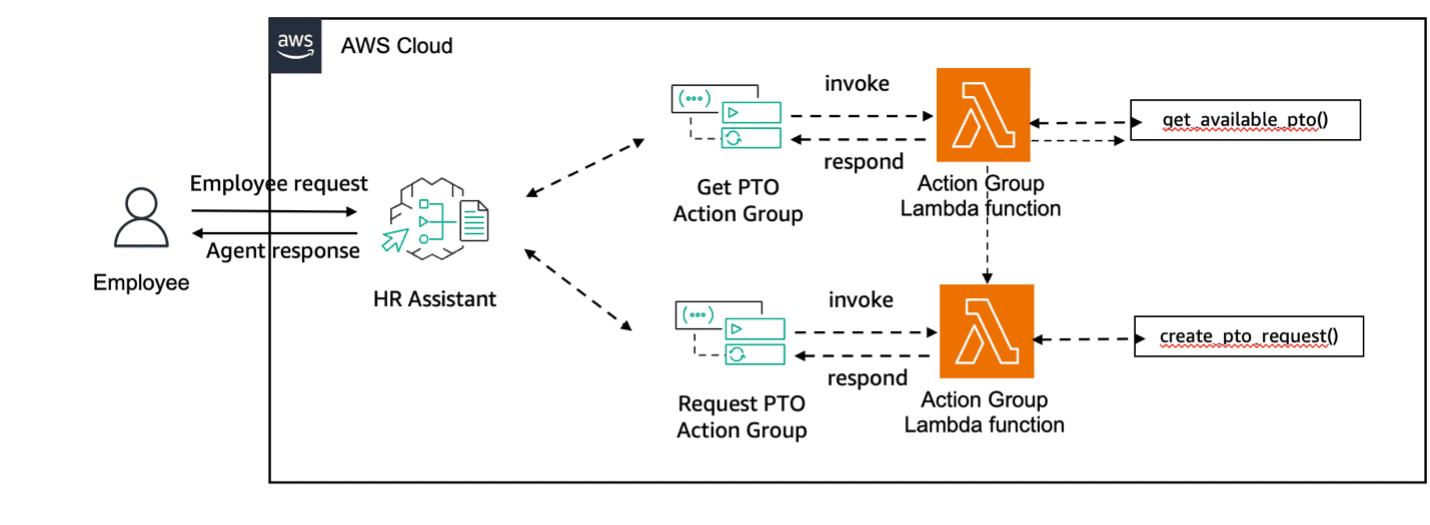

Agents are revolutionizing how businesses automate complex workflows and decision-making processes. Amazon Bedrock Agents helps you accelerate generative AI application development by orchestrating multi-step tasks. Agents use the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. In addition, they use the developer-provided instruction

Shared by AWS Machine Learning April 10, 2025
