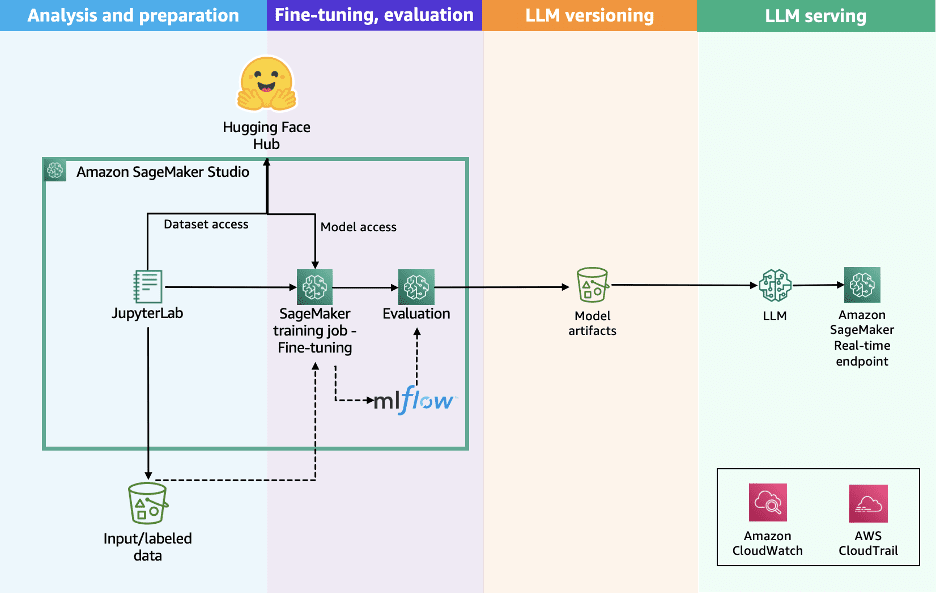

Scale LLM fine-tuning with Hugging Face and Amazon SageMaker AI

Enterprises are increasingly shifting from relying solely on large, general-purpose language models to developing specialized large language models (LLMs) fine-tuned on their own proprietary data. Although foundation models (FMs) offer impressive general capabilities, they often fall short in complex enterprise environments, where accuracy, security, compliance, and …

Shared by AWS Machine Learning February 10, 2026