The Qwen 2.5 multilingual large language models (LLMs) are a collection of pre-trained and instruction-tuned generative models in 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B sizes (text in/text out and code out). The Qwen 2.5 fine-tuned text-only models are optimized for multilingual dialogue use cases and outperform both

Shared by AWS Machine Learning March 13, 2025

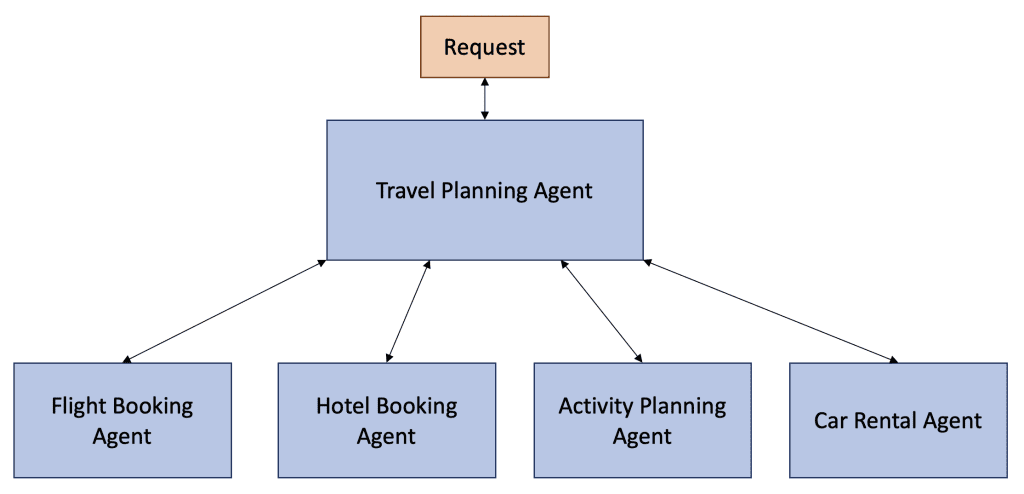

The integration of generative AI agents into business processes is poised to accelerate as organizations recognize the untapped potential of these technologies. Advancements in multimodal artificial intelligence (AI), where agents can understand and generate not just text but also images, audio, and video, will further broaden their applications. This

Shared by AWS Machine Learning March 13, 2025

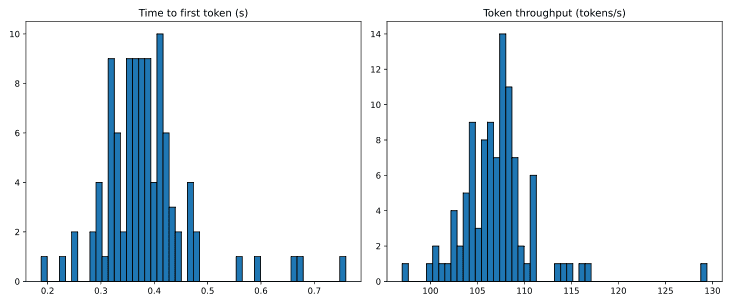

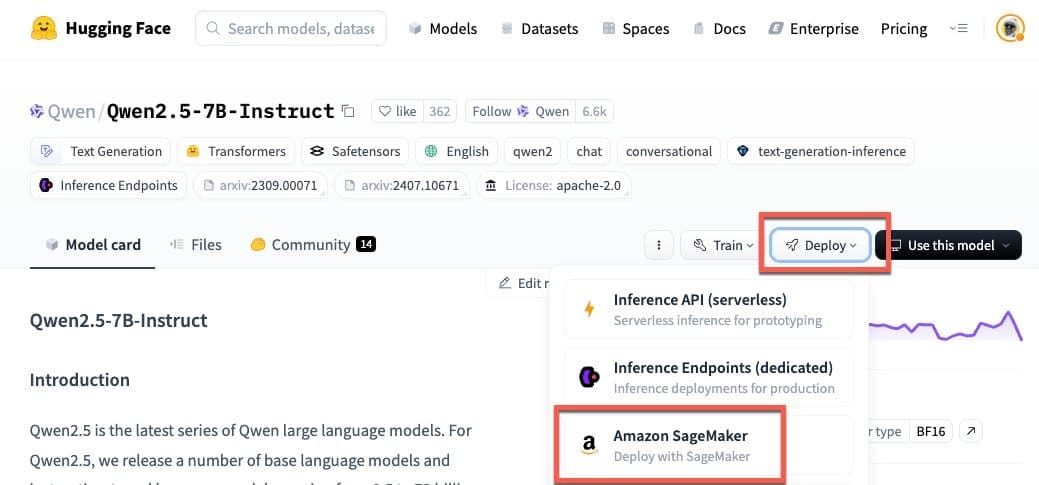

Open foundation models (FMs) allow organizations to build customized AI applications by fine-tuning for their specific domains or tasks, while retaining control over costs and deployments. However, deployment can be a significant portion of the effort, often requiring 30% of project time because engineers must carefully optimize instance types

Shared by AWS Machine Learning March 13, 2025

This post was co-written with Vishal Singh, Data Engineering Leader at the Data & Analytics team of GoDaddy. Generative AI solutions have the potential to transform businesses by boosting productivity and improving customer experiences, and using large language models (LLMs) in these solutions has become increasingly popular. However, inference of

Shared by AWS Machine Learning March 13, 2025

Google shares policy recommendations in response to OSTP’s request for information for the U.S. AI Action Plan. Original source: blog.google/technology/ai/

Compelling AI-generated images start with well-crafted prompts. In this follow-up to our Amazon Nova Canvas Prompt Engineering Guide, we showcase a curated gallery of visuals generated by Nova Canvas, categorized by real-world use cases, from marketing and product visualization to concept art and design exploration. Each image is paired with the

Shared by AWS Machine Learning March 12, 2025

Every year, public authorities in the European Union spend around 14% of annual GDP on services, works and goods. As European governments continue to digitalize, a growing share of this spending is on digital solutions. Sadly, few of these solutions are built on Open Source software, but that might

Shared by voicesofopensource March 12, 2025

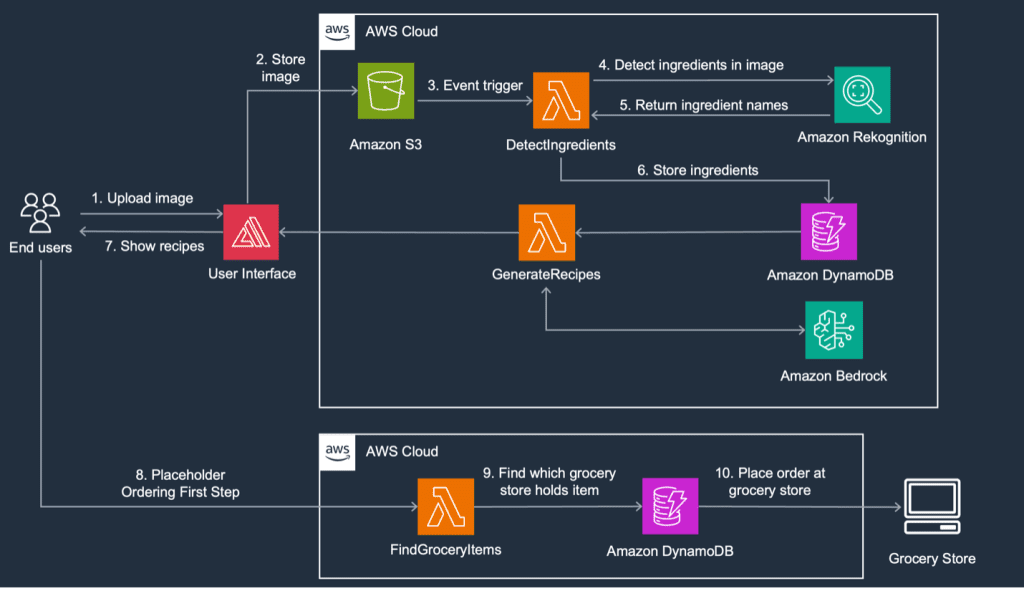

In today’s fast-paced world, time is of the essence and even basic tasks like grocery shopping can feel rushed and challenging. Despite our best intentions to plan meals and shop accordingly, we often end up ordering takeout, leaving unused perishable items to spoil in the refrigerator. This seemingly small

Shared by AWS Machine Learning March 11, 2025

DeepSeek-R1 is a large language model (LLM) developed by DeepSeek AI that uses reinforcement learning to enhance reasoning capabilities through a multi-stage training process from a DeepSeek-V3-Base foundation. A key distinguishing feature is its reinforcement learning step, which was used to refine the model’s responses beyond the standard pre-training

Shared by AWS Machine Learning March 11, 2025

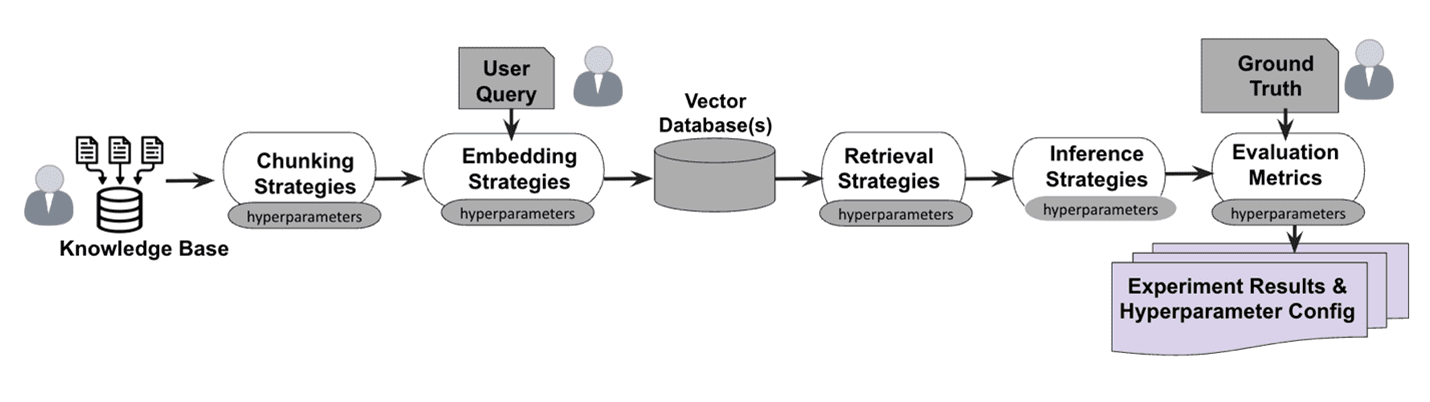

Based on the original post by Dr. Hemant Joshi, CTO, FloTorch.ai. A recent evaluation conducted by FloTorch compared the performance of Amazon Nova models with OpenAI’s GPT-4o. Amazon Nova is a new generation of state-of-the-art foundation models (FMs) that deliver frontier intelligence and industry-leading price-performance. The Amazon Nova family of

Shared by AWS Machine Learning March 11, 2025

Shared by AWS Machine Learning March 13, 2025