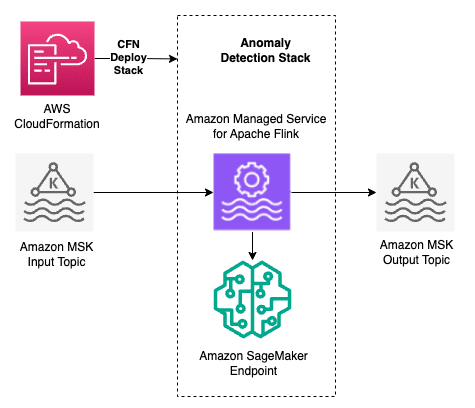

Anomaly detection in streaming time series data with online learning using Amazon Managed Service for Apache Flink

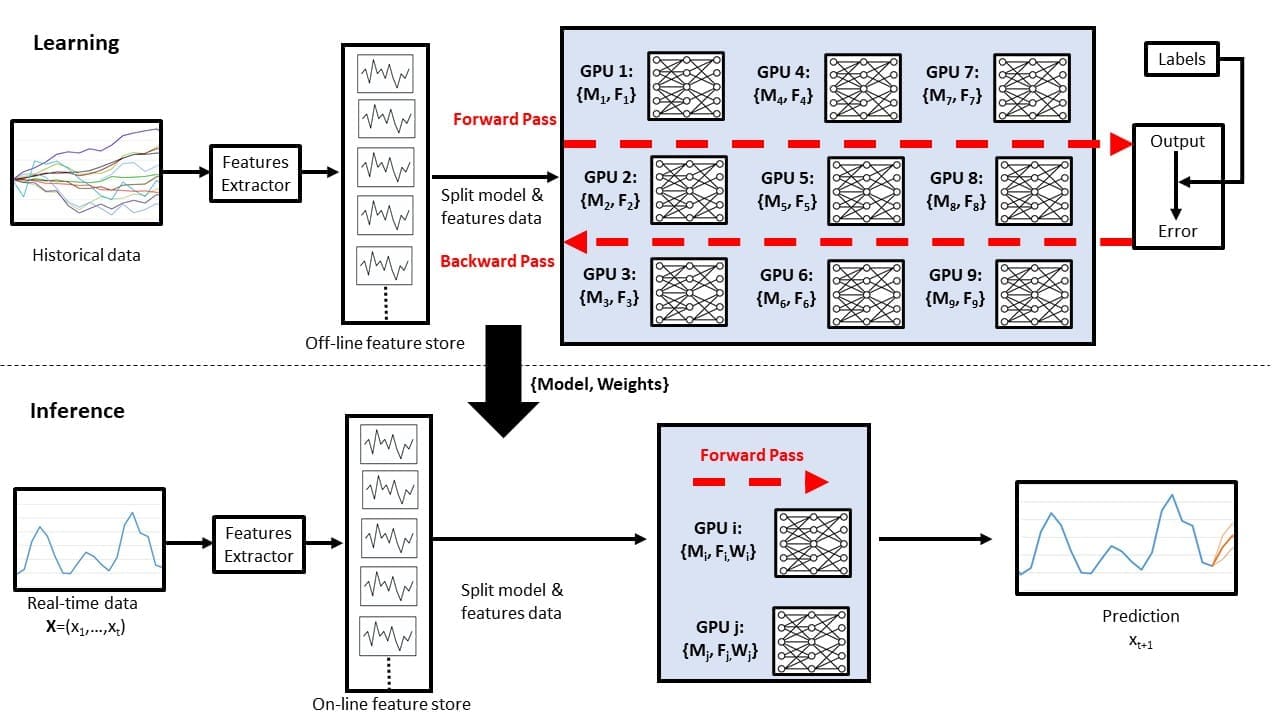

Time series data is a distinct category that incorporates time as a fundamental element in its structure. In a time series, data points are collected sequentially, often at regular intervals, and they typically exhibit certain patterns, such as trends, seasonal variations, or cyclical behaviors. Common examples of time series…

Shared by AWS Machine Learning September 12, 2024

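
Because time series usually carry trends and seasonal variations, a fixed threshold on raw values tends to misfire: a routine seasonal peak or a steadily rising trend can look "large" without being anomalous. The following is a minimal Python sketch of one simple online detector. It is not the approach used in the post, and it does not use Flink. It assumes a known seasonal period and removes seasonality with seasonal differencing (x_t - x_{t-period}), which also turns a linear trend into a constant. It then scores each differenced value against a running mean and variance updated incrementally with Welford's algorithm, so each point is processed once and memory stays bounded by the period. The class name `OnlineSeasonalZScore`, the threshold, the warm-up length, and the synthetic series are all hypothetical choices made for illustration.

```python
import math
import random
from collections import deque


class OnlineSeasonalZScore:
    """Hypothetical streaming detector: seasonal differencing + running z-score.

    A simple stand-in to illustrate online learning, not the post's method.
    """

    def __init__(self, period: int, threshold: float = 4.0, warmup: int = 20):
        self.period = period          # assumed known seasonal period, in samples
        self.threshold = threshold    # z-score above which a point is flagged
        self.warmup = warmup          # differenced values to see before flagging (>= 2)
        self.history = deque(maxlen=period)  # only the last `period` raw values
        self.n = 0        # number of differenced values seen so far
        self.mean = 0.0   # running mean of differenced values
        self.m2 = 0.0     # running sum of squared deviations (Welford)

    def update(self, x: float) -> bool:
        """Consume one point and return True if it looks anomalous."""
        if len(self.history) < self.period:
            self.history.append(x)
            return False  # not enough history to difference yet
        d = x - self.history[0]   # x_t - x_{t-period}: removes the seasonal component
        self.history.append(x)    # deque drops x_{t-period} automatically
        flagged = False
        if self.n >= self.warmup:
            # Score against statistics learned from earlier points only
            std = math.sqrt(self.m2 / (self.n - 1))
            flagged = std > 0 and abs(d - self.mean) / std > self.threshold
        # Welford's incremental update of the running mean and variance
        self.n += 1
        delta = d - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (d - self.mean)
        return flagged


# Synthetic series: linear trend + daily-style seasonality + Gaussian noise
random.seed(0)
period = 24
series = [
    0.05 * t + 2.0 * math.sin(2 * math.pi * t / period) + random.gauss(0, 0.1)
    for t in range(300)
]
series[200] += 5.0  # injected spike

detector = OnlineSeasonalZScore(period=period)
flagged = [t for t, x in enumerate(series) if detector.update(x)]
print(flagged)
```

Note that with seasonal differencing a single spike at index t can also be flagged again at t + period, when the spike becomes the value being subtracted; a production detector would need to handle that, for example by replacing flagged values before storing them in the history.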