This post is co-written with Isaac Smothers and James Healy-Mirkovich from Crexi. With the current demand for AI and machine learning (AI/ML) solutions, the processes for training and deploying models and scaling inference are crucial to business success. Even though AI/ML, and especially generative AI, is progressing rapidly, machine…

Shared by AWS Machine Learning November 27, 2024

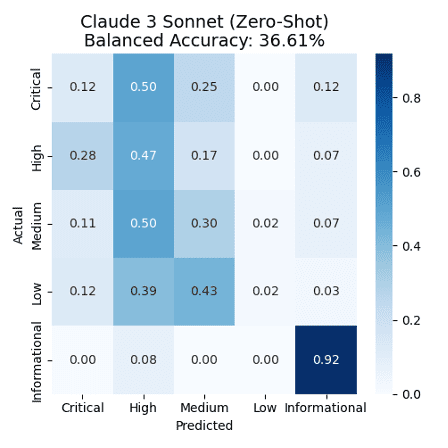

Large language models (LLMs) have come a long way from being able to read only text to now being able to read and understand graphs, diagrams, tables, and images. In this post, we discuss how to use LLMs from Amazon Bedrock to not only extract text, but also understand…

Shared by AWS Machine Learning November 27, 2024

This post is co-written with Ken Kao and Hasan Ali Demirci from Rad AI. Rad AI has reshaped radiology reporting, developing solutions that streamline the most tedious and repetitive tasks and save radiologists' time. Since 2018, using state-of-the-art proprietary and open-source large language models (LLMs), our flagship product, Rad…

Shared by AWS Machine Learning November 27, 2024

In the field of generative AI, latency and cost pose significant challenges. Commonly used large language models (LLMs) generate text sequentially, predicting one token at a time in an autoregressive manner. This approach can introduce delays, resulting in less-than-ideal user experiences. Additionally, the growing demand for AI-powered…

Shared by AWS Machine Learning November 27, 2024

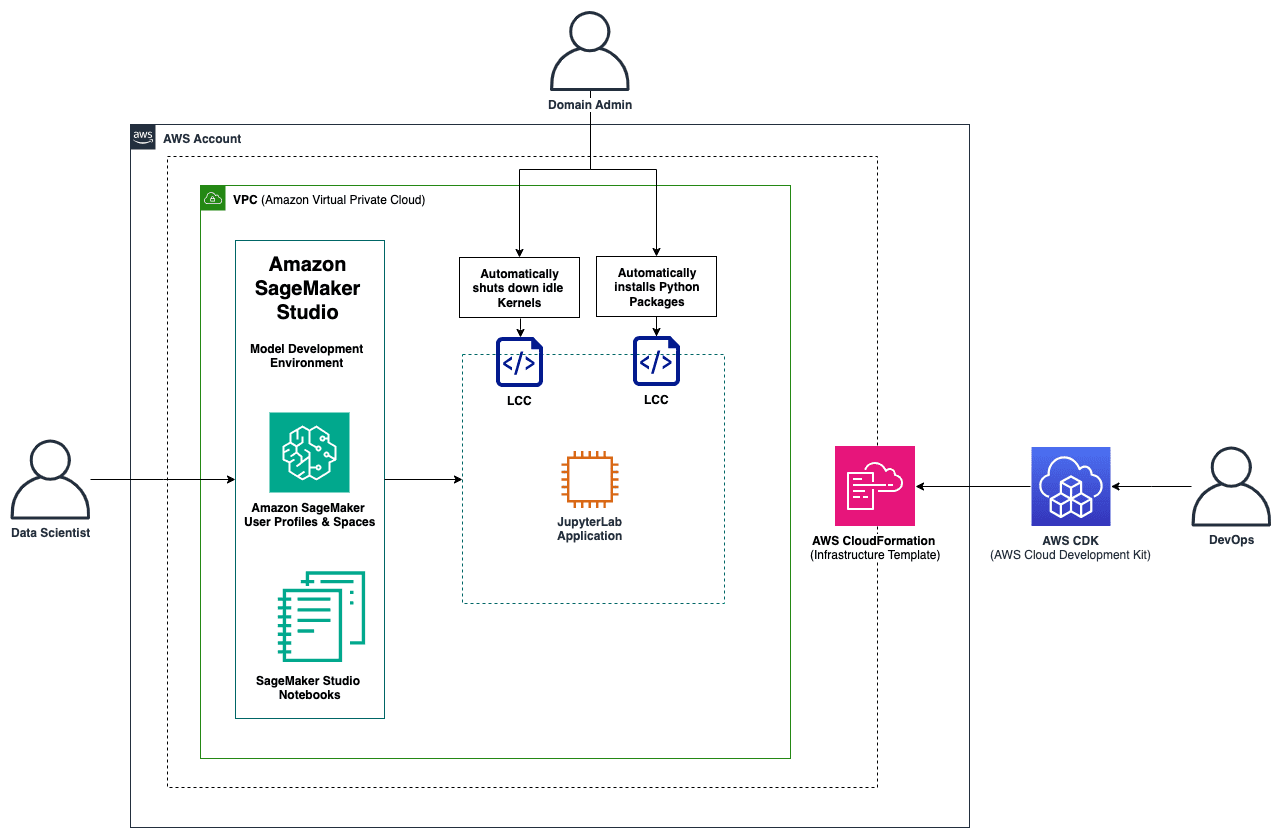

This post is a step-by-step guide to setting up lifecycle configurations for your Amazon SageMaker Studio domains. With lifecycle configurations, system administrators can apply automated controls to their SageMaker Studio domains and their users. We cover core concepts of SageMaker Studio and provide code examples of…

Shared by AWS Machine Learning November 27, 2024

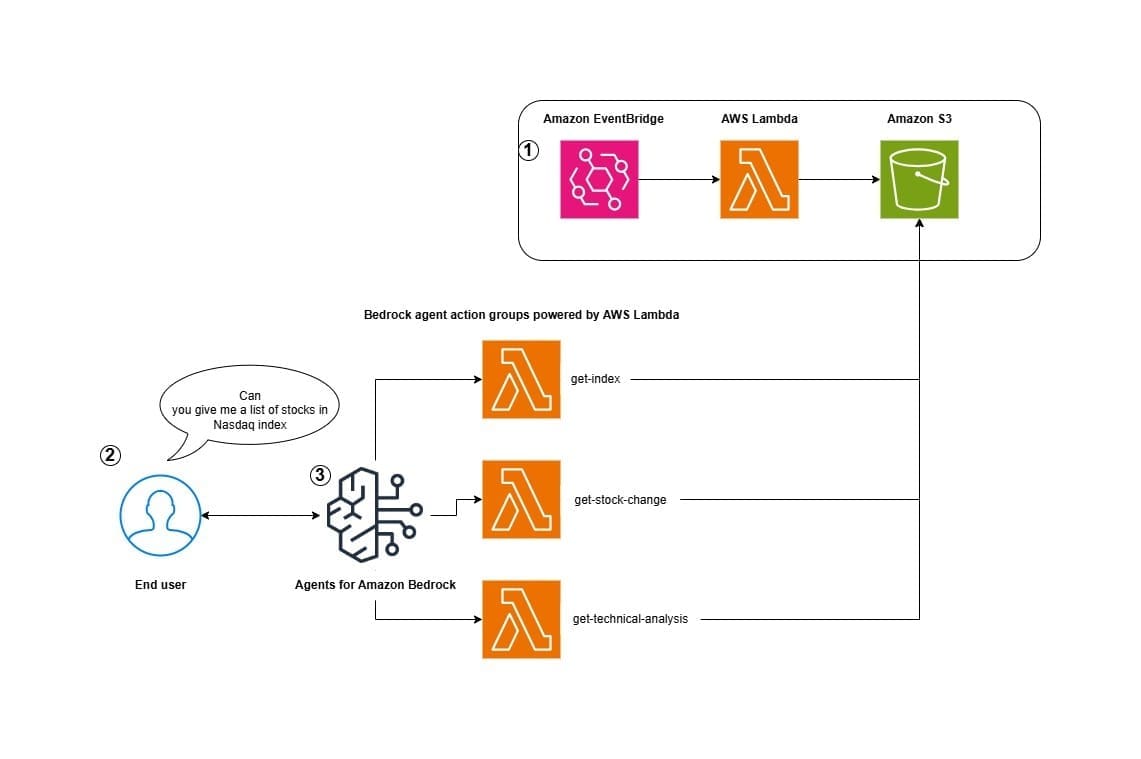

Stock technical analysis questions can be as unique as the individual stock analysts themselves. Queries often involve multiple technical indicators, such as Simple Moving Average (SMA), Exponential Moving Average (EMA), and Relative Strength Index (RSI). Answering these varied questions would mean writing complex business logic to unpack the query…

Shared by AWS Machine Learning November 27, 2024

This post is co-written with Curtis Maher and Anjali Thatte from Datadog. It walks you through Datadog's new integration with AWS Neuron, which helps you monitor your AWS Trainium and AWS Inferentia instances by providing deep observability into resource utilization, model execution performance, latency, and real-time infrastructure health…

Shared by AWS Machine Learning November 27, 2024

This post is co-written with Adarsh Kyadige and Salma Taoufiq from Sophos. As a leader in cutting-edge cybersecurity, Sophos is dedicated to safeguarding over 500,000 organizations and millions of customers across more than 150 countries. By harnessing the power of threat intelligence, machine learning (ML), and artificial intelligence (AI)…

Shared by AWS Machine Learning November 27, 2024

The use of large language models (LLMs) and generative AI has exploded over the last year. With the release of powerful, publicly available foundation models, tools for training, fine-tuning, and hosting your own LLM have also become democratized. Using vLLM on AWS Trainium and Inferentia makes it possible…

Shared by AWS Machine Learning November 27, 2024

With the rise of large language models (LLMs) like Meta Llama 3.1, there is an increasing need for scalable, reliable, and cost-effective solutions to deploy and serve these models. AWS Trainium- and AWS Inferentia-based instances, combined with Amazon Elastic Kubernetes Service (Amazon EKS), provide a performant and low…

Shared by AWS Machine Learning November 27, 2024

Shared by AWS Machine Learning November 27, 2024