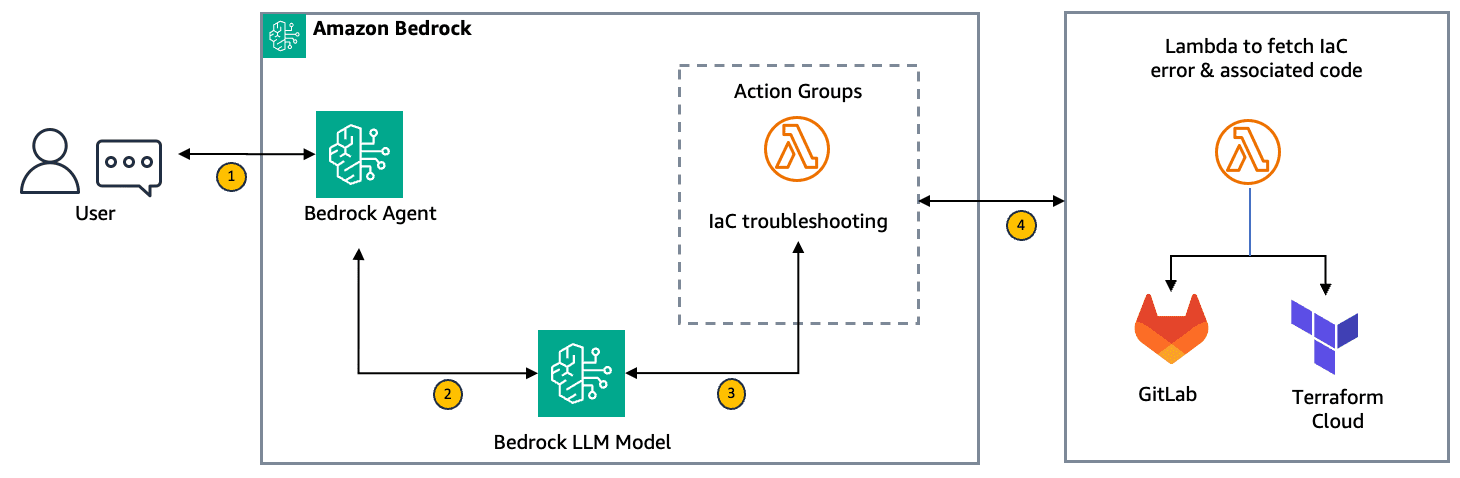

Level up your problem-solving and strategic thinking skills with Amazon Bedrock

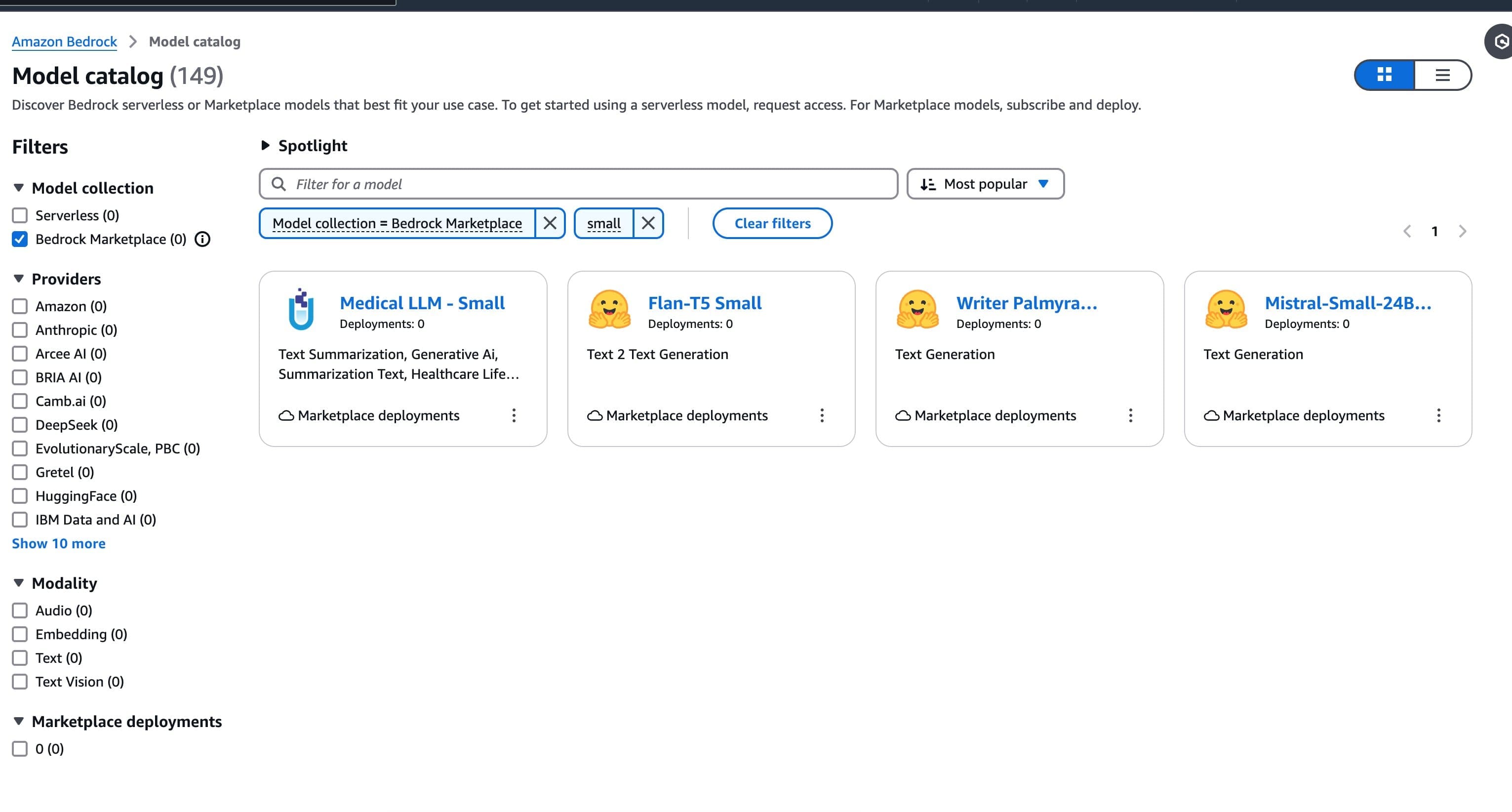

Organizations across many industries are harnessing the power of foundation models (FMs) and large language models (LLMs) to build generative AI applications to deliver new customer experiences, boost employee productivity, and drive innovation. Amazon Bedrock, a fully managed service that offers a choice of high-performing FMs from leading AI

Shared by AWS Machine Learning February 28, 2025
