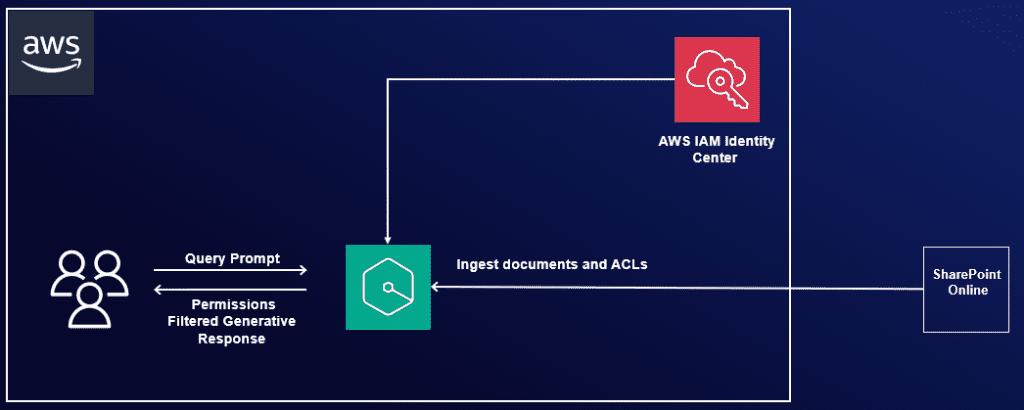

Connect SharePoint Online to Amazon Q Business using OAuth 2.0 ROPC flow authentication

Enterprises face significant challenges accessing and using the vast amounts of information scattered across their organizations’ various systems. What if you could simply ask a question and get instant, accurate answers from your company’s entire knowledge base, while accounting for each user’s data access levels? Amazon Q Business is …

Shared by AWS Machine Learning November 27, 2024