In today’s rapidly evolving landscape of artificial intelligence (AI), training large language models (LLMs) poses significant challenges. These models often require enormous computational resources and sophisticated infrastructure to handle the vast amounts of data and complex algorithms involved. Without a structured framework, the process can become prohibitively time-consuming, costly, …

Shared by AWS Machine Learning July 16, 2024

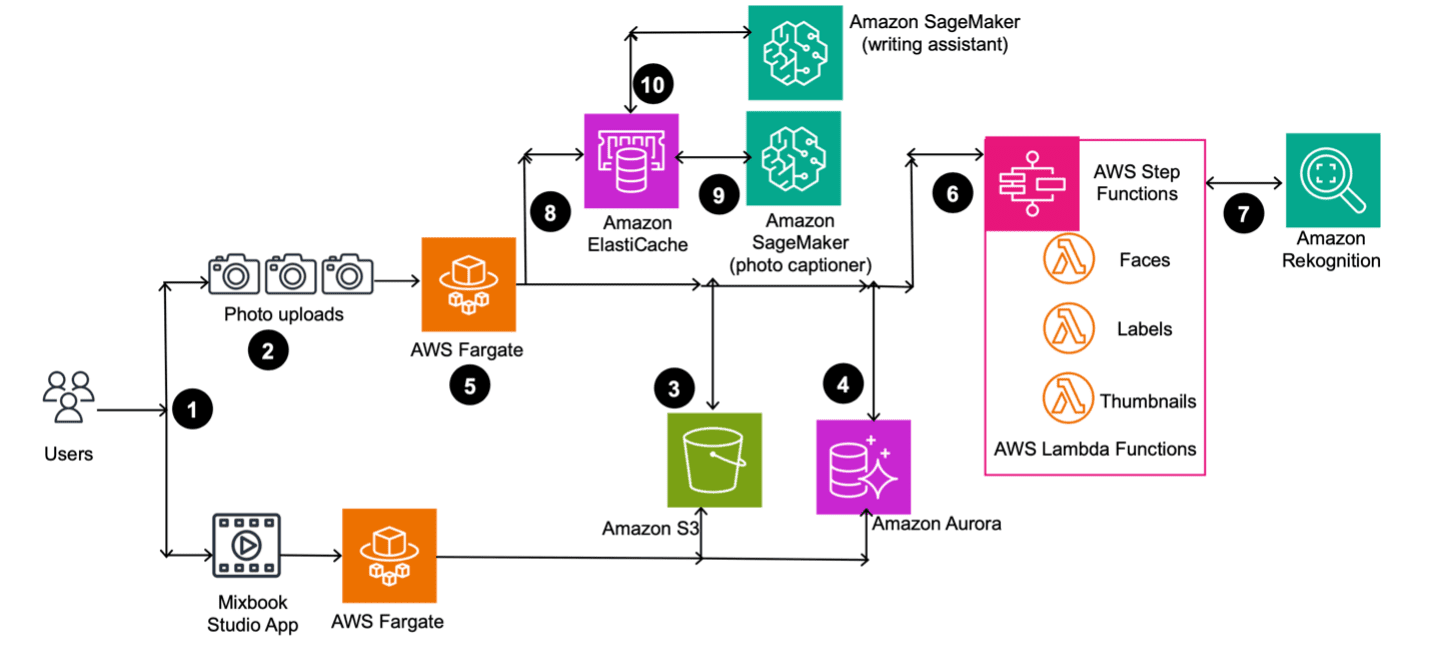

This post is co-written with Vlad Lebedev and DJ Charles from Mixbook. Mixbook is an award-winning design platform that gives users unrivaled creative freedom to design and share one-of-a-kind stories, transforming the lives of more than six million people. Today, Mixbook is the #1 rated photo book service in …

Shared by AWS Machine Learning July 15, 2024

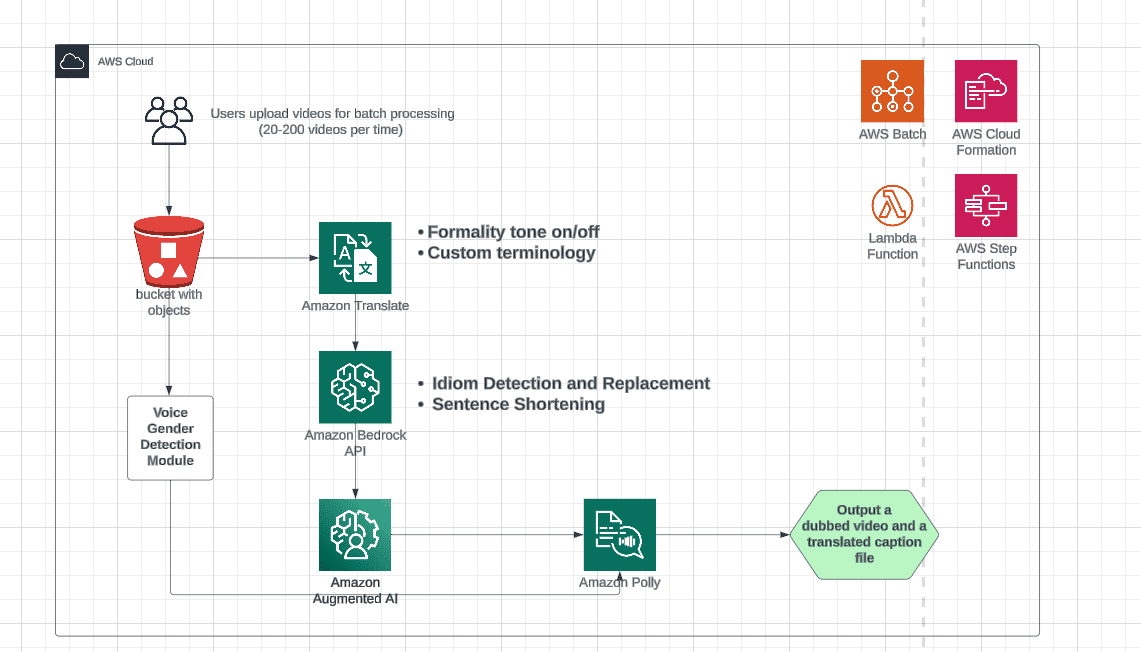

This post is co-written with MagellanTV and Mission Cloud. Video dubbing, or content localization, is the process of replacing the original spoken language in a video with another language while synchronizing audio and video. Video dubbing has emerged as a key tool in breaking down linguistic barriers, enhancing viewer …

Shared by AWS Machine Learning July 15, 2024

Knowledge Bases for Amazon Bedrock is a fully managed service that helps you implement the entire Retrieval Augmented Generation (RAG) workflow from ingestion to retrieval and prompt augmentation without having to build custom integrations to data sources and manage data flows, pushing the boundaries for what you can do …

Shared by AWS Machine Learning July 11, 2024

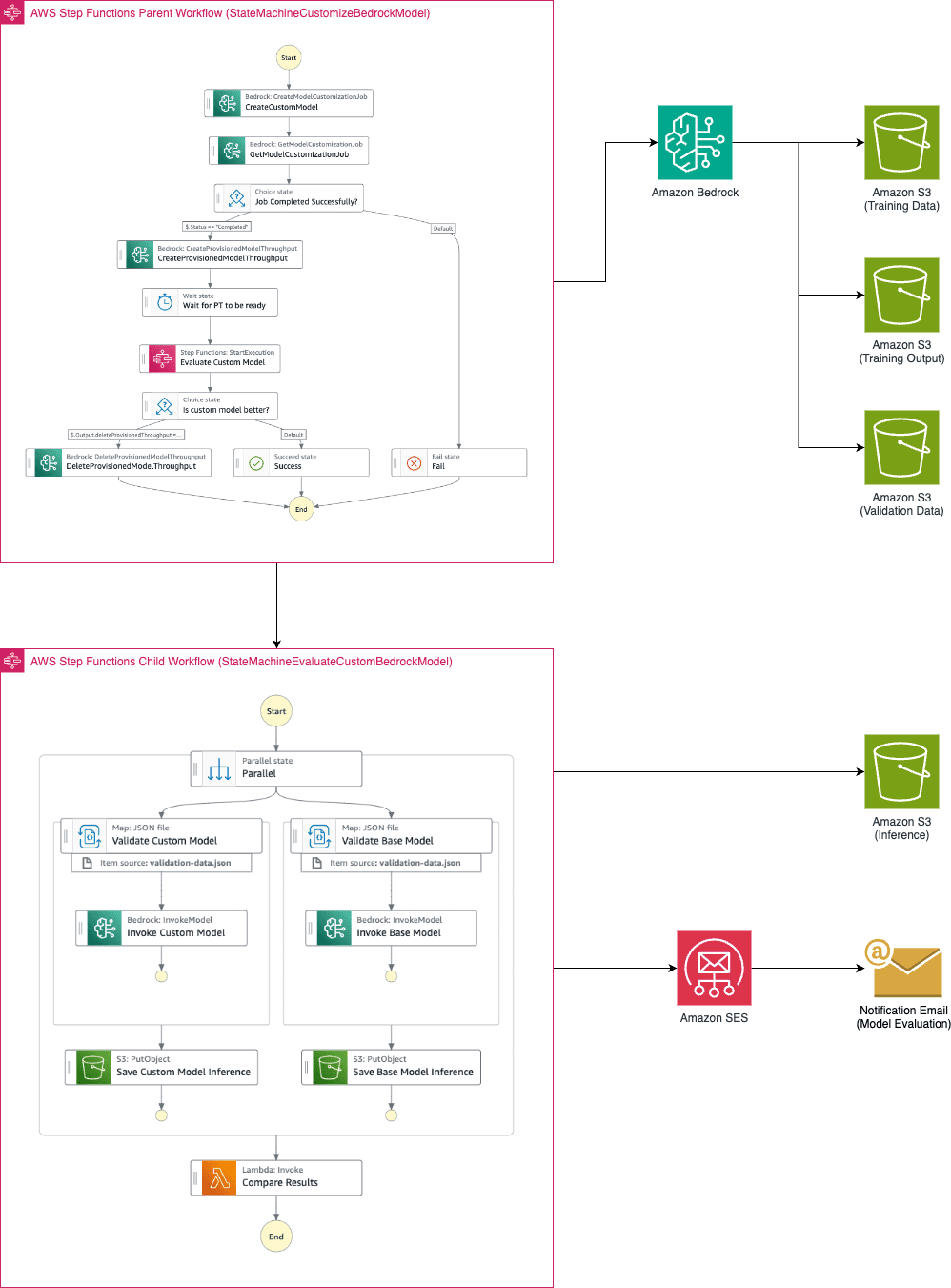

Large language models have become indispensable in generating intelligent and nuanced responses across a wide variety of business use cases. However, enterprises often have unique data and use cases that require customizing large language models beyond their out-of-the-box capabilities. Amazon Bedrock is a fully managed service that offers a …

Shared by AWS Machine Learning July 11, 2024

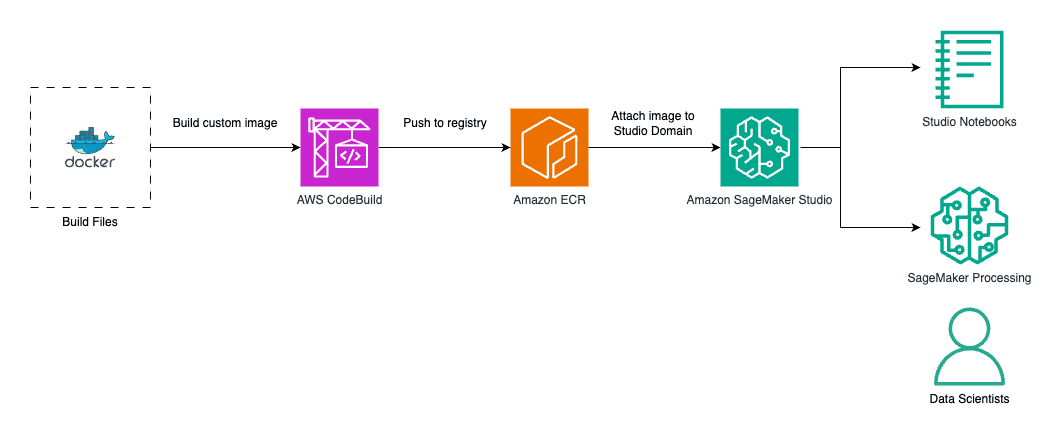

Amazon SageMaker Studio provides a comprehensive suite of fully managed integrated development environments (IDEs) for machine learning (ML), including JupyterLab, Code Editor (based on Code-OSS), and RStudio. It supports all stages of ML development, from data preparation to deployment, and allows you to launch a preconfigured JupyterLab IDE for efficient …

Shared by AWS Machine Learning July 11, 2024

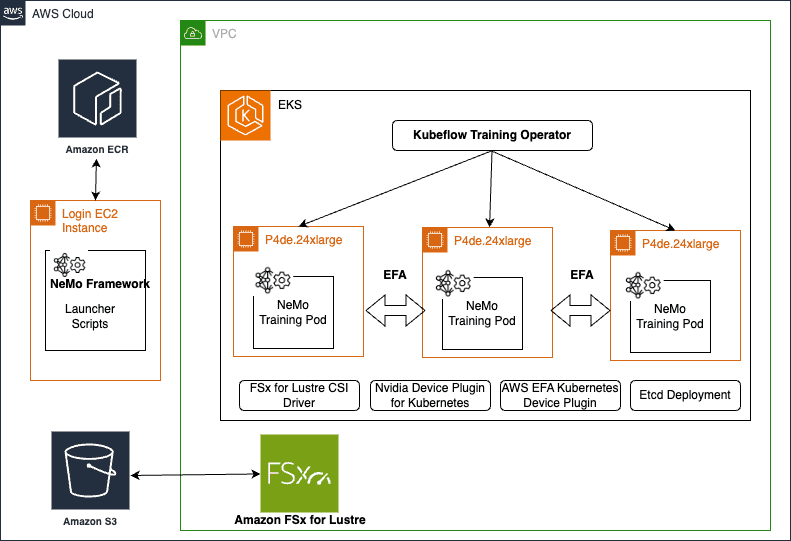

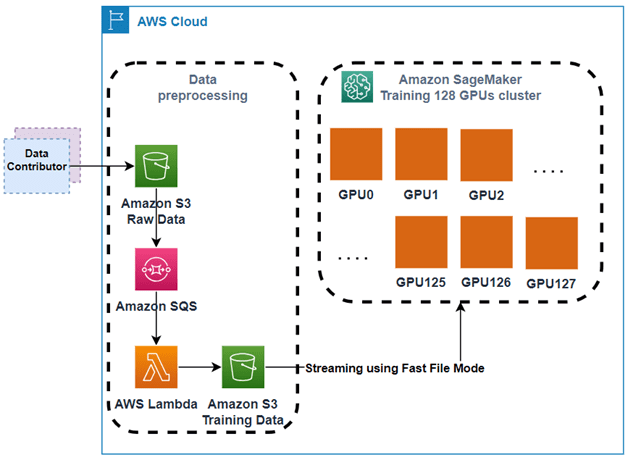

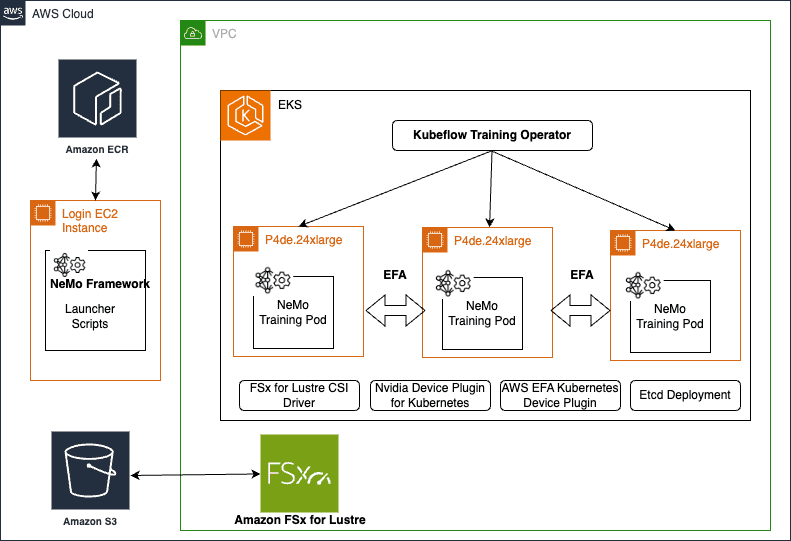

This post is co-written with Bar Fingerman from BRIA AI. This post explains how BRIA AI trained BRIA AI 2.0, a high-resolution (1024×1024) text-to-image diffusion model, on a dataset comprising petabytes of licensed images quickly and economically. Amazon SageMaker training jobs and Amazon SageMaker distributed training libraries took on the …

Shared by AWS Machine Learning July 11, 2024

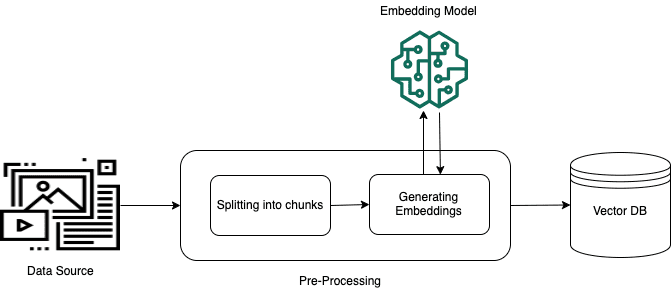

Retrieval Augmented Generation (RAG) is a popular paradigm that provides additional knowledge to large language models (LLMs) from an external source of data that wasn’t present in their training corpus. RAG provides additional knowledge to the LLM through its input prompt space, and its architecture typically consists of the …

Shared by AWS Machine Learning July 11, 2024
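
The RAG excerpt above says that extra knowledge reaches the LLM through its input prompt. As a minimal, hypothetical sketch of that idea (not the method from the post and not the Amazon Bedrock API), the Python below ranks passages with a naive word-overlap score and places the best matches into the prompt ahead of the question. The helper names `tokenize`, `score`, `retrieve`, and `build_prompt` and the sample corpus are illustrative assumptions; the word-overlap scoring is a toy stand-in for the embedding similarity a real retriever would typically use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits as tokens
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, passage: str) -> int:
    # Toy relevance: number of shared word occurrences (min count per word)
    overlap = Counter(tokenize(query)) & Counter(tokenize(passage))
    return sum(overlap.values())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: return the k highest-scoring passages
    ranked = sorted(corpus, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Prompt augmentation: retrieved context is injected into the model input
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Illustrative corpus, not real data
    corpus = [
        "Our refund window is 30 days from the date of purchase.",
        "Support is available by chat from 9am to 5pm on weekdays.",
        "Premium plans include priority support and extra storage.",
    ]
    question = "How many days do I have to request a refund?"
    print(build_prompt(question, retrieve(question, corpus, k=1)))
```

Replacing `score` with a similarity over vector embeddings changes only the retrieval step; the prompt-assembly step stays the same.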

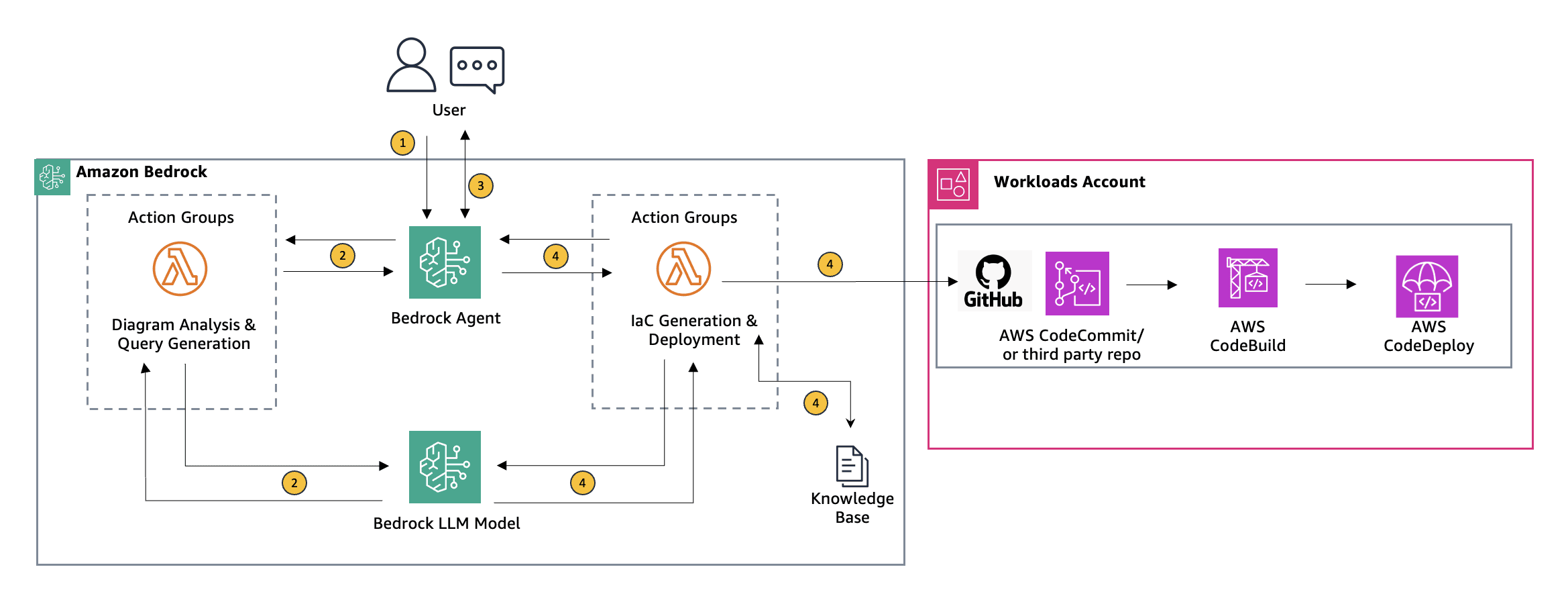

In the diverse toolkit available for deploying cloud infrastructure, Agents for Amazon Bedrock offers a practical and innovative option for teams looking to enhance their infrastructure as code (IaC) processes. Agents for Amazon Bedrock automates the prompt engineering and orchestration of user-requested tasks. After being configured, an agent builds …

Shared by AWS Machine Learning July 11, 2024

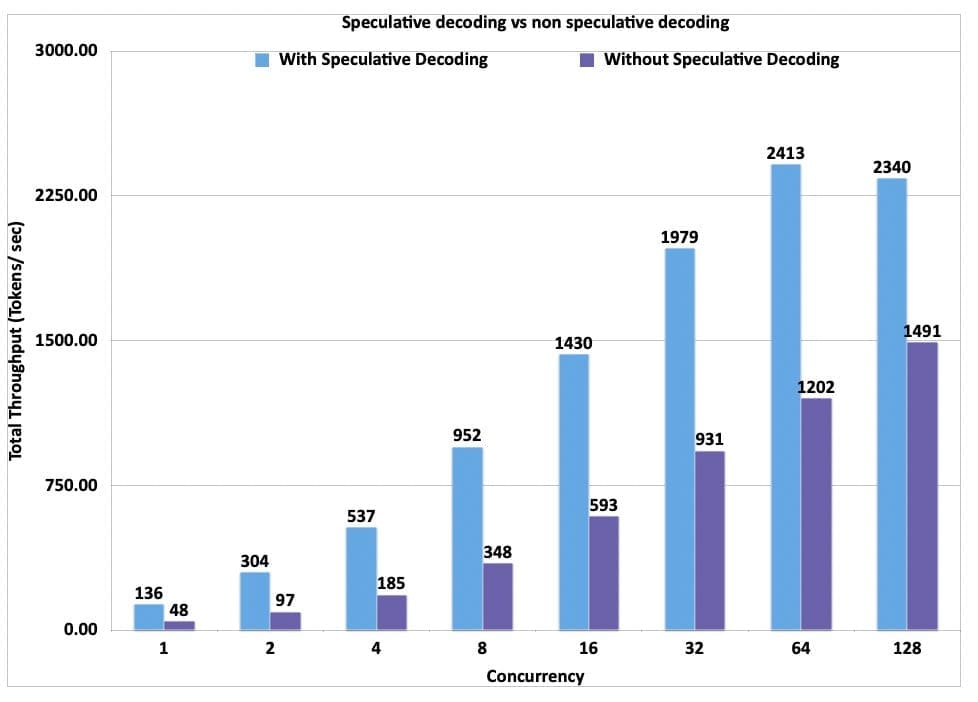

Today, Amazon SageMaker announced a new inference optimization toolkit that helps you reduce the time it takes to optimize generative artificial intelligence (AI) models from months to hours, to achieve best-in-class performance for your use case. With this new capability, you can choose from a menu of optimization techniques, …

Shared by AWS Machine Learning July 10, 2024
