Posted by Yossi Matias, VP Engineering & Research, and Grey Nearing, Research Scientist, Google Research

Floods are the most common natural disaster and are responsible for roughly $50 billion in annual financial damages worldwide. The rate of flood-related disasters has more than doubled since the year 2000, partly due …

Shared by Google AI Technology March 20, 2024

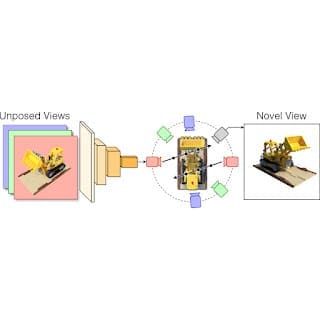

Posted by Mark Matthews, Senior Software Engineer, and Dmitry Lagun, Research Scientist, Google Research

A person’s prior experience and understanding of the world generally enable them to easily infer what an object looks like as a whole, even when they see only a few 2D pictures of it. Yet the …

Shared by Google AI Technology March 18, 2024

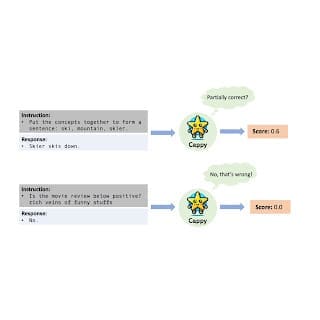

Posted by Yun Zhu and Lijuan Liu, Software Engineers, Google Research

Large language model (LLM) advancements have led to a new paradigm that unifies various natural language processing (NLP) tasks within an instruction-following framework. This paradigm is exemplified by recent multi-task LLMs, such as T0, FLAN, and OPT-IML. First, …

Shared by Google AI Technology March 14, 2024

Posted by Dustin Zelle, Software Engineer, Google Research, and Arno Eigenwillig, Software Engineer, CoreML

Objects and their relationships are ubiquitous in the world around us, and relationships can be as important to understanding an object as its own attributes viewed in isolation: take, for example, transportation networks, production …

Shared by Google AI Technology February 6, 2024

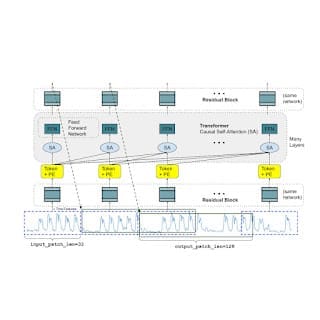

Posted by Rajat Sen and Yichen Zhou, Google Research

Time-series forecasting is ubiquitous in various domains, such as retail, finance, manufacturing, healthcare, and the natural sciences. In retail use cases, for example, it has been observed that improving demand forecasting accuracy can meaningfully reduce inventory costs and increase revenue. Deep …

Shared by Google AI Technology February 2, 2024

Posted by Yang Zhao, Senior Software Engineer, and Tingbo Hou, Senior Staff Software Engineer, Core ML

Text-to-image diffusion models have shown exceptional capabilities in generating high-quality images from text prompts. However, leading models feature billions of parameters and are consequently expensive to run, requiring powerful desktops or servers (e.g., …

Shared by Google AI Technology January 31, 2024

Posted by Dan Kondratyuk and David Ross, Software Engineers, Google Research

A recent wave of video generation models has burst onto the scene, in many cases showcasing stunning picturesque quality. One of the current bottlenecks in video generation is the ability to produce coherent large motions. In many …

Shared by Google AI Technology December 19, 2023

Posted by Phitchaya Mangpo Phothilimthana, Staff Research Scientist, Google DeepMind, and Bryan Perozzi, Senior Staff Research Scientist, Google Research

With the recent and accelerated advances in machine learning (ML), machines can understand natural language, engage in conversations, draw images, create videos, and more. Modern ML models are programmed and …

Shared by Google AI Technology December 15, 2023

This post is written in collaboration with Balaji Chandrasekaran, Jennifer Cwagenberg, Andrew Sansom, and Eiman Ebrahimi from Protopia AI.

New and powerful large language models (LLMs) are rapidly changing businesses, improving efficiency and effectiveness across a variety of enterprise use cases. Speed is of the essence, and adoption …

Shared by AWS Machine Learning December 6, 2023

Amazon SageMaker Canvas is a rich, no-code machine learning (ML) and generative AI workspace that has helped customers all over the world adopt ML technologies more easily to solve old and new challenges, thanks to its visual interface. It does so by covering the ML workflow end-to-end: …

Shared by AWS Machine Learning November 25, 2023
